It started with a brief story in the New York Times about Luke Robitaille, a 13-year-old student from Euless, Texas, who won the Raytheon Mathcounts National Competition by correctly answering the following question:

In a barn, 100 chicks sit peacefully in a circle. Suddenly, each chick randomly pecks the chick immediately to its left or right. What is the expected number of unpecked chicks?

Robitaille took less than a second to buzz in with the correct answer, according to the Times.

The next day, Jordan Ellenberg tweeted a followup problem:

Since I don’t have to squeeze this story into 140 characters, I’ll fill in some details of Ellenberg’s question, as I understand it. Where the original problem called for a single round of synchronized random pecking, we now have multiple rounds. During a round, each chick randomly turns either left or right and pecks one of its neighbors. However, once a chick has been pecked, it will never peck again, even if it continues to receive pecks. When two adjacent chicks peck each other in the same round, they both drop out of the pecking game for all future rounds. If an unpecked chick winds up sitting between two pecked neighbors, it can never be pecked and will therefore keep on pecking forever. The question is, what proportion of the flock will survive to become invulnerable peckers?

Spoilers below, so now’s the time to work out the answers for yourself. While you’re busy with that, I’m going to say a few words about chickens, and about the rhetoric and semiotics of mathematical “word problems.”

My only direct knowledge of poultry comes from boyhood visits to my Aunt Noretta’s farm in southern New Jersey. That’s not much of a claim to expertise, but for what it’s worth I never saw her chickens sit in a circle, and they didn’t peck randomly. (They had a pecking order!) Furthermore, nothing I observed in their social interactions resembled the turn-the-other-cheek behavior of the chickens described in this problem. Why does a pecked chick never peck again? This is a bigger riddle than the quantitative question we are asked to address. Has the chick suddenly discovered the wisdom and power of nonviolence? I can think of another explanation, but it’s not for the squeamish: Maybe pecked chicks don’t peck back because pecks are lethal.

I know it’s silly to demand narrative realism in a story like this one. Mathematical word problems belong to a genre where no one expects verisimilitude. They are set in a world where knaves always lie and knights always speak the truth, where shipwrecked sailors obsess about the divisibility properties of a pile of coconuts, where people don’t know the color of the hat on their own head. Even the laws of physics yield to mathematical necessity: A fly shuttling between oncoming locomotives instantaneously reverses direction. Those chicks sitting in a circle are not fluffy bundles of yellow plumage; they are mathematical abstractions. They have coordinates and state variables rather than feathers.

I’m okay with abstraction; by all means, let us strip away extraneous detail. Nevertheless, isn’t the point of word problems to connect the mathematics to some aspect of familiar experience? Consider the ancient and famous river-crossing problem, where the fox must not be left alone with the chicken, which must not be left alone with the bag of corn. These constraints are easy to understand when you know something about the dietary preferences of foxes and chickens. That kind of intuitive boost is not to be found in the pecking problem. On the contrary, a little knowledge of avian behavior actually makes the problem more perplexing.

But no matter. Onward! Have you come up with your answers?

The single-round problem from the Mathcounts Competition yields to the oldest trick in the probability book. A chick remains unpecked only if both of its neighbors turn away and peck in the other direction. On both the left and the right, the probability of escaping a peck is \(\frac{1}{2}\), and the two events are independent, so the probability of staying unpecked on both sides is \(\frac{1}{2} \times \frac{1}{2} = \frac{1}{4}\). This argument applies identically to all the birds in the circle, so you can expect 25 percent of the chicks to come through unscathed.

Do you agree with this analysis? I came up with it pretty quickly when I read the Times article (though not nearly fast enough to beat Luke Robitaille to the buzzer). But then I began to have doubts. Is it strictly true that a chick’s left and right neighbors are totally independent? After all, they are connected by a chain of other chicks. Perhaps some influence can propagate around the circle, creating a correlation between left and right and altering the probability of survival.

Time for an experiment: Write the program, run the simulation. Set up a ring of 100 unpecked chickens and allow a single round of random simultaneous pecking. Repeat many times and calculate the mean number of unpecked birds remaining. (Some quick notation: Let \(N\) be the number of chicks in the ring and \(S\) be the number that survive unpecked. I’ll use \(\bar{S}\) for the mean value of \(S\) averaged over \(R\) repetitions of the experiment.) My results:

| \(R\) | \(\bar{S}\) |
|---|---|
| 100 | 24.79 |
| 10,000 | 24.9881 |
| 1,000,000 | 25.000274 |
| 100,000,000 | 24.99991518 |

As expected, the mean is quite close to 25 survivors. Furthermore, each time the sample size increases by a factor of 100, the accuracy of the approximation improves about tenfold. This pattern conforms to a statistical rule of thumb: the error in a sample mean shrinks in proportion to the square root of the sample size. Thus the slight departures from \(\bar{S} = 25\) appear to be innocent random noise, not some systematic bias.
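For readers who want to repeat the experiment, here is a minimal sketch of a single-round simulation. It is not the program behind the table above; the function names `one_round_survivors` and `mean_survivors`, the fixed seed, and the small repetition counts are all illustrative assumptions.

```python
import random

def one_round_survivors(n, rng):
    """Count the unpecked chicks after one simultaneous round in a ring of n."""
    pecked = [False] * n
    for i in range(n):
        # Each chick pecks its left (-1) or right (+1) neighbor with equal odds.
        target = (i + rng.choice((-1, 1))) % n
        pecked[target] = True
    return pecked.count(False)

def mean_survivors(n, reps, seed=0):
    """Average the survivor count over reps independent rings."""
    rng = random.Random(seed)
    return sum(one_round_survivors(n, rng) for _ in range(reps)) / reps

if __name__ == "__main__":
    for reps in (100, 10_000):
        print(reps, mean_survivors(100, reps))
```

Because every chick's choice is drawn before anyone's state is consulted, the order of the loop does not matter, which is what "simultaneous" means here.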

So that settles it, right?

Well, the simulation looks pretty convincing for the specific case of \(N = 100\) chicks, but the result might differ for other values of N. In particular, perhaps there’s some finite-size effect that becomes apparent only when N is small. Consider a “circle” of just two chicks. In this situation the left neighbor and the right neighbor are one and the same chicken! No matter what random choices are made, the two chicks immediately peck each other, and the proportion of survivors is not 25 percent but zero.

The next-larger “circle” consists of three chicks arranged in a triangle. The two neighbors of a chick are distinct, but they are also neighbors of each other. What happens when the three chickens are set loose on one another? The system has \( 2^3 = 8\) possible pecking patterns, and we can easily examine all of them. In the diagram, the arrows indicate where the chicks choose to direct their pecking.

In two cases, where all the chicks peck left or all peck right, there are no survivors. In every other instance exactly one chick remains unpecked. Aggregating the eight patterns, we find six unpecked chicks out of 24 total chicks, for a proportion of \(\bar{S} = \frac{1}{4}\). Thus it appears the finite-size anomaly afflicts only the two-chick version of the problem.

But wait! There’s another possible confounding factor. Can we be sure of seeing the same outcome for both even and odd numbers of chicks? For any odd value of N there is just one way to annihilate all the chicks in a single round: They must all peck in the same direction. For even N, however, another pattern also leads to immediate extinction: Adjacent chicks can pair up, knocking each other out. Won’t this extra pathway slightly alter the overall probability of survival?

Let’s see what happens with N = 4. Now there are \(2^4 = 16\) possible outcomes:

As expected, four patterns leave no survivors at all. On the other hand, there are also four patterns that leave two chicks unpecked rather than just one. Miraculously, the extra losses and the extra gains balance exactly. In all we have 16 survivors out of 64 chicks, so the ratio is again \(\bar{S} = \frac{1}{4}\).
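The hand counts for three and four chicks can be pushed a little further by brute force. The sketch below enumerates all \(2^N\) pecking patterns for small rings and reports the exact unpecked fraction; the name `exact_unpecked_fraction` is my own, and the range of \(N\) is an arbitrary choice.

```python
from fractions import Fraction
from itertools import product

def exact_unpecked_fraction(n):
    """Exact expected fraction of unpecked chicks after one simultaneous round."""
    total_unpecked = 0
    # A pattern assigns -1 (peck left) or +1 (peck right) to every chick.
    for choices in product((-1, 1), repeat=n):
        pecked = {(i + d) % n for i, d in enumerate(choices)}
        total_unpecked += n - len(pecked)
    # Divide by the number of patterns times the number of chicks per pattern.
    return Fraction(total_unpecked, n * 2 ** n)

if __name__ == "__main__":
    for n in range(2, 11):
        print(n, exact_unpecked_fraction(n))
```

Exact rational arithmetic avoids any doubt about whether a value like 0.2500001 is noise or a real finite-size effect.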

After that long and twisty detour through the combinatorics of chicken pecking, we are right back where we started. The probability of surviving unpecked after a single round of pecking is \(\frac{1}{4}\) for any \(N \gt 2\). All of my fretting about finite-size effects and odd-even disparities was a waste of time. So why have I inflicted it on you? Well, although those worries turn out to be unfounded, they are not farfetched. Making just a small change to the pecking protocol leads to a different outcome. Let the pecking be sequential rather than simultaneous. Some designated chick initiates the sequence of pecks, and then the birds take turns, proceeding clockwise around the circle. When a chick’s turn comes, if it has already been pecked, it does nothing. If it is unpecked, it pecks either its left or its right neighbor, choosing randomly. The round ends when every chick has had a turn.

For \(N = 2\) it’s easy to see that the first chick to peck always survives and the other chick always dies, for a survival rate of \(\frac{1}{2}\). With a little more pencil-and-paper chicken scratching, you can establish that the 50 percent survival rate also holds for \(N = 3\). Looking at very large values of \(N\), computer experiments indicate that the survival fraction again approaches \(\frac{1}{2}\) as N goes to infinity. Between these extremes, however, there’s some funny business:

At \(N = 4\) the survivor rate dips below 0.47. (The exact probability is \(\frac{30}{64} = 0.46875\).) This is a minimum. But as the rate recovers back toward 0.5, there is some telltale wiggling in the curve that reveals an odd-even bias: The survival probability is depressed further for even N than for odd N. This is just the kind of behavior I was looking for (but not finding) in the original Mathcounts version of the problem.
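The sequential protocol is also small enough to evaluate exactly for modest \(N\). This sketch assumes the rules as stated above: chick 0 goes first, turns proceed in index order around the ring, a pecked chick skips its turn, and an unpecked chick picks left or right with equal probability. The function name `sequential_survival` is hypothetical.

```python
from fractions import Fraction

def sequential_survival(n):
    """Exact expected unpecked fraction after one sequential round of n turns."""
    def expected_unpecked(turn, pecked):
        if turn == n:
            return Fraction(n - len(pecked))
        if turn in pecked:
            # A chick that has already been pecked does nothing.
            return expected_unpecked(turn + 1, pecked)
        total = Fraction(0)
        for d in (-1, 1):
            total += expected_unpecked(turn + 1, pecked | {(turn + d) % n})
        return total / 2
    return expected_unpecked(0, frozenset()) / n

if __name__ == "__main__":
    for n in range(2, 13):
        value = sequential_survival(n)
        print(n, value, float(value))
```

The recursion branches at most once per turn, so the cost grows like \(2^N\); that is fine for the small rings where the odd-even wiggle is visible, but not for large \(N\).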

Let us now take up Ellenberg’s problem of iterated pecking (using the simultaneous rather than the sequential protocol). We already know that after the first round we can expect to find about one-fourth of the chicks still unpecked. Clearly, the unpecked fraction cannot increase after multiple rounds. Thus in the final state the expected surviving fraction \(\bar{S}\) must lie somewhere between zero and \(\frac{1}{4}\).

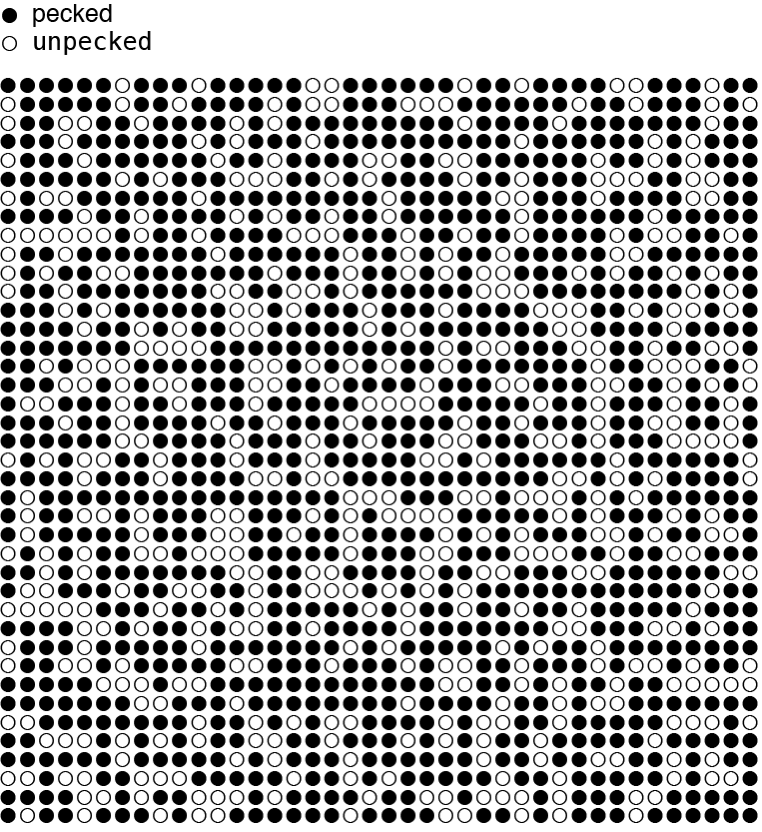

It’s helpful to look at a typical configuration of pecked (●) and unpecked (○) chicks after a single round of synchronized pecking:

●○●●●○○●●●●○●●○●●○○●●●○○●●●●●○●●●●●●●●●○○●●●●●●○○●●●○●●●●●●○●●●○○●●●●●

(You’ll have to use your imagination to connect the left and right ends of this array and thereby form a ring.) Notice that there are long strings of pecked chicks, but the unpecked chicks appear in only two configurations. They are either singletons (●○●) or pairs (●○○●). The cause of this pattern is not hard to understand. After a round of pecking, a group of three consecutive unpecked chicks (●○○○●) is impossible. The middle chick must have pecked either left or right, and so it cannot have two unpecked neighbors.

These constraints simplify the analysis of subsequent rounds. The singletons are essentially immortal and unchangeable: The unpecked chick in the middle can never be pecked, and the pecked neighbors can never be unpecked. For the pairs, there are four possible fates, corresponding to the four ways the two active chicks could choose to peck:

In any one round, all four of these events have the same probability, namely \(\frac{1}{4}\). The first three result states are terminal, in the sense that further rounds of pecking will leave them unchanged. In the fourth case we are left with an adjacent pair again, which will therefore face the same set of choices in the next round. Eventually, as the number of rounds goes to infinity, the fourth case must yield one of the other outcomes, and thus in the long run we can consider the fourth case to have probability zero and each of the other three cases to have probability \(\frac{1}{3}\).

And now it’s time to bring all these contingent events together and work out a chicken’s long-term probability of survival. The diagram below presents the scheme. In the first round of pecking, three-fourths of the chicks are eliminated immediately. Of the remaining one-fourth, half are singletons, which survive indefinitely. The other surviving chicks are members of pairs, with another pecking chick as either a right neighbor or a left neighbor.

The lower part of the diagram summarizes the effect of all subsequent rounds, which are assumed to continue until all pairs have been either annihilated or reduced to singletons. (I call this pecking to completion.) For each pathway that leads to a surviving singleton, the probability is the product of the individual probabilities encountered along that pathway. There are three such pathways, with probabilities \(\frac{1}{8}, \frac{1}{48}\), and \(\frac{1}{48}\), for a sum of \(\frac{1}{6}\).
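Since the diagram is not reproduced here, it may help to write the arithmetic out. This is my reading of the pathways, under the assumption that a chick in a pair is equally likely to be the left or the right member. A pair can stay intact for \(k\) rounds (probability \(\frac{1}{4}\) each time) before landing in a given terminal state, so

\[P(\text{given terminal state}) = \sum_{k=0}^{\infty} \left(\frac{1}{4}\right)^{k} \cdot \frac{1}{4} = \frac{1/4}{1 - 1/4} = \frac{1}{3}.\]

Exactly one of the three terminal states leaves any particular member of the pair unpecked, and so

\[P(\text{survive}) = \underbrace{\frac{1}{8}}_{\text{singleton}} + \underbrace{\frac{1}{16} \cdot \frac{1}{3}}_{\text{left member of pair}} + \underbrace{\frac{1}{16} \cdot \frac{1}{3}}_{\text{right member of pair}} = \frac{1}{8} + \frac{1}{48} + \frac{1}{48} = \frac{1}{6}.\]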

I have to confess that I did not come up with this analysis—or with the correct answer—on my first try. I was able to work it out only after I had run a simulation and thus knew what I was looking for. Even then I had trouble with double counting.

Here are the simulation results:

| \(R\) | \(\bar{S}\) |
|---|---|
| 100 | 16.53 |
| 10,000 | 16.6835 |
| 1,000,000 | 16.664404 |
| 100,000,000 | 16.66701664 |

Again note that accuracy seems to improve as the square root of the sample size, although the variance here is larger than in the single-round experiment.
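Here is a minimal sketch of pecking to completion on a ring, again not the program that produced the table. The names `peck_to_completion` and `mean_final_survivors` are illustrative. The stopping test relies on the observation that nothing can change once no unpecked chick has an unpecked neighbor.

```python
import random

def peck_to_completion(n, rng):
    """Run simultaneous rounds until no two unpecked chicks are adjacent."""
    pecked = [False] * n
    while True:
        active = [i for i in range(n) if not pecked[i]]
        # Stable when every unpecked chick has a pecked right-hand neighbor,
        # which means there are no adjacent unpecked pairs anywhere in the ring.
        if not any(not pecked[(i + 1) % n] for i in active):
            break
        # Choose all targets first, then apply them, so the round is simultaneous.
        targets = [(i + rng.choice((-1, 1))) % n for i in active]
        for t in targets:
            pecked[t] = True
    return pecked.count(False)

def mean_final_survivors(n, reps, seed=0):
    rng = random.Random(seed)
    return sum(peck_to_completion(n, rng) for _ in range(reps)) / reps

if __name__ == "__main__":
    print(mean_final_survivors(100, 2000))
```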

What about finite-size effects? In circles with only two or three members, the fate of the chicks is fully decided after a single round of pecking: \(\bar{S}\) is 0 and \(\frac{1}{4}\) respectively. Thus these smallest rings escape the \(\frac{1}{6}\) rule, but it appears that circles of all larger sizes converge to \(\frac{1}{6}\). There’s no evidence of even-odd discrepancies.

Another approach to understanding the iterated chicken-pecking problem is through the theory of Markov chains. For a ring of \(N\) chicks we list all \(2^N\) states of the flock and assign a probability to each transition between states. Consider a ring of four chicks, which has 16 states. Symmetries allow us to consolidate some sets of states, and other states can be ignored because they are unreachable from the starting state of four unpecked chicks (![]() ).

Only the four states in the red box need to be retained in the model. The transitions between them are recorded in a directed graph, where each arrow is labeled with the corresponding probability. Note that the starting state ![]() has only outgoing arrows; there is no way to re-enter the state once you leave. The states ![]() and ![]() are absorbing: The only outgoing arrow leads directly back to the same state; thus, once you reach one of those states, you never escape it.

The essential information from the directed graph can be captured in a \(4 \times 4\) matrix, where the rows and columns are labeled with the four states, and the matrix entries represent the probability of a transition from the row state to the column state. The entries in each row sum to 1, as they must if they are to represent probabilities.

The pattern of zero entries in the transition matrix implies that certain states can’t be reached from other states, even by an indirect route. For this reason the Markov model is said to be irregular. That’s a bit awkward, because regular Markov models are easier to analyze and understand. In a regular model, when you take successive powers of the transition matrix, it converges to a steady state, where all the rows are identical and every column consists of a single, repeated value. This fixed point reveals the system’s long-term probability distribution. An irregular Markov model may not even have a stable limiting distribution, but this one does, and it seems to offer some insight. Every ring of four chickens must wind up in one of the two absorbing states. With probability two-thirds that terminal state will be ![]() and with probability one-third ![]() . This result is consistent with the finding that one-sixth of the chickens survive unpecked.
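The limiting distribution can be checked without building the consolidated four-state matrix by hand. The sketch below is an assumption-laden shortcut: it works on all the raw states of the ring (no symmetry reduction), pushes the probability distribution forward round by round, and then groups the result by the number of unpecked chicks. The names `step` and `limiting_survivor_counts`, and the choice of 60 rounds, are mine.

```python
from collections import defaultdict
from itertools import product

def step(dist, n):
    """Advance a distribution over ring states by one simultaneous round."""
    new = defaultdict(float)
    for state, prob in dist.items():
        active = [i for i in range(n) if not state[i]]
        if not active:
            new[state] += prob  # every chick is pecked; nothing can change
            continue
        weight = prob / 2 ** len(active)
        for choices in product((-1, 1), repeat=len(active)):
            pecked = list(state)
            for i, d in zip(active, choices):
                pecked[(i + d) % n] = True
            new[tuple(pecked)] += weight
    return dict(new)

def limiting_survivor_counts(n, rounds=60):
    """Approximate long-run probability of each possible survivor count."""
    dist = {tuple([False] * n): 1.0}  # start with n unpecked chicks
    for _ in range(rounds):
        dist = step(dist, n)
    counts = defaultdict(float)
    for state, prob in dist.items():
        counts[state.count(False)] += prob
    return dict(counts)

if __name__ == "__main__":
    print(limiting_survivor_counts(4))
```

For four chicks the probability of still holding an unresolved pair shrinks by a factor of four each round, so 60 rounds is far more than enough for the result to settle.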

So, finally, that wraps it up, right? Both the contest problem and Ellenberg’s iterative extension asked for the expected number of surviving chickens, and we have supplied the answers: for a circle of \(N\) chickens, the expected number of survivors \(\bar{S}\) is \(\frac{N}{4}\) after a single round of pecking and \(\frac{N}{6}\) upon pecking to completion. Ironically, though, the expected value of a probabilistic process doesn’t necessarily tell you what to expect. Consider a simpler problem: When you flip a fair coin 100 times, how many heads do you expect to see? The obvious answer is 50, and it’s correct in the sense that no other number has a higher likelihood of correctly predicting the outcome of the experiment. However, the probability of seeing exactly 50 heads is only about 0.08, and thus some other number will turn up more than 90 percent of the time.
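The coin-flip figure is easy to confirm directly from the binomial coefficient. A short sketch (the function name `prob_exact_heads` is illustrative):

```python
from math import comb

def prob_exact_heads(flips, heads):
    """Probability of exactly `heads` heads in `flips` tosses of a fair coin."""
    return comb(flips, heads) / 2 ** flips

if __name__ == "__main__":
    p50 = prob_exact_heads(100, 50)
    print(p50, 1 - p50)  # chance of exactly 50 heads, and of anything else
```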

Instead of looking only at the expected value, let’s examine the range of possible \(S\) values in the pecking game. We’ve already established that zero survivors is a possible outcome, so that forms a lower bound. What is the upper bound—the maximum number of survivors? In the single-round process, every chick pecks, and so after that round every chick must have at least one pecked neighbor. On the basis of this fact I claim that the surviving population can never be greater than \(\frac{N}{2}\). (Do you agree? It took me a while to persuade myself it’s true.)

If \(S\) can never be greater than \(\frac{N}{2}\), the next question is whether it can ever attain that bound. And if we can have equal numbers of pecked (●) and unpecked (○) chicks, how are they arranged in the ring? It’s tempting to propose the following configuration:

●○●○●○●○●○●○

This is a stable state: The unpecked chicks can never be pecked, so no further changes are possible. And the fraction of survivors is \(\frac{1}{2}\). But there’s a problem with this pattern: It cannot be reached from the starting state. Look at any of the black pecked chicks and ask yourself: Which of its neighbors did it peck? Neither of them, evidently, since they are both unpecked. But that’s not possible, given that every chicken must peck in the first round.

Although the alternating black and white arrangement is ruled out, we’re on the right track. There’s another configuration that also leaves one-half of the chicks unpecked after a single round, and that pattern is achievable from the starting state:

●●○○●●○○●●○○

When you join the ends to form a ring, every chick, whether pecked or not, has one pecked neighbor. It turns out this is the only way—after allowing for some obvious symmetries—to reach 50 percent survivorship. (Strictly speaking, 50 percent is attainable only when \(N\) is divisible by 4, but the maximum of \(S\) is never less than \(\frac{N-2}{2}\).)
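For small rings the upper bound can be tested by exhaustive search. This sketch tries every single-round pecking pattern and records the largest number of unpecked chicks; `max_unpecked_one_round` is a hypothetical name, and the search is only practical up to a dozen or so chicks.

```python
from itertools import product

def max_unpecked_one_round(n):
    """Largest possible number of unpecked chicks after one simultaneous round."""
    best = 0
    for choices in product((-1, 1), repeat=n):
        pecked = {(i + d) % n for i, d in enumerate(choices)}
        best = max(best, n - len(pecked))
    return best

if __name__ == "__main__":
    for n in range(3, 13):
        print(n, max_unpecked_one_round(n), n / 2)
```

Comparing the middle column with \(\frac{N}{2}\) shows where the bound is met exactly and where it falls short by a fraction of a chick.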

When the pecking continues to completion, the upper bound of \(S = \frac{N}{2}\) is no longer reachable. Suppose we tried to maintain \(\frac{N}{2}\) over multiple rounds of pecking. Clearly we would have to start in the first round with the maximal-survivor state ●●○○●●○○●●○○. However, at least half of the unpecked chicks in this configuration must succumb in subsequent rounds, leaving no more than \(\frac{N}{4}\) survivors.

Does this argument mean that \(S = \frac{N}{4}\) is the greatest possible after pecking to completion? No, it doesn’t. There’s another pattern where one of every three chicks survives:

●●○●●○●●○●●○

This configuration is reachable in a single round and stable indefinitely, since none of the pecking chicks has any pecking neighbors. No other arrangement has a higher density of survivors once the pecking process goes to completion.

To summarize: After one round of pecking the number of surviving chicks must lie somewhere between zero and \(\frac{N}{2}\), and the expected number \(\bar{S}\) is right in the middle at \(\frac{N}{4}\). After all further rounds of pecking are completed, the count of unpecked chicks is between zero and \(\frac{N}{3}\), with the expected value again in the middle, at \(\bar{S} = \frac{N}{6}\).

“How many chickens survive?” is a question that seems to call for a numeric answer, but in truth the most informative response is not a number at all; it is a distribution:

Each curve records the results of a million experiments with a ring of 100 chicks, giving the frequency of each possible value of \(S\). As expected, the one-round distribution has a peak at 25 survivors, and the iterated curve peaks at 17 (the closest integer to \(\frac{100}{6}\)). Note that the red curve is not only shifted to the left but is also slightly taller and narrower.

To get a better view of the details, let’s zoom in. For the sake of smoother curves, I’m going to switch to experiments with \(N = 10{,}000\) chickens. First the green single-round curve, then the red one for the iterated pecking experiment:

With the larger value of \(N\), the curves now peak at 2500 and at 1666.67—exactly the positions expected for \(\frac{N}{4}\) and \(\frac{N}{6}\). Finding the peaks at these positions is no surprise, but what governs the width and the overall shape of the curve? In other words, what is the mathematical nature of the distributions?

One guess that’s always worth a try is the normal (or Gaussian) distribution. For the pecking problem, a normal distribution defines \(P(S)\), the probability of observing \(S\) survivors, as follows:

\[P(S) = \frac{1}{\sigma\sqrt{2 \pi}} \exp\left[-\frac{1}{2}\left(\frac{S - \mu}{\sigma}\right)^2\right].\]

That’s a pretty messy equation for such a familiar concept, but it’s possible to tease out the basic meaning. The equation defines a symmetric curve with a peak where \(S\) is equal to \(\mu\), the mean of the distribution. The width of the peak depends on \(\sigma\), the standard deviation. Because the area under the curve is a constant, \(\sigma\) also effectively determines the height: A narrower peak has to be taller.

We can fit a normal distribution to the pecking data using a procedure that finds the optimal values of \(\mu\) and \(\sigma\)—those that minimize the discrepancy between the data points and the mathematical model. In the two graphs below the fitted models are superimposed on the two data plots, first for one round of pecking and then for pecking to completion:

The fits appear to be quite close indeed, with the theoretical curves splitting the experimental ones from end to end. In some sense this result has to be counted a success, and yet I don’t find this approach to the problem fully satisfying. The normal curve provides a very good descriptive model of the pecking process, but not a predictive or explanatory one. Remember, the curve is fitted to the data, not the other way around. I see no obvious way to construct a specific normal distribution from what I know about the underlying interactions of pecking chickens. In particular, where do the values of \(\sigma\) in the two models come from? Why is \(\sigma \approx 25\) in the one-round model and \(\sigma \approx 23.6\) in the iterated model? These values look like free parameters, which we have to tune to suit the data. Moreover, they will differ for every value of \(N\). Another issue: the normal curve is a continuous distribution, defined over the entire real number line. The pecking function is discrete; it makes sense only for integer numbers of chickens.

Let’s set aside the normal curve and consider another plausible model: the binomial distribution, which is discrete, and which turns up in many probabilistic contexts. Suppose you roll 10,000 dice and count how many of them come to rest with a 1 showing on the upper face. When you repeat this experiment many times, the expected number of 1s is one-sixth of 10,000, the same as the expected number of survivors in the iterated chicken-pecking experiment. With dice, there’s a well-known mathematical expression that defines not just the expected value but also the form of the entire distribution. Assume that every die has probability \(p\) of showing a \(1\). We are going to roll \(N\) dice and we want to know the probability of seeing \(k\) \(1\)s for any \(k\) between \(0\) and \(N\). The formula that supplies this information is:

\[P(k) = {N \choose k} p^k (1 - p)^{N - k}.\]

Here \(p^k (1 - p)^{N - k}\) gives the probability of any specific arrangement of \(k\) \(1\)s among \(N\) dice. The binomial coefficient \(N \choose k\), equal to \(\frac{N!}{k!\,(N-k)!}\), counts the number of such arrangements.
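Evaluating this formula naively for \(N = 10{,}000\) runs into enormous factorials and vanishingly small powers. One common workaround, sketched here as an assumption about how one might do it rather than a record of how the graphs in this essay were made, is to work with logarithms via the log-gamma function. The name `binomial_pmf` is illustrative.

```python
from math import exp, lgamma, log

def binomial_pmf(k, n, p):
    """P(k successes in n trials), computed in log space; requires 0 < p < 1."""
    # lgamma(x + 1) equals log(x!), so this is the log of the binomial coefficient.
    log_coeff = lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
    return exp(log_coeff + k * log(p) + (n - k) * log(1 - p))

if __name__ == "__main__":
    n, p = 10_000, 1 / 6
    peak = max(range(1500, 1800), key=lambda k: binomial_pmf(k, n, p))
    print(peak, binomial_pmf(peak, n, p))
```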

With \(N = 10000\) and \(p = \frac{1}{6}\) we get a curve showing the outcome of the dice-rolling experiment mentioned above. Perhaps the same curve also describes what happens to the iterated pecking model, which has the same expected value? Alas no.

The binomial curve is wider and flatter than the distribution of iterated pecking survivors. What has gone wrong? When I first saw the graph, I had an inkling. As noted above, the binomial coefficient \(N \choose k\) counts all the ways of choosing \(k\) items from a set of size \(N\). This is appropriate for an experiment with dice, since all possible arrangements of \(k\) successes among \(N\) trials are equally likely. In particular, when you roll \(10{,}000\) dice, you could conceivably see no \(1\)s at all, or all \(10{,}000\) dice could land with a \(1\) showing face up; the entire range of outcomes has probability greater than zero.

The pecking problem is different. It’s not possible for 100 percent of the chickens to remain unpecked. Thus only a subset of the \(N \choose k\) arrangements are attainable. If the binomial distribution is going to work in this context, we need to adjust it somehow to include only the feasible outcomes.

With the thought that it’s easier to solve a problem if you already know the answer, I tried fiddling with the parameters of the distribution to see how the graph responded. My goal was to squeeze the curve into a narrower and taller profile while keeping it centered at the same mean. The mean is equal to \(Np\), so if we decrease \(N\) we have to increase \(p\) by the same factor. Here are the results of some experiments:

The dark green curve is the one we’ve already seen, for a binomial distribution with \(N = 10000\) and \(p = \frac{1}{6}\). Going to \(N = 5000\) and \(p = \frac{1}{3}\) appears to be a step in the right direction, and \(N = 3333\) and \(p = \frac{1}{2}\) is even better. Then, with \(N = 2500\) and \(p = \frac{2}{3} \ldots\) Bingo! The yellow curve is an excellent match to the pecking data. Thus it appears we can predict the survivorship of an \(N\)-member pecking ring by constructing a binomial distribution with parameters \(N^\prime = \frac{N}{4}\) and \(p^\prime = 4p\).

I can pull the same trick to find a binomial distribution that matches the single-round pecking data. This time the magic numbers that bend the curve to the correct trajectory are \(N^\prime = \frac{N}{3} = 3333\) and \(p^\prime = 3p = \frac{3}{4}\).

Unlike the normal distribution, the binomial model is constructive, or predictive. From the two parameters \(N^\prime\) and \(p^\prime\) we can calculate both the mean of the distribution and the standard deviation. The mean is simply \(N^\prime p^\prime\); the standard deviation is \(\sqrt{N^\prime p^\prime (1 - p^\prime)}\). For the example of the \(10{,}000\) chickens pecking to completion, the mean \(\mu\) works out to \(1{,}666.666 \dots\) (as expected), and the standard deviation \(\sigma\) is \(23.570226\). (The fitted normal distribution had \(\sigma = 23.567337\).) For the single-round case, \(\mu\) is exactly \(2500\) and \(\sigma\) is \(25\). (To avoid roundoff errors, I am taking \(N\) to be \(9999\) instead of \(10{,}000\).)
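These moments take only a few lines to reproduce. In the sketch below I treat the rescaled trial count as a real number (so \(\frac{10000}{3}\) is not rounded to 3333), which is an assumption of mine; the function name `binomial_mean_sd` is illustrative.

```python
from math import sqrt

def binomial_mean_sd(n_prime, p_prime):
    """Mean and standard deviation of a binomial with the given parameters."""
    mean = n_prime * p_prime
    sd = sqrt(n_prime * p_prime * (1 - p_prime))
    return mean, sd

if __name__ == "__main__":
    N = 10_000
    print("to completion:", binomial_mean_sd(N / 4, 2 / 3))
    print("single round: ", binomial_mean_sd(N / 3, 3 / 4))
```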

Hooray, eh? At last we have a formula for calculating the shape and location of the chicken-pecking distribution, based on a few simple parameters, \(N^\prime\) and \(p^\prime\). But I’m still grumpy, indeed more perplexed and frustrated than ever. Maybe the model explains the data, but what explains the model? With \(10{,}000\) chickens and a first-round survivor probability of \(\frac{1}{4}\), why does the formula call for \(N^\prime = 3333\) and \(p^\prime = \frac{3}{4}\)? Where do those numbers come from? And why \(N^\prime = 2500\) and \(p^\prime = \frac{2}{3}\) for the iterated case?

I am embarrassed to admit how long I have spent helplessly flailing and thrashing in the bogs of probability theory, trying to solve these mysteries. (I even turned to a recent book called The Probability Lifesaver, which I highly recommend—but it didn’t save my life.) In the search for answers I have investigated the multinomial extensions of binomials. I have looked into convolutions of distributions and computed contingent probabilities. I have filled whole pads of scratch-paper with soldierly rows of ●s and ○s, searching for patterns that would explain those enigmatic fractions \(\frac{N}{3}\) paired with \(\frac{3}{4}\), and \(\frac{N}{4}\) with \(\frac{2}{3}\). Night after night I’ve gone to bed with a promising idea, only to awaken and recognize a fatal flaw.

Now I believe I do have a correct explanation. It has passed the overnight test several nights in a row. I’m going to reveal it, but not until the end of this essay. Perhaps you’ll figure it out on your own before then. In the meantime, I’m going to widen the horizons of the chicken problem.

Our cozy circle of chickens is a one-dimensional structure. You can go clockwise or counterclockwise around the ring; there are no other meaningful directions in this little universe. Now suppose that instead of getting all our chickens in a row, we arrange them in a grid, an array of columns and rows, covering a region of a two-dimensional surface. To avoid leaving a subset of chickens on the exposed edges of a rectangular array, we can mate the left edge with the right edge and the top edge with the bottom edge. (Topologically, this turns the rectangle into a torus.) Getting real chickens to cooperate in this experiment would be even harder than in the one-dimensional version, but no matter; we’ve long since lost all touch with barnyard reality.

The most important fact about the two-dimensional flock is that each chicken has four neighbors instead of two. With twice as many hostile neighbors, one might well guess that a chicken would be more vulnerable to a pecking attack. On the other hand, each of those neighbors spreads its pecking over twice as many potential targets. How do these competing effects balance out?

For a single round of pecking, we can calculate the survival probability in the same way we did for the one-dimensional system. A chick remains unpecked only if all of its neighbors turn elsewhere to peck. Each neighbor does so with probability \(\frac{3}{4}\), and so the probability that all of them turn away is \(\left(\frac{3}{4}\right)^4\). Numerically, this works out to about 0.3164, compared with 0.25 in the circle. Thus the fraction surviving is greater in two dimensions than in one; the distraction of having more targets outweighs the danger of having more attackers. The distribution observed in computer experiments confirms this finding.

Here’s what a \(40 \times 40\) lattice of chicks looks like after a single round of pecking.

There are 1,600 chicks in the two-dimensional array. If you count the unpecked ○s, you’ll find there are 501, for a survival fraction of 0.3131, close to the theoretical value of 0.3164. Simulations confirm the expected survival rate of \(\left(\frac{3}{4}\right)^4\) for \(N \times N\) lattices with any value of \(N\) greater than \(2\). (For the \(2 \times 2\) grid, the survival rate is \(\frac{1}{4}\), as in the one-dimensional system. There’s a reason why!)
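A minimal sketch of the two-dimensional, single-round experiment follows. It assumes each chick picks uniformly among its four lattice neighbors and that opposite edges wrap around; the names `torus_one_round` and `mean_torus_fraction` are my own.

```python
import random

MOVES = ((-1, 0), (1, 0), (0, -1), (0, 1))  # up, down, left, right

def torus_one_round(side, rng):
    """Fraction of unpecked chicks after one round on a side-by-side torus."""
    pecked = [[False] * side for _ in range(side)]
    for r in range(side):
        for c in range(side):
            dr, dc = rng.choice(MOVES)
            # Modular arithmetic joins opposite edges of the grid.
            pecked[(r + dr) % side][(c + dc) % side] = True
    unpecked = sum(row.count(False) for row in pecked)
    return unpecked / side ** 2

def mean_torus_fraction(side, reps, seed=0):
    rng = random.Random(seed)
    return sum(torus_one_round(side, rng) for _ in range(reps)) / reps

if __name__ == "__main__":
    print(mean_torus_fraction(40, 20), 0.75 ** 4)
```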

When I stare at the pattern above, I notice a certain stringy or loopy texture, with chains of ○s separating blobs of ●s. This might be a trick of the eye and mind, but I think not. In two dimensions the no-three-in-a-row restriction is lifted; the array includes rows and columns with as many as six consecutive unpecked chicks, as well as diagonal lines. But you will not see a solid \(3 \times 3\) block of unpecked chicks, or even a \(3 \times 3\) cross of unpecked chicks. Such patterns cannot exist because the chick in the middle of the block must have pecked one of its four neighbors. More generally, the system is still bound by the rule that every chick, whether pecked or unpecked, must have at least one pecked neighbor.

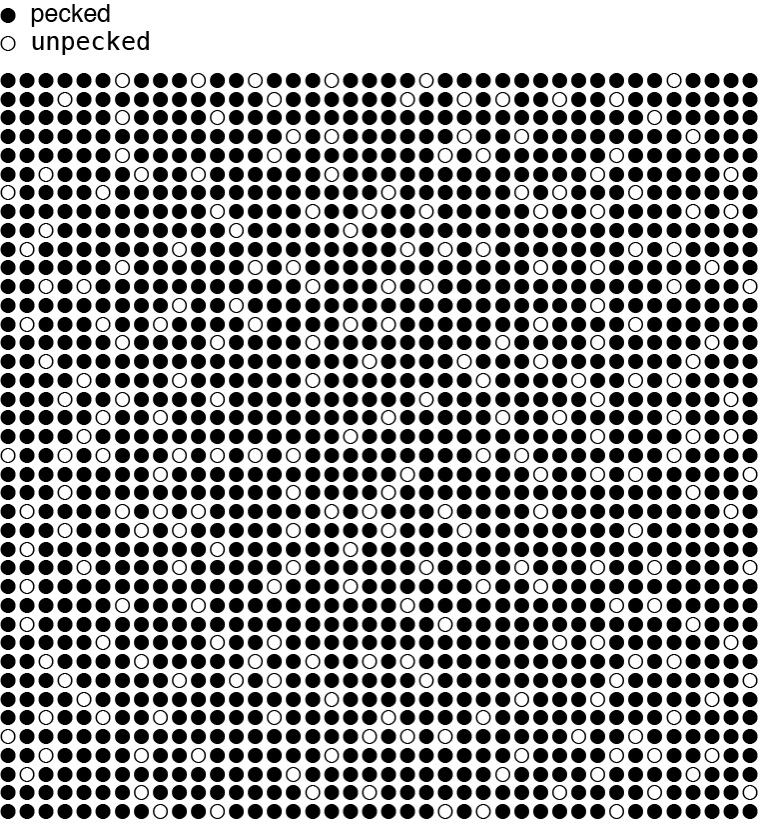

Since more chicks survive the first round of pecking in a two-dimensional world, it seems plausible there might also be a greater proportion of survivors when the pecking continues to completion. Let’s try the experiment:

In this \(40 \times 40\) array there are 238 survivors out of 1,600 chicks, which is less than the one-sixth survival rate seen in one dimension. In a sample of a million such pecking grids, I found that the mean survival rate \(\bar{S}\) is about 0.1533. Compare the distributions for one- and two-dimensional systems:

In going from 1D to 2D the peak shifts to the left, with the mean moving from 0.1667 to 0.1533. The 2D hump is also a little taller and skinnier, thus showing reduced variance.
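For anyone who wants to repeat the iterated experiment, the following is a rough sketch under my reading of the rules: only unpecked chicks peck, they may peck any neighbor (pecked or not), and all pecks in a round land at once. The name `peck_to_completion` and the small trial count are illustrative assumptions.

```python
import random

STEPS = [(0, 1), (0, -1), (1, 0), (-1, 0)]

def peck_to_completion(n, rng):
    """Iterate rounds on an n x n torus; return the number of survivors."""
    pecked = [[False] * n for _ in range(n)]
    while True:
        # Collect this round's targets first so the pecks are simultaneous.
        targets = set()
        for r in range(n):
            for c in range(n):
                if not pecked[r][c]:
                    dr, dc = rng.choice(STEPS)
                    targets.add(((r + dr) % n, (c + dc) % n))
        for r, c in targets:
            pecked[r][c] = True
        # Finished when every unpecked chick has only pecked neighbors.
        done = all(
            pecked[r][c]
            or all(pecked[(r + dr) % n][(c + dc) % n] for dr, dc in STEPS)
            for r in range(n)
            for c in range(n)
        )
        if done:
            return sum(row.count(False) for row in pecked)

rng = random.Random(42)
n = 40
fractions = [peck_to_completion(n, rng) / (n * n) for _ in range(10)]
mean_fraction = sum(fractions) / len(fractions)
print(f"mean surviving fraction over {len(fractions)} grids: {mean_fraction:.4f}")
```

Ten grids are far too few to reproduce the histogram, but they are enough to see the mean settle below one-sixth.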

Why stop at two dimensions? Let us ask our ever-accommodating chickens to roost in a three-dimensional lattice, again with opposite boundaries joined to create the 3D equivalent of a toroidal surface. It’s not hard to guess how this experiment is going to turn out. Back in one dimension, where every chick had two neighbors, the fraction of survivors after a single round of pecking was \(\left(\frac{1}{2}\right)^2 = \frac{1}{4}\). In two dimensions, with four neighbors, the corresponding number was \(\left(\frac{3}{4}\right)^4 = \frac{81}{256}\). In the three-dimensional pecking party each chick has six neighbors, so the obvious extrapolation is \(\left(\frac{5}{6}\right)^6 = \frac{15625}{46656}\), with a value of \(\approx 0.3349\). Running the simulation supports this surmise, and shows a clear trend when we construct chicken lattices with still higher numbers of dimensions.

From this series of results we can boldly generalize: When every chick has \(n\) neighbors, the fraction expected to survive a single round of pecking is:

\[\left(\frac{n - 1}{n}\right)^n.\]

As \(n\) increases, this expression converges on a value of approximately \(0.36787944\). Does that number look familiar? It is \(\frac{1}{e}\). (Changing the minus sign to a plus generates \(e\) itself, \(2.71828\).) When I stumbled upon this formula, the sudden appearance of \(\frac{1}{e}\) took me by surprise, but it shouldn’t have. The constant turns up in the same way in a model of rumor spreading that I wrote about some years ago.
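For readers who want the intermediate step, here is a short sketch of why the limit is \(\frac{1}{e}\), using the standard series \(\ln(1 - x) = -x - \frac{x^2}{2} - \cdots\) with \(x = \frac{1}{n}\):

\[\ln\left(\frac{n - 1}{n}\right)^n = n \ln\left(1 - \frac{1}{n}\right) = n\left(-\frac{1}{n} - \frac{1}{2n^2} - \cdots\right) = -1 - \frac{1}{2n} - \cdots \;\longrightarrow\; -1.\]

Exponentiating both sides gives \(\left(\frac{n - 1}{n}\right)^n \to e^{-1}\). The correction term \(-\frac{1}{2n}\) is negative, which is why the survival fraction approaches \(\frac{1}{e}\) from below as the number of neighbors grows.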

What about the iterated pecking process in higher dimensions? The fraction of survivors shows a steady decline as the number of dimensions increases:

The proportion of chicks that never get pecked falls from 16.7 percent in one dimension to about half that when we embed our intrepid chickens in seven-dimensional space. In other words, a higher-dimensional space raises the initial survival rate (after one round of pecking), but depresses long-term survival (after pecking to completion). Here’s another way of showing the effect of dimension—tracking the mean number of survivors remaining after each round of pecking in one dimension through seven dimensions.

I can offer a rough, hand-wavy rationale for this trend. If you are a chick in a one-dimensional ring, your chance of surviving the first round of pecking is only \(\frac{1}{4}\), but if you make it through that round, your chance of avoiding a peck in the second round is at least \(\frac{1}{2}\). Why the improvement? It’s because of your own actions: Your pecking in the first round eliminated the threat from one of your two neighbors. Your odds continue improving in subsequent rounds: The longer you last, the greater the chance that you will hang on until all your neighbors are pacified.

The same trend holds in higher dimensions, but the magnitude of the effect tapers off. In four dimensions, for example, you have eight neighbors, and your chance of surviving the first round is \(\left(\frac{7}{8}\right)^8\), or about 0.34. Because you peck one of those neighbors, your probability of making it through the second round is better, but only slightly so: \(\left(\frac{7}{8}\right)^7\), or 0.39.
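To see how quickly the benefit of your own peck fades, here is a small table-making sketch. The function names are mine, and the second-round figure is only the lower bound used in the argument above: it assumes the one neighbor you pecked is the only one out of action.

```python
def first_round(neighbors):
    """Chance that none of your neighbors pecks you in round one."""
    return (1 - 1 / neighbors) ** neighbors

def second_round_bound(neighbors):
    """Lower bound for round two: one neighbor (the one you pecked) is silent."""
    return (1 - 1 / neighbors) ** (neighbors - 1)

for dim in range(1, 8):
    k = 2 * dim  # each chick has two neighbors per dimension
    gain = second_round_bound(k) - first_round(k)
    print(f"D={dim}  neighbors={k:2d}  round 1: {first_round(k):.4f}  "
          f"round 2 >= {second_round_bound(k):.4f}  gain: {gain:.4f}")
```

The gain column shrinks steadily from 0.25 in one dimension, which is the tapering-off described above.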

Looking at the graphs above, one might surmise that as the dimension \(D\) goes to infinity, the number of survivors (after pecking to completion) will drop to zero. To explore this idea, we don’t actually need infinite-dimensional space. What matters most is not the geometric arrangement of the chickens but the number of neighbors, and we can approximate an infinite-dimensional lattice just by declaring that all chickens are nearest neighbors. In other words, the who-pecks-whom graph becomes complete, with an arc from every chick to every other chick. This does seem to be a recipe for annihilation; you can’t be safe as long as even one other chicken continues to peck. But the details of the end game allow a little room for variation. Will there be one survivor or none?

Peter Winkler discusses a similar problem, “Group Russian Roulette,” in Mathematical Puzzles: A Connoisseur’s Collection (p. 33). The actors in his version are not chickens but “armed and angry people,” who engage in rounds of simultaneously shooting random neighbors. Winkler observes that the probability of a survivor does not approach a limit as \(N\) increases. I don’t see this effect in the chicken problem: There is almost always a last chicken standing. What makes the difference, I believe, is that Winkler’s roulette players don’t waste their ammunition on players who have already been shot, whereas the chickens continue to peck at neighbors who don’t peck back.
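The all-neighbors flock is simple to simulate. This is a sketch of my reading of the rules, not Winkler's roulette: each unpecked chick pecks a uniformly random other chick, whether or not that chick has already been pecked. The function name and the flock size are illustrative assumptions.

```python
import random

def last_chicken_count(n, rng):
    """Complete-graph flock: return how many unpecked chicks remain (0 or 1)."""
    unpecked = set(range(n))
    while len(unpecked) > 1:
        hit = set()
        for chick in unpecked:
            # Pick any of the other n - 1 chicks, pecked or not.
            target = rng.randrange(n - 1)
            if target >= chick:
                target += 1  # shift past the chick itself
            hit.add(target)
        unpecked -= hit  # all pecks in a round land simultaneously
    return len(unpecked)

rng = random.Random(7)
results = [last_chicken_count(100, rng) for _ in range(2000)]
one_left = results.count(1) / len(results)
print(f"fraction of runs ending with a lone survivor: {one_left:.3f}")
```

Once a single unpecked chick remains, nobody is left to peck it, so the loop can stop there. Changing the target choice so that chicks aim only at unpecked flockmates would turn this into something closer to the roulette version.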

Finally, I return to the narrow confines of one dimension and to the mysterious binomial distributions that seem to predict the statistics of chicken pecking in this system. To review: If you roll 10,000 dice and count those that show a \(1\), you can expect to find about 1667. If you put 10,000 chicks in a circle and wait until all the pecking is done, you can expect about 1667 unpecked survivors. The dice experiment is described by a binomial distribution with parameters \(N = 10000\) and \(p = \frac{1}{6}\). The same model doesn’t work for the chickens: The predicted distribution is much broader than the observed one. But that’s not the weird part. The real puzzler is why a different binomial model, with parameters \(N' = 2500\) and \(p' = \frac{2}{3}\), does seem to match the experimental results.

The dice model’s failure to work for chicken pecking is not really a surprise. A key assumption underlying the binomial distribution is that the events or objects being counted are independent. That’s true for dice; one die doesn’t care what the others do. But the circle of pecking chickens is all about interactions between neighbors. If you have already been pecked, that alters the odds that your neighbors will eventually be pecked. Independence enters the binomial distribution through the coefficient \(N \choose k\). Given \(N\) dice with \(k\) of them showing \(1\)s, all possible interleavings of the \(1\)s among the other dice are equally likely; the binomial coefficient counts those arrangements. But given \(N\) chicks with \(k\) of them unpecked, it’s not true that all arrangements are equally likely. Indeed, many patterns, such as ○○○, are impossible.

If neighbor interactions spoil the binomial model with \(N = 10{,}000\) and \(p = \frac{1}{6}\), how are those interactions overcome in the model with \(N' = 2500\) and \(p' = \frac{2}{3}\)? For the longest time I was beguiled by the observation that 2500 is the expected number of survivors after a single round of pecking, and two-thirds of those individuals can be expected to survive all subsequent rounds. Surely, having those two numbers turn up in the binomial distribution cannot be a meaningless coincidence. Maybe not, but I was able to make sense of the situation only when I gave up on that line of inquiry.

What’s needed is a model in which we count the arrangements of 2500 objects, where two-thirds of the objects can be considered successes or survivors. I have found such a model. The objects are not individual chickens. They are groups of four chickens. Consider this set of 4-tuples:

a = ○●●●

b = ●○●●

c = ●●●●

If you select elements from this set at random and string them together, any sequence you create could be an output of the iterated pecking process. A typical result looks like this:

●●●●○●●●●○●●○●●●●●●●○●●●●○●●●●●●●○●●○●●●●○●●●●●●○●●●●○●●●○●●○●●●●●●●○●●

Note that this sequence satisfies all the rules for a flock of chickens that has pecked to completion. All unpecked ○s are singletons, surrounded by pecked neighbors. At least two ●s separate every pair of ○s, and this ensures that every element of the sequence has at least one ● neighbor. There is no way of concatenating any selection of a, b, and c elements that violates these rules. Furthermore, if a, b, and c are chosen with equal probability, the expected proportion of ○s in the sequence is \(\frac{1}{6}\).
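The tuple recipe can be sketched in a few lines. The helper names are hypothetical, and the validity test treats the string as a straight line rather than a closed ring, which is a simplifying assumption.

```python
import random

TUPLES = ["○●●●", "●○●●", "●●●●"]  # the elements a, b, and c

def random_sequence(count, rng):
    """Concatenate `count` tuples, each chosen with equal probability."""
    return "".join(rng.choice(TUPLES) for _ in range(count))

def is_valid_final_state(seq):
    """Check the two structural rules on a linear (non-wrapping) string."""
    no_adjacent_survivors = "○○" not in seq  # every ○ is a singleton
    two_pecked_between = "○●○" not in seq    # at least two ●s between ○s
    return no_adjacent_survivors and two_pecked_between

rng = random.Random(1)
seq = random_sequence(2500, rng)
density = seq.count("○") / len(seq)
print(seq[:72])
print(f"valid: {is_valid_final_state(seq)}   density of ○: {density:.4f}")
```

With 2500 tuples the string has 10,000 symbols, and each tuple contributes a ○ with probability \(\frac{2}{3}\), which is exactly where the binomial parameters come from.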

I am deeply ambivalent about this discovery. On the one hand, it’s always a relief to get to the bottom of a problem that has stumped you. On the other hand, what we have here is a recipe for creating a sequence with the same structure and statistics as the product of the pecking process, but it offers no insight into the nature of that process. There’s no connection with the behavior of the chickens. Worse, it’s not even a true or exact model. Although the curve appears to coincide with the data, it’s only an approximation. The proof of this fact is simple. The binomial distribution with \(N' = 2500\) and \(p' = \frac{2}{3}\) has an absolute cutoff at \(2500\). For any number of survivors greater than \(2500\), the model assigns a probability of zero. Yet the flock of \(10{,}000\) pecking chickens can in fact leave up to \(3333\) survivors.

The defect becomes visible in a smaller model, such as this one with \(N = 24\):

The predicted and observed curves exhibit slight mismatches everywhere, but pay particular attention to the right tail of the distribution, where the binomial curve (purple) dives to zero for all survivor numbers greater than six, whereas the experimental data (red) include 6718 instances with seven survivors and 49 instances with eight survivors.

A similar model for the one-round pecking process uses a set of four 3-tuples:

a = ○●●

b = ●○●

c = ●●○

d = ●●●

Again it generates a sequence that looks very much like the outcome of a pecking experiment, but fails to reproduce the tail of the distribution. In the model the highest possible density of survivors is \(\frac{1}{3}\) whereas it should be \(\frac{1}{2}\).

Perhaps you’re thinking that a cute high school problem about chicks pecking their neighbors doesn’t really merit an 8,000-word screed on Markov chains and probability distributions, with tables and equations and 25 graphs and diagrams. That thought has crossed my mind, too. However, I want to add just a few more words to argue that the exercise is not totally frivolous.

Mathematics does not owe us a tidy, closed-form, one-line solution to every problem, but we’d be foolish to give up the quest too easily. In this case, computer simulations are easy and productive. By running a program for five minutes I can get answers to a multitude of detailed questions, and I don’t have serious doubts about the correctness of those answers. But they don’t help me make the connection between the microscopic mechanisms (a chicken pecks left or right at random) and macroscopic observations (the distribution has \(\mu = \frac{1}{6}\) and \(\sigma = 23.56\)). Richard Hamming’s old chestnut says the purpose of computing is insight, not numbers, but insight is just what I’m missing.

Second, this is not really a problem about chickens, whether real or abstract. It is a gateway to a collection of other many-body problems in statistical physics and dynamical systems and cellular automata.

Finally, I’ve had fun, and what’s the harm in that? Maybe the fun’s not over. What about zombie chickens, whose pecks bring other chickens back to life?

Update 2017-07-11: Carl Witty has worked out the correct probability distribution for the single-round case. See his comment below.

A different kind of intuition is helpful in the first-round problem: pecks that occur simultaneously must be independent. (But in real life, nearly-simultaneous events are perceived to be dependent.) So the global problem becomes a local one and no effects can “propagate”, and in particular the number of chicks doesn’t matter.

There’s another interesting observation about the setups that you consider. Each of the n-dimensional tori has a symmetry group that acts transitively, so that each chick is equal. Very mathematically intuitive, but what about different kinds of symmetry? Do the 2D cylinder and Mobius strip have the same survival statistics?

Some generalizations include chicks on regular graphs, or graphs in general, or directed graphs (which connected graph maximizes the survival rate?) as well as the case of nonlethal pecks (what if they’re only 50% effective?).

As is typical in discrete mathematics, there’s a wide range of generalizations for a simple question, and few of them actually correspond to real-world chicken behavior.

I think I found a better analytical approximation for your one-round-of-pecking case. It’s closely related to the Beta distribution:

\[p(k) = \frac{k^{N/2} (N/2-k)^{N/2}N!}{(N/2)^N ((N/2)!)^2} \]

This function is still not correct, as it gives zero probability to \(k = 0\) and \(k = N/2\), but it does not display the asymmetrical error of your binomial solution.

I guess that working out why this is a plausible limit is the next step!

Very interesting, but I’m having some trouble getting sensible results. Here’s a plot for \(N = 24\).

Red is observed data; purple is the graph of your PDF. I thought it was just missing a factor of 2, so I tried doubling the amplitude (bright green), but that curve is a little off, too. The sum of \(p(k)\) for \(0 \le k \le 12\) is about 0.48. Perhaps I’ve misunderstood what you’re aiming at.

I’m also unsure how to deal with \((N/2)!\) when \(N\) is odd — gamma function?

First: I believe your graphics are wrong in the section on Markov chains; you’ve got a lot of diagrams with 1 black dot and 3 white dots that you call absorbing, but it’s actually 3 black dots and 1 white dot that should be absorbing. (The error is remarkably consistent; by my count you have 4 wrong SVG files and one wrong PNG file (that’s used twice). Also, in the first wrong SVG file the unreachable states with 3 “black” (gray) dots and 1 white dot should have 3 white dots.)

Second: I believe I have a better explanation for your one-round results, at least in the case where you have an odd number of chicks.

Let’s start by computing the probability of seeing exactly \(k\) unpecked chicks out of \(n\) total (after the first round). A particular chick is unpecked if the chick to the left pecks to its left, and the chick to the right pecks to its right; only those two chicks matter. In particular, the pecking state of the chick under consideration is irrelevant; so let’s stop thinking about it. Where it might seem like we should look at neighborhoods of \(a-1, a, a+1\), we’ll instead look at neighborhoods (shifted by one) of \(a, a+2\) (ignoring the chick at \(a+1\)). We can combine multiple such neighborhoods into a chain \(a, a+2, a+4, \ldots\), where we wrap around at the end of the list. If \(n\) is even, there are two such chains; for example, \(n=8\) has \((1,3,5,7)\) and \((2,4,6,8)\). If \(n\) is odd, there is only one chain; \(n=7\) has \((1,3,5,7,2,4,6)\).

We can represent a chain as a sequence of letters \(L\) or \(R\). Then within a chain, there is a sequence \(LR\) iff the chick between these two chicks is unpecked. (Since we consider that chains wrap around, having \(L\) at the end of the sequence and \(R\) at the beginning also counts as an unpecked chick.) Now let’s look at the probability of seeing exactly \(k\) sequences \(LR\) in a random chain of length \(m\). We can compute this by checking how many of the \(2^m\) possible chains have \(k\) \(LR\) sequences.

Now, between every two “adjacent” \(LR\) sequences there is exactly one \(RL\) sequence; in other words, every chain has the same number of \(LR\) and \(RL\) sequences. If we count both \(LR\) and \(RL\) changes together, then a chain with \(k\) \(LR\) sequences will have \(2k\) changes. For any given chain we can define a change-chain. Start by adding \(n\) for “no change” or \(c\) for “change” between every pair of letters; so for the chain \(LLLRLLR\) we would end up with \(LnLnLcRcLnLcRc\). (That last \(c\) is “between” the final \(R\) and the initial \(L\).) Then delete the original \(L\)s and \(R\)s, so our example would end up as \(nnccncc\).

Every chain converts to a single change-chain; every change-chain is the conversion of exactly two chains (one where the first letter is an \(L\), one where the first letter is an \(R\)). An \(m\)-letter chain will have \(k\) \(LR\)s iff its change-chain has \(2k\) \(c\)s. So we only need to count the number of \(m\)-letter change-chains with exactly \(2k\) \(c\)s, which is simply \({m \choose 2k}\) (and then double that, because each change-chain corresponds to two chains). Then the probability of having \(k\) \(LR\) sequences in a random \(m\)-letter chain is \(2{m \choose 2k}/2^m\), or \({m \choose 2k}/2^{m-1}\).
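The counting argument can be checked by brute force for short chains. This is only a verification sketch with names of my own choosing; it enumerates every circular chain of \(L\)s and \(R\)s and compares the tallies with \(2{m \choose 2k}\).

```python
from itertools import product
from math import comb

def lr_count(chain):
    """Number of circular positions where an L is immediately followed by an R."""
    m = len(chain)
    return sum(chain[i] == "L" and chain[(i + 1) % m] == "R" for i in range(m))

def counts_match(m):
    """True if exactly 2 * C(m, 2k) chains of length m contain k LR sequences."""
    tally = {}
    for chain in product("LR", repeat=m):
        k = lr_count(chain)
        tally[k] = tally.get(k, 0) + 1
    return all(tally.get(k, 0) == 2 * comb(m, 2 * k) for k in range(m // 2 + 1))

print(lr_count("LLLRLLR"))  # the worked example chain
print(all(counts_match(m) for m in range(1, 13)))
```

The example chain \(LLLRLLR\) has two \(LR\) sequences (one of them wrapping around the end), matching the four \(c\)s in its change-chain.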

Now if we go back to the original problem with \(n\) chicks, there are two cases. If \(n\) is odd, then it has a single chain of length \(n\), so the probability of having \(k\) unpecked chicks is

\[{{n \choose 2k} \over 2^{n-1}}.\]

If \(n\) is even, then it has two chains, each of length \(n/2\). Then the probability of having \(k\) unpecked chicks is

\[\sum_{0 \leq a \leq k} {{n/2 \choose 2a}{n/2 \choose 2(k-a)} \over 2^{n-2}}.\]

The probability in the even case is very messy (and I don’t know how to simplify it); also, I haven’t double-checked it, so there might be minor errors in the formula. So from now on I’ll concentrate on the odd case.
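One way to double-check both formulas is exhaustive enumeration on small rings. The sketch below is an independent check, not the test code mentioned in this comment; `exact_distribution` and `formula` are hypothetical names.

```python
from fractions import Fraction
from itertools import product
from math import comb

def exact_distribution(n):
    """Enumerate all 2**n peck patterns on a ring of n chicks."""
    tally = {}
    for dirs in product((-1, 1), repeat=n):
        pecked = {(i + d) % n for i, d in enumerate(dirs)}
        k = n - len(pecked)  # number of unpecked chicks
        tally[k] = tally.get(k, 0) + 1
    return {k: Fraction(c, 2 ** n) for k, c in tally.items()}

def formula(n, k):
    """Probability of k unpecked chicks: odd and even cases from the text."""
    if n % 2 == 1:
        return Fraction(comb(n, 2 * k), 2 ** (n - 1))
    h = n // 2
    total = sum(comb(h, 2 * a) * comb(h, 2 * (k - a)) for a in range(k + 1))
    return Fraction(total, 2 ** (n - 2))

for n in range(3, 13):
    dist = exact_distribution(n)
    ok = all(dist.get(k, 0) == formula(n, k) for k in range(n // 2 + 1))
    print(n, "matches" if ok else "differs")
```

Exact fractions avoid any rounding doubts, and rings up to a dozen chicks take only a moment to enumerate.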

If we look at the formula for the odd case, it’s not a standard binomial distribution; but it’s close. If we started with the standard distribution \({n \choose k} / 2^n\), we can turn it into our distribution by discarding the values for odd \(k\) (and pushing the surviving values to the left, to keep them adjacent), and then doubling each probability (so that the probabilities sum to 1 after we discard half of them). We can use this to see how to approximate our distribution with a normal distribution. A standard binomial distribution \({n \choose k} / 2^n\) can be approximated by a normal distribution with mean \(n/2\) and standard deviation \(\sqrt{n}/2\). Since our \(n\)-chick distribution has half the mean and is half as wide as the standard binomial distribution, its approximating normal distribution will also have half the mean and half the standard deviation. So our \(n\)-chick distribution can be approximated by a normal distribution with mean \(n/4\) and standard deviation \(\sqrt{n}/4\) (at least when \(n\) is odd).
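The mean and standard deviation can also be read directly off the odd-case formula, without going through the normal approximation. A minimal sketch, with `moments_odd` as an assumed helper name:

```python
from fractions import Fraction
from math import comb

def moments_odd(n):
    """Exact mean and variance of the unpecked count for an odd ring size n."""
    probs = {k: Fraction(comb(n, 2 * k), 2 ** (n - 1)) for k in range(n // 2 + 1)}
    mean = sum(k * p for k, p in probs.items())
    var = sum((k - mean) ** 2 * p for k, p in probs.items())
    return mean, var

mean, var = moments_odd(101)
print(mean, var)  # 101/4 101/16
```

For \(n = 101\) the mean comes out to exactly \(n/4\) and the variance to exactly \(n/16\), so the standard deviation \(\sqrt{n}/4\) is not just an approximation in the odd case.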

Since normal and binomial distributions are good approximations of each other, this \(n\)-chick normal distribution can in turn be approximated by a binomial distribution, which it turns out has parameters \(N'=n/3\) and probability \(p'=3/4\); but this is just an approximation of an approximation of the correct distribution, and I don’t believe that these \(N'\) and \(p'\) have any combinatorial meaning.

Third: I had a plan for how to approach the iterated problem. The plan didn’t work out, but I gathered some intriguing (and potentially useful) data on the way that I’d like to share.

My plan was to take the one-round results and record the number of singleton chicks and pairs of chicks separately. Then I would approximate them with separate normal distributions, multiply the mean and standard deviation of the pairs-of-chicks distribution by 1/3, and add the result back to the singleton-chicks distribution. (Using (incorrectly, as it turns out) the result that you can add normal distributions by adding their means and variances.) I hoped that this would give the correct mean and standard deviation of the experimental iterated distribution.

I decided to start with exact results, looking at all possible results of a small number of chicks; for \(n\) from 3 to 17, I computed the exact mean and variance of the distributions for all surviving chicks, all singleton surviving chicks, and all chicks surviving in pairs (which is always an even number). My first surprise was that the variance of the latter two distributions was actually higher than the variance of the former, even though the former distribution should be the sum of the latter two. Upon reflection, this makes sense; the result about adding normal distributions requires that the samples from the source distributions be independent, and our singleton-chick and paired-chick samples are evidently strongly anti-correlated (which seems obvious in retrospect).

The second surprise is that all of these means and variances turned out to be exact numbers of a particularly simple form. For \(n\) from 4 up, the all-chicks mean is \(n/4\), and the singleton-chicks and paired-chicks means are both \(n/8\). (Not too surprising… those are the asymptotically correct numbers, but I am a little surprised that they are exactly correct.) And for \(n\) from 7 up, the all-chicks variance is \(n/16\), the singleton-chicks variance is \(5n/64\), and the paired-chicks variance is \(9n/64\). (Results are tested up to \(n=17\), which is where I got bored of waiting for my slow test code.)
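These exact values can be reproduced with a short enumeration. This is a sketch of one way to do it, not the test code referred to above; it assumes a singleton is an unpecked chick with two pecked neighbors, and counts every other unpecked chick as paired.

```python
from fractions import Fraction
from itertools import product

def one_round_moments(n):
    """Exact (mean, variance) of the all, singleton, and paired unpecked counts."""
    rows = []
    for dirs in product((-1, 1), repeat=n):
        pecked = {(i + d) % n for i, d in enumerate(dirs)}
        free = [i not in pecked for i in range(n)]
        total = sum(free)
        # A singleton is an unpecked chick whose two neighbors are both pecked.
        singles = sum(
            free[i] and not free[i - 1] and not free[(i + 1) % n] for i in range(n)
        )
        rows.append((total, singles, total - singles))
    size = Fraction(len(rows))
    stats = []
    for column in zip(*rows):
        mean = sum(column) / size
        var = sum(x * x for x in column) / size - mean ** 2
        stats.append((mean, var))
    return stats

n = 9
for label, (mean, var) in zip(("all", "singletons", "paired"), one_round_moments(n)):
    print(f"{label:10s} mean = {mean}  variance = {var}")
```

Since the all-chicks count is the sum of the other two, the three variances \(n/16\), \(5n/64\), and \(9n/64\) imply a covariance of \(-5n/64\) between singletons and paired chicks, which quantifies the anti-correlation noted above.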

I don’t know enough about statistics or probability to take this any further, so I’m giving up here (at least for now).

Well, that’s impressive!

First, thanks so much for pointing out my black-and-white confusion. Should be fixed now.

Second, I am grateful to now have a probability model that works and whose derivation makes sense, at least for the one-round-of-pecking case. “. . . the pecking state of the chick under consideration is irrelevant; so let’s stop thinking about it” — that’s a step in the analysis that I would not have thought to take.

Just for the record, here’s a comparison of your odd and even distributions with the empirical data:

They both appear to be spot on.

A small correction in the application of the pigeonhole principle (chickeonhole principle? :-P). Where it says:

it should say:

The reason why the first block is wrong is that in configurations other than a circle it is indeed possible that everyone has a pecked neighbor and more than \(\frac{N}{2}\) survive, so the conclusion doesn’t follow from the argument.

(A correction that doesn’t detract anything from the fascinating nerdiness of this full post)

I just wanted to say I think visualizations on this post are pretty great–what a lovely set of diagrams and graphs!

One nitpick, why not “ghost” the grid lines for all graphs as done in the “fraction surviving sequential pecking” graph?

I think I got tripped up by the same double counting problem that you mentioned. It took me a long time to find the double count. The trick is that I calculated the fraction of unpecked pairs as if they were unpecked chicks.

But thinking through it was great! Thank you for your analysis.

Here was my writeup

—-

After the first round, every chick has a 1/4 probability to be unpecked. (1/2^2)

Of the unpecked chicks,

1/2 are immortal singletons, and 1/2 are in pairs.

So there is a

1/4 * 1/2 = 1/8 chance of immediately becoming a singleton unpecked chicken and therefore immortal, plus a 1/8th chance of ending up in a pair of unpecked chicks. (And a 6/8th chance of being pecked).

For each unpecked pair, there are four outcomes:

1/4: a pecks b

1/4: b pecks a

1/4: a pecks b and b pecks a

1/4: neither pecks the other.

Let the number of immortal chicks coming from a pair = u. Then,

u = 1/2 + 1/4u

3/4u = 1/2

u = 2/3

From above, the expected fraction of chicks in pairs is 1/8, so the fraction of pairs is 1/16 of the total chick count.

1/8+ 1/16(2/3) ~= .1667
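The arithmetic in this writeup can be redone with exact fractions. A small sketch; the variable names are mine, and the numbers are the ones stated above.

```python
from fractions import Fraction

# Each round, a pair yields one immortal chick with probability 1/2
# (exactly one of the two pecks the other), none with probability 1/4
# (mutual pecks), and stays a pair with probability 1/4.
settle_one = Fraction(1, 2)
stay_pair = Fraction(1, 4)

# u = 1/2 + u/4  rearranges to  u = (1/2) / (1 - 1/4)
u = settle_one / (1 - stay_pair)

singleton_share = Fraction(1, 8)  # chicks that are immortal after round one
pairs_per_chick = Fraction(1, 16)  # 1/8 of chicks sit in pairs, two per pair
survivors = singleton_share + pairs_per_chick * u
print(u, survivors)  # 2/3 1/6
```

Keeping the pair count (1/16) separate from the count of chicks in pairs (1/8) is what avoids the double count.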