The Ormat Game

by Brian Hayes

Published 16 August 2010

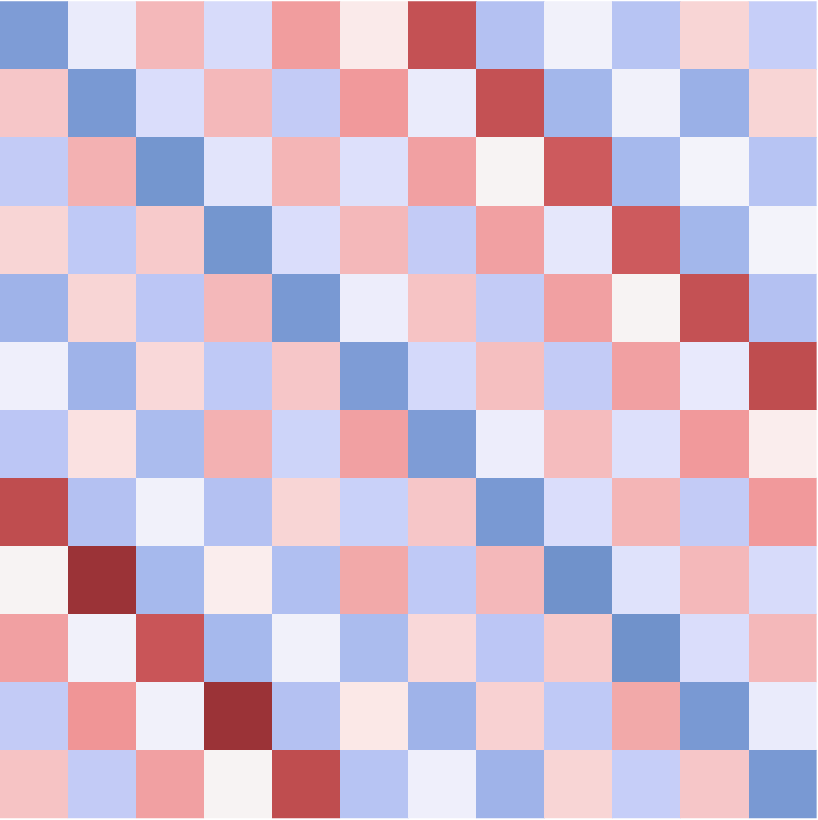

Here’s the deal. I’m going to give you a square grid, with some of the cells colored and others possibly left blank. We’ll call this a template. Perhaps the grid will be one of these 3×3 templates:

You have a supply of transparent plastic overlays that match the grid in size and shape and that also bear patterns of black dots:

Note that each of these patterns has exactly three dots, with one dot in each row and each column. The six overlays shown are the only 3×3 grids that have this property.

Your task is to assemble a subset of the overlays and lay them on the template in such a way that dots cover all the colored squares but none of the blank squares. You are welcome to superimpose multiple dots on any colored square, but overall you want to use as few overlays as possible. To make things interesting, I’ll suggest a wager. I’ll pay you $3 for a correct covering of a 3×3 template, but you have to pay me $1 for each overlay you use. Is this a good bet?

Before going further, I should mention that not every conceivable template can be covered under these rules. To take an obvious example, no 3×3 template with fewer than three colored squares can possibly be covered by any combination of the six overlays. But I promise to submit only templates that can be covered by some combination of the given dot patterns; if I err about this, I forfeit the bet.

How does the game play out? If I give you the template marked “1″ above, you can easily win; just choose permutations a and b, which together cover all the colored squares and no others. You pay $2 and get $3. Template 2, with all nine squares colored, looks like it might be the toughest challenge. Clearly, it cannot be covered with fewer than three overlays, since we need a total of nine dots; and it turns out that exactly three overlays are required. Indeed, there are two ways of covering the template with three overlays: a + d + e and b + c + f. Thus this template is a breakeven proposition: You earn $3 and pay $3.

Now we come to template 3, which has eight colored squares and one blank. Surely if you can cover the full nine squares with just three overlays, then you should also be able to cover eight squares—no? I invite you to try it. In fact the only covering that works requires four overlays: b + d + e + f. Thus you shouldn’t take my bet, since I can always give you a template with just one blank, and you’ll have a net loss of $1.

Some background. I’ll return to the gaming table momentarily, but first let me explain what this is all about and where it came from. A few weeks ago, I was writing about “ranges of rankings,” which led me into the topic of permutation matrices. To recapitulate:

- A permutation matrix is a square matrix with a single 1 in each column and each row, and all the rest of the elements 0.

- An ormat is a superposition of permutation matrices, formed by applying the Boolean OR function to corresponding elements of the permutation matrices. For example:

- Not all square matrices with (0,1) entries can be formed by OR-ing permutation matrices, but there’s an efficient algorithm for deciding whether or not a given matrix is an ormat. (I thank some helpful commenters for enlightening me on this point.)

- Given an ormat, the total number of distinct permutation paths that can be threaded through the 1 entries of the matrix is equal to the permanent of the matrix. Calculating the permanent is known to be a hard computational problem.

In a comment, Barry Cipra posed the following query:

The permanent tells us the maximum number of different permutations that can be OR-summed to produce a given ormat, but what is the corresponding minimum number? Also, in how many different ways can the minimum be achieved?

The connection between ormats and my little game is probably apparent by now. The template of colored and blank squares is an ormat; the dotted overlays represent permutation matrices; to maximize your payoff in the game (or to minimize your loss), you need to answer Barry’s first question, finding the minimum number of permutations that can be combined to yield the given ormat.

For 3×3 matrices, we can solve this problem by exhaustive search, calculating the OR-sums of all possible combinations of the six 3×3 permutation matrices taken 1, 2, 3, …, 6 at a time. I did this with pencil and paper on a recent airplane trip. Here is a summary of the results:

Some of the numbers on this card are easy to explain. The six ormats with just three 1 entries are the permutation matrices themselves. There are six of them because there are 3! = 6 permutations of three things. There are no ormats with four 1 entries for a reason that bears thinking about: There can be no permutations that differ from one another in just one element. When you superimpose any of the six overlays shown above, you can wind up with three, five or six dots, but never four.

At the other end of the scale, it’s no surprise that there’s exactly one ormat with nine 1 entries, and that it takes three permutations to produce it. And then there are the nine ormats with eight 1 entries, which each require four permutations to be OR-ed. These are the single-blank patterns like template 3 above.

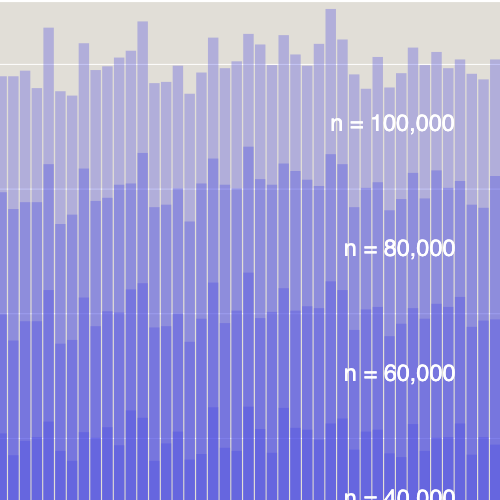

Based on these results, I began speculating about what I would see in a tabulation of all 4×4 ormats.

There would have to be 4! = 24 patterns with four 1 entries, and just one pattern with all 1s, generated by OR-ing four permutations. And there should be 16 ormats that require five permutations, namely the 16 matrices with a single 0 element. This last prediction seemed a little less self-evident than the others.

Pocket change and Cheerios. My thoughts about the single-zero (or single-blank) case went something like this. To cover 15 squares with sets of four dots each, we need at least four sets, or else we simply won’t have enough dots. So a useful starting point is one of the optimal arrangements that cover all 16 squares without gaps or overlaps. By this time I had grown tired of drawing zillions of dots, and so I started working with sets of coins.

In this arrangement each coin denomination forms a permutation, with no two pennies, nickels, dimes or quarters in the same row or the same column. We have successfully covered all the colored squares, but unfortunately we’ve also covered the blank at the lower right. Thus this pattern of coins is not an acceptable solution, but maybe we can fix it up somehow?

Moving the penny from the blank square to another square in the same column solves one problem but creates another: Now the arrangement of pennies is no longer a permutation. There are two pennies in the third row.

So now we have to shift another penny to restore the one-per-row-and-column property. Inevitably, this leaves a colored square uncovered. The only way we can cover that exposed square is to introduce a fifth permutation. Since I had run out of coin denominations, I chose a popular brand of breakfast toroids. Voila:

There’s nothing special about the particular moves I chose in this sequence. If you try some alternatives, you should be able to persuade yourself that moving the penny that covers the blank to any other square in the fourth column (or in the fourth row) would lead to essentially the same situation. Likewise the game would come out the same if the single blank square were placed anywhere else in the grid. And you could also start with a different set of initial permutations (provided they cover all the squares).

This coin-shuffling exercise demonstrates that we can cover any 4×4 template that has a single blank by combining no more than five permutations, but how do we know that five are actually needed? Maybe there’s some totally different arrangement that would do the job with just four permutations? Well, think about what such an arrangement would look like. It would have to differ at exactly one position from some other layout of permutations that covers the full 16-square grid. But no two permutations can differ at one and only one place. Thus the reason there can be no four-permutation cover of 15 squares is essentially the same as the reason no 4×4 ormat pattern can cover just five squares.

This argument generalizes to k×k matrices: For any integer k, there must be at least k ormat patterns that cannot be covered with fewer than k+1 permutations. But then comes the bigger speculative leap: Perhaps k+1 is an upper bound. Perhaps part of the answer to Barry’s question is that no k×k ormat pattern requires more than k+1 permutations. At one point I even had a “proof” of this conjecture. Then I wrote a program to check it, doing much the same thing I did with the dots on the airplane.

Out of bounds. My program found the expected 16 ormat patterns that require five permutations—and it found many more as well. In all it identified 2,032 4×4 ormats that can’t be composed from fewer than five permutations. And then came a bigger surprise: The program also found 480 patterns that require six permutations. So much for my proposed upper bound.

One of those problematic 480 ormats takes this form:

Looking over this pattern, I thought I understood where my earlier reasoning had gone awry. This matrix is just like the single-zero pattern, but with two zeros! (I do mean for that statement to make sense. Bear with me.) Suppose we start again with a set of four permutations that completely cover the grid, including the two blanks.

Then we can uncover each blank just as we did in the coin-shuffling procedure above, although we have to be careful the two sets of movements don’t interfere with each other. (Not much point in removing the penny from a blank square, then putting the nickel there.) Here is a strategy for clearing both blank squares while maintaining the one-per-column-and-row permutation property:

Inevitably, when we uncover the two blank squares, we also remove coins from two colored squares, which now have to be filled in again. The key point is that no single permutation can repair that damage, because the two open colored squares are in the same row. To cover both of those squares we need two additional permutations.

Other ways of reshuffling the coins avoid putting the two open squares in the same row or column, but they still foil all attempts to complete the covering with just five permutations. Try adding four Cheerios to the diagram below. If you cover both of the open blue squares, then either you also cover one of the blank squares or you wind up with two Cheerios in the same row.

So now it’s clear we need as many as six permutations to cover a 4×4 ormat. Does that suggest that the general upper bound might be k+2 rather than k+1? Or perhaps the appropriate formula is 2k–2? In support of this latter possibility I offer these two ormats, which require 8 and 10 permutations respectively:

Another wager. Having fooled myself several times about the upper bound on minimal ormat coverings, I feel I should build in a little margin for error before I invite you to make a further wager. We already have direct evidence that covering a k×k ormat can take as many as 2k–2 permutations. So I’ll be generous and offer a full $2k for a proper covering, while charging $1 per permutation. If k=3 or k=4, you can definitely make money on this deal. But is it a good bet for larger k? (Hint: I’d be willing to play the game on these terms for real money.)

• • •

Update 2010-08-19: No takers for my bet, eh? Too bad; I had already spent my winnings.

Barry Cipra, who raised the question about minimal ormat covers in the first place, sends this illuminating letter:

I’m going to tiptoe a short ways out on a long long limb and conjecture (really just guess) that the “worst case” behavior, in terms of the minimum number of permutations it takes to produce a given ormat, occurs for ormats of the following form, shown here for k=7:

So as not to abuse existing matrix terminology, I’ll call any (square) matrix of this type—i.e., whose entries below the main subdiagonal are all 0—”uppity triangular.” I can (and will!) show that this uppity triangular ormat for k=7 requires (at least) 16 permutations—and the number appears to grow concavely upwards from that, so I, for one, will definitely not take you up on your $2k wager.

The trick, I realized, is to view each ormat as the “shadow” of what I’ll call an “addmat.” If you let P1, P2, …, Pr be k×k permutation matrices, their addmat is simply the ordinary result of addition: S = P1 + P2 + … + Pr, whose entries are positive integers wherever one or more of the constituent permutations has a 1 and otherwise 0. The associated ormat is obtained by changing each of these entries to a 1, while leaving the 0′s alone. In this sense, the ormat’s 1′s are the “shadows” of the addmat’s positive entries.

What’s crucial is that addmats have a lovely little property not shared with their shadows: the row and columns sums of the entries of an addmat all equal the number of permutations that produce them, r.

Come now, let us reason together…. The uppity triangular ormat example above (for k=7) must come from an addmat of the form

where a, b, and all the *’s are positive integers. In particular, each * is at least 1. Since all row and column sums must be equal, the sum a+b must equal the sum of b and all 6 *’s above it. Hence a is at least 6. Likewise a+b must equal the sum of a and all 6 *’s above it, so b is also at least 6. Hence a+b is at least 12, which means the OR-sum that produced the given ormat involves at least 12 permutations.

This clearly generalizes to arbitrary k, which is more than “direct evidence” that covering a k×k ormat can take as many as 2k–2 permutations, it’s rigorous proof! But we can immediately do better, at least on a case-by-case basis. If we try to get by with just 12 permutations for this uppity triangular ormat, we quickly run into trouble. We obviously must have a = b = 6, and it follows that all the *’s above them are 1′s (to make those column sums 12). That is, we have the addmat

where I now wish to call your attention to the entry labeled “@”. To make its row-sum equal 12, we need @ = 10. But that means its column sum (with the 5 *’s above it) is at least 15, which cannot be! So we are forced to try larger values of a and/or b—which is to say, we need more permutation matrices to produce this addmat.

It turns out you can’t satisfy the row and column sum condition until you get to a = b = 8. I won’t take you through all the steps, but just give you a taste with the penultimate possibility, a = 7, b = 8. The best you can hope for in this case is

Note that I put as much of the “weight” in the last two columns as close to the 7 and 8 as possible, so that I could use the smallest possible value (10) as the entry with 5 positive entries above it. This makes the last three rows, and the right three columns all have the same sum, 15, but now we see a problem in the 12′s column: Its sum is at least 16. So once again, we’re screwed. It’s only with the next attempt that we avoid contradiction:

This matrix finally has all its row and column sums equal. Please note, this may or may not be an actual addmat of a set of 16 permutation matrices—I suspect it probably is, but I haven’t bothered to check. All we know is that it satisfies a necessary condition of being an addmat, namely that its row and column sums are all equal. (It’d be nice if that were also a sufficient condition, but something tells me it isn’t.)

This example, which can clearly be played out for larger values of k, suggests that not only are you safe with a $2k wager, but with a $(2k+2) wager and higher I’ve played around with this a bit, and persuaded myself that the number of permutation matrices will go to 2k + a lot—for k=10, if I did things correctly, you need 24 permutations (or possibly more, if the uppity triangular matrix the analysis leads to is not an actual addmat). I am entirely convinced that some additional careful thought can streamline the analysis into a nice, slick proof. I’m just not sure I haven’t already made a mistake, and built an elaborate house of cards….

Does any of this jibe with what you’ve already found to be the case?

It does indeed jibe.

First of all, to answer a small question Barry left open, here is a set of 16 permutations that will successfully cover his 7×7 “uppity triangular” matrix:

This was found with a simple greedy search.

My own attempts to find an upper bound have focused not on uppity triangular matrices but on matrices I’ve been calling “flags,” like this 7×7 case:

This matrix also requires 16 permutations for a proper covering. To see why, try threading permutations through the columns of the matrix, starting at the left edge and in each column choosing a 1 element (never a 0) from a different row. Because of the block of zeros at the upper left, the first three elements of every permutation must lie in rows 4 through 7. Thus each permutation “uses up” three of the last four rows in the first three columns, and the rest of the permutation can revisit this range of rows only once. It follows that each permutation can touch only one element in the 4×4 block of 1s in the lower right corner of the matrix, and at least 16 permutations are needed to cover all the 1s in the matrix. Showing that 16 are sufficient is not hard.

This kind of analysis works for any odd k, and thus we know that such matrices can require as many as

\[\left\lceil\frac{k}{2}\right\rceil^2\]permutations. (For even k the situation is a little less symmetrical, and I haven’t worked out the exact details.)

These results give us a lower bound on the upper bound on the number of permutations that may be needed to cover a k×k ormat. But we haven’t proved it’s the true upper bound. Are there other ormats that require even more permutations? My guess is no, but keep in mind that almost all my conjectures along these lines have turned out to be wrong.

Responses from readers:

Please note: The bit-player website is no longer equipped to accept and publish comments from readers, but the author is still eager to hear from you. Send comments, criticism, compliments, or corrections to brian@bit-player.org.

Publication history

First publication: 16 August 2010

Updated with letter from Barry Cipra: 19 August 2010

Reformatted: 20 April 2025

Corrected a garbled passage: 24 April 2025

Replaced math image with LaTeX code: 24 April 2025

Brian, are you now conjecturing that all your conjectures turn out to be wrong?

Your flag-waving approach is much much better than my uppity triangular idea. It gets to a good general result without any messy case-by-case analysis. Well done!

To answer Barry’s question, yes, if all the row and column sums are equal, then the matrix is a sum of permutation matrices. The result goes back to Konig, 1915/16, and can be seen as a consequence of Hall’s Marriage Theorem. See D Leep and G Myerson, Marriage, magic, and solitaire, Amer Math Monthly 106 (1999) 419-429.