My closest friends and family must make do with an old-fashioned paper-and-postage greeting card, but for bit-player readers I can send some thoroughly modern pixels. Happy holidays to everyone.

In recent months I’ve been having fun with “deep dreaming,” the remarkable toy/tool for seeing what’s going on deep inside deep neural networks. Those networks have gotten quite good at identifying the subject matter of images. If you train the network on a large sample of images (a million or more) and then show it a picture of the family pet, it will tell you not just whether your best friend is a cat or a dog but whether it’s a Shih Tzu or a Bichon Frise.

What visual features of an image does the network seize upon to make these distinctions? Deep dreaming tries to answer this question. It probes a layer of the network, determines which neural units are most strongly stimulated by the image, and then translates that pattern of activation back into an array of pixels. The result is a strange new image embellished with all the objects and patterns and geometric motifs that the selected layer thinks it might be seeing. Some of these machine dreams are artful abstractions; some are reminiscent of drug-induced hallucinations; some are just bizarre or even grotesque, populated by two-headed birds and sea creatures swimming through the sky.

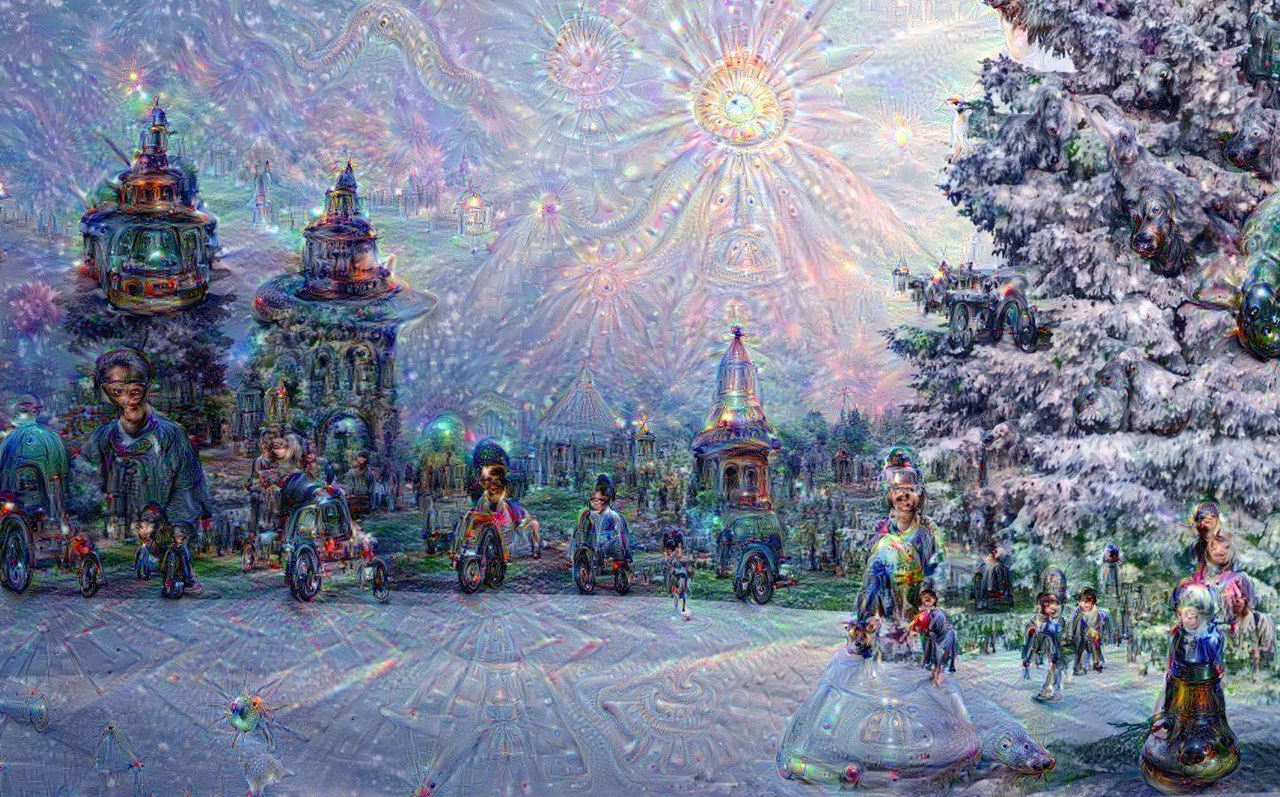

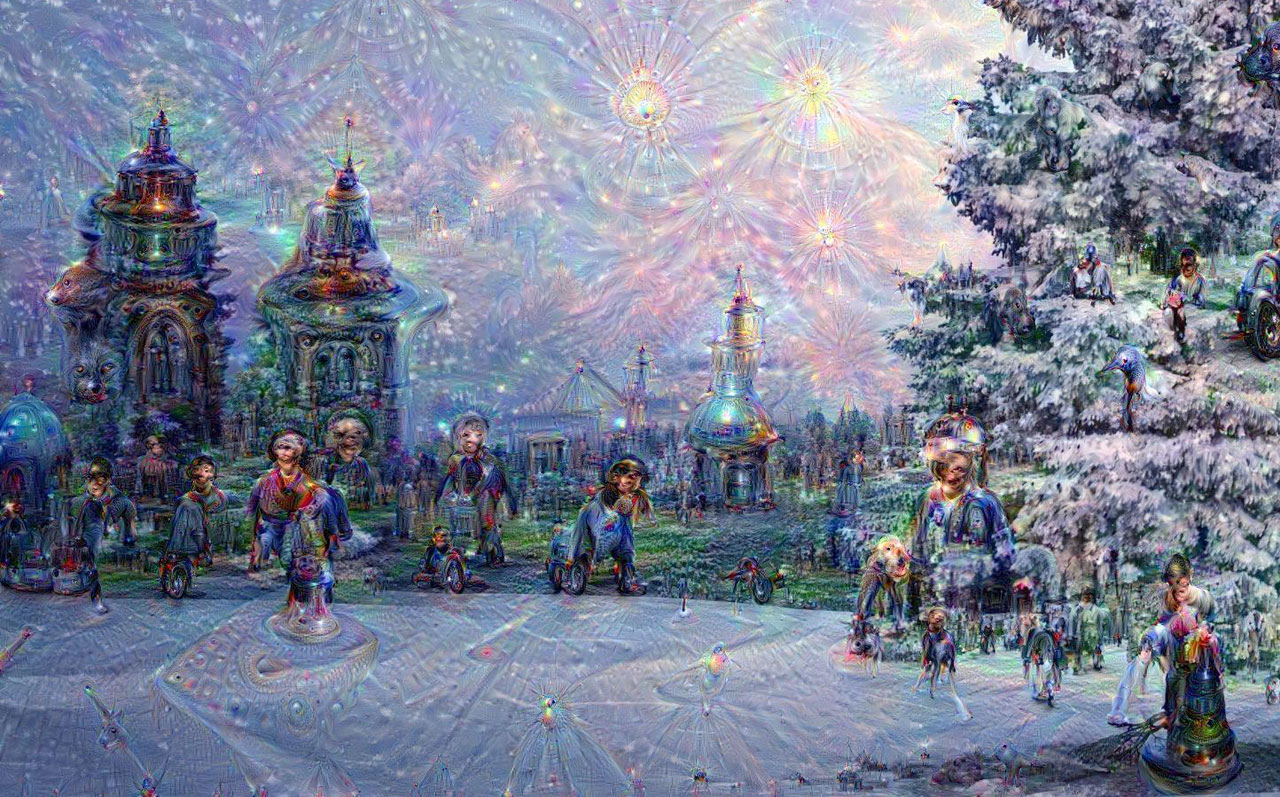

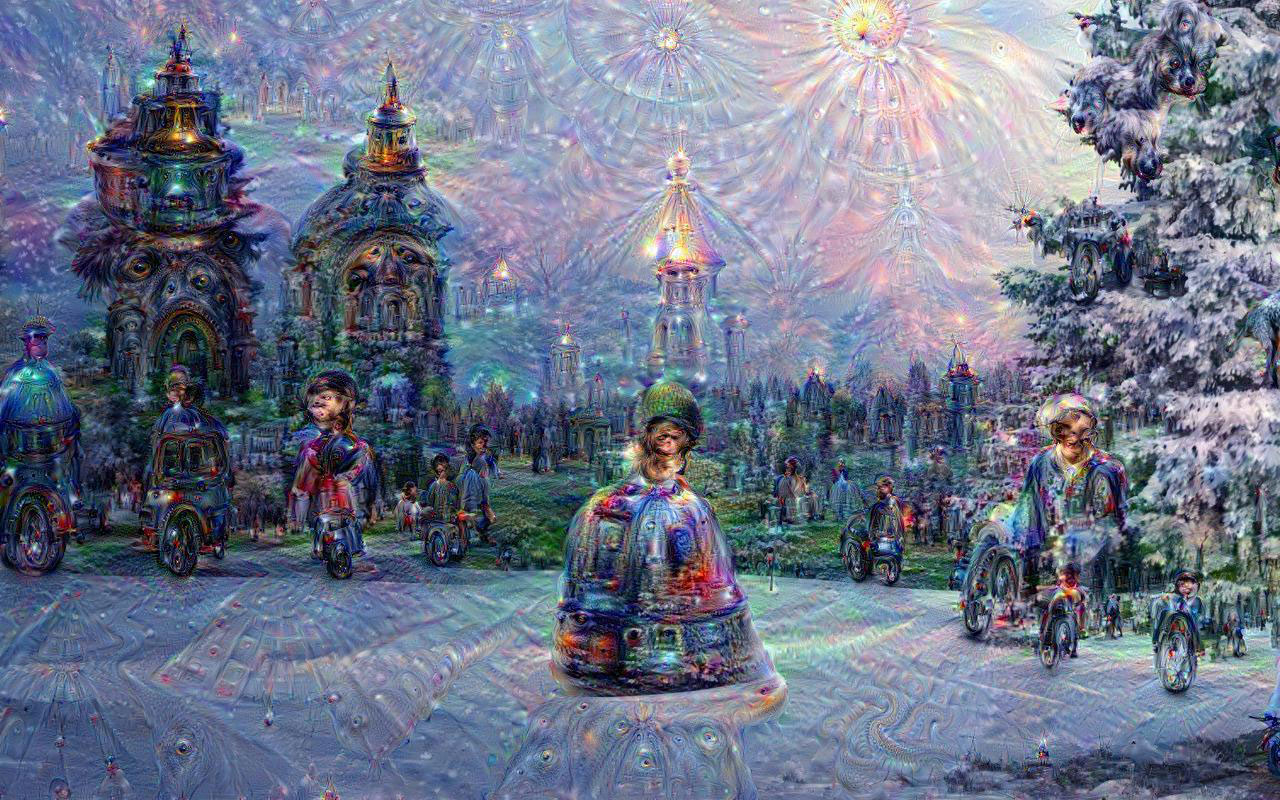
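The procedure described above (probe a layer, see which units respond, push that pattern back into the pixels) can be read as gradient ascent on the image itself. Here is a minimal sketch of that idea, not the actual deep-dreaming code. The "layer" is a hypothetical stand-in, a small random linear map followed by a ReLU, and the names `W`, `activations`, `objective`, and `dream`, along with the step-size normalization, are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for one network layer: a fixed random linear map
# followed by a ReLU. A real network such as GoogLeNet is far larger.
n_pixels, n_units = 64, 16
W = rng.normal(size=(n_units, n_pixels))

def activations(image):
    """Response of each unit in the toy layer to a flattened image."""
    return np.maximum(W @ image, 0.0)

def objective(image):
    """Half the squared L2 norm of the activations: how excited is the layer?"""
    a = activations(image)
    return 0.5 * float(a @ a)

def gradient(image):
    """Gradient of the objective with respect to the pixels."""
    return W.T @ activations(image)

def dream(image, steps=20, step_size=0.1):
    """Gradient ascent on the pixels: amplify what the layer already responds to."""
    image = image.copy()
    for _ in range(steps):
        g = gradient(image)
        # Divide by the mean absolute gradient so the step size is scale-free.
        image += step_size * g / (np.abs(g).mean() + 1e-8)
    return image

start = rng.normal(size=n_pixels)
result = dream(start)
print(objective(start), objective(result))
```

The second number printed should be larger than the first: each step changes the pixels in the direction that makes the layer's existing response stronger, which is the sense in which the layer's "fantasies" get painted into the image.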

For this year’s holiday card I chose a scene appropriate to the season and ran it through the deep-dreaming program. You can see some of the output below, starting with the original image and progressing through fantasies extracted from deeper and deeper layers of the network. (Shorthand labels identifying the network layers appear at lower right.)

A few notes and observations:

- The embellishments begin with fairly abstract motifs, then become more elaborate and figurative. But this evolution reaches a peak near the middle of the sequence; after that, things calm down a little. By the end, the trees look like trees again, and the sky has more snowflakes and fewer diaphanous monsters. Perhaps this turn toward realism is to be expected, since the later layers are where the neural network settles on a final interpretation of the image.

- Information flows through the layers in sequence, with any hypothesis formed in one layer passed along to the later ones. You might think this would tend to stabilize interpretations. If layer 4a sees a puppy face in a certain region, then layers 4b and 4c would be influenced by this verdict. And indeed there are places in the image where certain wild fantasies do persist from one stage to the next; for example, a brightly colored vehicle first appears in the lower right quadrant in layer 4c, and it reappears with variations in the next three layers (4d, 4e, and pool4). On the whole, however, there is less continuity from layer to layer than I would have expected.

- The scene in layer 4c fascinates me. For one thing, it has a cast of characters quite unlike the surrounding layers—human figures (sort of) rather than animals, and buildings that look like gazebos or onion-domed spires. But what intrigues me most is the geometric transformation of the landscape. The world has been flattened; in the left half of the image, the buildings all have their foundations resting on a plane that doesn’t actually exist, and the people have their feet on the ground. The network is constructing a perspective view.

And some links:

- Deep Dreaming was invented by three young engineers and interns at Google, Alexander Mordvintsev, Michael Tyka, and Christopher Olah. As far as I know, their only publications on the subject are a pair of blog posts. The first post announced the discovery and showed some sample images; the second provided links to the open-source code. The code itself is available on GitHub.

- The neural network used in these experiments, called GoogLeNet, was devised by Christian Szegedy and several colleagues at Google Research. They describe it in arXiv:1409.4842.

- For the experiments described here, the GoogLeNet program was trained on more than a million pre-labeled images retrieved from a database called ImageNet. The subjects of these images, which had been downloaded from the Internet, were what the neural network learned to recognize.

- “Computer Vision and Computer Hallucinations” is my American Scientist article on the subject (Vol. 103, No. 6, November–December 2015, pages 380–383).

- If you would like to try playing with these toys yourself, all the software is open source, but getting it installed and running can be an adventure. I’ve written a memo on my own experiences, which includes links to other useful resources.

- I would like to credit the photographer who created the original image, but I have not been able to track down the source. I found it at the Latvian website www.lejins.lv, but there’s no information there about its provenance. Thus I am using it here without permission. Mea culpa.

Update 2015-12-31: In the comments, Ed Jones asks, “If the original image is changed slightly, how much do the deep dreaming images change?” It’s a very good question, but I don’t have a very good answer.

The deep dreaming procedure has some stochastic stages, and so the outcome is not deterministic. Even when the input image is unchanged, the output image is somewhat different every time. Below are enlargements cropped from three runs probing layer 4c, all with exactly the same input:

They are all different in detail, and yet at a higher level of abstraction they are all the same: They are recognizably products of the same process. That statement remains true when small changes—and even some not-so-small ones—are introduced into the input image. The figure below has undergone a radical shift in color balance (I have swapped the red and blue channels), but the deep dreaming algorithm produces similar embellishments, with an altered color palette:

In the pair of images below I have cloned a couple of trees from the background and replanted them in the foreground. They are promptly assimilated into the deep dream fantasy, but again the overall look and feel of the scene is totally familiar.
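Two ingredients of these experiments are easy to sketch in code. The first is a stochastic stage: one plausible source of the run-to-run variation, assumed here for illustration, is a random shift ("jitter") applied to the image before each update and undone afterward. The second is the red-blue channel swap used to perturb the input. The function names and the height × width × RGB array layout are assumptions, not taken from the deep-dreaming code.

```python
import numpy as np

def jittered(image, max_shift, rng):
    """Roll an H x W x 3 image by a random offset; return it with the offset."""
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    return np.roll(image, shift=(dy, dx), axis=(0, 1)), (int(dy), int(dx))

def unjittered(image, offset):
    """Undo a shift produced by jittered()."""
    dy, dx = offset
    return np.roll(image, shift=(-dy, -dx), axis=(0, 1))

def swap_red_blue(image):
    """Exchange the red and blue channels of an H x W x 3 RGB image."""
    return image[..., ::-1].copy()

rng = np.random.default_rng()  # unseeded on purpose: every run differs
image = np.arange(4 * 5 * 3, dtype=float).reshape(4, 5, 3)

shifted, offset = jittered(image, max_shift=2, rng=rng)
restored = unjittered(shifted, offset)
swapped = swap_red_blue(image)
print("random offset this run:", offset)
```

Because the generator is unseeded, the offset differs from run to run even though the input is identical. In a procedure that updates the image between the shift and its reversal, that alone would be enough to make the outputs differ in detail.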

Based on the evidence of these few experiments, it seems the deep dreaming images are indeed quite robust, but there’s another side to the story. When these neural networks are used to recognize or classify images (the original design goal), it’s actually quite easy to fool them. Christian Szegedy and his colleagues have shown that certain imperceptible changes to an image can cause the network to misclassify it; to the human eye, the picture still looks like a school bus, but the network sees it as something else. And Anh Nguyen et al. have tricked networks into confidently identifying images that look like nothing but noise. These results suggest that the classification methods are rather brittle or fragile, but that’s not quite right either. Such errors arise only with carefully crafted images, called “adversarial examples.” There is almost no chance that a random change to an image would trigger such a response.
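The contrast between crafted and random changes shows up even in a toy setting. The sketch below uses a hypothetical linear classifier with random weights, not a deep network, and the crafted change simply moves every pixel by a small amount against the sign of its weight. That is a simplification in the spirit of sign-of-gradient attacks, not the optimization procedure used by Szegedy and colleagues. All names and numbers (`w`, `eps`, `margin`, the 10,000-pixel image) are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 10_000                       # number of "pixels" in the toy image
w = rng.normal(size=d)           # weights of a hypothetical linear classifier

def score(x):
    """Positive means 'school bus'; negative means 'something else'."""
    return float(w @ x)

eps = 0.01                                # largest change allowed per pixel
margin = 0.5 * eps * np.abs(w).sum()      # half of what a crafted change can erase

# Start from random values in [0, 1], remove their component along w,
# then add back just enough of w to give the image a score equal to margin.
base = rng.uniform(0.0, 1.0, size=d)
x = base - (w @ base) / (w @ w) * w + margin / (w @ w) * w

# Crafted change: push every pixel by eps in the direction that lowers the score.
x_adv = x - eps * np.sign(w)

# Random changes of the same size: each pixel moves by +eps or -eps at random.
trials = 200
flips = sum(
    score(x + eps * rng.choice([-1.0, 1.0], size=d)) < 0
    for _ in range(trials)
)

print(f"original score:    {score(x):8.2f}")
print(f"adversarial score: {score(x_adv):8.2f}")
print(f"random changes that flipped the label: {flips} of {trials}")
```

The crafted change lines up all 10,000 tiny nudges in the same harmful direction, so their effects add. In a random change the nudges mostly cancel, and the score barely moves relative to the margin.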

The image is broken and not visible, at least in Chrome for Windows.

Thanks for the heads up! Should be fixed now.

It was working on the main page (that is, http://bit-player.org) and I never checked the individual post page (http://bit-player.org/2015/deep-dreaming-with-every-card-i-write). Relative links that work in one context don’t work in the other. I knew that once — the last time I tripped over this bug.

Well now this family member has both. :)

Seeing all the layers…layers I didn’t know existed til now, is fun.

However if I had to choose, I’d still take the old fashioned way every time.

That image in 4c is so realistic that if you had asked me what the input image was, my first guess would include the vehicle, dancer in front, lit green slope on the left, and distant towers on the left and right. This is not true for any of the other layers, where the fountains, chalices, fish, dogs, birds etc. all seem out of place. Perhaps the algorithm did a good job of learning or pretending to learn about context, or maybe it just got lucky.

How robust are the deep dreaming images? If the original image is changed slightly, how much do the deep dreaming images change?

Please see the addendum to the post.