Advances in Obfuscation

by Brian Hayes

Published 27 September 2013

Richard M. Stallman, the gnuru of the free software movement, has launched a campaign to liberate JavaScript. His call to action begins: “You may be running nonfree programs on your computer every day without realizing it—through your web browser.”

My first reaction to this announcement was bafflement. Why pick on JavaScript? I had been thinking that one of JavaScript’s notable virtues is openness. If you include a JavaScript program in a web page, then anyone who can run that program can also inspect the source code. Just use the browser’s “View Source” command, and all will be revealed.

Reading on in Stallman’s complaint, I began to see more clearly what irks him:

Google Docs downloads into your machine a JavaScript program which measures half a megabyte, in a compacted form that we could call Obfuscript because it has no comments and hardly any whitespace, and the method names are one letter long. The . . . compacted code is not source code, and the real source code of this program is not available to the user.

I have to agree that obfuscated JavaScript is annoying; indeed, I have griped about it myself. Although there’s an innocent reason for distributing the code in this “minified” form—it loads faster—some software authors doubtless don’t mind that the compression also inhibits reverse engineering.

The very idea of software obfuscation turns out to have a fascinating theoretical background, as I learned last week at a talk by Craig Gentry of IBM Research. Gentry is also the inventor of a scheme of homomorphic encryption (computing with enciphered data) that I described in a column last year. The two topics are closely related.

Suppose you have a nifty new way to compute some interesting function—factoring large integers, solving differential equations, pricing financial derivatives. You want to let the world try it out, but you’re not ready to reveal how it works. One solution is to put the program on a web server, so that users can submit inputs and get back the program’s output, but they learn nothing about how the computation is carried out. This is called oracle access, since it is rather like interacting with the oracles of ancient Greek tradition, who gave answers but no explanations.

A drawback of running the program on your own server is that you must supply the computing capacity for all visitors to the site. If you could wrap your function up as a downloadable JavaScript program, it would run on the visitor’s own computer. But then the source code would be open to inspection by all. What you want is to let people have a copy of the program but in an incomprehensible form.

The kind of obfuscation provided by a JavaScript minifier is of no help in this context. The result of the minification process is garbled enough to peeve Richard Stallman, but it would not deter any serious attempt to understand a program. The debugging facilities in browsers as well as widely available tools such as jsbeautifier offer help in reformatting the minified program and tracing through its logic. The reconstruction may be tedious and difficult, but it can be done; there is no cryptographic security in minification.

Secure obfuscation is clearly a difficult task. In ordinary cryptography the goal is to make a text totally unintelligible, so that not even a single bit of the original message can be recovered without knowledge of the secret key. Software obfuscation has the same security goal, but with a further severe constraint: The obfuscated text must still be a valid, executable program, and indeed it must compute exactly the same function as the original.

In a way, program obfuscation is the mirror image of homomorphic encryption. In homomorphic encryption you give an untrusted adversary \(A\) an encrypted data file; \(A\) applies some computational function to the data and returns the encrypted result, without ever learning anything about the content of the file. In the case of obfuscation you give \(A\) a program, which \(A\) can then apply to any data of his or her choosing, but \(A\) never learns anything about how the program works.

For many years fully homomorphic encryption was widely considered impossible, but Gentry showed otherwise in 2009. His scheme was not of practical use because the ability to compute with encrypted data came at an enormous cost in efficiency, but he and others have been refining the algorithms. During his talk at the Harvard School of Engineering and Applied Sciences last week Gentry mentioned that the time penalty has been reduced to a factor of \(10^{7}\). Thus a one-minute computation on plaintext takes about 19 years with encrypted data.
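As a rough check on that slowdown, here is a minimal sketch of the arithmetic. It assumes the penalty is a flat multiplicative factor of \(10^{7}\) and a 365-day year; the names `SLOWDOWN` and `encrypted_runtime_years` are mine, chosen for illustration.

```python
SLOWDOWN = 10**7                    # assumed time penalty factor
MINUTES_PER_YEAR = 60 * 24 * 365    # 525,600 minutes in a 365-day year


def encrypted_runtime_years(plaintext_minutes, slowdown=SLOWDOWN):
    """Years needed on encrypted data for a job that takes
    `plaintext_minutes` on plaintext, under a flat slowdown factor."""
    return plaintext_minutes * slowdown / MINUTES_PER_YEAR


print(round(encrypted_runtime_years(1), 1))  # 19.0
```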

Will the problem of secure obfuscation also turn out to be feasible? There is a bigger hurdle in this case: not just a widespread feeling that the task is beyond our reach but an actual impossibility proof. The proof appears in a 2012 paper by seven authors: Boaz Barak, Oded Goldreich, Russell Impagliazzo, Steven Rudich, Amit Sahai, Salil Vadhan, and Ke Yang (BGIRSVY). (Ref: On the (im)possibility of obfuscating programs. Journal of the ACM 59(2):Article 6. Most of the work described in the 2012 article was actually done a dozen years earlier and was reported at CRYPTO 2001.) They give the following informal definition of program obfuscation:

An obfuscator \(\mathcal{O}\) is an (efficient, probabilistic) “compiler” that takes as input a program (or circuit) \(P\) and produces a new program \(\mathcal{O}(P)\) satisfying the following two conditions.

— Functionality: \(\mathcal{O}(P)\) computes the same function as \(P\).

— “Virtual black box” property: Anything that can be efficiently computed from \(\mathcal{O}(P)\) can be efficiently computed given oracle access to \(P\).

In other words, having direct access to the obfuscated source text doesn’t tell you anything you couldn’t have learned just by submitting inputs to the function and examining the resulting outputs.

BGIRSVY then prove that the black-box obfuscator \(\mathcal{O}\) cannot exist. They do not prove that no program \(P\) can be obfuscated according to the terms of their definition. What they prove is that no obfuscator \(\mathcal{O}\) can obfuscate all possible programs in a certain class. The proof succeeds if it can point to a single unobfuscatable program—which BGIRSVY then proceed to construct. I won’t attempt to give the details, but the construction involves the kind of self-referential loopiness that has been a hallmark of theoretical computer science since before there were computers, harking back to Cantor and Russell and Turing. Think of programs whose output is their own source code (although it’s actually more complicated than that).

The BGIRSVY result looks like bad news for obfuscation, but Gentry has outlined a possible path forward. (Ref: Candidate indistinguishability obfuscation and functional encryption for all circuits. 2013 preprint.) His work is a collaboration with five co-authors: Sanjam Garg, Shai Halevi, Mariana Raykova, Amit Sahai, and Brent Waters (GGHRSW). They adopt a different definition of obfuscation, called indistinguishability obfuscation. The criterion for success is that an adversary who is given obfuscated versions of two distinct but equivalent programs (both compute the same function) can’t tell which is which. This is a much weaker notion of obfuscation, but it seems to be the best that’s attainable given the impossibility of the black-box definition. An obfuscator of this kind conceals as much information about the source text as any other feasible method would.
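To make “distinct but equivalent” concrete, here is a toy sketch in Python. The two majority functions and the `equivalent` helper are my own illustration, not anything taken from the GGHRSW paper. The point is only that two programs can differ as text while agreeing on every input; an indistinguishability obfuscator would have to make their obfuscated forms impossible to tell apart.

```python
from itertools import product


def majority_a(x, y, z):
    """Majority of three bits, written with Boolean logic."""
    return int((x and y) or (y and z) or (x and z))


def majority_b(x, y, z):
    """The same function, written with arithmetic."""
    return int(x + y + z >= 2)


def equivalent(f, g, arity):
    """True if f and g agree on every input of `arity` bits."""
    return all(f(*bits) == g(*bits) for bits in product((0, 1), repeat=arity))


print(equivalent(majority_a, majority_b, 3))  # True
```

Exhaustive checking like this works only for tiny input sizes, since the number of inputs doubles with every added bit.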

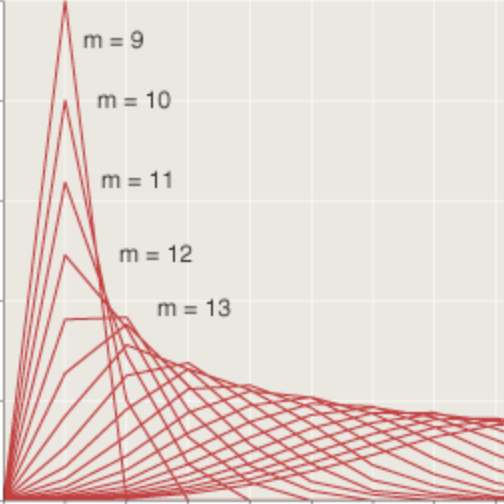

GGHRSW introduce a “candidate” construction for an indistinguishability obfuscator, which works for all programs in an important complexity class called \(NC^{1}\). The algorithm relies on a transformation introduced almost 30 years ago by David Barrington. (Ref: Barrington, David A. 1986. Bounded-width polynomial-size branching programs recognize exactly those languages in \(NC^{1}\). In Proceedings of the Eighteenth Annual ACM Symposium on Theory of Computing, STOC ’86, pp. 1–5.) The class \(NC^{1}\) is defined in terms of networks of Boolean gates, but Barrington showed that every such network is equivalent to a “branching program” where the successive bits of the input determine which of two branches is chosen. The entire computation can then be expressed as the product of \(5 \times 5\) permutation matrices; the essence of the obfuscation step is to replace those matrices with random matrices that have the same product and therefore encode the same computation. There are exponentially many choices for the random matrices, and so any given program has many possible obfuscations.
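The matrix idea can be tried out on a very small scale. The sketch below is my own toy version and makes several assumptions: it encodes only the AND of two bits as a four-step branching program, it masks each matrix by sandwiching it between a random invertible matrix and the inverse of its neighbor, and it does the arithmetic modulo a prime `P` that I picked for convenience. The actual GGHRSW construction involves further cryptographic machinery that is not shown here, so this illustrates only the "same product, different matrices" step and offers no security.

```python
import random

P = 2**31 - 1   # prime modulus for the masking arithmetic (an assumption of this sketch)
N = 5           # Barrington's construction uses 5 x 5 matrices


def identity():
    return [[int(i == j) for j in range(N)] for i in range(N)]


def perm_matrix(perm):
    """Permutation matrix with a 1 in row i, column perm[i]."""
    return [[int(perm[i] == j) for j in range(N)] for i in range(N)]


def transpose(m):
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(N)) % P for j in range(N)]
            for i in range(N)]


def inverse(m):
    """Gauss-Jordan inverse modulo P; returns None if m is singular."""
    aug = [list(row) + unit for row, unit in zip(m, identity())]
    for col in range(N):
        pivot = next((r for r in range(col, N) if aug[r][col]), None)
        if pivot is None:
            return None
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = pow(aug[col][col], -1, P)
        aug[col] = [x * scale % P for x in aug[col]]
        for r in range(N):
            if r != col and aug[r][col]:
                factor = aug[r][col]
                aug[r] = [(x - factor * y) % P for x, y in zip(aug[r], aug[col])]
    return [row[N:] for row in aug]


def random_invertible(rng):
    """Return (R, R inverse) for a random invertible matrix modulo P."""
    while True:
        m = [[rng.randrange(P) for _ in range(N)] for _ in range(N)]
        m_inv = inverse(m)
        if m_inv is not None:
            return m, m_inv


# Two 5-cycles that do not commute, so A * B * A^-1 * B^-1 is not the identity.
A = perm_matrix((1, 2, 3, 4, 0))
B = perm_matrix((2, 0, 4, 1, 3))

# Each step: (index of the input bit read, matrix if bit is 0, matrix if bit is 1).
# The inverse of a permutation matrix is its transpose.
AND_PROGRAM = [
    (0, identity(), A),
    (1, identity(), B),
    (0, identity(), transpose(A)),
    (1, identity(), transpose(B)),
]


def evaluate(program, bits):
    """Multiply the selected matrices; output 0 if the product is the identity."""
    result = identity()
    for index, if_zero, if_one in program:
        result = matmul(result, if_one if bits[index] else if_zero)
    return int(result != identity())


def randomize(program, rng):
    """Replace step i's matrices M with R[i-1]^-1 * M * R[i].
    The outermost masks are the identity, so every product is unchanged."""
    masks = [(identity(), identity())]
    masks += [random_invertible(rng) for _ in range(len(program) - 1)]
    masks.append((identity(), identity()))
    masked = []
    for i, (index, if_zero, if_one) in enumerate(program):
        left_inv, right = masks[i][1], masks[i + 1][0]
        masked.append((index,
                       matmul(matmul(left_inv, if_zero), right),
                       matmul(matmul(left_inv, if_one), right)))
    return masked


if __name__ == "__main__":
    scrambled = randomize(AND_PROGRAM, random.Random())
    for bits in [(0, 0), (0, 1), (1, 0), (1, 1)]:
        print(bits, evaluate(AND_PROGRAM, bits), evaluate(scrambled, bits))
```

Each call to `randomize` draws fresh masks, so the same program yields a different set of matrices every time, while the adjacent masks cancel in the product and the output for every input stays the same.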

Gentry calls the scheme a “candidate” because the security guarantee depends on some rather elaborate assumptions that have not yet been thoroughly checked. The team is working to put the method on a firmer foundation.

GGHRSW write that “it is not immediately clear how useful indistinguishability obfuscators would be,” but they offer some possibilities. Software vendors might use the technique for “secure patching”: fixing a flaw without revealing what’s being fixed. Or obfuscation could help in producing “crippleware”—try-out versions of a program that have certain functions disabled. In these applications indistinguishability obfuscation would make it impossible to identify the differences between two versions of a program. Watermarking, which tags each copy of a program with a unique identifier, is another likely use.

In his Harvard talk Gentry also looked a little further into the future to suggest that obfuscation might be valuable when it comes time to upload your brain to the Internet. The idea, presumably, is that you could allow others to borrow (or rent?) your cognitive abilities without disclosing all your private knowledge and personal memories. Since I already have only erratic access to my own memory, I’m not sure any further obfuscation is needed.

Two further comments.

“Use less big word.” Graffito, Russell Field, Cambridge, MA. Photographed 2013-09-20.

First, I find both homomorphic encryption and indistinguishability obfuscation to be fascinating topics, but linguistically they are somewhat awkward. The word sesquipedalian comes to mind. In the case of indistinguishability obfuscation we are just two syllables short of supercalifragilisticexpialidocious. I’m hoping that when Gentry makes his next breakthrough, he’ll choose a term that’s a little pithier. (The GGHRSW paper sometimes refers to garbled rather than obfuscated programs, which seems like a step in the right direction.)

Second, returning to the everyday world of JavaScript programs in web pages, I have to note that Richard Stallman will probably not applaud advances in obfuscation, particularly when they are applied to crippleware or watermarking. I can’t match Stallman’s ideological zeal for open software, but I do prefer to make my own code available without artificial impediments. For example, the bit of JavaScript that creates a magnifying loupe for the photograph above is available without obfuscation. (I have not included a copyleft license, but there’s a comment reading “Please feel free to use or improve.”)

Responses from readers:

Please note: The bit-player website is no longer equipped to accept and publish comments from readers, but the author is still eager to hear from you. Send comments, criticism, compliments, or corrections to brian@bit-player.org.

Publication history

First publication: 27 September 2013

Converted to Eleventy framework: 22 April 2025

I think that RMS wants you not only to be able to access the unobfuscated source code, but also to be able to change it and redistribute it, which is the difference between open source and free software.

In the case of JavaScript, it seems like the technical problems are more difficult than the legal and ideological ones.

If you want to grab a copy of some JavaScript I’ve written, modify it in some way, then use it on your own web site, there’s nothing to stop you. But most JavaScript is tightly integrated with a specific HTML and CSS environment.

What Stallman seeks, if I understand correctly, is to modify a copy of the JavaScript from a web site, then arrange to run the modified version whenever he visits that site. I don’t see any fundamental reason that can’t be done, but it will take a little browser engineering.

Putting something in an open box doesn’t mean everyone’s free to use it.

“View source” is a great feature to have from a technological perspective, but is actually very dangerous in our current legal climate. Let’s say that Google Docs isn’t obfuscated and I look at its code using “view source”. I am now effectively forbidden from making an online document editor, since Google could claim it’s a derivative of Google Docs which would violate their copyright.

Hence proprietary software should be avoided, whether it’s “open” or not.

There’s nothing evil about proprietary software, only about the claim that by looking at it, anything you later do could be considered derived work.

We don’t need to avoid proprietary software, we need to change the legal climate.

Both terms are more overloaded than a Godaddy-hosted site under slashdotting, but if you ask the Open Source Initiative, which was created by Bruce Perens and Eric S. Raymond, it’ll tell you that Open Source software must be derivable and redistributable too. See the Open Source Definition.