In simple games, one can calculate the exact probability of every outcome, and so the expected winnings can also be determined exactly….

When I wrote the words above in a recent American Scientist column, I didn’t think I was saying anything controversial. So I was taken by surprise when a reader objected to the very notion of exact probability. My correspondent argued that probabilities can never be determined exactly, only measured to within some error bound. He also ruled out the use of limits in defining probabilities, allowing only finite processes. After a brief correspondence with my critic, I set the matter aside; but it keeps coming back to haunt me in idle moments, and I think I should try to clarify my thoughts.

The “simple games” I had in mind were those based on coin-flipping or dice-rolling or card-dealing. For example, I would say that the exact probability of getting heads when you flip a fair coin is 1/2. (Indeed, that’s how I would define a fair coin.) Likewise the exact probability of rolling a 12 with a pair of unbiased dice is 1/36, and the exact probability of dealing four aces from a properly shuffled deck is 1/270,725. The arithmetic behind these numbers is straightforward. You’ll find a multitude of similar examples in the exercises of any introductory textbook on probability. The trouble is, if you ask me to show you a fair coin or an unbiased die or a properly shuffled deck, I can’t do it. I certainly can’t prove that any specific coin or die or deck has the properties claimed. So my critic has a point: Those exact probabilities I was talking about come from a suppositional world of ideal randomizing devices that don’t exist—or can’t be shown to exist—in the physical universe.
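The arithmetic behind those three numbers can be sketched in a few lines of Python. This is only an illustration of the idealized calculation: it assumes a perfectly fair coin, unbiased dice and a uniformly shuffled deck, and it reads "dealing four aces" as a four-card hand that consists of all four aces. The variable names are mine.

```python
from fractions import Fraction
from math import comb

# Idealized assumptions: fair coin, unbiased dice, uniformly shuffled deck.
p_heads = Fraction(1, 2)

# Only one of the 6 * 6 equally likely ordered rolls, (6, 6), sums to 12.
p_twelve = Fraction(1, 6) ** 2

# Assumed reading: a four-card hand. Exactly one of the C(52, 4)
# equally likely hands consists of the four aces.
p_four_aces = Fraction(1, comb(52, 4))

print(p_heads, p_twelve, p_four_aces)  # 1/2 1/36 1/270725
```

Using `Fraction` rather than floating point keeps the results exact, which is the whole point of the claim being illustrated.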

Of course mathematics is full of objects that we can’t construct out of Lego bricks and duct tape—dimensionless points, the real number line, Hilbert space. Just as I can’t exhibit a fair coin, I can’t show you an equilateral triangle—or rather I can’t draw you a triangle and then prove that the three sides of that particular triangle are equal. The existence of perfect circles and right angles and other such Platonic apparatus is something we just have to accept if we want to do a certain kind of mathematics. I’m happy to view the fair coin in this light—as a hypothetical device that comes out of the same cabinet where I keep my Turing machines and my Cantor sets. The question is whether we can (or should) take the more radical step of banishing such idealized paraphernalia altogether.

Suppose we insist that the only way of determining a probability is to measure it by experiment. Take a coin out of your pocket and flip it 100 times; if it comes up heads 55 times, then p(H) = 0.55. But when you repeat the experiment, you might get 51 heads, suggesting p(H) = 0.51. Or maybe you would now conclude that p(H) = 0.53. Or you might adopt some other method of inferring a probability from the experimental evidence, something more sophisticated than just taking the mean of a sample. There’s an elaborate and highly developed technology for just this purpose. It’s called statistics, and it works wonders. Still, to the extent that the assigned probability depends on the outcome of a finite number of trials (and remember: taking limits is out of bounds), we’re never going to settle on a single, definite and immutable value for the probability.
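A small simulation shows how the measured value wanders from one experiment to the next. It is a sketch, not a real experiment: the "coin" here is Python's pseudo-random generator with an assumed heads probability of exactly 0.5, which is of course one of the idealized devices under discussion. The function name and the seed are arbitrary choices of mine.

```python
import random

def estimate_p_heads(flips, rng):
    """Return the fraction of heads in `flips` simulated tosses."""
    heads = sum(rng.random() < 0.5 for _ in range(flips))
    return heads / flips

rng = random.Random(2011)  # arbitrary seed, used only for repeatability
estimates = [estimate_p_heads(100, rng) for _ in range(5)]
print(estimates)  # five estimates of p(H), generally not all equal
```

Each run of 100 tosses yields a multiple of 0.01, and nothing in the procedure singles out one of those values as the probability.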

Alan Hájek, in an article in the Stanford Encyclopedia of Philosophy, calls this approach to probability “finite frequentism.”

Where the classical interpretation [i.e., the probability theory of Laplace, Pascal, et al.] counted all the possible outcomes of a given experiment, finite frequentism counts actual outcomes. It is thus congenial to those with empiricist scruples. It was developed by Venn (1876), who in his discussion of the proportion of births of males and females, concludes: “probability is nothing but that proportion.”

Hájek calls attention to several worrisome aspects of this doctrine. Most of the problems take us right back to where this discussion began, namely to the fact that we can never learn the exact probability of anything. And this ignorance leads to awkward consequences. In the standard calculus of probabilities, we know that if an event occurs with probability p, then two independent occurrences of the same event have probability p². How do we apply this rule in an environment where p changes every time we measure it? I suppose a stalwart empiricist might reply that if you want to know p(HH), then that’s what you should be measuring. The empiricist might also point out that the loss of the calculus is not a defect of the theory; it’s just the human condition. We really are ignorant of exact probabilities, and we’ll only get into trouble if we pretend otherwise.
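To make the empiricist's position concrete, here is a sketch that measures both quantities from the same simulated data: the square of the estimated p(H), and a direct estimate of p(HH) from pairs of tosses. The coin is again a pseudo-random stand-in with an assumed heads probability of 0.5, and `measure` is a name I made up for the illustration.

```python
import random

def measure(trials, rng):
    """Toss a simulated coin twice per trial.

    Returns (estimate of p(H), direct estimate of p(HH)).
    """
    heads = 0
    double_heads = 0
    for _ in range(trials):
        first = rng.random() < 0.5
        second = rng.random() < 0.5
        heads += first + second          # count heads over all 2 * trials tosses
        double_heads += first and second  # count trials where both came up heads
    return heads / (2 * trials), double_heads / trials

rng = random.Random(42)  # arbitrary seed
for run in range(3):
    p_h, p_hh = measure(100, rng)
    print(f"run {run}: p(H)^2 = {p_h ** 2:.4f}, measured p(HH) = {p_hh:.4f}")
```

In a finite sample the two columns need not agree, so the product rule holds only approximately for measured frequencies.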

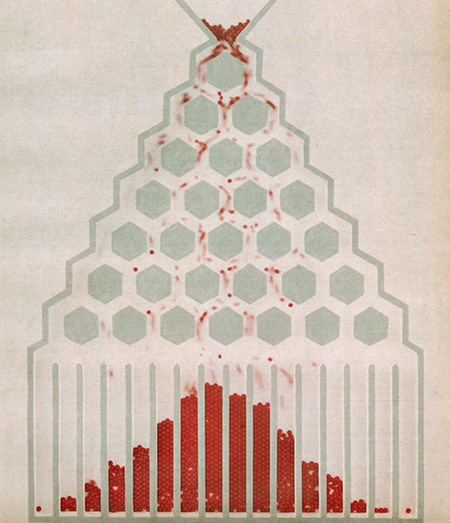

We could adopt a new calculus based on probabilities of probabilities, in which p(H) is not a number but a distribution, perhaps a normal distribution determined by the mean and variance of the experimental data. Then p² becomes the product of two such distributions. But beware: The shape of that normal curve we’ve just smuggled into our reasoning is defined by a process that involves the moral equivalent of flipping a fair coin, not to mention taking limits. Maybe we should instead use a discrete, experimental approximation to the normal distribution, created with a physical device such as a Galton board.

• • •

Reading on in Hájek’s long encyclopedia article, I find that the finite frequentists are not the only school of thought subject to withering criticism; Hájek finds deep flaws in every interpretation of probability theory, without exception. (That’s a philosopher’s job, I guess.)

Joseph Doob, writing 15 years ago in the American Mathematical Monthly, took the position that we have a perfectly sound mathematical theory of probability (formulated mainly by Kolmogorov in the 1930s, and founded on measure theory); the only problem is that it doesn’t connect very well with the world of everyday experience. I would like to quote at length what Doob has to say about the law of large numbers:

In a repetitive scheme of independent trials, such as coin tossing, what strikes one at once is what has been christened the law of large numbers. In the simple context of coin tossing it states that in some sense the number of heads in n tosses divided by n has limit 1/2 as the number of tosses increases. The key words here are in some sense. If the law of large numbers is a mathematical theorem, that is, if there is a mathematical model for coin tossing, in which the law of large numbers is formulated as a mathematical theorem, either the theorem is true in one of the various mathematical limit concepts or it is not. On the other hand, if the law of large numbers is to be stated in a real world nonmathematical context, it is not at all clear that the limit concept can be formulated in a reasonable way. The most obvious difficulty is that in the real world only finitely many experiments can be performed in finite time. Anyone who tries to explain to students what happens when a coin is tossed mumbles words like in the long run, tends, seems to cluster near, and so on, in a desperate attempt to give form to a cloudy concept. Yet the fact is that anyone tossing a coin observes that for a modest number of coin tosses the number of heads in n tosses divided by n seems to be getting closer to 1/2 as n increases. The simplest solution, adopted by a prominent Bayesian statistician, is the vacuous one: never discuss what happens when a coin is tossed. A more common equally satisfactory solution is to leave fuzzy the question of whether the context under discussion is or is not mathematics. Perhaps the fact that the assertion is called a law is an example of this fuzziness.

Note that the coins Doob is tossing seem to be drawn from the Platonic closet of ideal hardware.
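The observation Doob describes, that heads divided by n "seems to be getting closer to 1/2," is easy to reproduce with a simulated coin. The sketch below assumes an idealized pseudo-random coin with heads probability 0.5, so it says nothing about any physical coin. The seed and the checkpoints are arbitrary.

```python
import random
from itertools import accumulate

rng = random.Random(7)  # arbitrary seed
tosses = [int(rng.random() < 0.5) for _ in range(10_000)]  # 1 = heads, 0 = tails

# running_heads[k] is the number of heads among the first k + 1 tosses.
running_heads = list(accumulate(tosses))

for n in (10, 100, 1_000, 10_000):
    print(n, running_heads[n - 1] / n)
```

Any such run covers only finitely many tosses, which is exactly the difficulty Doob raises about stating the law outside of mathematics.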

None of my reading and pondering has made me a convert to finite frequentism, but at the same time I am grateful for this challenge to my easygoing confidence that I know what probabilities are and how to calculate with them. I surely know nothing of the sort. And on balance I think it might be a good idea if introductory accounts of probability theory put a little more emphasis on calculating from real-world data, with less reliance on fair coins and unbiased dice. In this connection I can recommend the probability section of Joseph Mazur’s diverting book Euclid in the Rainforest.

Four miscellaneous related notes, in lieu of a conclusion:

(1) One might guess that any discrepancies between real coins and ideal ones would amount to only minor biases (unless you’re wagering with Persi Diaconis). Perhaps so, but consider what happened a century ago when several eminent statisticians tried large-scale experiments in generating random numbers with dice, playing cards and numbered slips of paper drawn from a bowl or a bag. Not one of those efforts produced results that passed statistical tests of randomness (including the predictions of the law of large numbers). As late as 1955, even the big Rand Corporation table of a million random digits (generated by a custom-made electronic device) had to be fudged a little after the fact.

(2) Hájek’s indictment of finite frequentism includes this charge: The scheme “rules out irrational probabilities; yet our best physical theories say otherwise.” Elsewhere in the essay he elaborates on this point, mentioning that quantum mechanics posits “irrational probabilities such as 1/√2.” At first I thought this a very acute criticism, but now I’m not so sure. In the quantum contexts most familiar to me, 1/√2 is a commonly encountered amplitude; the corresponding probability is |1/√2|² = 1/2. Is it true that quantum mechanics necessarily requires irrational probabilities?

(3) Mark Kac, writing on probability in Scientific American (September 1964, p. 96) asks why probability theory was such a late-blooming flower among the branches of mathematics. It was neglected through most of the 18th and 19th centuries. Kac offers this explanation:

Why this apathy toward the subject among professional mathematicians? There were various reasons. The main one was the feeling that the entire theory seemed to be built on loose and nonrigorous foundations. Laplace’s definition of probability, for instance, is based on the assumption that all the possible outcomes in question are equally likely; since this notion itself is a statement of probability, the definition appears to be a circular one.

I’m skeptical of this hypothesis. It’s surely true that probability had shaky foundations, but so did other areas of mathematics. In particular, analysis was a ramshackle mess from the time of Newton and Leibniz until 1951, when Tom Lehrer finally gave the world the epsilon-delta notation. Yet analysis was the height of fashion all through that period.

(4) If you adhere strictly to the finite-frequentist doctrine that a probability does not exist until you measure it, can you ever be surprised by anything?

When I give a class on probability in a Math Circle, I always start by convincing the students that they don’t actually know what probability is - which is fine since no one does, really.

Another problem with the frequentist approach is that it’s hard to make any sense out of statements like “30% chance of rain tomorrow”. We all feel we know what is meant here, but there’s no way to reasonably interpret this as a frequency estimate, be it finite or infinite. (Are we to sample 100 “tomorrows” and see what happens?)

To me, this is the most obvious reason why some sort of Bayesian approach (which Hájek seems to classify as “subjective”) is required if one is to connect the mathematical notion of probability with real life. It’s an undeniable fact that we feel we know what such probability estimates mean, so if we have any interest in codifying those estimates and inferences in mathematical language, we should follow a Bayesian approach. One may have no interest in such codification, of course, and one may still find it useful to apply probability theory in frequentist settings. This is an age-old debate, often quite heated, but I don’t find the debate itself particularly illuminating.

A very nice, brief overview of the “state of knowledge” interpretation of probability can be found in Chapter 1 of Saha’s free e-text on data analysis: http://www.physik.uzh.ch/~psaha/pda/ . It is remarkable that a very small number of assumptions about the notion of “degree of confidence” leads one inevitably to the standard axioms of probability.

I personally like the approach to probability given by Jaynes.

http://www.amazon.com/Probability-Theory-Science-T-Jaynes/dp/0521592712/ref=sr_1_1?ie=UTF8&qid=1313582582&sr=8-1

The book has lots of real-world examples, which I always need in order to understand something.

“I suppose a stalwart empiricist might reply that if you want to know p(HH), then that’s what you should be measuring.”

Of course this is generally impossible, because the complexity of verifying a distribution over n coin flips scales exponentially with n. An interesting fact is that physics, in particular special relativity, can give a way out of this problem, allowing you to prove that certain events are (arbitrarily close to) independent (assuming no causality violations). This doesn’t work with classical mechanics, but can be shown to work with quantum mechanics. I have discovered a truly marvelous proof of this, which this comment box is too narrow to contain.

It is illuminating to replace “probability” with “volume” everywhere in Hájek’s article. One immediately notices the very many places where an objection (or explanation) specifically about an interpretation of probability could be taken in a more general form as an objection to (or an explanation of) an interpretation of measure theory. Obviously measure theory doesn’t describe anything “real” nor anything that can be measured, so I am predisposed to set aside these objections/explanations as clever sophistry.

That leaves the question — is there something peculiar about probability that is *not* also peculiar about length/volume/mass/etc.?

Ian Hacking’s Intro to Probability is pretty good on this subject. (Of course, since he’s a philosopher, this is exactly the area where you would expect his book to be good…) He divides things into belief-type probability and frequency-type probability and then further divides it into other sub-categories. It’s been a while since I’ve taught it, but if I were to do it again, I might go ahead and start with that chapter (it’s in the middle of the book) instead of starting with the math side of things.

I like F. Carr’s comment. To expound a little:

It’s not unique to probability to prove things about mathematical (or imaginary, or ‘Platonic’) objects and then posit some kind of correspondence between those objects and real-world ones. That’s part of how most science works, I think.