Measure Twice, Average Once

by Brian Hayes

Published 7 December 2007

Whenever Norm Abram tells me to “measure twice, cut once,” I wonder what I’m supposed to do if the two measurements disagree. Perhaps I should measure a third time, in hope of settling the question by majority rule; but then I might well wind up with three discrepant values.

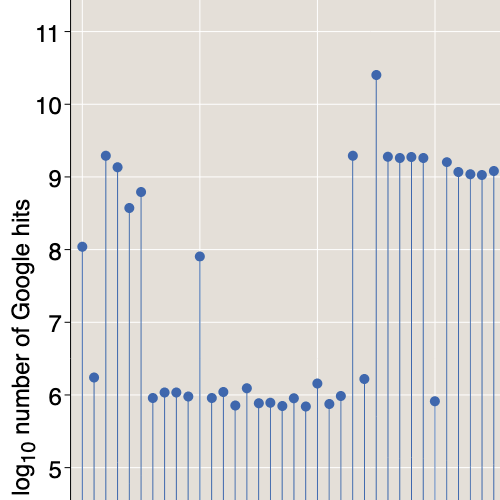

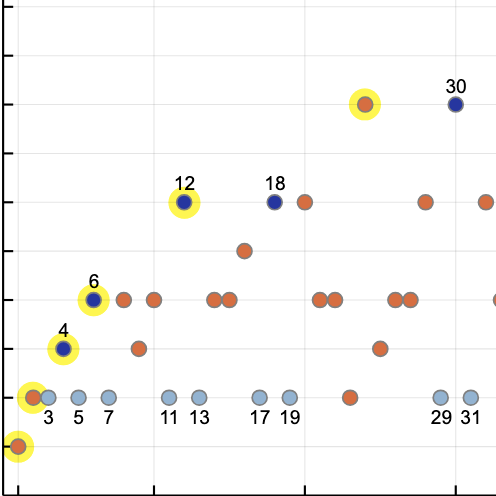

Strolling by a construction site the other day, I came upon the plywood panel shown above. There was no one around to help me interpret these curious scrawled measurements, but I could easily enough imagine the scene. A carpenter—Skilsaw at the ready—is surrounded by a group of statisticians and decision theorists eager to advise him on where to make the cut.

“Obviously,” says the first consultant, “we take the average—the arithmetic mean. Gauss proved 200 years ago that the sample mean is always the best estimator for a measurement subject to normally distributed random errors.”

“Actually, he proved just the opposite,” says another hardhatted and hardheaded savant. “He started by assuming that the mean is the most probable value, and then he invented the normal distribution as a way of ensuring that this rule will hold.”

“Whatever. But we’ve come a long ways since 1805. We know that the mean is an admissible estimator. Even without assuming a normal distribution, the sample mean is the estimator that minimizes the sum of the squared errors.”
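
The least-squares claim can be checked in a line of calculus. As a sketch, write the measurements as $x_1, \dots, x_n$ and the proposed cut as $c$ (both symbols are introduced here for illustration; they do not appear on the plywood):

$$
S(c) = \sum_{i=1}^{n} (x_i - c)^2, \qquad
\frac{dS}{dc} = -2 \sum_{i=1}^{n} (x_i - c) = 0
\;\Longrightarrow\;
c = \frac{1}{n} \sum_{i=1}^{n} x_i = \bar{x}.
$$

Since $d^2S/dc^2 = 2n > 0$, the stationary point is a minimum, and nothing in the argument depends on how the errors are distributed.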

“But who says the sum of the squared errors is the function we want to optimize? It’s just one of many possibilities. And it gives undue influence to the extremes of the distribution. In this case, the presence of that peculiar-looking eight-and-an-eighth value pulls the mean down to 55.875. Is that really where we should saw the board?”

“That 8.125 is obviously an outlier. Somebody was reading the wrong end of the tape measure. Excluding that bogus value, the mean is 63.833.”

“If you’re going to be picking and choosing which data points to trust, what about the one at the upper right? I’m not even sure I can read it: 64 and seven-eighths? And somebody seems to have crossed it out. Maybe we should drop that one, too.”

“And 64 is the only other item that isn’t circled. That must mean something.”

Another direction is suggested: “Instead of Gauss’s sum of the squared errors, we could adopt the criterion of Laplace, the sum of the absolute errors. With this choice, the favored estimator is the median rather than the mean. The median of our data is 63.625. And the median is much less sensitive to outliers and strangely shaped distributions. Whether we include or exclude the eight-and-an-eighth measurement makes only a minor difference.”
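
To see how the two criteria behave on numbers like these, here is a small Python sketch. The text gives only three of the seven readings (8.125, 64, and 64.875), so the four circled values below are hypothetical placeholders, chosen solely because they reproduce the figures quoted in the dialogue. The helper function names and the 1/16-inch search grid are likewise assumptions made for illustration.

```python
from statistics import mean, median

# Readings that are named in the text.
outlier = 8.125
uncircled = [64.0, 64.875]

# HYPOTHETICAL: the four circled readings are not listed in the text.
# These placeholders were picked only so that the quoted summary figures
# (55.875, 63.833, 63.625) come out right.
circled = [63.25, 63.5, 63.625, 63.75]

all_values = [outlier] + circled + uncircled
trimmed = circled + uncircled  # same data with the 8.125 reading dropped


def sum_squared_errors(data, c):
    """Gauss's criterion: total squared distance from the cut point c."""
    return sum((x - c) ** 2 for x in data)


def sum_absolute_errors(data, c):
    """Laplace's criterion: total absolute distance from the cut point c."""
    return sum(abs(x - c) for x in data)


print("all seven:  mean", mean(all_values), " median", median(all_values))
print("trimmed:    mean", round(mean(trimmed), 3), " median", median(trimmed))

# Brute-force check: try every cut point from 0 to 70 inches in 1/16 steps
# and keep the one that minimizes each criterion.
candidates = [i / 16 for i in range(70 * 16 + 1)]
best_sq = min(candidates, key=lambda c: sum_squared_errors(all_values, c))
best_abs = min(candidates, key=lambda c: sum_absolute_errors(all_values, c))
print("least squares picks", best_sq, "; least absolute error picks", best_abs)
```

On these placeholder values, dropping the 8.125 reading moves the mean by about eight inches but moves the median by only a sixteenth of an inch.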

“What makes you all so sure we’re seeing several attempts to measure the same quantity? I think we actually have three distinct sets of measurements here, which just happen to be scribbled on the same piece of wood. The eight-and-an-eighth is clearly on its own. The two uncircled measurements form another set. And then we have four circled values all clustering around 63-and-something. If we want to simultaneously optimize the least-squares error for all three sets, we should be using a James-Stein estimator, which shrinks the average of each set toward the overall average.”
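
For readers curious about what this last proposal amounts to, here is a minimal Python sketch of one textbook form of the estimator: shrink k group means toward their grand mean, with the shrinkage factor clipped at zero (the "positive-part" variant). It assumes every group mean has the same known variance, which the plywood certainly does not guarantee. The function name, the `sigma2` value, and the five-group demonstration data are hypothetical. The three plywood group means are derived from figures in the dialogue: 8.125, the average of 64 and 64.875, and the circled average implied by the two quoted means.

```python
def james_stein_toward_mean(group_means, sigma2):
    """Shrink each group mean toward the grand mean (positive-part form).

    Assumes every group mean has the same known variance `sigma2`.
    """
    k = len(group_means)
    if k < 4:
        # With fewer than four groups the (k - 3) factor is zero or
        # negative, so this form of the estimator does no shrinking.
        return list(group_means)
    grand = sum(group_means) / k
    spread = sum((m - grand) ** 2 for m in group_means)
    if spread == 0:
        return list(group_means)  # all means already coincide
    factor = max(0.0, 1.0 - (k - 3) * sigma2 / spread)
    return [grand + factor * (m - grand) for m in group_means]


# HYPOTHETICAL demonstration: five group means, variance assumed to be 1.
demo = james_stein_toward_mean([1.0, 2.0, 3.0, 4.0, 5.0], sigma2=1.0)
print(demo)  # each value moves 20 percent of the way toward 3.0

# The three plywood sets. The circled mean is (6 * 63.8333... - 64 - 64.875) / 4,
# which follows from the means quoted in the dialogue. sigma2 is an assumption.
plywood = [8.125, (64.0 + 64.875) / 2, 63.53125]
print(james_stein_toward_mean(plywood, sigma2=1.0))
```

In this form the shrinkage factor carries a (k - 3) term, so with only three groups it leaves the means exactly where they were. The consultant would need more sets of measurements, or a different variant of the estimator, before any shrinking happened.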

At this point a Bayesian is heard from. Others mention maximum likelihood, Pitman’s measure of closeness, minimum variance, the method of moments….

The conversation does not end here, but the rest is lost in the whine of the power saw. The carpenter has cut off the plank somewhere out beyond 64 inches and explains this choice as follows: Cutting long may mean cutting twice, but cutting short means buying twice.

* * *

One lesson you might draw from this little farce and fable is that if you have a hard decision to make, you should call a carpenter rather than a statistician. But that’s not the conclusion I intended.

You sometimes get the impression that statistics is a dry and lifeless discipline, where all the interesting questions were answered long ago, and all that remains now is to memorize some formulas and learn when to apply them. I think not!

Problems in statistics don’t get much simpler than this one. It concerns a small set of observations, with one variable in one dimension and one parameter to be estimated. It’s a problem that would have been perfectly familiar to Gauss and Laplace, Legendre and Adrain. And yet there’s still room for doubt and controversy about how best to approach such questions.

I found the plywood puzzle challenging enough that I was led to do some reading. Most of it is well above my grade level, and so I can’t claim to have absorbed everything the authors have to say. But I’ll offer a few pointers in case anyone else wants to follow along:

- Colin R. Blyth (1951) directly confronts the Norm Abram question: How do you decide when to stop measuring and start cutting? I gather that this paper was a major landmark in estimation theory. R. H. Farrell (1964) follows up on related themes. (A number of other papers could be mentioned in the same context; I draw attention to these two because they are freely available online through Cornell’s Project Euclid.)

- There’s an “Introduction to Estimation Theory” by Don Johnson of Rice at the Connexions web site. The context is signal processing, but there’s plenty in it of use to carpenters.

- For the history of statistics, Stephen Stigler is always the place to start. His article on “Gauss and the Invention of Least Squares” is chapter 17 in Statistics on the Table (Harvard University Press, 1999). The original 1981 version from Annals of Statistics is available online through Project Euclid.

- For a gentle introduction to the James-Stein estimator, I recommend a Scientific American article by Bradley Efron and Carl Morris, “Stein’s Paradox in Statistics” (Vol. 236 No. 5, May 1977, pp. 119–127). (Disclaimer: I was the editor of that article.)

- Finally, at the moment I’m halfway through Pitman’s Measure of Closeness: A Comparison of Statistical Estimators, by Jerome P. Keating, Robert L. Mason and Pranab K. Sen (SIAM, 1993). I really don’t yet know what to make of this, but it has opened up a world I knew nothing about.

Publication history

First publication: 7 December 2007

Converted to Eleventy framework: 22 April 2025