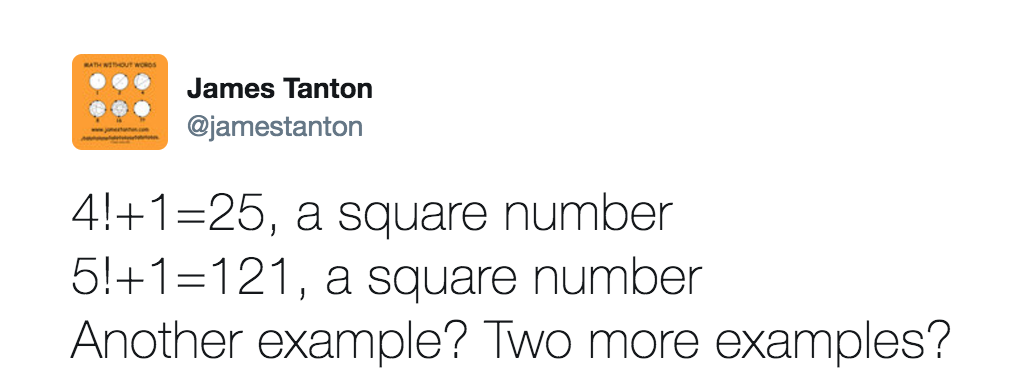

James Tanton tosses off number theory problems the way John D. Rockefeller handed out dimes. I wrote about one of Tanton’s problems back in January. Then a few weeks ago this tweet about factorials and squares snagged my attention, and it hasn’t let go:

With pencil and paper it’s easy to show that \(6!\) doesn’t work. The factorial of \(6\) is \(1 \times 2 \times 3 \times 4 \times 5 \times 6 = 720\); adding \(1\) brings us to \(721\), which is not a square. (It factors as \(7 \times 103\).) On the other hand, \(7!\) is \(5040\), and adding \(1\) yields \(5041\), which is equal to \(71^2\). This makes for a very cute equation:

\[7! + 1 = 71^2.\]

Continuing on, you can establish that \(8! + 1\), \(9! +1\) and \(10! + 1\) are not square numbers. But to extend the search much further, we need mechanized assistance. Here’s a Julia function that does the obvious thing, generating successive factorials and checking each one to see if it is \(1\) less than a perfect square:

function search_fac_sqr(maxn)

fac = big(1) # bigints needed for n > 20

for n in 1:maxn

fac *= n # incremental factorial

r = isqrt(fac + 1) # floor of sqrt

if r * r == fac + 1

println(n, "! + 1 = ", r, "^2 = ", r^2)

end

end

println("That's all folks!")

endWith this tool in hand, let’s check out \(n! + 1\) for all \(n\) between \(1\) and \(100\). Here’s what the program reports:

search_fac_sqr(100)

4! + 1 = 5^2 = 25

5! + 1 = 11^2 = 121

7! + 1 = 71^2 = 5041

That's all folks!Those are the three cases we’ve already discovered with pencil and paper—and no more are listed. In other words, among all values of \(n! + 1\) up to \(n = 100\), only \(n = 4\), \(n = 5\), and \(n = 7\) yield squares. When I continued the search up to \(n = 1{,}000\), I got exactly the same result: no more squares. Likewise \(n = 10{,}000\) and \(n = 100{,}000\). Allow me to mention that the factorial of \(100{,}000\) is a rather large number, with \(456{,}574\) decimal digits. At this point in the search, I began to grow weary; furthermore, I began to lose hope. When \(99{,}993\) successive values of \(n\) fail to produce a single square, it’s hard to sustain faith that success might be just around the corner. Nevertheless, I persisted. I got as far as \(n = 500{,}000\), which has \(2{,}632{,}341\) decimal digits. Not one more perfect square in the whole lot.

What can we learn from this evidence—or lack of evidence? Are 4, 5, and 7 the only values of \(n!\) that lie \(1\) short of a perfect square? Or are there more such cases somewhere out there along the number line, maybe just beyond my reach, waiting to be found? Could there be infinitely many? If so, where are they? If not, why not?

To my taste, the most satisfying way to resolve these questions would be to find some number-theoretical principle ensuring that \(n! + 1 \ne m^2\) for \(n \gt 7\). I have not discovered any such principle, but in a dreamy sort of way I can imagine what a proof might look like. Suppose we eliminate the “\(+1\)” part of the formula, and search for integers such that \(n! = m^2\). It turns out there is just one solution to this equation, with \(n = m = 1\). You needn’t bother lathering up your laptop in the quest for larger examples; there’s a simple proof they don’t exist. In any square number, all the prime factors must be present an even number of times, as in \(36 = 2 \times 2 \times 3\times 3\). In a factorial, at least one prime factor—the largest one—always appears just once. (If you’re not sure why, check out Bertrand’s postulate/Chebyshev’s theorem.)

Of course when we put the “\(+1\)” back into the formula, this whole line of reasoning falls to pieces. In general, the factorization of \(n!\) and of \(n! + 1\) are totally different. But maybe there’s some other property of \(n! + 1\) that conflicts with squareness. It might have something to do with congruence classes, or quadratic residues. From the definition of a factorial, we know that \(n!\) is divisible by all positive integers less than or equal to \(n\), which means that \(n! + 1\) cannot be divisible by any of those numbers (except \(1\)). This observation rules out certain kinds of squares, namely those that have small primes in their factorization. But for all \(n \gt 4\) the square root of \(n!\) greatly exceeds \(n\), so there’s plenty of room for larger factors, as in the case of \(7! + 1 = 71^2\).

Here’s another avenue that might be worth exploring. The decimal representation of any large factorial ends with a string of \(0\)s, formed as the products of \(5\)s and \(2\)s among the factors of the number. Thus \(n! + 1\) must look like

\[XXXXX \ldots XXXXX00000 \ldots 00001,\]

where \(X\) represents any decimal digit, and the trailing sequence of \(0\)s now ends with a single terminal \(1\). Can we figure out a way to prove that a number of this form is never a square? Well, if the final digit were anything other than \(1, 4,\) or \(9\), the proof would be easy, but lots of squares end in \(\ldots 01\), such as \(10{,}201 = 101^2\) and \(62{,}001 = 249^2\). If there’s some algebraic argument along these lines showing that \(n! + 1\) can’t be a square, it will have to be something subtler.

All of the above is make-believe mathematics. I have stirred up some ingredients that look like they might make a tasty confection, but I have no idea how to bake the cake. Perhaps someone else will supply the recipe. In the meantime, I want to entertain an alternative hypothesis: that nothing prevents \(n! + 1\) from being a square except improbability.

The pattern observed in the \(n! + 1 = m^2\) problem—a few matches among the smallest elements of the sequences, and then nothing more for many thousands of terms—is not unique to factorials and squares. Other pairs of sequences exhibit similar behavior. For example, I have tried matching factorials with triangular numbers. The triangulars, beginning \(1, 3, 6, 10, 15, 21, \ldots\), are defined by the formula \(T(m) = m(m + 1)/2\). If we look for factorials that are also triangular, we get \(1! = T(1) = 1\), then \(3! = T(3) = 6\), and finally \(5! = T(15) = 120\). No more examples appear through \(n = 100{,}000\).

What about factorials that are \(1\) less than a triangular, satisfying the equation \(n! + 1 = T(m)\)? I know of only one case: \(2! + 1 = 3\). Broadening the search a little, I found that \(n! + 4\) is triangular for \(n \in {2, 3, 4}\), again with no more hits up to \(100{,}000\).

For another experiment we can bring back the square numbers and swap out the factorials, replacing them with the ever-popular Fibonacci sequence, \(1, 1, 2, 3, 5, 8, 13, \ldots\), defined by the recurrence \(F(n) = F(n - 1) + F(n - 2)\), with \(F(1) = F(2) = 1\). It’s been known since the 1960s that \(1\) and \(144\) are the only positive integers that are both Fibonacci numbers and perfect squares. Looking for Fibonacci numbers that are \(1\) less than a square, I found that \(F(4) + 1 = 4\) and \(F(6) + 1 = 9\), with no other instances up to \(F(500{,}000)\).

We can do the same sort of thing with the Catalan numbers, \(1, 1, 2, 5, 14, 42, 132 \ldots\), another sequence with a huge fan club. I find no squares other than \(1\) among the Catalan numbers up to \(n = 100{,}000\); I don’t know if anyone has proved that none exist. A search for cases where \(C(n) + 1 = m^2\) also comes up empty, but there are a few low-lying matches for \(C(n) + k = m^2\) for \(k \in {2, 3, 4}\).

Finding similar behavior in all of these diverse sequences changes the complexion of the problem, in my view. If we discover some obscure, special property of \(n! + 1\) that explains why it never lands on a square (for large values of \(n\)), do we then have to invent another mechanism for Fibonacci numbers and still another for Catalan numbers? Isn’t it more plausible that some single, generic cause lies behind all the observations?

But the cause can’t be too generic. It’s not the case that you can take any two numeric sequences and expect to see the same kind of pattern in their intersections. Consider the factorials and the prime numbers. By the very nature of a factorial, none of them except 2! = 2 can possibly be prime, but there’s no obvious reason that \(n! + 1\) can’t be a prime. And, indeed, for \(n \le 100\) nine values of \(n! + 1\) are prime. Extending the search to \(n \le 1000\) turns up another seven. Here is the full set of known numbers for which \(n! + 1\) is prime:

\[1, 2, 3, 11, 27, 37, 41, 73, 77, 116, 154, 320, 340, 399, 427, 872, 1477, \\ 6380, 26951, 110059, 150209\]

They get rare as \(n\) increases, but there’s no hint of a sharp cutoff, as there is in the other cases explored above. Does the sequence continue indefinitely? That seems a reasonable conjecture. (For more on this sequence, including references, see Chris K. Caldwell’s factorial prime page.)

My question is this: Can we understand these curious patterns in terms of mere chance coincidence? The values of \(n! + 1\) form an infinite sequence of integers spread over the number line, dense near the origin but becoming extremely sparse as \(n\) increases. The values of \(m^2\) form another infinite sequence, again with diminishing density, although the dropoff is not as steep. Maybe factorials bump into squares among the smallest integers because there just aren’t enough of those integers to go around, and some of them have to do double duty. But in the vast open spaces out in the farther reaches of the number line, a factorial can wander around for years—maybe forever—and not meet a square.

Let me try to state this idea more precisely. Since \(n!\) cannot be a square, we know that it must lie somewhere between two square numbers; the arrangement on the number line is \((m - 1)^2 \lt n! \lt m^2\). The distance between the end points of this interval is \(m^2 - (m - 1)^2 = 2m - 1\). Now choose a number \(k\) at random from the interval, and ask whether \(n! + k = m^2\). Exactly one value of \(k\) must satisfy this condition, and so the probability of success is \(1/(2m - 1)\), or roughly \(1 / (2 \sqrt{n!})\). Because \(\sqrt{n!}\) increases very rapidly, this probability takes a nosedive toward zero as \(n\) increases. It is represented by the red curve in the graph below. Note that by \(n = 100\) the red curve has already reached \(10^{-80}\).

The green curve gives the probability of a collision between Fibonacci numbers and squares; the shape is similar, though it dives off the precipice a little later. The Fibonacci-square curve approximates a negative exponential: The probability is proportional to \(\phi^{-\sqrt{F(n)}}\), where \(\phi = (\sqrt{5} + 1) / 2 \approx 1.618\). The factorial-square curve is even steeper because the factorial function is superexponential: \(n!\) grows faster than \(c^n\) for any fixed \(c\).

The blue curve, recording the probability of coincidences between factorials and primes, has a very different shape. In the neighborhood of \(n!\) the average distance between consecutive primes is approximately \(\log n!\), which grows just a little faster than \(n\) itself and very much slower than \(n!\). The probability of collision between factorials and primes is roughly \(1 / \log n!\). The continuous blue curve corresponds to this smooth approximation. The blue dots sprinkled near that line give the probability based on actual distances between consecutive primes.

What to make of those curves? Is it legitimate to apply probability theory to these totally deterministic sequences of numbers? I’m not quite sure. Before confronting the question directly, I’d like to retreat a few steps and look at a simpler model where probability is clearly entitled to a seat at the table.

Let us borrow one of Jacob Bernouilli’s famous urns, which have room to hold an infinite number of ping pong balls. Start with one black ball and one white ball in the urn, then reach in and take a ball at random. Clearly, the probability of choosing black is \(1/2\). Put the chosen ball back in the urn, and also add another white ball. Now there are three balls and only one is black, so the probability of drawing black is \(1/3\). Add a fourth ball, and the probability of black falls to \(1/4\). Continuing in this way, the probability of black on the \(n\)th draw must be \(\frac{1}{n + 1}\).

If we go on with this protocol forever—always choosing a ball at random, putting it back, and adding an extra white ball—what is the probability of eventually seeing the black ball at least once? It’s easier to answer the complement of this question, calculating the probability of never seeing the black ball. This is the infinite product \(\frac{1}{2} \times \frac{2}{3} \times\frac{3}{4} \times\frac{4}{5} \ldots\), or:

\[P(\textrm{never black}) = \prod_{n = 1}^{\infty} 1 - \frac{1}{n+1}\]

The product goes to zero as \(n\) goes to infinity. In other words, in an endless series of trials, the probability of never drawing black is \(0\), which means the probability of seeing black at least once must be \(1\). (“Probability \(1\)” is not exactly the same thing as “certain,” but it’s mighty close.)

Now let’s try a different experiment. Again start with one black ball and one white ball, but after the first draw-and-replace cycle add two white balls, then four white balls, and so on, so that the total number of balls in the urn at stage \(n\) is \(2^n\); throughout the process all of the balls but one are white. Now the probability of never seeing the black ball is \(\frac{1}{2} \times \frac{3}{4} \times\frac{7}{8} \times\frac{15}{16} \ldots\), or:

\[P(\textrm{never black}) = \prod_{n = 1}^{\infty} 1 - \frac{1}{2^n}\]

This product does not go to zero, no matter how large \(n\) becomes. Neither does it go to \(1\). The product converges to a constant with the approximate value \(0.288788095\). Strange, isn’t it? Even in an infinite series of draws from the urn, you can’t be sure whether the black ball will turn up or not.

These two urn experiments do not correspond directly to any of the sequence coincidence problems described above; they simply illustrate a range of possible outcomes. But we can rig up an urn process that mimics the probabilistic treatment of the factorials-and-squares problem. At the \(n\)th stage, the urn holds \(1 + 2 \sqrt{n!}\) balls, only one of which is black. The probability of never seeing the black ball, even in an infinite series of trials, is

\[\prod_{n = 1}^{\infty} 1 - \frac{1}{1 + 2 \sqrt{n!}}.\]

This expression converges to a value of approximately \(0.2921426977\). It follows that the probability of seeing black at least once is \(1 - 0.2921426977\), or \(0.7078573023\). (No, that number is not \(1/\sqrt{2}\), although it’s close.)

An urn process resembling the factorials-and-primes problem gives a somewhat different result. Here the number of balls in the urn at stage \(n\) is \(\log n!\), again with just one black ball. The infinite product governing the cumulative probability is

\[\prod_{n = 2}^{\infty} 1 - \frac{1}{\log n!}.\]

On numerical evidence this expression seems to dwindle away to zero as \(n\) goes to infinity (although I’m not \(100\) percent sure of that). If it does go to \(0\), then the complementary probability that the black ball will eventually appear must be \(1\).

Some of these results leave me feeling befuddled, and even a little grumpy. Call me old-fashioned, but I always thought that rolling the dice infinitely many times ought to be enough to settle beyond doubt whether a pattern appears or not. In the harsh light of eternity, I would have said, everything is either forbidden or mandatory; as \(n\) goes to infinity, probability goes to \(0\) or it goes to \(1\). But apparently that’s not so. In the factorial urn model the probability of never seeing a black ball is neither \(0\) nor \(1\) but lies somewhere in the neighborhood of \(0.2921426977\). What does that mean, exactly? How am I supposed to verify the number, or even check its first few digits? Running an infinite series of trials is not enough; you need to collect a statistically significant sample of infinite experiments. For an exact result, try an infinite series of infinite experiments. Sigh.

The urn model corresponds in a natural way to the randomized version of the factorial-square problem, where we look at \(n! + k = m^2\) and choose \(k\) at random from an appropriate range of values. But what about the original problem of \(n! + 1 = m^2\)? In this case there’s no random variable, and hence there’s no point in running multiple trials for each value of \(n\). The system is deterministic. For each \(n\) the factorial of \(n\) has a definite value, and either it is or it isn’t adjacent to a perfect square. There’s no maybe.

Nevertheless, there might be a way to sneak probabilities in through the back door. To do so we have to assume that factorials and squares form a kind of ergodic system, where observing one chain of events for a long period is equivalent to watching many shorter chains. Suppose that factorials and squares are uncorrelated in their positions on the number line—that when a factorial lands between two squares, its distance from the larger square can be treated as a random variable, with every possible distance being equally likely. If this assumption holds, then instead of looking at one value of \(n!\) and trying many random values of \(k\), we can adopt a single value of \(k\) (namely \(k = 1\)) and look at \(n!\) for many values of \(n\).

Is the ergodic assumption defensible? Not entirely. Some distances between \(n!\) and \(m^2\) are known to be more likely than others, and indeed some distances are impossible. However, the empirical evidence suggests that the deviations must be slight. The histogram below shows the distribution of distances between a factorial and the next larger square for the first \(100{,}000\) values of \(n!\). The distances have all been normalized to the range \((0, 1)\) and classified in \(100\) bins. There is no obvious sign of bias. Calculating the mean and standard deviation of the same \(100{,}000\) relative distances yields values within \(1\) percent of those expected for a uniform random distribution. (The expected values are \(\mu = 1/2\) and \(\sigma = 1/12\).)

If this probabilistic approach can be taken seriously, I can make some quantitative statements about the prospects for ever finding a large factorial adjacent to a perfect square. As mentioned above, the overall probability that one or more values of \(n! + 1\) are equal to squares is about \(0.7078573023\). Thus we should not be too surprised that three such cases are already known, namely the examples with \(n = 4, 5,\) and \(7\). Now we can apply the same method to calculate the probability of finding at least one more case with \(n \gt 7\). Let’s make the question more general: “Whether or not I have seen any squares among the first \(C\) values of \(n! + 1\), what are the chances I’ll see any thereafter?” To answer this question, we can just remove the first \(C\) elements from the infinite product:

\[\prod_{n = C+1}^{\infty} 1 - \frac{1}{1 + 2\sqrt{n!}}.\]

For \(C = 7\), the answer is about \(0.0037\). For \(C = 100\), it’s about \(5.7 \times 10^{-80}\). We are sliding down the steep slope of the red curve.

As a practical matter, further searching for another factorial-square couple does not look like a promising way to spend time and CPU cycles. The probability of success soon falls into the realm of ridiculously small numbers like \(10^{-1{,}000{,}000}\). And yet, from the mathematical point of view, the probability never vanishes. Removing a finite number of terms from the front of an infinite product cannot change its convergence properties. If the original product converged to a nonzero value, then so will the truncated version. Thus we have wandered into the canyon of maximal frustration, where there’s no realistic hope of finding the prize, but the probabilities tell us it still might exist.

I am going to close this shambling essay by considering one more example—a cautionary one. Suppose we apply probabilistic reasoning to the search for a cube that is \(1\) less than a square. If we were looking for exact matches between cubes and squares, we’d find plenty of them: They are the sixth powers: \(1, 64, 729, \ldots\). But integer solutions to the equation \(n^3 + 1 = m^2\) are not so abundant. One low-lying example is easy to find: \(2^3 + 1 = 3^2\), but after 8 and 9 where can we expect to see the next consecutive cube and square?

The probabilistic approach suggests there might be reason for optimism. Compared with factorials and Fibonaccis, cubes grow quite slowly; the rate is polynomial rather than exponential or superexponential. As a result, the probability of finding a cube at a given distance from a square falls off much less steeply than it does for \(n!\) or \(F(n)\). In the graph below, \(P(n^3 + k = m^2)\) is the orange curve.

Note that the orange curve lies just below the blue one, which represents the probability that \(n!\) lies near a prime. The proximity of the two curves suggests that the two problems—factorials adjacent to primes, cubes adjacent to squares—might belong to the same class. We already know that factorial primes do seem to go on and on, perhaps endlessly. The analogy leads to a surmise: Maybe cube-square coincidences are also unbounded. If we keep looking, we’ll find lots more besides \(8\) and \(9\).

The surmise is utterly wrong. The problem has a long history. In 1844 Eugène Catalan conjectured that \(8\) and \(9\) are the only consecutive perfect powers among the integers; the conjecture was finally proved in 2004 by Preda Mihăilescu. For the special case of squares and cubes, Euler had already settled the matter in the 18th century. Thus, probabilities are beside the point.

All of the questions considered here belong to the category of Diophantine analysis—the study of equations whose solutions are required to be integers. It is a field notorious for problems that are easy to state but hard to solve. Catalan’s conjecture is one of the most famous examples, along with Fermat’s Last Theorem. When Diophantine problems are ultimately resolved, the proofs tend to be non-elementary, drawing on sophisticated tools from distant realms of mathematics—algebraic geometry in the proof of Fermat’s Last Theorem by Andrew Wiles and Richard Taylor, cyclotomic fields in Mihăilescu’s proof of the Catalan conjecture. As far as I know, probability theory has not played a central role in any such proof.

When I started wrestling with these questions a few weeks ago, I did not expect to discover a definitive solution. I’ve certainly fulfilled my expectations! As a matter of fact, in my own head the situation is more muddled now than it was at the outset. The realization that even an infinite series of experiments would not necessarily resolve some of the questions is deeply unsettling, and makes me wonder how much I really understand about probability theory. But that’s hardly unprecedented in mathematics. I suppose I’ll just have to get used to it.

Update: Thanks to a further tip from Tanton, I have learned that the problem has an extensive history, and also a name: Brocard’s problem, after Henri Brocard, who published on it in 1876 and 1885. Ramanujan mentioned it in 1913. Erdos conjectured there are no more solutions. Marius Overholt connected it with the abc conjecture. Bruce C. Berndt and William F. Galway established that there are no more solutions up \(10^9\). All this comes from the Wikipedia entry on Brocard’s problem. That article also mentions (but does not explain) that the solutions are called Brown numbers.

I have some more reading to do.

Excellent post.

I’ve spent a few hours on this problem every year or so since undergrad (maybe 10 years ago?). I’m glad to know that it does in fact appear to be hard. What’s particularly maddening about it is how easy it is to analyze n!+k where k is not itself a square. E.g., n! - 1 is only a square when n=1 or n= 2 because for \(n \geq 3\), \(n! - 1 \equiv 2 \bmod 3\), so it can’t be a square. The same proof works for n! + 2 (which is never square), and a similar proof works for n! + 3 (which is only a square when n = 1 or n=3).

Incidentally, I think this question arises fairly naturally in the study of factorial primes.

Thanks much for your comments! Two small points:

Except for \(n=2\).

Agreed where \(k\) is a small square. (Where the number of digits in \(k^2\) is less than the number of trailing zeros in \(n!\).) Not sure it’s so easy for larger \(k\). Can you elaborate?

Oops.. I forgot about 4= 2! + 2 :). Sorry about that.

For non-square k, I meant that it is easy to prove that there are only finitely many examples and even to enumerate all examples as long as you’re willing to run in time poly(k). Then, this procedure suffices:

1) Find a modulus m such that k is not a square mod m. (This can be done by factoring k and then applying quadratic reciprocity and the CRT. One can always get a value less than k.)

2) Enumerate all examples of squares n!+k for n < m.

Of course, the resulting enumeration gives all examples of n!+k for which k is prime, but it runs in time proportional to k.

I'm not sure about very large non-square k. Obviously, there exist non-square k such that n! + k is a square for very large n. I don't immediately see an efficient way to find such an n, given k, and certainly not a way to categorize all such n.

(I should clarify that I'm not an expert in number theory or diophantine equations, so I apologize if I said something stupid above.)

Since 1/log n! is asymptotic to e/n (Stirling’s formula), and the sum of e/n diverges, you may safely conclude that the infinite product of 1-1/log n! goes to 0.

Thank you!

Stirling gives \(n!\asymp n^ne^{-n}\sqrt{2\pi n}\) (I’m using \(\asymp\) for “is asymptotic to”). Taking logs, \(\log n!\asymp n\log n-n+(1/2)\log2\pi+(1/2)\log n\). The convergence of \(\prod(1-(n!)^{-1})\) is thus equivalent to that of \(\sum(n\log n)^{-1}\) (the lower order terms can be ignored), not that of \(\sum(e/n)\). But the conclusion is the same: the sum diverges (by the integral test), so the product diverges to zero.

I’ve heard of this problem before, but don’t remember if there was a solution, proof or counterexample. I’m surprised that you haven’t mentioned the factorization \(n!=m^2-1=(m-1)(m+1)\), which turns your problem of nearly-coincident sequences into a seemingly different one of arranging factors to get close products. It doesn’t generalize well to the \(n!+k\) case though.

This is a nice observation, since it does generalize nicely for square k, which is the hard case.

This may reduce the search for such numbers.

We need n!=(m-1)(m+1), where n>100. As mentioned in the blog post, n! will need to have a large number of zeros at the end

=> (m-1)(m+1) needs to be divisible by a fairly large power of 2. But only one of them is 0 mod 4. Lets say (m-1) is this number. Then, m+1 cannot have more than 1 zero at the end, so we are looking for n! = (k*10^L) (k*10^L+2), or (k*10^L) (k*10^L-2).

In 2015 Robert D. Matson extended the search to 400 billion using Montgomery multiplication, taking about 4 months of CPU time. I believe that this could be reduced (possibly to about 3 months) by using 40 “sparse” primes - those with 4 binary 1′s - instead of the first 40 primes after 10^12.

See: http://unsolvedproblems.org/S73.pdf

The question of triangular factorials is discussed here. But no conclusion is reached.

Koshy and Gao, Square Catalan numbers, J Recreational Math 34(4) (2005-6) 263-266, prove that there are no square Catalan numbers exceeding 1. The proof is an application of Bertrand’s Postulate.

This is just a typo alert. In the product

\[ P(\textrm{never black}) = \prod_{n = 2}^{\infty}( 1 - \frac{1}{2^n}),\] the index should start at 1, not 2 as written. The post itself was fascinating!

Thanks much! I’ve fixed it, and the equation just above it, which had the same error.