Recursive Driveling

by Brian Hayes

Published 1 March 2013

If there’s anything inaner than turning literature into drivel, it’s turning drivel into drivel. I’ve added a “recurse” button to the drivel generator. It feeds the output back to the input, like xeroxing a xerox.

What happens when this process is repeated many times? Before I tried the experiment, two quite different outcomes both seemed plausible. On the one hand, driveling is a recombinatory process that stirs up the text and thereby introduces novelty. Nth-order drivel can’t create any n-grams that don’t also appear in the source text, but those n-grams are jammed together in new ways. Hodgepodge words and phrases can be expected to proliferate as the recursion continues, increasing the entropy of the drivel.

The counterargument says that driveling is also a sampling process. With each round of recursion, some elements of the source text are left behind—and once lost they can never be recovered. In the long run, then, we should expect the recursive drivel to grow more monotonous, with an ever-smaller vocabulary. It’s like a biological population that steadily loses diversity for lack of new germ plasm.

By all means try the experiment for yourself. Here are the results I got when I repeatedly recycled the text of Matthew Arnold’s poem “Dover Beach.” In each round of recursion, I generated a thousand characters of drivel, which became the source text for the next round. In the snippets below, I show only the first line of the output for each round. The integer in front of each line is the level of recursion.

second-order drivel

0 Hating To trand is of Engles shor low, to Hat gone Fine shichocland,

1 p ful, Gles drawither lonce, Gles thdrand lon so of sh Only he again;

2 an, th Only heith re by th re Fret ebbland long Thering and is drawits

3 nly he And, thelas of dar turn, Wits of drawith turn, up fliffs ong

4 e and by herin; And, the and by th turn, we and brin; And by heit up

5 Wit up flike and, to-night up flike Fing pebblas onch’s of Fin; And, to-

6 tretretretretretretretretretretretretretretretretretretretretretretret

fourth-order drivel

0 d flight Gleams, So various, so beautiful, so beautiful, so beautiful, so

1 beautiful, so beautiful, so beautiful, so beautiful, so beautiful, so

sixth-order drivel

0 his mind then again begin, and round earth’s shore Lay like the sound a

1 rue To one another! for the world. Ah, love, let us be true To one

2 , let us be true To one another! for the world. Ah, love, let us be true

3 rue To one another! for the world. Ah, love, let us be true To one

eighth-order drivel

0 g ago Heard it on the Aegean, and it brought Into his mind the turbid

1 r the world. Ah, love, let us be true To one another! for the world. Ah,

2 ve, let us be true To one another! for the world. Ah, love, let us be true

3 world. Ah, love, let us be true To one another! for the world. Ah, love,

In every case, the process quickly reaches a fixed point—and a rather boring one at that. The banana phenomenon is doubtless a major factor in what we’re seeing here; it would be interesting to rerun the experiment with an algorithm immune to that flaw. Also important are finite-size effects. I would like to believe that the outcome would be different if we could generate infinite streams of drivel from an infinitely long source text. The trouble is, I can’t really imagine what an infinitely long source text would look like. If an endless lyric by Matthew Arnold is not trivially repetitious (“so beautiful, so beautiful, so beautiful”) then it has to be some sort of enumeration of all possible combinations of n-grams. In either case, it seems rather drivelish, even without algorithmic help.

Responses from readers:

Please note: The bit-player website is no longer equipped to accept and publish comments from readers, but the author is still eager to hear from you. Send comments, criticism, compliments, or corrections to brian@bit-player.org.

Publication history

First publication: 1 March 2013

Converted to Eleventy framework: 22 April 2025

You might be interested in work on the evolution of languages through iterated learning.

That’s one of this person’s research interests: http://www.lel.ed.ac.uk/~simon/ for example (maybe, I didn’t check carefully) http://www.pnas.org/content/104/12/5241.abstract

Infinite length should mean that each round is i.i.d the same as round 0? The distribution on k-grams after round will be the same as ideal/expectation of the previous, which will not change from round to round. (Assuming that you have made your original source text cyclic by appending a copy of the first k-1 characters to the end.)

Yes, this is what I had in mind — except that there’s no need to worry about running off the end of an infinite text.

Won’t it provably reach a fixed point? No new n-grams can be introduced, and each round there is some probability p that at least one n-gram is lost. p technically would change each round, but it’s bounded below by some epsilon > 0 that depends on the length of the text but not on the number of rounds so far.

For your proof to go through, I think you need to put a limit on the length of the generated text. Otherwise we can just keep going until every n-gram turns up in the drivel text, in which case nothing is ever lost.

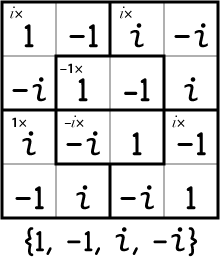

There’s another issue: What do we mean by a fixed point? Is it enough to have an unchanging catalog of constituent n-grams, even though their sequence in the output stream can vary? Or is it a state in which every n-gram has only one possible successor, as in the second-order string “tretretretre…” in the example above? The three Markov transitions are tr–>e, re–>t and et–>r; nothing can ever change again.

If I’m understanding your terms correctly, \(n\)th-order drivel cannot introduce new \((n+1)\)-grams; given a fixed output length, and an \(n\)-gram that occurs with multiple successors in the source text, there is a lower bound on the probability of losing one of the successors in the output text. Thus you will eventually reach a fixed point where every \(n\)-gram in your text has exactly one successor.

Indisputably true. But truncating the text at some fixed length also forces us to face up to the ragged-end problem. That is, we need a policy for dealing with the situation where the algorithm tries to find the successor of the last n-gram in the text. Suppose the final character occurs nowhere else; then any continuation we choose will necessarily introduce a novel n-gram. At that point we’ve lost the closed-universe property.

Can this be patched up? Given the finite-length assumption (and also a finite alphabet), there’s only so much room for variety. With a fixed-length text, we can’t help falling into a repetitive pattern eventually, no matter how we generate the text. So, yes, we’ll still eventually reach a fixed point. But this seems to have more to do with the finite size of the sample than with the properties of the driveling algorithm.

I should mention that the algorithm as implemented on the web page deals with this problem by pretending it doesn’t exist. If the program comes to an n-gram that has no successor anywhere in the text, it just halts.

Since higher-order drivel loses some entropy due to the banana effect and lower-order drivel increases it by recombining parts of the text, why not do a hybrid drivel? Roll a die and use its outcome to control the drivel order, possibly creating a (1-6)-th order drivel.

For an infinite stream it is not quite clear how to define the probabilities used in the lookup table. If one assumes that the complete stream is known when constructing the table then one would define it as the probability of finding a specific n-gram when randomly choosing a sequence of n characters. In this case, I would think that the recursion would not introduce any change at all.

The first level of recursion would generate drivel. In the limit of infinite length the probability table would eventually converge to that of the original text. This means that the next level of recursion uses exactly the same table as the previous one which means one would not be able to distinguish between the first level of recursion and the next.