Five years ago I wrote about a rumored proof of the abc conjecture, an idea from the 1980s that stands at the juncture between the additive and the multiplicative parts of number theory. Now there’s more abc news, and this time it’s not just a rumor. Shinichi Mochizuki of Kyoto University has released a series of four long papers in which he claims to provide a proof. I have nothing useful to say about the proof itself—the waters are deep and dark out where Mochizuki swims—but I’m going to take the occasion for some dabbling in the shallow end of the pool.

So what’s the abc conjecture? I gave an account in my earlier post, but here I want to try a different approach. Let’s make a game of it.

You choose a positive integer a, and I’ll choose a larger integer b that’s relatively prime to a. In other words, a < b and no integer n > 1 divides both a and b. Now we calculate two quantities. The first is the sum a + b = c. (I note that c must also be relatively prime to both a and b. Agreed?) The second quantity is called the radical of a, b, c, which I’m going to designate R; it’s the product of all the distinct primes in a, b and c. To compute the radical we list all the prime factors of a, b and c, merge the three lists, cast out duplicates until each prime appears just once, and finally multiply.

Now for the payoff: If R ≥ c, you win. If R < c, the triple {a, b, c} is called an abc-hit, and I win.

Suppose you pick a = 5 and I reply with b = 9, for a sum of c = 14. The distinct prime factors of these numbers are 2, 3, 5 and 7, and so the radical R = 2 × 3 × 5 × 7 = 210. Since R is larger than c, I lose. But if you pick 5 and I pick 27, we have c = 5 + 27 = 32 whereas R = 2 × 3 × 5 = 30. This is an abc-hit, and I’m the winner.

In a sense, this game is very much in your favor, because abc-hits are rare. There are 4,950 integer pairs with a < b ≤ 100, and among them 3,043 pairs are relatively prime; but only six of those pairs produce an abc-hit:

\[\begin{align}

1 + 8 &= 9 & R &= 6\\

1 + 48 &= 49 & R &= 42\\

1 + 63 &= 64 & R &= 42\\

1 + 80 &= 81 & R &= 30\\

5 + 27 &= 32 & R &= 30\\

32 + 49 &= 81 & R &= 42

\end{align}\]

The same information is presented graphically below. Maroon dots are abc-hits (and their symmetrical counterparts after interchanging a and b); gray dots are all the other relatively prime pairs.

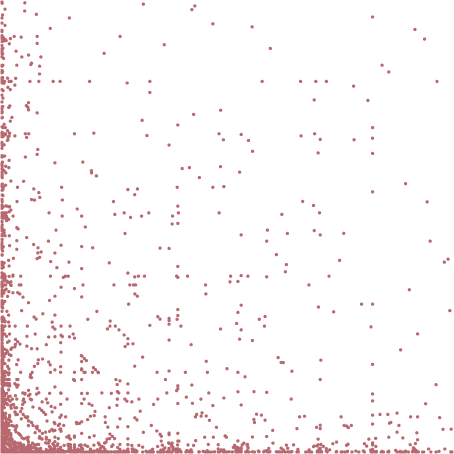

For a broader view, here are the 1,318 abc-hits with a < b ≤ 106 (and their reflections). I didn’t compute all these hits myself; the data come from the ABC@Home project, where volunteers have been donating cpu cycles to the task for several years.

Given the rarity of abc-hits, it looks like you have an excellent chance of avoiding them. If we both pick random a’s and b’s in the range from 1 to 1,000,000, you win with probability 1 – 10–10. Before you lay down any heavy wagers on this game, however, consider that I too have an advantage. I play second. If for every a there is some b that yields an abc-hit, then in principle I can always win. All the same, I won’t be making any big bets either. Although it may well be true that there’s at least one abc-hit for every a, I don’t have the computational resources to find a winning b in every case. For instance, if you choose a = 330, I’m stuck. The ABC@Home team has not yet found a b that makes an abc-hit with a = 330; if such a b exists, it must be larger than 999,999,999,999,999,670.

So that’s the basic abc game. Let’s fiddle with the rules a little to make it more interesting.

Not all abc-hits are equally impressive. Sometimes R is much smaller than c, but in other cases the difference is slight and the ratio c/R is barely greater than 1. A few examples suggest the range of possibilities:

\[\begin{align}

459 + 3125 &= 3584 & R &= 3570 & c/R &= 1.004\\

5 + 27 &= 32 & R &= 30 & c/R &= 1.067\\

1 + 4374 &= 4375 & R &= 210 & c/R &= 20.833\\

2 + 6436341 &= 6436343 & R &= 15042 & c/R &= 427.891

\end{align}\]

Suppose we adjust the rules of the game so that I win only if I can find a “high-impact” abc-hit, where R is dramatically less than c. Before playing, we agree on a number h, which can be any real number equal to or greater than 1. Then you pick your a, I pick my b, and we calculate the sum c and the radical R, just as before. But in this version of the game, I win only if Rh < c. (A mathematically equivalent formulation says that I win if (log c)/(log R) > h.)

If h = 1, our new game is identical to the old one (since R1 = R). If we assume that an abc-hit exists for every a, and if I have unlimited computing power, then I should always be able to win with h = 1. But everything changes when h > 1. This is where the abc-conjecture comes into the picture. The conjecture asserts that only finitely many abc-hits have Rh < c whenever h has any value greater than 1—no matter how slight the excess over 1. An immediate consequence is that you win the game. If there are only finitely many “high-impact” abc-hits for our chosen value of h, then there must be infinitely many a’s for which I can’t find a winning b.

For the record, here’s a statement of the abc conjecture without the apparatus of the two-player game:

Given any real number h > 1, there are at most finitely many triples {a, b, c} with radical R(a,b,c) where Rh < c.

Note that the definition requires the exponent h to be strictly greater than 1. It says there can’t be an infinity of abc-hits with R2 < c or R1.5 < c or even R1.0000001 < c. But it doesn’t rule out an infinity of abc-hits with R1 < c, and in fact such an infinity is known to exist. (A presentation by Frits Beukers gives a constructive proof, generating an infinite sequence of abc-hits with a = 1.)

I’d like to dwell for just a moment on the strangeness of this statement. Think of h as a parameter controlling the size of the pores in a mathematical sieve. We set h to some specific value and then run all the abc-hits through the sieve, keeping those with (log c)/(log R) > h, and discarding the rest. If we start with h = 1.5, we might find we retain just a few hits. As we reduce the value of h, the size of the collection grows, but (if the abc conjecture is true) the number of qualifying triples remains finite as long as h is greater than 1, even infinitesimally so. And then, when h = 1, the sieve suddenly catches an infinity of abc-hits. So now we can ask: For how many of those infinitely numerous hits is (log c)/(log R) equal to 1? The answer is: 0.

I don’t mean to suggest there’s anything wrong with the mathematics of this situation. It’s just another hint that this paradise Cantor created for us is a mighty weird place to live.

One more digression. The defining property of an abc-hit is R < c. What about the case of R = c? I would love to exhibit a specimen of such a triple, but I haven’t been able to find one. Do they exist? [Answer: Please see comments.] I see no obvious reason why they shouldn’t, and I suspect they’re just very rare. After all, there are many ways for R to be less than c and many ways for R to be greater than c but there’s only one way for R to be equal to c. My search for low-hanging fruit (examining all triples with c ≤ 25,000) came up empty. I have also sifted through 14 million triples recorded by the ABC@Home project, which cover all territory up to c = 1018. In this data set I found no triples with R = c. However, I don’t know whether that’s because no R = c triples exist in this range or because the ABC@Home software was set to look only for R < c.

• • •

My abc games require nothing but simple arithmetic. Not so Mochizuki’s work on the abc conjecture.

Mochizuki is professor in the Research Institute for Mathematical Sciences in Kyoto. His Ph.D. is from Princeton, where he studied under Gerd Faltings, the Fields Medalist who proved the Mordell Conjecture.

In August Mochizuki posted four manuscripts on his web site at Kyoto University. (Links to PDFs: Part I, Part II, Part III, Part IV.) They carry the collective title “Inter-Universal Teichmüller Theory,” and together they amount to 512 pages. Part IV is where the claimed proof of the abc conjecture appears.

I have made a sincere attempt to extract some meaning from these documents. In the case of Part IV, I have “read” the entire manuscript, in the sense that I have cast my eyes over each line of text and tried to apprehend the sense of the words and the symbols, seeking help in reference works and the web when necessary. Nothing of worth has come from this endeavor. I’m usually pretty good at reading things I don’t understand, but Mochizuki has defeated me.

I haven’t yet found anyone else who claims to fully understand the proof, but several readers have made much more progress than I have. The best commentary so far is on Mathoverflow. Other sources are Jordan Ellenberg’s blog Quomodocumque, a Google+ note by John Baez and Peter Woit’s blog Not Even Wrong.

Note: The paragraph above beginning “I’d like to dwell for just a moment…” has been changed since this article was first published. Resisting the powerful urge to bury my embarrassing mistakes, I reproduce below the original version:

I’d like to dwell for just a moment on the strangeness of this statement. Suppose we go through all the abc-hits and for each one write down the value of h = (log c)/(log R). Then, assuming the abc conjecture is true, we have these results:

- How many hits have h ≥ 1? Answer: infinitely many.

- How many hits have h > 1? Answer: finitely many.

- How many hits have h = 1? Answer: zero.

Addendum 2012-09-12: When I began this piece, I said I was going to dabble in the shallow water. Now I’m in well over my head. As Stevie Smith said: Not waving but drowning.

In the comments, Jim Lyon takes issue with my statement about the “strangeness” of the abc conjecture. Lyon writes:

If N(h) is the number of hits for value h, it’s not that strange that N(h) is finite when h > 1, but infinite when h = 1.

To some extent, strangeness is subjective. What a person finds strange or surprising is the mirror image of what feels familiar and expected. Is it strange that the integers and the rationals can be put into one-to-one correspondence but the real numbers form a larger set? Well, I think I understand the proof of that fact, and yet, when I focus on the fact itself, my brow still furrows. Is it strange or remarkable that the infinite series 1/1 + 1/4 + 1/9 + 1/16 . . . converges but 1/2 + 1/3 + 1/5 + 1/7 . . . does not? What about the Banach-Tarski procedure for turning a pea into a planet? Is there anyone for whom that notion is a comfy piece of mental furniture?

The thing is, though, if strangeness is just a matter of unfamiliarity, it can’t be sustained indefinitely. And, indeed, after wrestling with these ideas for a few days and nights, I find the sense of strangeness fading. I’ve changed my mind and I tend to agree with the Jim Lyon statement quoted above.

A simple model helps to clear away some of the sense of mystery. Take the set of all fractions 1/n with n in 1, 2, 3, 4, . . . Now we can count all the fractions whose value is greater than some real number m. For 0 < m ≤ 1, there is a finite set of fractions greater than m. As we reduce m, the set becomes larger, and at m = 0 the set of qualifying fractions is infinite. And yet there are no fractions in the set with 1/n = 0. This is the same behavior observed with abc-hits as h is reduced to 1, and there’s nothing very mysterious about it.

But perhaps this model explains too much. If the set of abc-hits had the same structure as the set of 1/n fractions, there would be no need for an abc conjecture. The truth of the proposition would be easy to establish.

In another comment, Craig asks:

Is anything known, even empirically, about the asymptotic behavior of the number of solutions as h approaches 1? (Aside from the fact that it goes to infinity.) Like, does it go as 1/(h-1)? 1/(h-1)^2? exp[1/(h-1)]?

I suspect this is actually a deep and difficult question. If we had a definitive answer, surely that would be at least a head start toward resolving the abc conjecture.

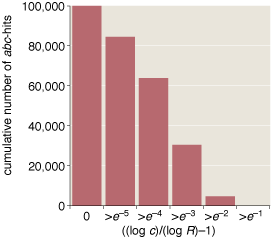

In any case, here is some empirical data that might or might not shed light on the limiting behavior. For a sample of 100,000 hits drawn from the ABC@Home file, I calculated ε = h–1 = ((log c)/(log R)) –1. Then I plotted the cumulative distribution of the ε values, using six bins of exponentially increasing width. The first bin includes counts for all ε > 0, the second for all ε > e–5 ≈ 0.007, the third for all ε > e–4 ≈ 0.018, and so on.

\[\begin{align}

\textit{bin} & \quad \textit{count}\\

0 & \quad 100000\\

e^{-5} & \quad 84532\\

e^{-4} & \quad 63839\\

e^{-3} & \quad 30514\\

e^{-2} & \quad 4748\\

e^{-1} & \quad 48\\

\end{align}\]

The same data in graphic form:

The sample consists of the first 100,000 consecutive abc hits beginning with the first hit having c ≥ 109. I avoided the beginning of the file because the smallest hits seem to have peculiar statistics. Still, I’m not sure whether this is a reasonably fair sample, or even what it means to sample fairly from an infinite data set.

Assuming c=R,

log c=log R

(log c)/(log R)=1.

Because this is never the case, c and R are never equal.

Other than this small oversight, very nice article :)

Great article.

I was going to point out the bit about c=R but I see I’ve been beaten to it. Still great way to make the topic accessible. Thank you.

Surely R=c is trivially impossible. R = c => R | c => R(a) | c => a and c have a common factor.

From jgeralnik on HN https://news.ycombinator.com/item?id=4505264 “I may have misunderstood something, but I believe it is simple to prove that R cannot equal c.

Because c is relatively prime to both a and b, there exists some prime p which b is divisible by and c is not. Because b is divisible by p, R=rad(a,b,c) is also divisible by p. Since R is divisible by p and c is not, R!=c.”

Is anything known, even empirically, about the asymptotic behavior of the number of solutions as h approaches 1? (Aside from the fact that it goes to infinity.) Like, does it go as 1/(h-1)? 1/(h-1)^2? exp[1/(h-1)]?

R ≠ c. Thank you all. Amazing how much computation you can avoid with a little thinking!

If N(h) is the number of hits for value h, it’s not that strange that N(h) is finite when h > 1, but infinite when h = 1.

Now it would be mighty strange if the limit of N(h) as h approaches 1 were finite.

Is it?

@Craig: The ABC@Home project offers plenty of data for curve-fitting. The file records just a and c for each hit, so you need to compute b and R and the ratio of logs. I’m curious too, and I’ll try to do a simple histogram for a subset of the data in a day or two.

THANKS for writing the clearest, most enjoyable explanation of the abc problem I’ve read. With the news of the rumored proof there are so many math-geeks now interested on the subject but I’m afraid most of us got completely disillusioned at the ridiculous level of jargon involved in most other explanations.

The explanation of the problem is awesome!