Scheduled Procrastination

by Brian Hayes

Published 27 June 2006

Forgive me if this story is slightly stale. I meant to write it two weeks ago, but for some reason I kept putting it off.

A paper titled “Scheduling Algorithms for Procrastinators” has been posted on the computer-science section of the arXiv. The authors are Michael A. Bender (Stony Brook University), Raphaël Clifford (University of Bristol) and Kostas Tsichlas (University of Patras). They confess that they got a late start on writing the article, and they finished at the last minute.

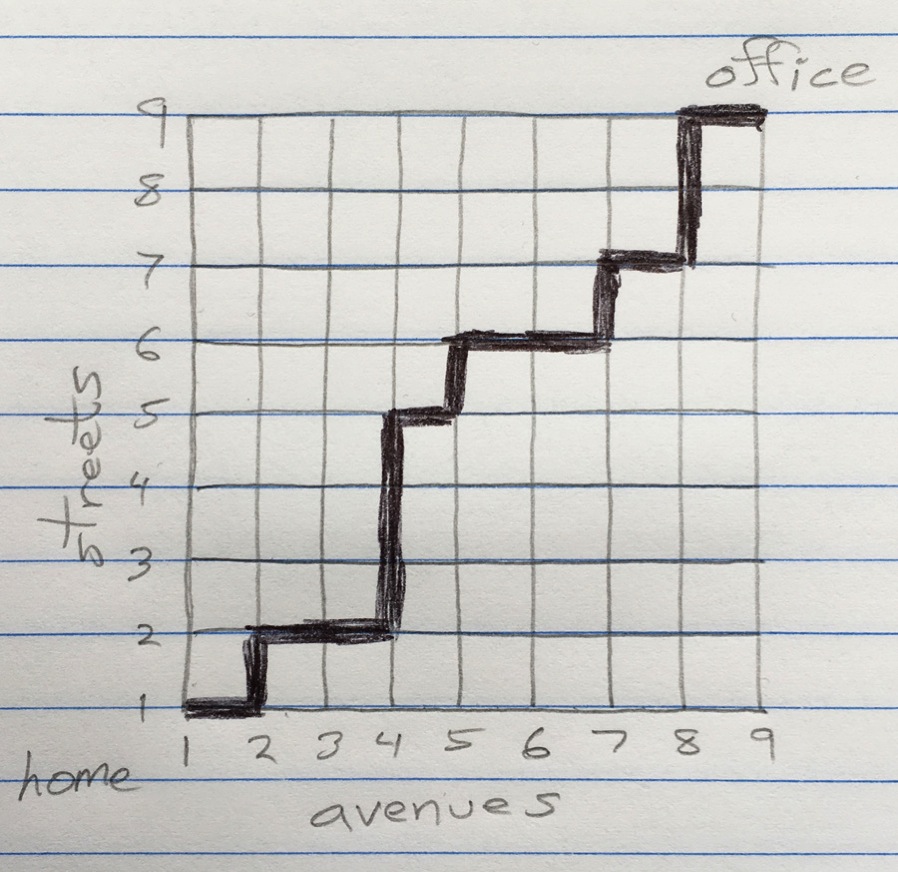

Their theme brings to mind another well-known paper: “The Effects of Moore’s Law and Slacking on Large Computations,” by Chris Gottbrath, Jeremy Bailin, Casey Meakin, Todd Thompson and J. J. Charfman (all of the University of Arizona). Gottbrath et al. considered the options that you face when you are about to begin a long-running computational project. Suppose the job would take three years of continuous work on the best computer available today. The obvious, no-nonsense approach to the problem is to get to work immediately with a state-of-the-art machine, and finish three years hence. But Moore’s Law—the widely noted observation that computer performance doubles every 18 months—suggests an alternative. You could go to the beach for a year and a half; then, returning with an enviable tan, you could buy a machine twice as fast as anything on the market now, so that you would still finish after a total of three years.

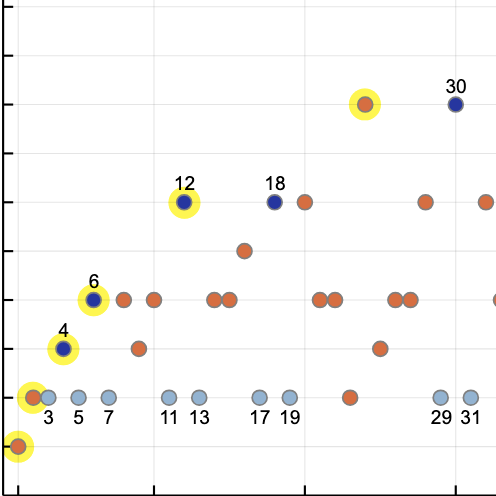

Bender, Clifford and Tsichlas consider a slightly different and more-general class of tasks—not necessarily computations—whose shared trait is that the rate of progress toward a conclusion is nonconstant; instead, the pace of work accelerates as a deadline approaches. This is a familiar phenomenon, at least for the dawdlers among us; personally, I find that my productivity gets a big boost on the night before a major project is due, especially if the penalty for missing the deadline is being flunked or fired. Bender and his colleagues focus on tasks with a simple linear speed law, where the rate of work increases steadily as the deadline nears. In particular, they assume that the initial speed is zero and the acceleration is constant until the job is done.

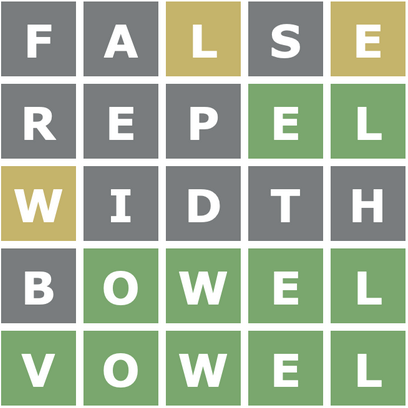

Given a set of such tasks, each with its own start time, deadline and linear speed function, can we find an optimum schedule? The answer is a curiously qualified maybe. If there is a feasible schedule (one where all tasks are completed on time), then there’s an algorithm for finding an optimal schedule (one where the total processing time is minimal). The trouble is, deciding whether or not a feasible schedule exists looks to be a hard problem. No known algorithm can settle the question in polynomial time, and it hasn’t even been established that the problem is in the class NP (nondeterministic polynomial time). For a problem in NP, you may or not be able to find a solution efficiently, but if a candidate solution is dropped in your lap, you can quickly test its correctness; even this limited ability is not guaranteed for the procrastinating scheduler (or the scheduling procrastinator). The root of the difficulty comes from an unexpected quarter. It’s a matter of numerical computation: Scheduled procrastination is hard because evaluating sums of square roots is hard. (Quick: Which is larger, √7 + √26 or √10 + √21?)

There’s more on the sums-of-square-roots problem at The Open Problems Project. For more on how the square-root problem relates to procrastination, see the paper by Bender, Clifford and Tsichlas.

As for me, I’ve got to run; I have a pressing deadline.

Publication history

First publication: 27 June 2006

Converted to Eleventy framework: 22 April 2025