Fourteen years ago I noted that disk drives were growing so fast I couldn’t fill them up. Between 1997 and 2002, storage capacity doubled every year, allowing me to replace a 3-gigabyte drive with a new 120-gigabyte model. I wrote:

Extrapolating the steep trend line of the past five years predicts a thousandfold increase in capacity by about 2012; in other words, today’s 120-gigabyte drive becomes a 120-terabyte unit.

Extending that same growth curve into 2016 would allow for another four doublings, putting us on the threshold of the petabyte disk drive (i.e., \(10^{15}\) bytes).

None of that has happened. The biggest drives in the consumer marketplace hold 2, 4, or 6 terabytes. A few 8- and 10-terabyte drives were recently introduced, but they are not yet widely available. In any case, 10 terabytes is only 1 percent of a petabyte. We have fallen way behind the growth curve.
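The extrapolation is easy to check. The sketch below assumes exactly one doubling per year, starting from the 120-gigabyte drive of 2002, and counts in decimal units; the function name is just an illustrative choice.

```python
# Assumption: capacity doubles once per year, starting from the
# 120-gigabyte drive of 2002. Decimal units throughout.
GB, TB, PB = 10**9, 10**12, 10**15

def extrapolate(start_bytes, start_year, end_year):
    """Capacity at end_year if it doubles every year from start_year."""
    return start_bytes * 2 ** (end_year - start_year)

cap_2012 = extrapolate(120 * GB, 2002, 2012)  # ten doublings
cap_2016 = extrapolate(120 * GB, 2002, 2016)  # fourteen doublings

print(f"2012: {cap_2012 / TB:.1f} TB")                  # 2012: 122.9 TB
print(f"2016: {cap_2016 / PB:.2f} PB")                  # 2016: 1.97 PB
print(f"10 TB as a share of 1 PB: {10 * TB / PB:.0%}")  # 1%
```

Ten doublings is a factor of 1,024, which is where the "thousandfold" comes from; four more take the hypothetical drive to nearly two petabytes.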

The graph below extends an illustration that appeared in my 2002 article, recording growth in the areal density of disk storage, measured in bits per square inch:

The blue line shows historical data up to 2002 (courtesy of Edward Grochowski of the IBM Almaden Research Center). The bright green line represents what might have been, if the 1997–2002 trend had continued. The orange line shows the real status quo: We are three orders of magnitude short of the optimistic extrapolation. The growth rate has returned to the more sedate levels of the 1970s and 80s.

What caused the recent slowdown? I think it makes more sense to ask what caused the sudden surge in the 1990s and early 2000s, since that’s the kink in the long-term trend. The answers lie in the details of disk technology. More sensitive read heads developed in the 90s allowed information to be extracted reliably from smaller magnetic domains. Then there was a change in the geometry of the domains: the magnetic axis was oriented perpendicular to the surface of the disk rather than parallel to it, allowing more domains to be packed into the same surface area. As far as I know, there have been no comparable innovations since then, although a new writing technology is on the horizon. (It uses a laser to heat the domain, making it easier to change the direction of magnetization.)

As the pace of magnetic disk development slackens, an alternative storage medium is coming on strong. Flash memory, a semiconductor technology, has recently surpassed magnetic disk in areal density; Micron Technology reports a laboratory demonstration of 2.7 terabits per square inch. And Samsung has announced a flash-based solid-state drive (SSD) with 15 terabytes of capacity, larger than any mechanical disk drive now on the market. SSDs are still much more expensive than mechanical disks, by a factor of 5 or 10, but they offer higher speed and lower power consumption. They also offer the virtue of total silence, which I find truly golden.

Flash storage has replaced spinning disks in about a quarter of new laptops, as well as in all phones and tablets. It is also increasingly popular in servers (including the machine that hosts bit-player.org). Do disks have a future?

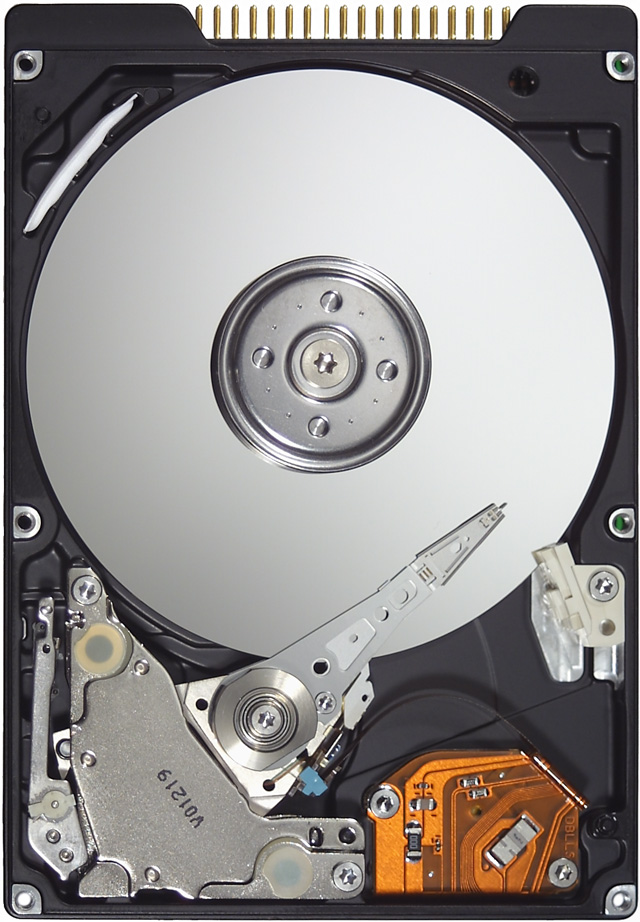

In my sentimental moments, I’ll be sorry to see spinning disks go away. They are such jewel-like marvels of engineering and manufacturing prowess. And they are the last link in a long chain of mechanical contrivances connecting us with the early history of computing—through Turing’s bombe and Babbage’s brass gears all the way back to the Antikythera mechanism two millennia ago. From here on out, I suspect, most computers will have no moving parts.

Maybe in a decade or two the spinning disk will make a comeback, the way vinyl LPs and vacuum tube amplifiers have. “Data that comes off a mechanical disk has a subtle warmth and presence that no solid-state drive can match,” the cognoscenti will tell us.

“You can never be too rich or too thin,” someone said. And a computer can never be too fast. But the demand for data storage is not infinitely elastic. If a file cabinet holds everything in the world you might ever want to keep, with room to spare, there’s not much added utility in having 100 or 1,000 times as much space.

In 2002 I questioned whether ordinary computer users would ever fill a 1-terabyte drive. Specifically, I expressed doubts that my own files would ever reach the million-megabyte mark. Several readers reassured me that data will always expand to fill the space available. I could only respond “We’ll see.” Fourteen years later, I now have the terabyte drive of my dreams, and it holds all the words, pictures, music, video, code, and whatnot I’ve accumulated in a lifetime of obsessive digital hoarding. The drive is about half full. Or half empty. So I guess the outcome is still murky. I can probably fill up the rest of that drive, if I live long enough. But I’m not clamoring for more space.

One factor that has surely slowed demand for data storage is the emergence of cloud computing and streaming services for music and movies. I didn’t see that coming back in 2002. If you choose to keep some of your documents on Amazon or Azure, you obviously reduce the need for local storage. Moreover, offloading data and software to the cloud can also reduce the overall demand for storage, and thus the global market for disks or SSDs. A typical movie might take up 3 gigabytes of disk space. If a million people load a copy of the same movie onto their own disks, that’s 3 petabytes. If instead they stream it from Netflix, then in principle a single copy of the file could serve everyone.

In practice, Netflix does not store just one copy of each movie in some giant central archive. They distribute rack-mounted storage units to hundreds of internet exchange points and internet service providers, bringing the data closer to the viewer; this is a strategy for balancing the cost of storage against the cost of communications bandwidth. The current generation of the Netflix Open Connect Appliance has 36 disk drives of 8 terabytes each, plus 6 SSDs that hold 1 terabyte each, for a total capacity of just under 300 terabytes. (Even larger units are coming soon.) In the Netflix distribution network, files are replicated hundreds or thousands of times, but the total demand for storage space is still far smaller than it would be with millions of copies of every movie.
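The storage arithmetic of the last two paragraphs can be sketched in a few lines. The movie size, the audience, and the appliance configuration are the figures quoted above; the replica count of 1,000 is purely an illustrative assumption standing in for "hundreds or thousands."

```python
GB, TB, PB = 10**9, 10**12, 10**15

movie = 3 * GB            # typical movie, as quoted above
viewers = 1_000_000

# Every viewer keeps a private copy on a local disk.
local_copies = movie * viewers

# One Open Connect Appliance: 36 disks of 8 TB plus 6 SSDs of 1 TB.
appliance = 36 * 8 * TB + 6 * 1 * TB

# Assumption (not from any Netflix source): 1,000 replicas of the file.
replicas = 1_000
replicated = movie * replicas

print(f"local copies:  {local_copies / PB:.0f} PB")   # 3 PB
print(f"one appliance: {appliance / TB:.0f} TB")      # 294 TB
print(f"replicated:    {replicated / TB:.0f} TB")     # 3 TB
print(f"savings:       {local_copies // replicated}x")  # 1000x
```

Under that assumed replica count, a thousand copies of one movie take up about 1 percent of a single appliance, while a million private copies would fill roughly ten thousand appliances' worth of space.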

A recent blog post by Eric Brewer, Google’s vice president for infrastructure, points out:

The rise of cloud-based storage means that most (spinning) hard disks will be deployed primarily as part of large storage services housed in data centers. Such services are already the fastest growing market for disks and will be the majority market in the near future. For example, for YouTube alone, users upload over 400 hours of video every minute, which at one gigabyte per hour requires more than one petabyte (1M GB) of new storage every day or about 100x the Library of Congress.

Thus Google will not have any trouble filling up petabyte drives. An accompanying white paper argues that as disks become a data center specialty item, they ought to be redesigned for this environment. There’s no compelling reason to stick with the present physical dimensions of 2½ or 3½ inches. Moreover, data-center disks have different engineering priorities and constraints. Google would like to see disks that maximize both storage capacity and input-output bandwidth, while minimizing cost; reliability of individual drives is less critical because data are distributed redundantly across thousands of disks.

The white paper continues:

An obvious question is why are we talking about spinning disks at all, rather than SSDs, which have higher [input-output operations per second] and are the “future” of storage. The root reason is that the cost per GB remains too high, and more importantly that the growth rates in capacity/$ between disks and SSDs are relatively close . . . , so that cost will not change enough in the coming decade.

If the spinning disk is remodeled to suit the needs and the economics of the data center, perhaps flash storage can become better adapted to the laptop and desktop environment. Most SSDs today are plug-compatible replacements for mechanical disk drives. They have the same physical form, they expect the same electrical connections, and they communicate with the host computer via the same protocols. They pretend to have a spinning disk inside, organized into tracks and sectors. The hardware might be used more efficiently if we were to do away with this charade.

Or maybe we’d be better off with a different charade: Instead of dressing up flash memory chips in the disguise of a disk drive, we could have them emulate random access memory. Why, after all, do we still distinguish between “memory” and “storage” in computer systems? Why do we have to open and save files, launch and shut down applications? Why can’t all of our documents and programs just be ever-present and always at the ready?

In the 1950s the distinction between memory and storage was obvious. Memory was the few kilobytes of magnetic cores wired directly to the CPU; storage was the rack full of magnetic tapes lined up along the wall on the far side of the room. Loading a program or a data file meant finding the right reel, mounting it on a drive, and threading the tape through the reader and onto the take-up reel. In the 1970s and 80s the memory/storage distinction began to blur a little. Disk storage made data and programs instantly available, and virtual memory offered the illusion that files larger than physical memory could be loaded all in one go. But it still wasn’t possible to treat an entire disk as if all the data were present in memory. The processor’s address space wasn’t large enough. Early Intel chips, for example, used 20-bit addresses, and therefore could not deal with code or data segments larger than \(2^{20} \approx 10^6\) bytes.

We live in a different world now. A 64-bit processor can potentially address \(2^{64}\) bytes of memory, or 16 exabytes (i.e., 16,000 petabytes). Most existing processor chips are limited to 48-bit addresses, but this still gives direct access to 281 terabytes. Thus it would be technically feasible to map the entire content of even the largest disk drive onto the address space of main memory.
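These address-space figures are simple powers of two. A minimal check follows, with the caveat that the "16 exabytes" above counts in binary units of \(2^{60}\) bytes; in decimal units the same quantity is about 18.4 exabytes. The helper name is hypothetical.

```python
TB, EB = 10**12, 10**18

def address_space(bits):
    """Number of distinct byte addresses reachable with `bits` address bits."""
    return 2 ** bits

print(f"20-bit: {address_space(20):,} bytes")        # 1,048,576 bytes
print(f"48-bit: {address_space(48) / TB:.0f} TB")    # 281 TB
print(f"64-bit: {address_space(64) / EB:.1f} EB")    # 18.4 EB (decimal)
print(f"64-bit: {address_space(64) // 2**60} EiB")   # 16 EiB (binary)

# The 15-terabyte SSD mentioned earlier fits many times over
# within a 48-bit address space.
print(address_space(48) // (15 * TB))                # 18
```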

In current practice, reading from or writing to a location in main memory takes a single machine instruction. Say you have a spreadsheet open; the program can get the value of any cell with a load instruction, or change the value with a store instruction. If the spreadsheet file is stored on disk rather than loaded into memory, the process is quite different, involving not single instructions but calls to input-output routines in the operating system. First you have to open the file and read it as a one-dimensional stream of bytes, then parse that stream to recreate the two-dimensional structure of the spreadsheet; only then can you access the cell you care about. Saving the file reverses these steps: The two-dimensional array is serialized to form a linear stream of bytes, then written back to the disk. Some of this overhead is unavoidable, but the complex conversions between serialized files on disk and more versatile data structures in memory could be eliminated. A modern processor could address every byte of data—whether in memory or storage—as if it were all one flat array. Disk storage would no longer be a separate entity but just another level in the memory hierarchy, turning what we now call main memory into a new form of cache. From the user’s point of view, all programs would be running all the time, and all documents would always be open.
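Today's operating systems already offer a limited version of this idea in the form of memory-mapped files. The sketch below uses Python's standard `mmap` and `struct` modules to contrast the two styles of access on a toy "spreadsheet" stored as a flat grid of 8-byte floats. The file layout, the grid dimensions, and the helper names are all hypothetical, invented for illustration; real spreadsheet formats are far more elaborate.

```python
import mmap
import os
import struct
import tempfile

ROWS, COLS = 4, 3            # hypothetical grid size
CELL = struct.Struct("<d")   # one cell = one little-endian 8-byte float

def offset(row, col):
    """Byte offset of a cell in the row-major file."""
    return (row * COLS + col) * CELL.size

# Create a toy file in which every cell is zero.
path = os.path.join(tempfile.mkdtemp(), "sheet.bin")
with open(path, "wb") as f:
    f.write(bytes(ROWS * COLS * CELL.size))

# Style 1: conventional I/O. Read the whole byte stream, parse it into
# a two-dimensional structure, change one cell, serialize, write back.
with open(path, "rb") as f:
    flat = [value for (value,) in CELL.iter_unpack(f.read())]
grid = [flat[r * COLS:(r + 1) * COLS] for r in range(ROWS)]
grid[2][1] = 42.0
with open(path, "wb") as f:
    for row in grid:
        for value in row:
            f.write(CELL.pack(value))

# Style 2: map the file into the address space and touch cells in place.
with open(path, "r+b") as f:
    with mmap.mmap(f.fileno(), 0) as m:
        (before,) = CELL.unpack_from(m, offset(2, 1))   # a "load"
        CELL.pack_into(m, offset(3, 0), before + 1.0)   # a "store"
        m.flush()

# Confirm that both changes reached the file.
with open(path, "rb") as f:
    data = f.read()
cell_2_1 = CELL.unpack_from(data, offset(2, 1))[0]
cell_3_0 = CELL.unpack_from(data, offset(3, 0))[0]
print(cell_2_1, cell_3_0)   # 42.0 43.0
```

Even in the second style the operating system is still paging data between disk and memory behind the scenes, and the file still has to be opened first, so this is only a partial step toward the flat address space described above.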

Is this notion of merging memory and storage an attractive prospect or a nightmare? I’m not sure. There are some huge potential problems. For safety and sanity we generally want to limit which programs can alter which documents. Those rules are enforced by the file system, and they would have to be re-engineered to work in the memory-mapped environment.

Perhaps more troubling is the cognitive readjustment required by such a change in architecture. Do we really want everything at our fingertips all the time? I find it comforting to think of stored files as static objects, lying dormant on a disk drive, out of harm’s way; open documents, subject to change at any instant, require a higher level of alertness. I’m not sure I’m ready for a more fluid and frenetic world where documents are laid aside but never put away. But I probably said the same thing 30 years ago when I first confronted a machine capable of running multiple programs at once (anyone remember MultiFinder?).

The dichotomy between temporary memory and permanent storage is certainly not something built into the human psyche. I’m reminded of this whenever I help a neophyte computer user. There’s always an incident like this:

“I was writing a letter last night, and this morning I can’t find it. It’s gone.”

“Did you save the file?”

“Save it? From what? It was right there on the screen when I turned the machine off.”

Finally the big questions: Will we ever get our petabyte drives? How long will it take? What sorts of stuff will we keep on them when the day finally comes?

The last time I tried to predict the future of mass storage, extrapolating from recent trends led me far astray. I don’t want to repeat that mistake, but the best I can suggest is a longer-term baseline. Over the past 50 years, the areal density of mass-storage media has increased by seven orders of magnitude, from about \(10^5\) bits per square inch to about \(10^{12}\). That works out to about seven years for a tenfold increase, on average. If that rate is an accurate predictor of future growth, we can expect to go from the present 10 terabytes to 1 petabyte in about 15 years. But I would put big error bars around that number.
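The long-term baseline can be worked out explicitly. This sketch uses the round figures from the paragraph above and assumes, as the estimate implicitly does, that drive capacity scales in step with areal density; the variable names are illustrative.

```python
import math

years = 50
density_then = 1e5    # bits per square inch, roughly 50 years ago
density_now = 1e12    # bits per square inch, today

orders = math.log10(density_now / density_then)   # orders of magnitude gained
years_per_tenfold = years / orders
annual_growth = 10 ** (1 / years_per_tenfold)     # implied yearly factor

# Assumption: capacity tracks areal density, so going from 10 TB to 1 PB
# requires the same hundredfold (two orders of magnitude) gain.
target_orders = math.log10(1e15 / 10e12)
years_needed = target_orders * years_per_tenfold

print(f"{years_per_tenfold:.1f} years per tenfold increase")   # 7.1
print(f"{annual_growth - 1:.0%} growth per year")              # 38%
print(f"{years_needed:.1f} years from 10 TB to 1 PB")          # 14.3
```

An average gain of about 38 percent a year is well short of the annual doubling seen between 1997 and 2002, which is why the petabyte drive recedes from 2016 to roughly 2030 on this baseline.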

I’m even less sure about how those storage units will be used, if in fact they do materialize. In 2002 my skepticism about filling up a terabyte of personal storage was based on the limited bandwidth of the human sensory system. If the documents stored on your disk are ultimately intended for your own consumption, there’s no point in keeping more text than you can possibly read in a lifetime, or more music than you can listen to, or more pictures than you can look at. I’m now willing to concede that a terabyte of information may not be beyond human capacity to absorb. But a petabyte? Surely no one can read a billion books or watch a million hours of movies.

This argument still seems sound to me, in the sense that the conclusion follows if the premise is correct. But I’m no longer so sure about the premise. Just because it’s my computer doesn’t mean that all the information stored there has to be meant for my eyes and ears. Maybe the computer wants to collect some data for its own purposes. Maybe it’s studying my habits or learning to recognize my voice. Maybe it’s gathering statistics from the refrigerator and washing machine. Maybe it’s playing go, or gossiping over some secret channel with the Debian machine across the alley.

We’ll see.