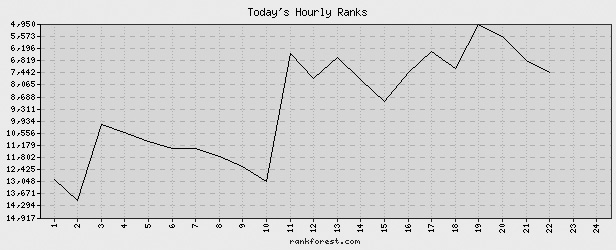

Investors are constantly checking the stock ticker, gamblers check the point spread, and everybody is forever checking their e-mail. For a writerly type like me, however, the unshakeable obsession is checking my Amazon sales rank. Amazon.com calculates a sales rank for every book listed on its Web site, and updates the ranking hourly. Here’s a graph of the hourly fluctuations in the ranking of my book Infrastructure: A Field Guide to the Industrial Landscape over the course of a single day last week:

At any given moment the current rank is listed in the “Product Details” section of the Amazon page for both the hardcover and the paperback editions. The graph above comes from a service called Rankforest, which also tracks both the hardcover and the paperback versions.

From an author’s point of view, there’s a lot of mystery in these numbers. How are the hourly rankings calculated, and what (if anything) do they mean? How do the rankings correlate with actual sales of the book? Amazon is not telling, and so authors and other interested parties have been left to speculate and experiment. The obvious experiment is to order a copy of the book and observe the effect of this purchase on the ranking. I welcome such experimentation with my book. (I would prefer that you do it with the hardcover edition, for which I get a more generous royalty.)

Morris Rosenthal of Foner Books seems to be the leading scryer of signs in this field. The interpretation of the rankings has also been discussed by Chris Anderson, the editor of Wired, in an article, a blog, and a book (current Amazon rank = 463, way above mine, dammit). It’s clear that the hourly ranking cannot simply reflect the number of copies sold within the past hour. After all, in any given hour the vast majority of books sell zero copies, and so there would be a gigantic tie for last place. The rankings also can’t be based in any simple way on total sales since publication, because the standings are much too volatile. The received wisdom is that each sale produces an uptick, followed by an exponential decay until the next sale.

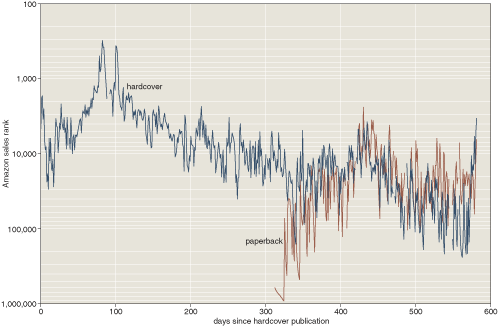

I find all these speculations fascinating, but they are not what I want to write about today. My topic is even more trivial. I’ve been tracking my Amazon ranking for more than a year and a half now, ever since the hardcover edition was first listed in September 2005. (The paperback came out about a year later.) Here’s what the trend looks like:

The graph records the highest (i.e., numerically smallest) rank noted on each day, with rare gaps when I happened to be offline all day. Without question it would be fairer to use the daily average rather than the daily peak. But an author’s ego is a fragile thing, and so I have gone out of my way to make the outlook as rosy as I could.

Here are some of the actual numbers I recorded, for the month of April 2007:

| date | HB (hardcover) | PB (paperback) |
|---|---|---|
| 2007-04-01 | 138263 | 47878 |
| 2007-04-02 | 192152 | 22728 |
| 2007-04-03 | 29146 | 41862 |
| 2007-04-04 | 73628 | 57155 |
| 2007-04-05 | 37172 | 16948 |
| 2007-04-06 | 127858 | 12779 |
| 2007-04-07 | 171363 | 25212 |
| 2007-04-08 | 55256 | 20770 |
| 2007-04-09 | | |
| 2007-04-10 | 36333 | 7121 |
| 2007-04-11 | 112423 | 19015 |
| 2007-04-12 | 183063 | 40015 |
| 2007-04-13 | 225457 | 46781 |
| 2007-04-14 | 239879 | 29259 |
| 2007-04-15 | 142252 | 16030 |
| 2007-04-16 | 24803 | 39200 |
| 2007-04-17 | 93485 | 18939 |
| 2007-04-18 | 173691 | 26434 |
| 2007-04-19 | 217440 | 19360 |
| 2007-04-20 | 44426 | 17276 |
| 2007-04-21 | 213765 | 26652 |
| 2007-04-22 | 39014 | 21699 |
| 2007-04-23 | 150012 | 18598 |
| 2007-04-24 | 61301 | 46268 |
| 2007-04-25 | 33800 | 22474 |
| 2007-04-26 | 10603 | 39335 |
| 2007-04-27 | 19746 | 15984 |
| 2007-04-28 | 14027 | 19324 |
| 2007-04-29 | 16527 | 25999 |
| 2007-04-30 | 5844 | 63439 |

Notice anything out of the ordinary? What I’m looking at is not the overall pattern of rising and falling magnitudes but rather the inner patterns of digits within the numbers. As I’ve been writing down these rankings over the past 20 months, I have had the persistent impression of a peculiar overabundance of repeated digits. Just in this small sample we have 47878, 22728, 192152, 57155, 25212, 36333, 44426, 33800, 39335, 25999, and lots more. Is there something going on here? Is Jeff Bezos broadcasting secret signals hidden in the digit patterns of the Amazon sales rankings?

I consider myself a reasonably sophisticated probabilist. I know I’m not supposed to be shocked when I bring together 23 people and find that two of them share a birthday. And I know that when people try to generate a random sequence of digits by plucking numbers out of their imagination, the result is almost always too homogeneous, with a deficiency of repetitions and other patterns of the kind I’m calling attention to. Thus I was prepared to believe that the patterns I perceived were conjured up out of nothing—phantom regularities in purely random data. Still, day after day, I would note numbers like 55256 and 20770 (which in fact appeared on the same day, one for the hardcover, one for the paperback), and wonder about the odds of such coincidences. Maybe I wasn’t just letting my imagination run away with me.

Finally, this past weekend, I could stand it no longer; I had to find out.

I’m going to have to give away the punchline right now, lest it come as a disappointment later: There is nothing unusual about those numbers. The distribution of digits—the number of pairs and triples and what-not—is well within the range expected for randomly generated numbers of the same size. In other words, I have confirmed the null hypothesis. Often, such a negative result is considered unpublishable, but this one cost me a fair amount of effort, and so I’m determined to get a blog item out of it for better or worse. If the conclusion itself is not very interesting, perhaps I can find something to say about the techniques and technologies that led to it.

How do you decide whether a number like 36333 is too unusual to be a product of random processes? I decided to analyze the numbers in my sample as if they were poker hands (with the rules of poker adapted to a deck of cards with ten ranks and no suits). I confined my attention to the five-digit numbers, which are the most numerous in my sample; of the 850 rankings I had recorded, 458 were in the range between 10,000 and 99,999. Then I wrote a five-line program that takes any such number and classifies it as one of seven types of poker hands:

- five of a kind (e.g., 77777)

- four of a kind (36333, 11119)

- full house (44555, 28288)

- three of a kind (57155, 20900)

- two pairs (97097, 28002)

- one pair (36739, 14912)

- bust (53208, 16897)

I decided to ignore straights; the poker hand 56789 is simply a “bust” according to this scheme of classification. The concept of a flush—all cards of the same suit—doesn’t arise, since there are no suits.
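The five-line program itself is not reproduced here, so what follows is only a sketch of how such a classifier might look in Python. The names `HANDS` and `classify` are invented for illustration. The idea is to count how many times each digit occurs and then match the sorted counts against the seven patterns listed above.

```python
from collections import Counter

# Sorted digit multiplicities -> hand name.
# (Hypothetical naming; this is not the author's original program.)
HANDS = {
    (5,): "five of a kind",
    (4, 1): "four of a kind",
    (3, 2): "full house",
    (3, 1, 1): "three of a kind",
    (2, 2, 1): "two pairs",
    (2, 1, 1, 1): "one pair",
    (1, 1, 1, 1, 1): "bust",
}


def classify(rank: int) -> str:
    """Classify a five-digit number as one of the seven poker hands."""
    if not 10_000 <= rank <= 99_999:
        raise ValueError("expected a five-digit number")
    # How many times does each digit appear, largest group first?
    shape = tuple(sorted(Counter(str(rank)).values(), reverse=True))
    return HANDS[shape]


for example in (77777, 36333, 44555, 57155, 97097, 36739, 53208, 56789):
    print(example, classify(example))
```

Because only the multiplicities matter, a straight such as 56789 falls through to "bust" without any special handling.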

When I ran my little program over the 458 five-digit Amazon sales rankings, here’s what I got:

| hand | number | frequency |
|---|---|---|
| five of a kind | 0 | 0.0000 |
| four of a kind | 3 | 0.0066 |
| full house | 7 | 0.0153 |
| three of a kind | 34 | 0.0742 |
| two pairs | 51 | 0.1114 |
| one pair | 233 | 0.5087 |
| bust | 130 | 0.2838 |
| TOTAL | 458 | 1.0000 |

The question now, of course, is what I should expect to see in such a data set, on the hypothesis that the digit patterns are random rather than contrived for some secret, nefarious purpose. Should I find it remarkable that half of the hands have a single pair, or that more than 70 percent have a pair or better? What about those seven full-house hands—should that abundance arouse suspicion? To begin answering questions like these, we need to calculate the probabilities of the various hands.

Having just boasted of my sophistication as a probabilist, I must now confess that the main thing I’ve learned about calculating probabilities is that getting the right answer is highly improbable. I understand the principle of the thing. It’s just a matter of counting. You count the “success” cases and divide by the total number of cases. But counting—even though it tends to come early in the mathematical curriculum—is not always easy.

In the Amazon poker problem, the first trap for the unwary is in counting the total number of cases. You might think that with five decimal digits, there would be $10^5$ possible arrangements, but in fact $10^4$ of those arrangements are excluded from the sample, because numbers in this context cannot have a leading digit of zero. Thus the denominator in the probability calculation will be 90,000 rather than 100,000.

I think I can trust myself to calculate the probability of a five-of-a-kind hand. The first card dealt must not be a zero but can be any of the other nine digits; thereafter, each subsequent digit must be identical to the first one. Thus the number of ways of forming a five-of-a-kind hand is 9×1×1×1×1, and the probability of this outcome is 9/90,000, or 0.0001. You can expect five of a kind in one hand out of every 10,000, if the deal is fair.

I believe this answer is correct, and I am proud of having obtained it; on the other hand, the five-of-a-kind calculation is by far the easiest case. Allow me to try to work out a harder problem: the odds of a full house. I invite you to listen in on my so-called thought process:

Well, a full house is a hand that matches the pattern aaabb. The first digit can be anything but zero, and so there are nine possibilities, but then the second and third digits have to match the first. The fourth digit must differ from the first three, and so there are eight candidates left…. No, wait…. This time zero is allowed, and so there are nine possibilities again. Then the fifth digit has to be identical to the fourth. That gives us the product 9×1×1×9×1, or 81 successes out of 90,000 total cases, for a probability of 0.0009…. Did I get that right?… Of course not. What I’ve calculated is the probability of seeing the pattern aaabb in that precise sequence; I am counting occurrences of 11100, 11122, 11133, …, 99988, but I am not including sequences such as 23233 or 45454. Okay. So one approach, now that I have the number of aaabb sequences, is to multiply by the number of ways of permuting that sequence. We can just take the aaa and intercalate the two b’s in all possible positions: bbaaa, babaa, baaba,…. No, wait…. The b could be a zero, and so it’s not always allowed to appear in the first position. Hmmm. This is not going well. For the time being, let’s forget about the prohibition of leading zeros, and we’ll correct for it later. That way we can consider all possible permutations of aaabb. As a first step, take aaa, where a can be any of the ten decimal digits, and place a b in all possible positions, allowing b to assume any value that differs from a, so that there are nine choices. There are four places to put the b: baaa, abaa, aaba and aaab. Now, for each of these four sequences, the second b can be placed in any of five positions, and so there are 4×5 = 20 permutations overall. Which means that the total number of full houses is…. Hold on. No, no, no, no, no. Not all those 20 permutations are distinguishable. If we start with baaa and insert a b in either the first or the second slot, we wind up with bbaaa in either case; this sequence should not be counted twice. So how many permutations are there, really? Offhand, I don’t see any way to count them that’s easier than direct enumeration: bbaaa, babaa, baaba, baaab, abbaa, ababa, abaab, aabba, aabab, aaabb. That’s ten cases. Let’s sum up. We know that a can take any of ten values and b has nine possible values, and there are ten ways of arranging three a’s and two b’s. Thus the total number of combinations is 10×9×10 = 900. But, don’t forget, now we have to subtract away all those sequences that start with a zero. For this purpose it doesn’t matter whether the first symbol is an a or a b; exactly 10 percent of the sequences will have a leading zero. Thus the number of full houses is 900 − 90 = 810. This gives a probability of 810/90,000 = 0.009.

I happen to know, from an independent calculation, that this answer is correct, and so it’s time to stop. If I didn’t know, however, I might well go on to ask whether we need to interchange the a’s and b’s—that is, consider the case of sequences aaabb where a has only nine allowed values and b has ten. (Why don’t we have to take that into account?)
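For readers who would rather see the arithmetic in one place, here is a small Python sketch of the final version of the full-house count. The variable names are mine, and the one shortcut not taken in the monologue is using the binomial coefficient C(5, 2) to get the ten arrangements instead of listing them by hand.

```python
from math import comb

# Ways to choose which two of the five positions hold the b's;
# the remaining three positions hold the a's.
arrangements = comb(5, 2)

# Ignoring the leading-zero rule: 10 choices for a, 9 for b.
unrestricted = 10 * 9 * arrangements

# Exactly one tenth of those sequences begin with a zero.
leading_zero = unrestricted // 10

full_houses = unrestricted - leading_zero
probability = full_houses / 90_000

print(arrangements, unrestricted, full_houses, probability)
```

The one-tenth correction relies on the symmetry among the ten digits: before the leading-zero rule is imposed, every digit is equally likely to land in the first position.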

I am mildly embarrassed to put this lurching, stumbling, caricature of a probability calculation on public exhibition, and yet it is a fair description of how I often struggle with a problem of this kind. Am I the only one who suffers so? Not everyone does. I know people who could carry out the same computation quite deftly; they command the intuition, the spürkraft, to zero in immediately on the right approach, like a chess player who doesn’t waste time considering fruitless moves. I admire that kind of finesse, but I don’t possess it.

On the other hand, I know something else. I know another way to solve the problem, with less fuss. As I mentioned above, I have already cooked up a little program that can take any five-digit Amazon rank and classify it into one of the seven categories of poker hands. In milliseconds I can run that program on all possible five-digit numbers; after all, there are only 90,000 of them, and it’s quite easy to generate all of them in sequence. Then I can just count the number of hands in each category, and all the probabilities come tumbling out in a neatly formatted table:

| hand | number | frequency |
|---|---|---|
| five of a kind | 9 | 0.0001 |
| four of a kind | 405 | 0.0045 |
| full house | 810 | 0.0090 |
| three of a kind | 6480 | 0.0720 |
| two pairs | 9720 | 0.1080 |
| one pair | 45360 | 0.5040 |
| bust | 27216 | 0.3024 |
| TOTAL | 90000 | 1.0000 |
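A brute-force tally of this kind takes only a few lines. The sketch below is an illustration in Python, not the program that produced the table; `HANDS`, `classify`, and `tally` are names I have made up. It classifies every number from 10,000 through 99,999 by its digit multiplicities and counts the results.

```python
from collections import Counter

# Sorted digit multiplicities -> hand name (hypothetical naming).
HANDS = {
    (5,): "five of a kind",
    (4, 1): "four of a kind",
    (3, 2): "full house",
    (3, 1, 1): "three of a kind",
    (2, 2, 1): "two pairs",
    (2, 1, 1, 1): "one pair",
    (1, 1, 1, 1, 1): "bust",
}


def classify(rank: int) -> str:
    """Name the poker hand formed by the digits of a five-digit number."""
    shape = tuple(sorted(Counter(str(rank)).values(), reverse=True))
    return HANDS[shape]


# Visit all 90,000 five-digit numbers (no leading zeros) and count each hand.
tally = Counter(classify(n) for n in range(10_000, 100_000))
total = sum(tally.values())

print(f"{'hand':<16}{'number':>8}{'frequency':>11}")
for hand in HANDS.values():
    print(f"{hand:<16}{tally[hand]:>8}{tally[hand] / total:>11.4f}")
print(f"{'TOTAL':<16}{total:>8}{1.0:>11.4f}")
```

Starting the range at 10,000 builds the leading-zero rule into the enumeration, so no after-the-fact correction is needed.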

If Fermat or Pascal or the Bernoullis were asked their opinion on this approach to probability, something tells me they would find it distasteful. I’m ambivalent myself. Returning to the chess analogy, this is the equivalent of the machine that beats a grandmaster by brute force, scanning millions of positions but knowing nothing of strategy. You can win that way, but you don’t get any style points. More important, the method is frustratingly opaque. I get the answers, and I have reasonable confidence that they’re correct, but the computation gives me no understanding of why they’re correct. Still another objection is that the method does not scale well. If my Amazon rankings had ten digits instead of five (perish the thought!), I’d have a hard time classifying the nine billion possible hands. (But then again I’m not sure the more analytic method scales all that well either.)

Setting aside these qualms, we can now take the predictions of theory and the observations of the Amazon sample and compare them side by side:

| hand | prediction | observation |
|---|---|---|
| five of a kind | 0.0001 | 0.0000 |
| four of a kind | 0.0045 | 0.0066 |
| full house | 0.0090 | 0.0153 |
| three of a kind | 0.0720 | 0.0742 |
| two pairs | 0.1080 | 0.1114 |
| one pair | 0.5040 | 0.5087 |
| bust | 0.3024 | 0.2838 |
| TOTAL | 1.0000 | 1.0000 |

Some of these frequencies match quite closely (three of a kind, one pair); others are a bit off the mark (full house, bust). In a finite sample, of course, you would never expect an exact match to the theoretical frequencies, but are the discrepancies we’re seeing significant or not? My personal instinct says that there’s nothing amiss here, that the observed frequencies are consistent with the null hypothesis. In other words, the rankings could just as well be random numbers. The old rule of thumb that the variation should be less than the square root of the observation leads to the same conclusion. We could quantify these intuitions by calculating variances or standard deviations, doing a $\chi^2$ test, and so on. But if I can barely calculate a simple probability, can I be trusted to navigate all those treacherous subtleties such as choosing the correct number of degrees of freedom?

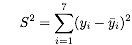

Again there’s another way to go about it, relying on lots of ignorant computation to replace a little smart mathematics. The question we want to answer is this: Given a set of 458 randomly generated five-digit numbers, what is the probability that the random set will differ from the predicted frequencies by at least as much as the observed Amazon set? Suppose we measure distance from the theoretical prediction in terms of the sum of the squared differences:

$$S^2 = \sum_{i=1}^{7} \left(o_i - p_i\right)^2$$

where the index $i$ ranges over the seven types of poker hand, and the expression in parentheses is the difference between the observed frequency $o_i$ and the predicted frequency $p_i$ for each type of hand. (Technical note: The seven numbers entering into this statistic are not independent. For example, if full-house hands are in surfeit, there has to be a compensating deficiency somewhere else. How much should I worry about this?) For the actual Amazon results, $S^2$ works out to $4.267 \times 10^{-4}$. Now we can generate lots of batches of random Amazon poker hands, each batch consisting of 458 five-digit numbers, and calculate $S^2$ for each batch. What proportion of them will have an $S^2$ value exceeding $4.267 \times 10^{-4}$? The answer, based on half a million batches, is 80 percent. Thus all the anomalies I thought I was seeing in those numbers are pure delusion.
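The experiment can be sketched in Python along the following lines. This is a reconstruction under stated assumptions, not the original program: the names are invented, the seed is arbitrary, and the run uses only 2,000 batches rather than half a million, so the proportion it prints is a rough estimate that should land in the neighborhood of the 80 percent quoted above.

```python
import random
from collections import Counter

# Sorted digit multiplicities -> hand name (hypothetical naming).
HANDS = {
    (5,): "five of a kind", (4, 1): "four of a kind", (3, 2): "full house",
    (3, 1, 1): "three of a kind", (2, 2, 1): "two pairs",
    (2, 1, 1, 1): "one pair", (1, 1, 1, 1, 1): "bust",
}


def classify(rank: int) -> str:
    """Name the poker hand formed by the digits of a five-digit number."""
    return HANDS[tuple(sorted(Counter(str(rank)).values(), reverse=True))]


# Classify all 90,000 five-digit numbers once. Drawing uniformly from this
# list is the same as drawing a random five-digit number and classifying it.
hand_of = [classify(n) for n in range(10_000, 100_000)]
predicted = {h: c / len(hand_of) for h, c in Counter(hand_of).items()}


def s_squared(counts, size):
    """Sum over the seven hands of (observed frequency - predicted)^2."""
    return sum((counts.get(h, 0) / size - p) ** 2 for h, p in predicted.items())


# Hand counts observed in the 458 five-digit Amazon rankings.
observed = {
    "five of a kind": 0, "four of a kind": 3, "full house": 7,
    "three of a kind": 34, "two pairs": 51, "one pair": 233, "bust": 130,
}
BATCH_SIZE = 458
s2_observed = s_squared(observed, BATCH_SIZE)

BATCHES = 2_000             # assumption: far fewer than the original run
rng = random.Random(2007)   # assumption: arbitrary seed for repeatability
exceed = sum(
    s_squared(Counter(rng.choices(hand_of, k=BATCH_SIZE)), BATCH_SIZE) > s2_observed
    for _ in range(BATCHES)
)

print(f"observed S^2 = {s2_observed:.3e}")
print(f"share of random batches with larger S^2 = {exceed / BATCHES:.2f}")
```

Comparing each random batch against the observed statistic sidesteps the question of degrees of freedom, because the simulated batches carry the same dependence among the seven categories as the real data.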

Needless to say, I was rooting for another outcome. I would have enjoyed finding something spooky and inexplicable in the Amazon rankings. Instead, all I’ve proved is that my intuition about what random numbers look like is not to be trusted. This is a disappointment, but maybe I can salvage something from the ruins. Perhaps I can claim the discovery that the Amazon rankings are a fairly good source of random numbers.

Large-scale differences and movements in the rankings are surely not random. It’s not purely a matter of chance that my book’s current rank is 43,888 (what an interesting number!), while some preposterous tale about a pubescent wizard occupies the top of the list—even though that book hasn’t actually been published yet. (Do I sound bitter?) The rankings are nonrandom in another way as well: The first digits are not uniformly distributed but have a Benford or Zipf distribution, with an excess of ones and a shortage of nines. It’s interesting that even though the randomly generated numbers do not share this property—the first digits have a uniform distribution over the range 1 through 9—the poker-hand analysis shows that the frequency of pairs, triples, and other patterns is identical in the two data sets. Thus the digits to the right of the first digit do seem to have the statistical properties of random numbers. The source of the randomness is presumably the hourly reshuffling of the rankings by the actions of thousands of Amazon shoppers—actions that are all too predictable in the aggregate (curse you, Harry Potter) but quite random in detail.

Perhaps it’s worth noting that when the RAND Corporation prepared their famous book A Million Random Digits with 100,000 Normal Deviates in 1955, they also employed the poker test as a measure of randomness. I’m relieved to find that their calculation of the theoretical frequency of the seven types of hands agrees with mine. (They don’t say how they performed the calculation.) The current Amazon sales rank for the book is 2,668,928 (hardcover) and 281,270 (paperback).