One Thursday afternoon last month, dozens of fires and explosions rocked three towns along the Merrimack River in Massachusetts. By the end of the day 131 buildings were damaged or destroyed, one person was killed, and more than 20 were injured. Suspicion focused immediately on the natural gas system. It looked like a pressure surge in the pipelines had driven gas into homes where stoves, heaters, and other appliances were not equipped to handle the excess pressure. Earlier this week the National Transportation Safety Board released a brief preliminary report supporting that hypothesis.

I had believed such a catastrophe was all but impossible. The natural gas industry has many troubles, including chronic leaks that release millions of tons of methane into the atmosphere, but I had thought that pressure regulation was a solved problem. Even if someone turned the wrong valve, failsafe mechanisms would protect the public. Evidently not. (I am not an expert on natural gas. While working on my book *Infrastructure*, I did some research on the industry and the technology, toured a pipeline terminal, and spent a day with a utility crew installing new gas mains in my own neighborhood. The pages of the book that discuss natural gas are available online.)

The hazards of gas service were already well known in the 19th century, when many cities built their first gas distribution systems. Gas in those days was not “natural” gas; it was a product manufactured by roasting coal, or sometimes the tarry residue of petroleum refining, in an atmosphere depleted of oxygen. The result was a mixture of gases, including methane and other hydrocarbons but also a significant amount of carbon monoxide. Because of the CO content, leaks could be deadly even if the gas didn’t catch fire.

Every city needed its own gasworks, because there were no long-distance pipelines. The output of the plant was accumulated in a gasholder, a gigantic tank that confined the gas at low pressure—less than one pound per square inch above atmospheric pressure (a unit of measure known as pounds per square inch gauge, or psig). The gas was gently wafted through pipes laid under the street to reach homes at a pressure of 1/4 or 1/2 psig. Overpressure accidents were unlikely because the entire system worked at the same modest pressure. As a matter of fact, the greater risk was underpressure. If the flow of gas was interrupted even briefly, thousands of pilot lights would go out; then, when the flow resumed, unburned toxic gas would seep into homes. Utility companies worked hard to ensure that would never happen.

Gasholders looming over a neighborhood in Genoa, Italy, once held manufactured gas for use at a steel mill. The photograph was made in 2001; Google Maps shows the tanks have since been demolished.

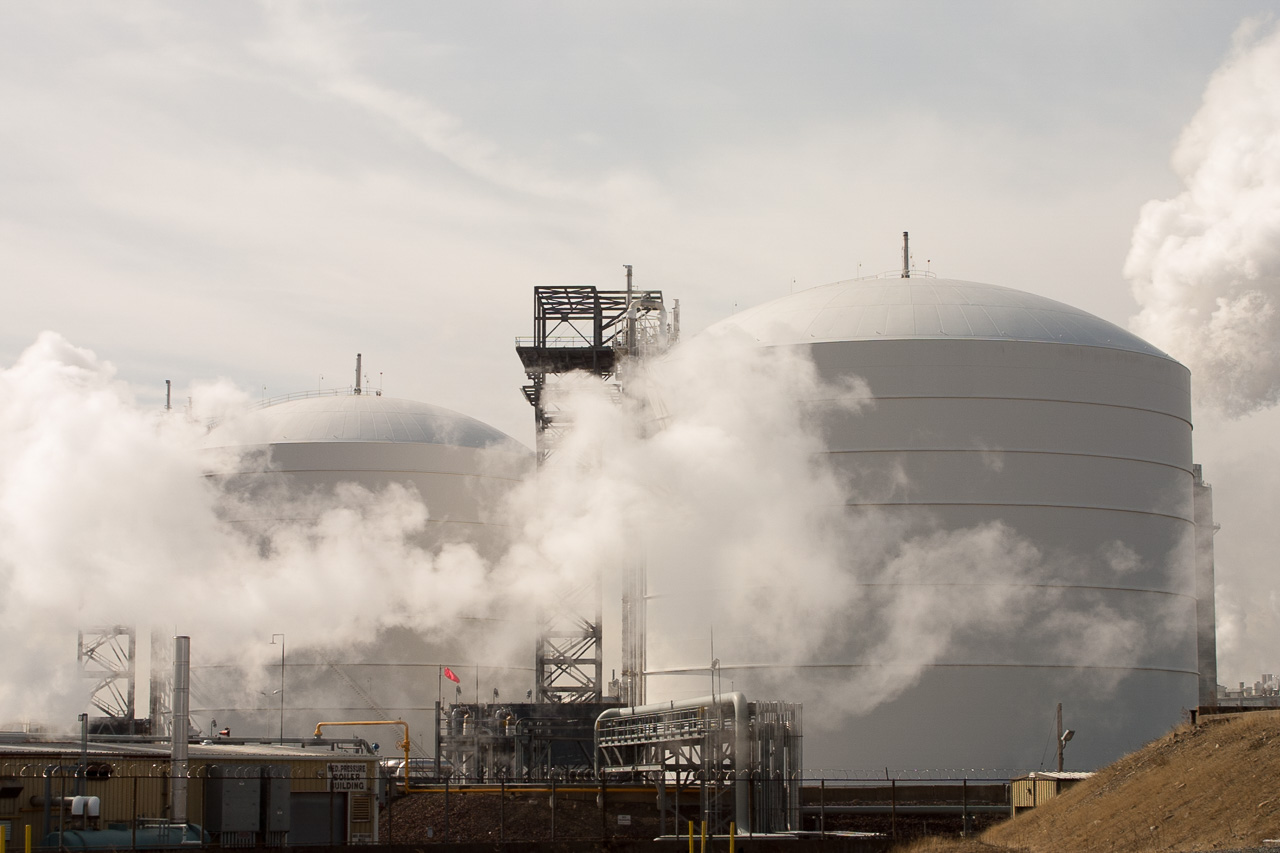

Gas technology has evolved a great deal since the gaslight era. Long-distance pipelines carry natural gas across continents at pressures of 1,000 psig or more. At the destination, the gas is stored in underground cavities or as a cryogenic liquid. It enters the distribution network at pressures in the neighborhood of 100 psig. The higher pressures allow smaller-diameter pipes to serve larger territories. But the pressure must still be reduced to less than 1 psig before the gas is delivered to the customer. Having multiple pressure levels complicates the distribution system and requires new safeguards against the risk of high-pressure gas going where it doesn’t belong. Apparently those safeguards didn’t work last month in the Merrimack Valley.

Cryogenic storage tanks in Everett, Mass., near Boston, hold liquefied natural gas that supplies utilities in surrounding communities.

The gas system in that part of Massachusetts is operated by Columbia Gas, a subsidiary of a company called NiSource, with headquarters in Indiana. At the time of the conflagration, contractors for Columbia were upgrading distribution lines in the city of Lawrence and in two neighboring towns, Andover and North Andover. The two-tier system had older low-pressure mains—including some cast-iron pipes dating back to the early 1900s—fed by a network of newer lines operating at 75 psig. Fourteen regulator stations handled the transfer of gas between systems, maintaining a pressure of 1/2 psig on the low side.
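The two tiers of this system differ by more than two orders of magnitude in pressure, which is worth making concrete. Low pressures in gas distribution are often quoted in inches of water column rather than psig. The sketch below assumes a conversion factor of about 27.68 inches of water per psi (the exact figure depends slightly on the water temperature assumed); the function names are illustrative only.

```python
# Assumed approximate conversion: 1 psi is about 27.68 inches of water column.
INWC_PER_PSI = 27.68


def psi_to_inwc(psi: float) -> float:
    """Convert a gauge pressure in psig to inches of water column."""
    return psi * INWC_PER_PSI


def inwc_to_psi(inwc: float) -> float:
    """Convert inches of water column to psig."""
    return inwc / INWC_PER_PSI


high_side_psig = 75.0  # newer feeder lines in the two-tier system
low_side_psig = 0.5    # pressure the regulator stations maintain on the low side

ratio = high_side_psig / low_side_psig
print(f"High side is {ratio:.0f} times the low-side set point")
print(f"Low-side set point: {psi_to_inwc(low_side_psig):.1f} in. w.c.")
print(f"High side: {psi_to_inwc(high_side_psig):.0f} in. w.c.")
```

A regulator that fails wide open therefore exposes appliances designed for a few inches of water column to a supply line at 150 times that pressure.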

The NTSB preliminary report gives this account of what happened around 4 p.m. on September 13:

> The contracted crew was working on a tie-in project of a new plastic distribution main and the abandonment of a cast-iron distribution main. The distribution main that was abandoned still had the regulator sensing lines that were used to detect pressure in the distribution system and provide input to the regulators to control the system pressure. Once the contractor crews disconnected the distribution main that was going to be abandoned, the section containing the sensing lines began losing pressure.
>
> As the pressure in the abandoned distribution main dropped about 0.25 inches of water column (about 0.01 psig), the regulators responded by opening further, increasing pressure in the distribution system. Since the regulators no longer sensed system pressure they fully opened allowing the full flow of high-pressure gas to be released into the distribution system supplying the neighborhood, exceeding the maximum allowable pressure.

When I read those words, I groaned. The cause of the accident was not a leak or an equipment failure or a design flaw or a worker turning the wrong valve. The pressure didn’t just creep up beyond safe limits while no one was paying attention; the pressure was driven up by the automatic control system meant to keep it in bounds. The pressure regulators were “trying” to do the right thing. Sensor readings told them the pressure was falling, and so the controllers took corrective action to keep the gas flowing to customers. But the feedback loop the regulators relied on was not in fact a loop. They were measuring pressure in one pipe and pumping gas into another.
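The difference between a loop and a not-quite-loop can be seen in a toy simulation. This is a minimal sketch under loudly stated assumptions: a one-line discrete-time model of the live main, an old main that simply bleeds down once disconnected, and a regulator modeled as an integral controller (it keeps opening as long as the pressure it senses is below the set point). Every parameter is hypothetical and chosen only so that a fully open valve drives the live main toward 75 psig.

```python
def simulate(sensor_on_live_main: bool, steps: int = 1000, cutover: int = 100) -> float:
    """Return the final pressure (psig) in the live low-pressure main.

    Hypothetical toy model, not a model of the real Lawrence system:
      live main: p_live <- 0.9 * p_live + 7.5 * u, with valve opening u in [0, 1]
      old main:  disconnected at `cutover`, then loses 5% of its pressure per step
    """
    setpoint = 0.5                # psig, low-side target
    k_in, k_out = 7.5, 0.1        # fully open valve drives p_live toward 75 psig
    ki = 0.01                     # integral gain (hypothetical)
    u = k_out * setpoint / k_in   # valve opening that holds the set point
    p_live = setpoint
    p_old = setpoint

    for t in range(steps):
        if t == cutover:
            p_old = p_live        # old main is cut off holding its current pressure
        elif t > cutover:
            p_old *= 0.95         # ...and then bleeds down
        # Which pipe does the sensing line actually read?
        if sensor_on_live_main or t < cutover:
            sensed = p_live
        else:
            sensed = p_old
        # Integral action: open further while sensed pressure is low, clamp to valve limits.
        u = min(1.0, max(0.0, u + ki * (setpoint - sensed)))
        p_live = (1 - k_out) * p_live + k_in * u
    return p_live


print(f"sensors moved to new main: {simulate(True):.2f} psig")
print(f"sensors left on old main:  {simulate(False):.1f} psig")
```

With the sensing line on the pipe being fed, the pressure stays at the 0.5 psig set point. With it left on the abandoned pipe, the controller sees pressure falling no matter what it does, opens the valve all the way, and the live main climbs toward the full 75 psig supply.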

The conjectured cause of the fires and explosions in Lawrence and nearby towns is a misconfigured pressure-control system, according to the NTSB preliminary report. Service was switched to a new low-pressure gas main, but the pressure regulator continued to monitor sensors attached to the old pipeline, now abandoned and empty.

The NTSB’s preliminary report offers no conclusions or recommendations, but it does note that the contractor in Lawrence was following a “work package” prepared by Columbia Gas, which did not mention moving or replacing the pressure sensors. Thus if you’re looking for someone to blame, there’s a hint about where to point your finger. The clue is less useful, however, if you’re hoping to understand the disaster and prevent a recurrence. “Make sure all the parts are connected” is doubtless a good idea, but better still is building a failsafe system that will not burn the city down when somebody goofs.

Suppose you’re taking a shower, and the water feels too warm. You nudge the mixing valve toward cold, but the water gets hotter still. When you twist the valve a little further in the same direction, the temperature rises again, and the room fills with steam. In this situation, you would surely not continue turning the knob until you were scalded. At some point you would get out of the shower, shut off the water, and investigate. Maybe the controls are mislabeled. Maybe the plumber transposed the pipes.

Since you do so well controlling the shower, let’s put you in charge of regulating the municipal gas service. You sit in a small, windowless room, with your eyes on a pressure gauge and your hand on a valve. The gauge has a pointer indicating the measured pressure in the system, and a red dot (called a bug) showing the desired pressure, or set point. If the pointer falls below the bug, you open the valve a little to let in more gas; if the pointer drifts up too high, you close the valve to reduce the flow. (Of course there’s more to it than just open and close. For a given deviation from the set point, how far should you twist the valve handle? Control theory answers this question.)
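The simplest textbook answer to "how far should you twist the handle?" is proportional control: the correction is proportional to the deviation from the set point. The sketch below is a generic proportional law, not a description of how Columbia Gas regulators actually work; the `bias` and `kp` parameters are hypothetical tuning values.

```python
def valve_command(measured: float, setpoint: float, bias: float, kp: float) -> float:
    """Proportional control law: open the valve in proportion to the error.

    bias -- valve opening (0..1) that holds the set point under normal load
    kp   -- proportional gain: how much to open per psig of error
    """
    error = setpoint - measured         # positive when pressure is too low
    opening = bias + kp * error
    return min(1.0, max(0.0, opening))  # a real valve can't go past fully shut or fully open


# Pressure a little low: open somewhat more than the bias position.
print(valve_command(measured=0.4, setpoint=0.5, bias=0.3, kp=2.0))
```

A purely proportional law leaves a steady offset when demand shifts away from the load assumed by `bias`, which is why practical controllers usually add an integral term (PI or PID control).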

It’s worth noting that you could do this job without any knowledge of what’s going on outside the windowless room. You needn’t give a thought to the nature of the “plant,” the system under control. What you’re controlling is the position of the needle on the gauge; the whole gas distribution network is just an elaborate mechanism for linking the valve you turn with the gauge you watch. Many automatic control systems operate in exactly this mindless mode. And they work fine, until they don’t.

As a sentient being, you do in fact have a mental model of what’s happening outside. Just as the control law tells you how to respond to changes in the state of the plant, your model of the world tells you how the plant should respond to your control actions. For example, when you open the valve to increase the inflow of gas, you expect the pressure to increase. (Or, in some circumstances, to decrease more slowly. In any event, the second derivative of pressure with respect to time should be positive.) If that doesn’t happen, the control law would call for making an even stronger correction, opening the valve further and forcing still more gas into the pipeline. But you, in your wisdom, might pause to consider the possible causes of this anomaly. Perhaps pressure is falling because a backhoe just ruptured a gas main. Or, as in Lawrence last month, maybe the pressure isn’t actually falling at all; you’re looking at sensors plugged into the wrong pipes. Opening the valve further could make matters worse.
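The expectation in that parenthetical can be turned into a crude automatic check: after the valve opens, the rate of change of pressure should go up. The sketch below assumes evenly spaced pressure samples and a known sample index at which the valve was opened; the function name and the `margin` parameter are hypothetical. It compares average slopes before and after the action, a discrete stand-in for the sign of the second derivative. Real sensor data are noisy, so a practical version would need a nonzero margin and longer windows.

```python
from typing import Sequence


def response_is_plausible(pressures: Sequence[float], valve_opened_at: int,
                          margin: float = 0.0) -> bool:
    """Return True if opening the valve bent the pressure curve upward.

    pressures       -- evenly spaced samples of the sensed pressure
    valve_opened_at -- index of the sample at which the valve was opened further
    margin          -- how much the slope must improve to count (hypothetical)
    """
    i = valve_opened_at
    if not 1 <= i <= len(pressures) - 2:
        raise ValueError("need at least one sample on each side of the valve action")

    def mean_slope(xs: Sequence[float]) -> float:
        return (xs[-1] - xs[0]) / (len(xs) - 1)

    slope_before = mean_slope(pressures[: i + 1])
    slope_after = mean_slope(pressures[i:])
    return slope_after - slope_before > margin


# Pressure still falling, but more slowly after the valve opens: plausible.
print(response_is_plausible([1.0, 0.9, 0.8, 0.75, 0.72], valve_opened_at=2))
# Pressure falls faster after the valve opens: something is wrong outside the room.
print(response_is_plausible([1.0, 0.9, 0.8, 0.6, 0.3], valve_opened_at=2))
```

A controller that fails this check has grounds to stop escalating its correction and raise an alarm instead.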

Could we build an automatic control system with this kind of situational awareness? Control theory offers many options beyond the simple feedback loop. We might add a supervisory loop that essentially controls the controller and sets the set point. And there is an extensive literature on predictive control, where the controller has a built-in mathematical model of the plant, and uses it to find the best trajectory from the current state to the desired state. But neither of these techniques is commonly used for the kind of last-ditch safety measures that might have saved those homes in the Merrimack Valley. More often, when events get too weird, the controller is designed to give up, bail out, and leave it to the humans. That’s what happened in Lawrence.

> Minutes before the fires and explosions occurred, the Columbia Gas monitoring center in Columbus, Ohio [probably a windowless room], received two high-pressure alarms for the South Lawrence gas pressure system: one at 4:04 p.m. and the other at 4:05 p.m. The monitoring center had no control capability to close or open valves; its only capability was to monitor pressures on the distribution system and advise field technicians accordingly. Following company protocol, at 4:06 p.m., the Columbia Gas controller reported the high-pressure event to the Meters and Regulations group in Lawrence. A local resident made the first 9-1-1 call to Lawrence emergency services at 4:11 p.m.

Columbia Gas shut down the regulator at issue by about 4:30 p.m.

I admit to a morbid fascination with stories of technological disaster. I read NTSB accident reports the way some people consume murder mysteries. The narratives belong to the genre of tragedy. In using that word I don’t mean just that the loss of life and property is very sad. These are stories of people with the best intentions and with great skill and courage, who are nonetheless overcome by forces they cannot master. The special pathos of technological tragedies is that the engines of our destruction are machines that we ourselves design and build.

Looking on the sunnier side, I suspect that technological tragedies are more likely than Oedipus Rex or Hamlet to suggest a practical lesson that might guide our future plans. Let me add two more examples that seem to have plot elements in common with the Lawrence gas disaster.

First, the meltdown at the Three Mile Island nuclear power plant in 1979. In that event, a maintenance mishap was detected by the automatic control system, which promptly shut down the reactor, just as it was supposed to do, and started emergency pumps to keep the uranium fuel rods covered with cooling water. But in the following minutes and hours, confusion reigned in the control room. Because of misleading sensor readings, the crowd of operators and engineers believed the water level in the reactor was too high, and they struggled mightily to lower it. Later they realized the reactor had been running dry all along.

Second, the crash of Air France 447, an overnight flight from Rio de Janeiro to Paris, in 2009. In this case the trouble began when ice at high altitude clogged pitot tubes, the sensors that measure airspeed. With inconsistent and implausible speed inputs, the autopilot and flight-management systems disengaged and sounded an alarm, basically telling the pilots “You’re on your own here.” Unfortunately, the pilots also found the instrument data confusing, and formed the erroneous opinion that they needed to pull the nose up and climb steeply. The aircraft entered an aerodynamic stall and fell tail-first into the ocean with the loss of all on board.

In these events no mechanical or physical fault made an accident inevitable. In Lawrence the pipes and valves functioned normally, as far as I can tell from press reports and the NTSB report. Even the sensors were working; they were just in the wrong place. At Three Mile Island there were multiple violations of safety codes and operating protocols; nevertheless, if either the automatic or the human controllers had correctly diagnosed the problem, the reactor would have survived. And the Air France aircraft over the Atlantic was airworthy to the end. It could have flown on to Paris if only there had been the means to level the wings and point it in the right direction.

All of these events feel like unnecessary disasters—if we were just a little smarter, we could have avoided them—but the fires in Lawrence are particularly tormenting in this respect. With an aircraft 35,000 feet over the ocean, you can’t simply press Pause when things don’t go right. Likewise a nuclear reactor has no safe-harbor state; even after you shut down the fission chain reaction, the core of the reactor generates enough heat to destroy itself. But Columbia Gas faced no such constraints in Lawrence. Even if the pressure-regulating system is not quite as simple as I have imagined it, there is always an escape route available when parameters refuse to respond to control inputs. You can just shut it all down. Safeguards built into the automatic control system could do that a lot more quickly than phone calls from Ohio. The service interruption would be costly for the company and inconvenient for the customers, but no one would lose their home or their life.
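Here is one way such a shut-it-all-down safeguard might be expressed. This is a hypothetical sketch, not a description of any system Columbia Gas used: the class, its parameters, and the 1.0 psig threshold in the example are assumptions. It trips on either of two conditions: an independent high-pressure reading (like the alarms the Ohio monitoring center received) or a valve that has been wide open for too long while the controller's own sensed pressure still sits below the set point. Once tripped, it stays tripped, so the valve remains closed until a human investigates.

```python
from dataclasses import dataclass


@dataclass
class Interlock:
    """Hypothetical last-ditch safeguard that closes the regulator outright.

    max_pressure          -- maximum allowable low-side pressure (psig), from an
                             independent sensor rather than the controller's own
    max_saturated_samples -- consecutive samples the valve may stay fully open
                             while the sensed pressure stays below set point
    """
    max_pressure: float
    max_saturated_samples: int
    saturated_samples: int = 0
    tripped: bool = False

    def update(self, monitored_psig: float, valve_opening: float,
               sensed_below_setpoint: bool) -> bool:
        """Feed one sample; return True if the valve must be shut."""
        if monitored_psig > self.max_pressure:
            self.tripped = True  # overpressure seen downstream
        if valve_opening >= 1.0 and sensed_below_setpoint:
            self.saturated_samples += 1  # control input maxed out, no response
        else:
            self.saturated_samples = 0
        if self.saturated_samples >= self.max_saturated_samples:
            self.tripped = True
        return self.tripped  # latched: stays True until a human resets it


lock = Interlock(max_pressure=1.0, max_saturated_samples=3)
print(lock.update(monitored_psig=0.5, valve_opening=0.3, sensed_below_setpoint=False))
print(lock.update(monitored_psig=2.0, valve_opening=0.3, sensed_below_setpoint=False))
```

The latch is a deliberate choice: like stepping out of the misbehaving shower, the safeguard shuts things off and leaves the diagnosis to someone who can look outside the windowless room.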

Control theory and control engineering are now embarking on their greatest adventure ever: the design of self-driving cars and trucks. Next year we may see the first models without a steering wheel or a brake pedal—there goes the option of asking the driver (passenger?) to take over. I am rooting for this bold undertaking to succeed. I am also reminded of a term that turns up frequently in discussions of Athenian tragedy: hubris.