Controlled Flight into Terrain is the aviation industry’s term for what happens when a properly functioning airplane plows into the ground because the pilots are distracted or disoriented. What a nightmare. Even worse, in my estimation, is Automated Flight into Terrain, when an aircraft’s control system forces it into a fatal nose dive despite the frantic efforts of the crew to save it. That is the conjectured cause of two recent crashes of new Boeing 737 MAX 8 airplanes. I’ve been trying to reason my way through to an understanding of how those accidents could have happened.

Disclaimer: The investigations of the MAX 8 disasters are in an early stage, so much of what follows is based on secondary sources—in other words, on leaks and rumors and the speculations of people who may or may not know what they’re talking about. As for my own speculations: I’m not an aeronautical engineer, or an airframe mechanic, or a control theorist. I’m not even a pilot. Please keep that in mind if you choose to read on.

The accidents

Early on the morning of October 29, 2018, Lion Air Flight 610 departed Jakarta, Indonesia, with 189 people on board. The airplane was a four-month-old 737 MAX 8—the latest model in a line of Boeing aircraft that goes back to the 1960s. Takeoff and climb were normal to about 1,600 feet, where the pilots retracted the flaps (wing extensions that increase lift at low speed). At that point the aircraft unexpectedly descended to 900 feet. In radio conversations with air traffic controllers, the pilots reported a “flight control problem” and asked about their altitude and speed as displayed on the controllers’ radar screens. Cockpit instruments were giving inconsistent readings. The pilots then redeployed the flaps and climbed to 5,000 feet, but when the flaps were stowed again, the nose dipped and the plane began to lose altitude. Over the next six or seven minutes the pilots engaged in a tug of war with their own aircraft, as they struggled to keep the nose level but the flight control system repeatedly pushed it down. In the end the machine won. The airplane plunged into the sea at high speed, killing everyone aboard.

The second crash happened March 10, when Ethiopian Airlines Flight 302 went down six minutes after taking off from Addis Ababa, killing 157. The aircraft was another MAX 8, just two months old. The pilots reported control problems, and data from a satellite tracking service showed sharp fluctuations in altitude. The similarities to the Lion Air crash set off alarm bells: If the same malfunction or design flaw caused both accidents, it might also cause more. Within days, the worldwide fleet of 737 MAX aircraft was grounded. Data recovered since then from the Flight 302 wreckage has reinforced the suspicion that the two accidents are closely related.

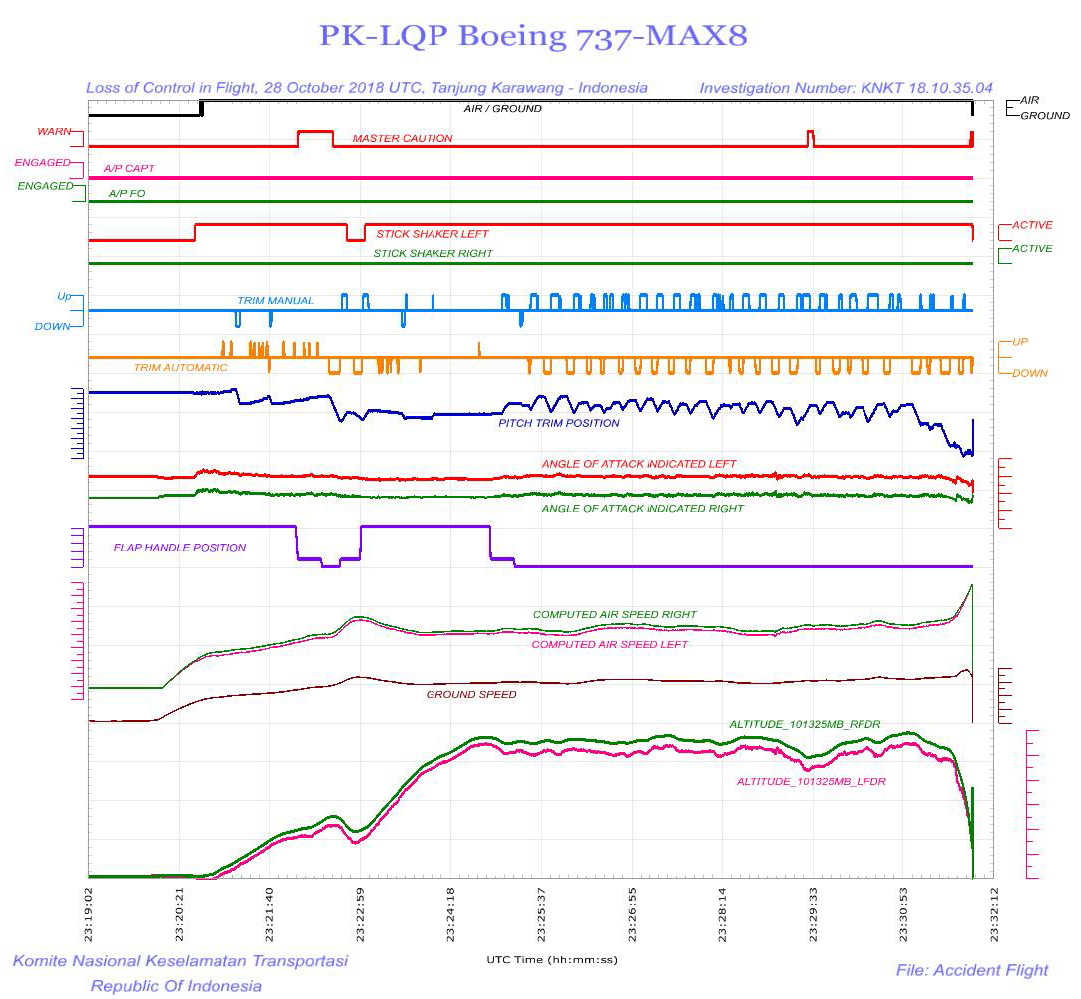

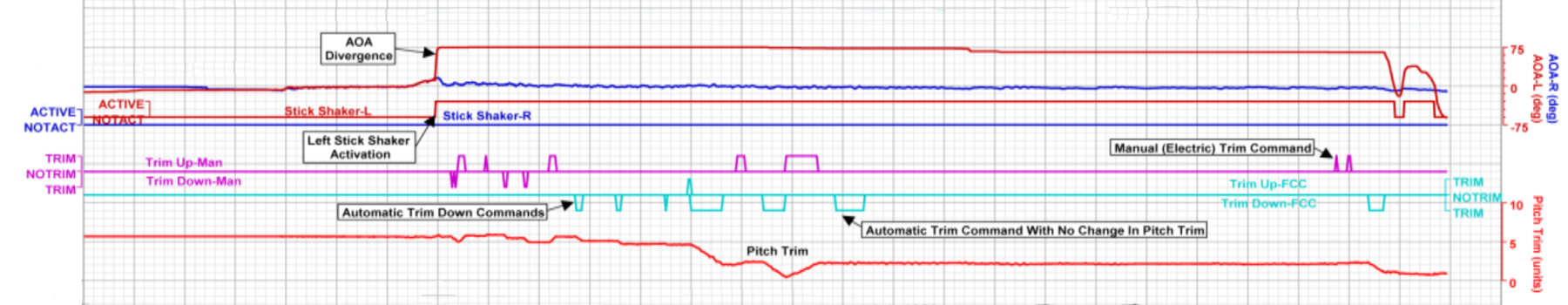

The grim fate of Lion Air 610 can be traced in brightly colored squiggles extracted from the flight data recorder. (The chart was published in November in a preliminary report from the Indonesian National Committee on Transportation Safety.)

The outline of the story is given in the altitude traces at the bottom of the chart. The initial climb is interrupted by a sharp dip; then a further climb is followed by a long, erratic roller coaster ride. At the end comes the dive, as the aircraft plunges 5,000 feet in a little more than 10 seconds. (Why are there two altitude curves, separated by a few hundred feet? I’ll come back to that question at the end of this long screed.)

All those ups and downs were caused by movements of the horizontal stabilizer, the small winglike control surface at the rear of the fuselage. The stabilizer controls the airplane’s pitch attitude—nose-up vs. nose-down. On the 737 it does so in two ways. A mechanism for pitch trim tilts the entire stabilizer, whereas pushing or pulling on the pilot’s control yoke moves the elevator, a hinged tab at the rear of the stabilizer. In either case, moving the trailing edge of the surface upward tends to force the nose of the airplane up, and vice versa. Here we’re mainly concerned with trim changes rather than elevator movements.

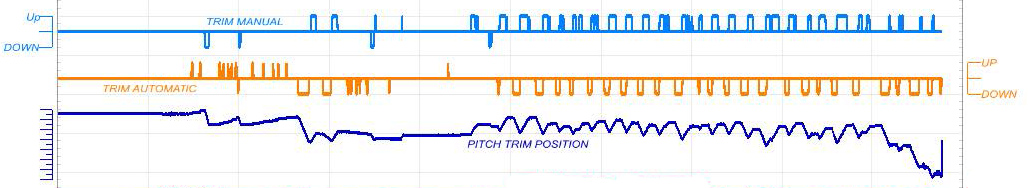

Commands to the pitch-trim system and their effect on the airplane are shown in three traces from the flight data, which I reproduce here for convenience:

The line labeled “trim manual” (light blue) reflects the pilots’ inputs, “trim automatic” (orange) shows commands from the airplane’s electronic systems, and “pitch trim position” (dark blue) represents the tilt of the stabilizer, with higher position on the scale denoting a nose-up command. This is where the tug of war between man and machine is clearly evident. In the latter half of the flight, the automatic trim system repeatedly commands nose down, at intervals of roughly 10 seconds. In the breaks between those automated commands, the pilots dial in nose-up trim, using buttons on the control yoke. In response to these conflicting commands, the position of the horizontal stabilizer oscillates with a period of 15 or 20 seconds. The see-sawing motion continues for at least 20 cycles, but toward the end the unrelenting automatic nose-down adjustments prevail over the briefer nose-up commands from the pilots. The stabilizer finally reaches its limiting nose-down deflection and stays there as the airplane plummets into the sea.
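
The net effect of that alternation can be sketched with a toy model. It assumes, purely for illustration, that automatic and manual commands move the stabilizer at the same hypothetical rate of 0.25 degree per second, that each automatic burst lasts 9 seconds and each pilot reply 6 seconds, and that the stops sit at plus and minus 5 degrees. None of these numbers comes from the flight data recorder. Because each automatic burst outlasts the reply, the stabilizer ratchets toward the nose-down stop.

```python
# Toy model of the pitch-trim tug of war. Every rate, duration, and limit here
# is a hypothetical round number, not a value taken from the Lion Air data.
TRIM_RATE_DEG_PER_S = 0.25   # assumed trim rate for both automatic and manual inputs
NOSE_DOWN_STOP = -5.0        # assumed mechanical stop (negative = nose-down)
NOSE_UP_STOP = 5.0           # assumed mechanical stop (positive = nose-up)


def simulate_tug_of_war(cycles, auto_s=9.0, pilot_s=6.0, start=0.0):
    """Alternate an automatic nose-down burst with a shorter manual nose-up burst.

    Returns one (low, high) pair per cycle: the stabilizer position at the end
    of the automatic burst and at the end of the pilots' reply.
    """
    position = start
    history = []
    for _ in range(cycles):
        # Automatic nose-down trim, clamped at the nose-down stop.
        position = max(NOSE_DOWN_STOP, position - TRIM_RATE_DEG_PER_S * auto_s)
        low = position
        # Manual nose-up trim during the break, clamped at the nose-up stop.
        position = min(NOSE_UP_STOP, position + TRIM_RATE_DEG_PER_S * pilot_s)
        history.append((low, position))
    return history


for low, high in simulate_tug_of_war(6):
    print(f"low {low:+.2f}  high {high:+.2f}")
```

In this sketch the low point hits the stop on the fifth cycle. Shrinking `pilot_s` toward zero, as the pilots' inputs grew briefer near the end, leaves the stabilizer pinned at the stop.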

Angle of attack

What’s to blame for the perverse behavior of the automatic pitch trim system? The accusatory finger is pointing at something called MCAS, a new feature of the 737 MAX series. MCAS stands for Maneuvering Characteristics Augmentation System—an impressively polysyllabic name that tells you nothing about what the thing is or what it does. As I understand it, MCAS is not a piece of hardware; there’s no box labeled MCAS in the airplane’s electronic equipment bays. MCAS consists entirely of software. It’s a program running on a computer.

MCAS has just one function. It is designed to help prevent an aerodynamic stall, a situation in which an airplane has its nose pointed up so high with respect to the surrounding airflow that the wings can’t keep it aloft. A stall is a little like what happens to a bicyclist climbing a hill that keeps getting steeper and steeper: Eventually the rider runs out of oomph, wobbles a bit, and then rolls back to the bottom. Pilots are taught to recover from stalls, but it’s not a skill they routinely practice with a planeful of passengers. In commercial aviation the emphasis is on avoiding stalls—forestalling them, so to speak. Airliners have mechanisms to detect an imminent stall and warn the pilot with lights and horns and a “stick shaker” that vibrates the control yoke. On Flight 610, the captain’s stick was shaking almost from start to finish.

Some aircraft go beyond mere warnings when a stall threatens. If the aircraft’s nose continues to pitch upward, an automated system intervenes to push it back down—if necessary overriding the manual control inputs of the pilot. MCAS is designed to do exactly this. It is armed and ready whenever two criteria are met: The flaps are up (generally true except during takeoff and landing) and the airplane is under manual control (not autopilot). Under these conditions the system is triggered whenever an aerodynamic quantity called angle of attack, or AoA, rises into a dangerous range.
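
The arming and triggering conditions just listed amount to a pair of boolean checks. This is a minimal sketch, not Boeing's code: the class and function names are hypothetical, and the 12-degree trigger is an assumed placeholder, since the actual activation angle is not given here.

```python
from dataclasses import dataclass

AOA_TRIGGER_DEG = 12.0  # hypothetical threshold, not Boeing's published value


@dataclass
class FlightState:
    flaps_up: bool
    autopilot_engaged: bool
    aoa_deg: float  # measured angle of attack, degrees


def mcas_armed(state: FlightState) -> bool:
    """Armed only when the flaps are retracted and the airplane is flown manually."""
    return state.flaps_up and not state.autopilot_engaged


def mcas_triggered(state: FlightState) -> bool:
    """Triggered when armed and the measured AoA rises past the assumed threshold."""
    return mcas_armed(state) and state.aoa_deg > AOA_TRIGGER_DEG


print(mcas_triggered(FlightState(flaps_up=True, autopilot_engaged=False, aoa_deg=15.0)))   # True
print(mcas_triggered(FlightState(flaps_up=False, autopilot_engaged=False, aoa_deg=15.0)))  # False: flaps extended
print(mcas_triggered(FlightState(flaps_up=True, autopilot_engaged=True, aoa_deg=15.0)))    # False: on autopilot
```

Note that the check trusts whatever `aoa_deg` it is handed; nothing in it asks whether the measurement is plausible.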

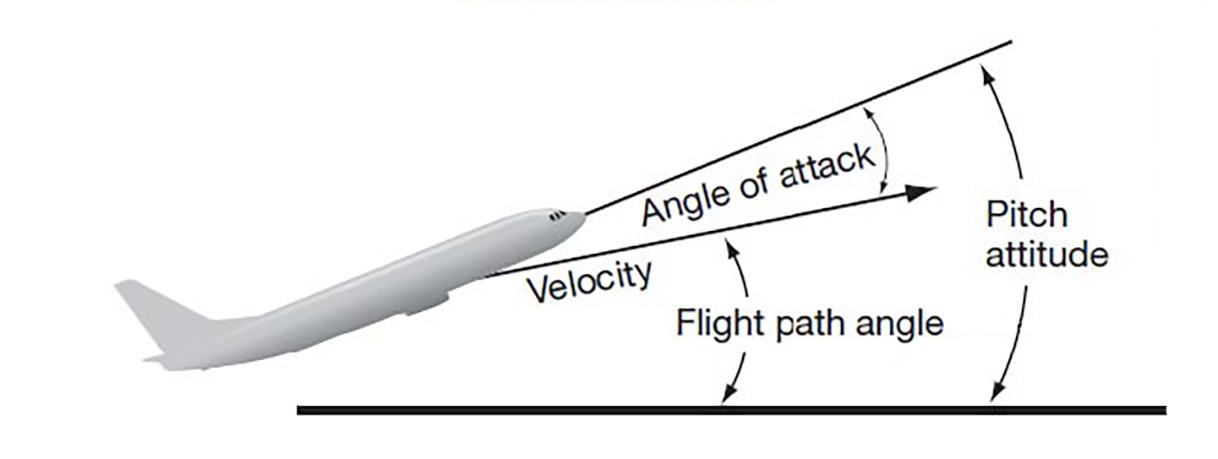

Angle of attack is a concept subtle enough to merit a diagram:

The various angles at issue are rotations of the aircraft body around the pitch axis, a line parallel to the wings, perpendicular to the fuselage, and passing through the airplane’s center of gravity. If you’re sitting in an exit row, the pitch axis might run right under your seat. Rotation about the pitch axis tilts the nose up or down. Pitch attitude is defined as the angle of the fuselage with respect to a horizontal plane. The flight-path angle is measured between the horizontal plane and the aircraft’s velocity vector, thus showing how steeply it is climbing or descending. Angle of attack is the difference between pitch attitude and flight-path angle. It is the angle at which the aircraft is moving through the surrounding air (assuming the air itself is motionless, i.e., no wind).
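
The definition translates directly into arithmetic. The sketch below, a minimal illustration assuming wings-level flight in still air, derives the flight-path angle from horizontal and vertical speed and subtracts it from the pitch attitude. It also makes a point worth keeping in mind: a nose-high attitude does not by itself mean a high angle of attack if the airplane is climbing along nearly the same line.

```python
import math


def flight_path_angle_deg(horizontal_speed, vertical_speed):
    """Angle between the velocity vector and the horizontal plane, in degrees."""
    return math.degrees(math.atan2(vertical_speed, horizontal_speed))


def angle_of_attack_deg(pitch_deg, horizontal_speed, vertical_speed):
    """Angle of attack = pitch attitude minus flight-path angle (wings level, no wind)."""
    return pitch_deg - flight_path_angle_deg(horizontal_speed, vertical_speed)


# Level flight with the nose 5 degrees up: all of the pitch is angle of attack.
print(angle_of_attack_deg(5.0, 250.0, 0.0))  # 5.0
# Nose 15 degrees up but climbing along a 10-degree path: about 5 degrees of AoA.
climb = 250.0 * math.tan(math.radians(10.0))
print(angle_of_attack_deg(15.0, 250.0, climb))
```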

AoA affects both lift (the upward force opposing the downward tug of gravity) and drag (the dissipative force opposing forward motion and the thrust of the engines). As AoA increases from zero, lift is enhanced because of air impinging on the underside of the wings and fuselage. For the same reason, however, drag also increases. As the angle of attack grows even steeper, the flow of air over the wings becomes turbulent; beyond that point lift diminishes but drag continues increasing. That’s where the stall sets in. The critical angle for a stall depends on speed, weight, and other factors, but usually it’s no more than 15 degrees.
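
A toy lift curve shows the shape of that argument. The linear slope of 2π per radian is the classic thin-airfoil result for a two-dimensional wing section (real three-dimensional wings have a shallower slope); the 15-degree critical angle echoes the upper bound quoted above; and the post-stall loss of 10 percent of peak lift per degree is an invented placeholder. Drag is not modeled.

```python
import math

CRITICAL_AOA_DEG = 15.0   # upper bound quoted in the text; the real value varies
POST_STALL_LOSS = 0.10    # hypothetical: fraction of peak lift lost per degree past stall


def lift_coefficient(aoa_deg):
    """Toy lift curve: thin-airfoil slope (2*pi per radian) up to the critical
    angle, then a crude linear loss of lift beyond it. Drag is not modeled."""
    if aoa_deg <= CRITICAL_AOA_DEG:
        return 2 * math.pi * math.radians(aoa_deg)
    peak = 2 * math.pi * math.radians(CRITICAL_AOA_DEG)
    return max(0.0, peak * (1 - POST_STALL_LOSS * (aoa_deg - CRITICAL_AOA_DEG)))


for aoa in (0, 5, 10, 15, 18, 20):
    print(aoa, round(lift_coefficient(aoa), 2))
```

Lift climbs steadily up to the critical angle and then falls off, so pulling the nose higher past that point buys less lift, not more.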

Neither the Lion Air nor the Ethiopian flight was ever in danger of stalling, so if MCAS was activated, it must have been by mistake. The working hypothesis mentioned in many press accounts is that the system received and acted upon erroneous input from a failed AoA sensor.

A sensor to measure angle of attack is conceptually simple. It’s essentially a weathervane poking out into the airstream. In the photo below, the angle-of-attack sensor is the small black vane just forward of the “737 MAX” legend. Hinged at the front, the vane rotates to align itself with the local airflow and generates an electrical signal that represents the vane’s angle with respect to the axis of the fuselage. The 737 MAX has two angle-of-attack vanes, one on each side of the nose. (The protruding devices above the AoA vane are pitot tubes, used to measure air speed. Another device below the word MAX is probably a temperature sensor.)

Angle of attack was not among the variables displayed to the pilots of the Lion Air 737, but the flight data recorder did capture signals derived from the two AoA sensors:

There’s something dreadfully wrong here. The left sensor is indicating an angle of attack about 20 degrees steeper than the right sensor. That’s a huge discrepancy. There’s no plausible way those disparate readings could reflect the true state of the airplane’s motion through the air, with the left side of the nose pointing sky-high and the right side near level. One of the measurements must be wrong, and the higher reading is the suspect one. If the true angle of attack ever reached 20 degrees, the airplane would already be in a deep stall. Unfortunately, on Flight 610 MCAS was taking data only from the left-side AoA sensor. It interpreted the nonsensical measurement as a valid indicator of aircraft attitude, and worked relentlessly to correct it, up to the very moment the airplane hit the sea.
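
The cross-check that was missing can be sketched in a few lines. The 5.5-degree threshold is a hypothetical placeholder, and the readings fed to it are illustrative values about 20 degrees apart, not samples from the recorder. Two sensors can reveal a disagreement, but the comparison by itself cannot say which vane to believe.

```python
DISAGREE_LIMIT_DEG = 5.5  # hypothetical threshold for flagging a split


def compare_aoa_vanes(left_deg, right_deg, limit_deg=DISAGREE_LIMIT_DEG):
    """Return the split between the two vanes and whether it exceeds the limit.

    Two sources can reveal a disagreement, but the comparison alone cannot
    tell which vane is wrong; that needs some independent evidence.
    """
    split = abs(left_deg - right_deg)
    return split, split > limit_deg


# Illustrative readings about 20 degrees apart (not recorder data).
print(compare_aoa_vanes(22.0, 2.0))   # (20.0, True)
print(compare_aoa_vanes(4.0, 3.5))    # (0.5, False)
```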

Cockpit automation

The tragedies in Jakarta and Addis Ababa are being framed as a cautionary tale of automation run amok, with computers usurping the authority of pilots. The Washington Post editorialized:

A second fatal airplane accident involving a Boeing 737 MAX 8 may have been a case of man vs. machine…. The debacle shows that regulators should apply extra review to systems that take control away from humans when safety is at stake.

Tom Dieusaert, a Belgian journalist who writes often on aviation and computation, offered this opinion:

What can’t be denied is that the Boeing of Flight JT610 had serious computer problems. And in the hi-tech, fly-by-wire world of aircraft manufacturers, where pilots are reduced to button pushers and passive observers, these accidents are prone to happen more in the future.

The button-pushing pilots are particularly irate. Gregory Travis, who is both a pilot and software developer, summed up his feelings in this acerbic comment:

“Raise the nose, HAL.”

“I’m sorry, Dave, I can’t do that.”

Even Donald Trump tweeted on the issue:

Airplanes are becoming far too complex to fly. Pilots are no longer needed, but rather computer scientists from MIT. I see it all the time in many products. Always seeking to go one unnecessary step further, when often old and simpler is far better. Split second decisions are….

….needed, and the complexity creates danger. All of this for great cost yet very little gain. I don’t know about you, but I don’t want Albert Einstein to be my pilot. I want great flying professionals that are allowed to easily and quickly take control of a plane!

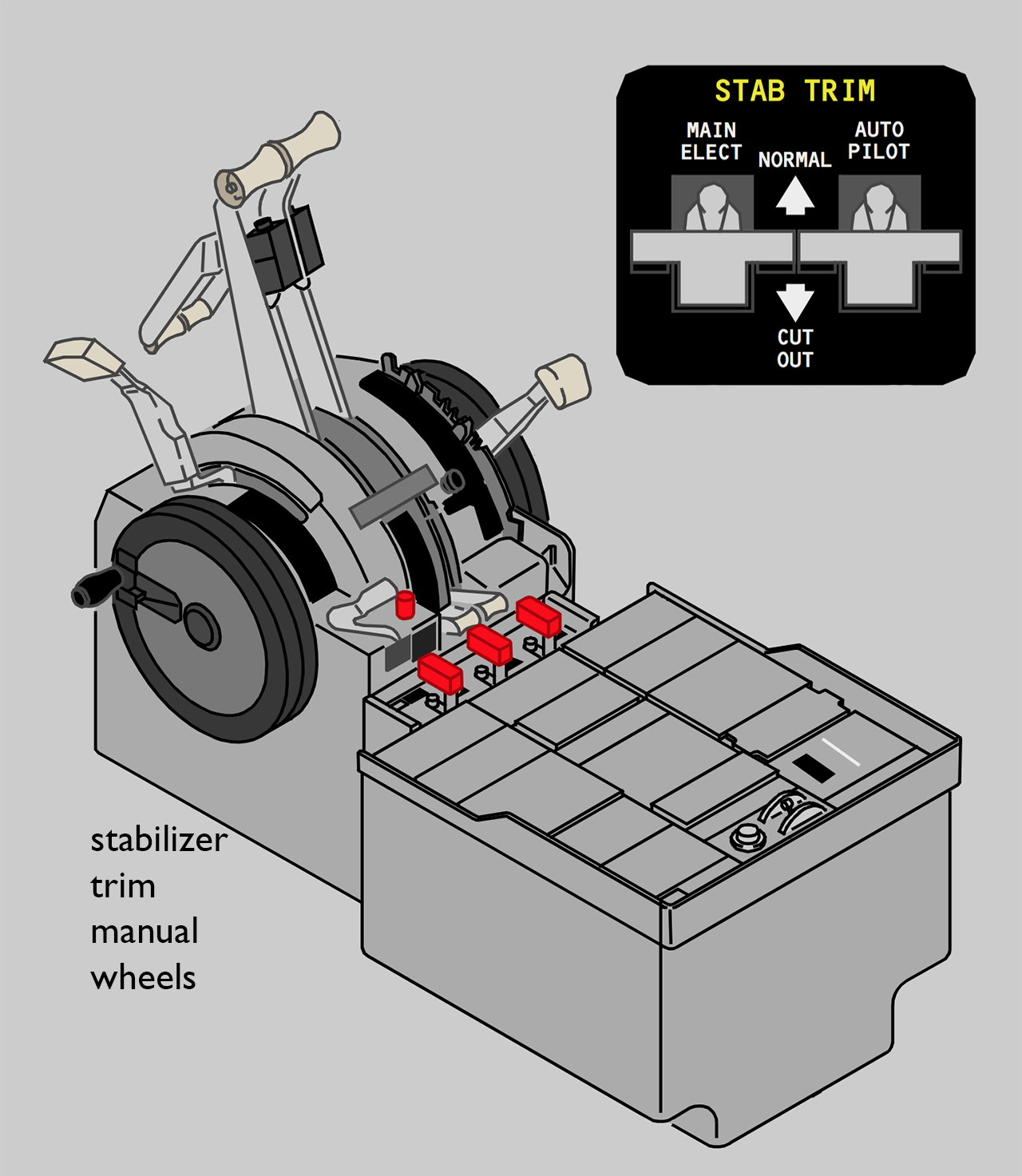

There’s considerable irony in the complaint that the 737 is too automated; in many respects the aircraft is in fact quaintly old-fashioned. The basic design goes back more than 50 years, and even in the latest MAX models quite a lot of 1960s technology survives. The primary flight controls are hydraulic, with a spider web of high-pressure tubing running directly from the control yokes in the cockpit to the ailerons, elevator, and rudder. If the hydraulic systems should fail, there’s a purely mechanical backup, with cables and pulleys to operate the various control surfaces. For stabilizer trim the primary actuator is an electric motor, but again there’s a mechanical fallback, with crank wheels near the pilots’ knees pulling on cables that run all the way back to the tail.

Other aircraft are much more dependent on computers and electronics. The 737’s principal competitor, the Airbus A320, is a thoroughgoing fly-by-wire vehicle. The pilot flies the computer, and the computer flies the airplane. Specifically, the pilot decides where to go—up, down, left, right—but the computer decides how to get there, choosing which control surfaces to deflect and by how much. Boeing’s own more recent designs, the 777 and 787, also rely on digital controls. Indeed, the latest models from both companies go a step beyond fly-by-wire to fly-by-network. Most of the communication from sensors to computers and onward to control surfaces consists of digital packets flowing through a variant of Ethernet. The airplane is a computer peripheral.

Thus if you want to gripe about the dangers and indignities of automation on the flight deck, the 737 is not the most obvious place to start. And a Luddite campaign to smash all the avionics and put pilots back in the seat of their pants would be a dangerously off-target response to the current predicament. There’s no question the 737 MAX has a critical problem. It’s a matter of life and death for those who would fly in it and possibly also for the Boeing Company. But the problem didn’t start with MCAS. It started with earlier decisions that made MCAS necessary. Furthermore, the problem may not end with the remedy that Boeing has proposed—a software update that will hobble MCAS and leave more to the discretion of pilots.

Maxing out the 737

The 737 flew its first passengers in 1968. It was (and still is) the smallest member of the Boeing family of jet airliners, and it is also the most popular by far. More than 10,000 have been sold, and Boeing has orders for another 4,600. Of course there have been changes over the years, especially to engines and instruments. A 1980s update came to be known as 737 Classic, and a 1997 model was called 737 NG, for “next generation.” (Now, with the MAX, the NG has become the previous generation.) Through all these revisions, however, the basic structure of the airframe has hardly changed.

Ten years ago, it looked like the 737 had finally come to the end of its life. Boeing announced it would develop an all-new design as a replacement, with a hull built of lightweight composite materials rather than aluminum. Competitive pressures forced a change of course. Airbus had a head start on the A320neo, an update that would bring more efficient engines to their entry in the same market segment. The revised Airbus would be ready around 2015, whereas Boeing’s clean-slate project would take a decade. Customers were threatening to defect. In particular, American Airlines—long a Boeing loyalist—was negotiating a large order of A320neos.

In 2011 Boeing scrapped the plan for an all-new design and elected to do the same thing Airbus was doing: bolt new engines onto an old airframe. This would eliminate most of the up-front design work, as well as the need to build tooling and manufacturing facilities. Testing and certification by the FAA would also go quicker, so that the first deliveries might be made in five or six years, not too far behind Airbus.

The original 1960s 737 had two cigar-shaped engines, long and skinny, tucked up under the wings (left photo above). Since then, jet engines have grown fat and stubby. They derive much of their thrust not from the jet exhaust coming out of the tailpipe but from “bypass” air moved by a large-diameter fan. Such engines would scrape on the ground if they were mounted under the wings of the 737; instead they are perched on pylons that extend forward from the leading edge of the wing. The engines on the MAX models (right photo) are the fattest yet, with a fan 69 inches in diameter. Compared with the NG series, the MAX engines are pushed a few inches farther forward and hang a few inches lower.

A New York Times article by David Gelles, Natalie Kitroeff, Jack Nicas, and Rebecca R. Ruiz describes the plane’s development as hurried and hectic.

Months behind Airbus, Boeing had to play catch-up. The pace of the work on the 737 Max was frenetic, according to current and former employees who spoke with The New York Times…. Engineers were pushed to submit technical drawings and designs at roughly double the normal pace, former employees said.

The Times article also notes: “Although the project had been hectic, current and former employees said they had finished it feeling confident in the safety of the plane.”

Pitch instability

Sometime during the development of the MAX series, Boeing got an unpleasant surprise. The new engines were causing unwanted pitch-up movements under certain flight conditions. When I first read about this problem, soon after the Lion Air crash, I found the following explanation in an article by Sean Broderick and Guy Norris in Aviation Week and Space Technology (Nov. 26–Dec. 9, 2018, pp. 56–57):

Like all turbofan-powered airliners in which the thrust lines of the engines pass below the center of gravity (CG), any change in thrust on the 737 will result in a change of flight path angle caused by the vertical component of thrust.

In other words, the low-slung engines not only push the airplane forward but also tend to twirl it around the pitch axis. It’s like a motorcycle doing wheelies. Because the MAX engines are mounted farther below and in front of the center of gravity, they act through a longer lever arm and cause more severe pitch-up motions.
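
The lever-arm argument is ordinary torque arithmetic: a change in thrust ΔT acting along a line a distance d below the center of gravity changes the pitching moment by ΔT × d. In the sketch the thrust figure is the 58,000 lb. total from the pilot report quoted below, while both offsets are hypothetical values chosen only to show that a 10 percent longer arm gives a 10 percent larger moment.

```python
def pitch_moment_change(delta_thrust_lbf, offset_below_cg_ft):
    """Change in nose-up pitching moment (lbf-ft) when thrust changes by
    delta_thrust_lbf along a line offset_below_cg_ft below the center of gravity.
    Ignores nacelle lift and any vertical component of thrust."""
    return delta_thrust_lbf * offset_below_cg_ft


FULL_THRUST_LBF = 58_000.0                              # total thrust, from the pilot report
ng_like = pitch_moment_change(FULL_THRUST_LBF, 5.0)     # hypothetical offset
max_like = pitch_moment_change(FULL_THRUST_LBF, 5.5)    # hypothetical, 10% longer arm
print(ng_like, max_like, max_like / ng_like)
```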

I found more detail on this effect in an earlier Aviation Week article, a 2017 pilot report by Fred George, describing his first flight at the controls of the new MAX 8.

The aircraft has sufficient natural speed stability through much of its flight envelope. But with as much as 58,000 lb. of thrust available from engines mounted well below the center of gravity, there is pronounced thrust-versus-pitch coupling at low speeds, especially with aft center of gravity (CG) and at light gross weights. Boeing equips the aircraft with a speed-stability augmentation function that helps to compensate for the coupling by automatically trimming the horizontal stabilizer according to indicated speed, thrust lever position and CG. Pilots still must be aware of the effect of thrust changes on pitching moment and make purposeful control-wheel and pitch-trim inputs to counter it.

The reference to an “augmentation function” that works by “automatically trimming the horizontal stabilizer” sounded awfully familiar, but it turns out this is not MCAS. The system that compensates for thrust-pitch coupling is known as speed-trim. Like MCAS, it works “behind the pilot’s back,” making adjustments to control surfaces that were not directly commanded. There’s yet another system of this kind called mach-trim that silently corrects a different pitch anomaly when the aircraft reaches transonic speeds, at about mach 0.6. Neither of these systems is new to the MAX series of aircraft; they have been part of the control algorithm at least since the NG came out in 1997. MCAS runs on the same computer as speed-trim and mach-trim and is part of the same software system, but it is a distinct function. And according to what I’ve been reading in the past few weeks, it addresses a different problem—one that seems more sinister.

Most aircraft have the pleasant property of static stability. When an airplane is properly trimmed for level flight, you can let go of the controls—at least briefly—and it will continue on a stable path. Moreover, if you pull back on the control yoke to point the nose up, then let go again, the pitch angle should return to neutral. The layout of the airplane’s various airfoil surfaces accounts for this behavior. When the nose goes up, the tail goes down, pushing the underside of the horizontal stabilizer into the airstream. The pressure of the air against this tail surface provides a restoring force that brings the tail back up and the nose back down. (That’s why it’s called a stabilizer!) This negative feedback loop is built into the structure of the airplane, so that any departure from equilibrium creates a force that opposes the disturbance.

However, the tail surface, with its helpful stabilizing influence, is not the only structure that affects the balance of aerodynamic forces. Jet engines are not designed to contribute lift to the airplane, but at high angles of attack they can do so, as the airstream impinges on the lower surface of each engine’s outer covering, or nacelle. When the engines are well forward of the center of gravity, the lift creates a pitch-up turning moment. If this moment exceeds the counterbalancing force from the tail, the aircraft is unstable. A nose-up attitude generates forces that raise the nose still higher, and positive feedback takes over.
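
The last two paragraphs describe one toy equation with the balance of two terms reversed. In the sketch below, a pitch deviation θ feels a restoring stiffness from the tail, an opposing stiffness from nacelle lift, and some damping: θ'' = −(k_tail − k_nacelle)·θ − c·θ'. The coefficients are arbitrary illustrative numbers, not 737 data. When the tail term wins, a disturbance dies away; when the nacelle term wins, it grows.

```python
def simulate_pitch(k_tail, k_nacelle, damping=0.8, theta0=1.0, dt=0.01, steps=2000):
    """Integrate the toy model theta'' = -(k_tail - k_nacelle)*theta - damping*theta'
    with semi-implicit Euler steps and return the final pitch deviation.

    k_tail:    restoring stiffness from the tail (arbitrary units)
    k_nacelle: destabilizing stiffness from nacelle lift (arbitrary units)
    """
    theta, rate = theta0, 0.0
    net_stiffness = k_tail - k_nacelle
    for _ in range(steps):
        accel = -net_stiffness * theta - damping * rate
        rate += accel * dt
        theta += rate * dt
    return theta


print(simulate_pitch(k_tail=4.0, k_nacelle=1.0))  # tail wins: deviation decays toward 0
print(simulate_pitch(k_tail=1.0, k_nacelle=2.0))  # nacelle wins: deviation runs away
```

Only the sign of `k_tail - k_nacelle` decides which way it goes; the damping term slows the motion but cannot turn positive feedback into negative feedback.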

Is the 737 MAX vulnerable to such runaway pitch excursions? The possibility had not occurred to me until I read a commentary on MCAS on the Boeing 737 Technical Site, a web publication produced by Chris Brady, a former 737 pilot and flight instructor. He writes:

MCAS is a longitudinal stability enhancement. It is not for stall prevention or to make the MAX handle like the NG; it was introduced to counteract the non-linear lift of the LEAP-1B engine nacelles and give a steady increase in stick force as AoA increases. The LEAP engines are both larger and relocated slightly up and forward from the previous NG CFM56-7 engines to accommodate their larger fan diameter. This new location and size of the nacelle cause the vortex flow off the nacelle body to produce lift at high AoA; as the nacelle is ahead of the CofG this lift causes a slight pitch-up effect (ie a reducing stick force) which could lead the pilot to further increase the back pressure on the yoke and send the aircraft closer towards the stall. This non-linear/reducing stick force is not allowable under FAR §25.173 “Static longitudinal stability”. MCAS was therefore introduced to give an automatic nose down stabilizer input during steep turns with elevated load factors (high AoA) and during flaps up flight at airspeeds approaching stall.

Brady cites no sources for this statement, and as far as I know Boeing has neither confirmed nor denied. But Aviation Week, which earlier mentioned the thrust-pitch linkage, has more recently (issue of March 20) gotten behind the nacelle-lift instability hypothesis:

The MAX’s larger CFM Leap 1 engines create more lift at high AOA and give the aircraft a greater pitch-up moment than the CFM56-7-equipped NG. The MCAS was added as a certification requirement to minimize the handling difference between the MAX and NG.

Assuming the Brady account is correct, an interesting question is when Boeing noticed the instability. Were the designers aware of this hazard from the outset? Did it emerge during early computer simulations, or in wind tunnel testing of scale models? A story by Dominic Gates in the Seattle Times hints that Boeing may not have recognized the severity of the problem until flight tests of the first completed aircraft began in 2016.

According to Gates, the safety analysis that Boeing submitted to the FAA specified that MCAS would be allowed to move the horizontal stabilizer by no more than 0.6 degree. In the airplane ultimately released to the market, MCAS can go as far as 2.5 degrees, and it can act repeatedly until reaching the mechanical limit of motion at about 5 degrees. Gates writes:
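
The gap between the two limits compounds when MCAS may act repeatedly. A back-of-envelope count, assuming each activation moves the stabilizer by its full authority from a neutral start and the pilots never trim back in between, shows how few activations it takes to reach the stop at about 5 degrees.

```python
import math


def activations_to_stop(authority_deg, stop_deg=5.0, start_deg=0.0):
    """Full activations needed to drive the stabilizer from start_deg to the
    nose-down stop, assuming each one moves it authority_deg and the pilots
    never trim back in between (a deliberate simplification)."""
    return math.ceil((stop_deg - start_deg) / authority_deg)


print(activations_to_stop(0.6))  # 9: limit stated in the safety analysis
print(activations_to_stop(2.5))  # 2: limit in the delivered airplane
```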

That limit was later increased after flight tests showed that a more powerful movement of the tail was required to avert a high-speed stall, when the plane is in danger of losing lift and spiraling down.

The behavior of a plane in a high angle-of-attack stall is difficult to model in advance purely by analysis and so, as test pilots work through stall-recovery routines during flight tests on a new airplane, it’s not uncommon to tweak the control software to refine the jet’s performance.

The high-AoA instability of the MAX appears to be a property of the aerodynamic form of the entire aircraft, and so a direct way to suppress it would be to alter that form. For example, enlarging the tail surface might restore static stability. But such airframe modifications would have delayed the delivery of the airplane, especially if the need for them was discovered only after the first prototypes were already flying. Structural changes might also jeopardize inclusion of the new model under the old type certificate. Modifying software instead of aluminum must have looked like an attractive alternative. Someday, perhaps, we’ll learn how the decision was made.

By the way, according to Gates, the safety document filed with the FAA specifying a 0.6 degree limit has yet to be amended to reflect the true range of MCAS commands.

Flying while unstable

Instability is not necessarily the kiss of death in an airplane. There have been at least a few successful unstable designs, starting with the 1903 Wright Flyer. The Wright brothers deliberately put the horizontal stabilizer in front of the wing rather than behind it because their earlier experiments with kites and gliders had shown that what we call stability can also be described as sluggishness. The Flyer’s forward control surfaces (known as canards) tended to amplify any slight nose-up or nose-down motions. Maintaining a steady pitch attitude demanded high alertness from the pilot, but it also allowed the airplane to respond more quickly when the pilot wanted to pitch up or down. (The pros and cons of the design are reviewed in a 1984 paper by Fred E. C. Culick and Henry R. Jex.)

Another dramatically unstable aircraft was the Grumman X-29, a research platform designed in the 1980s. The X-29 had its wings on backwards; to make matters worse, the primary surfaces for pitch control were canards mounted in front of the wings, as in the Wright Flyer. The aim of this quirky project was to explore designs with exceptional agility, sacrificing static stability for tighter maneuvering. No unaided human pilot could have mastered such a twitchy vehicle. It required a digital fly-by-wire system that sampled the state of the airplane and adjusted the control surfaces up to 40 times per second. The controller was successful—perhaps too much so. It allowed the airplane to be flown safely, but in taming the instability it also left the plane with rather tame handling characteristics.

I have a glancing personal connection with the X-29 project. In the 1980s I briefly worked as an editor with members of the group at Honeywell who designed and built the X-29 control system. I helped prepare publications on the control laws and on their implementation in hardware and software. That experience taught me just enough to recognize something odd about MCAS: It is way too slow to be suppressing aerodynamic instability in a jet aircraft. Whereas the X-29 controller had a response time of 25 milliseconds, MCAS takes 10 seconds to move the 737 stabilizer through a 2.5-degree adjustment. At that pace, it cannot possibly keep up with forces that tend to flip the nose upward in a positive feedback loop.
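
The mismatch in time scales is easier to see with a number attached. The sketch assumes, hypothetically, that an unstable pitch excursion doubles every second; no such figure has been published for the MAX. It compares how much the excursion would grow during one 25-millisecond X-29 response interval and during the roughly 10 seconds MCAS needs for a full 2.5-degree adjustment.

```python
X29_RESPONSE_S = 0.025          # X-29 controller response time, from the text
MCAS_ADJUST_S = 10.0            # time for a 2.5-degree MCAS adjustment, from the text
MCAS_RATE_DEG_PER_S = 2.5 / MCAS_ADJUST_S
ASSUMED_DOUBLING_S = 1.0        # hypothetical doubling time of an unstable excursion


def growth_factor(elapsed_s, doubling_s=ASSUMED_DOUBLING_S):
    """Factor by which an exponentially diverging excursion grows in elapsed_s."""
    return 2 ** (elapsed_s / doubling_s)


print(MCAS_RATE_DEG_PER_S)              # 0.25 degree per second
print(growth_factor(X29_RESPONSE_S))    # under 2 percent growth per X-29 cycle
print(growth_factor(MCAS_ADJUST_S))     # 1024.0 during one MCAS adjustment
```

Under this assumption a controller that corrects every 25 milliseconds sees an almost static error, while one that needs 10 seconds is chasing an error that has grown a thousandfold.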

There’s a simple explanation. MCAS is not meant to control an unstable aircraft. It is meant to restrain the aircraft from entering the regime where it becomes unstable. This is the same strategy used by other mechanisms of stall prevention—intervening before the angle of attack reaches the critical point. However, if Brady is correct about the instability of the 737 MAX, the task is more urgent for MCAS. Instability implies a steep and slippery slope. MCAS is a guard rail that bounces you back onto the road when you’re about to drive over the cliff.

Which brings up the question of Boeing’s announced plan to fix the MCAS problem. Reportedly, the revised system will not keep reactivating itself so persistently, and it will automatically disengage if it detects a large difference between the two AoA sensors. These changes should prevent a recurrence of the recent crashes. But do they provide adequate protection against the kind of mishap that MCAS was designed to prevent in the first place? With MCAS shut down, either manually or automatically, there’s nothing to stop an unwary or misguided pilot from wandering into the corner of the flight envelope where the MAX becomes unstable.
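
The two reported changes can be expressed as a small state machine. This is a hypothetical sketch of the behavior as press reports describe it, not Boeing's revised software: the class name, the 5.5-degree disagreement limit, and the 12-degree trigger are all placeholders. The sketch stays out of the loop when the vanes disagree and fires at most once per high-AoA episode, re-arming only after the angle of attack falls back below the trigger.

```python
DISAGREE_LIMIT_DEG = 5.5   # hypothetical disagreement threshold
AOA_TRIGGER_DEG = 12.0     # hypothetical activation threshold


class RevisedMcasSketch:
    """Hypothetical illustration of the two reported changes, not Boeing's code."""

    def __init__(self):
        self.fired_this_episode = False

    def step(self, left_deg, right_deg):
        """Return True if the sketch would command nose-down trim on this step."""
        if abs(left_deg - right_deg) > DISAGREE_LIMIT_DEG:
            return False                      # vanes disagree: stay disengaged
        aoa = (left_deg + right_deg) / 2      # sensors agree, so average them
        if aoa <= AOA_TRIGGER_DEG:
            self.fired_this_episode = False   # episode over: re-arm
            return False
        if self.fired_this_episode:
            return False                      # at most one activation per episode
        self.fired_this_episode = True
        return True


mcas = RevisedMcasSketch()
print(mcas.step(22.0, 2.0))    # False: a 20-degree split disables the function
print(mcas.step(14.0, 14.5))   # True: agreed high AoA, first activation
print(mcas.step(14.0, 14.5))   # False: no repeat within the same episode
print(mcas.step(5.0, 5.0))     # False: AoA back to normal, function re-arms
print(mcas.step(14.0, 14.0))   # True: a new episode may fire once
```

The first call also illustrates the worry raised above: once the function disengages, nothing in this sketch guards the high-AoA corner of the envelope.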

Without further information from Boeing, there’s no telling how severe the instability might be—if indeed it exists at all. The Brady article at the Boeing 737 Technical Site implies the problem is partly pilot-induced. Normally, to make the nose go higher and higher you have to pull harder and harder on the control yoke. In the unstable region, however, the resistance to pulling suddenly fades, and so the pilot may unwittingly pull the yoke to a more extreme position.

Is this human interaction a necessary part of the instability, or is it just an exacerbating factor? In other words, without the pilot in the loop, would there still be positive feedback causing runaway nose-up pitch? I have yet to find answers.

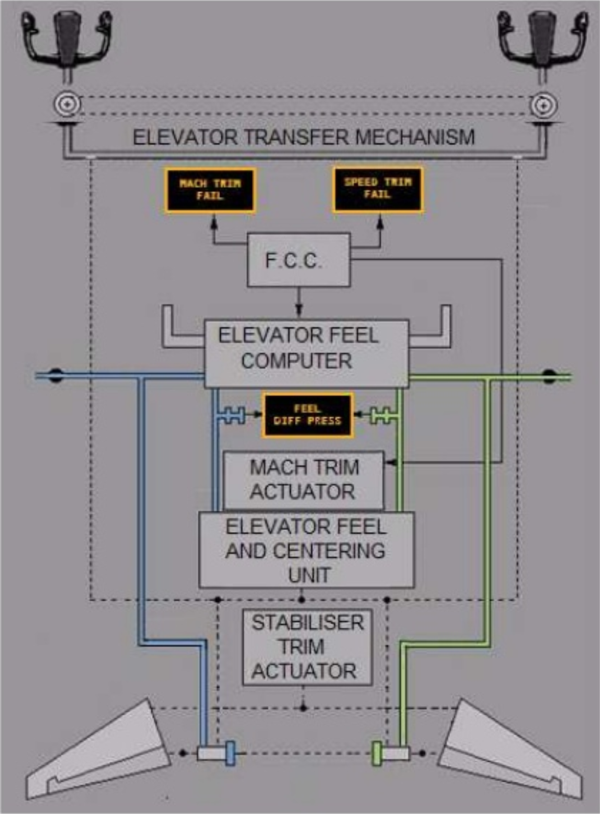

Another question: If the root of the problem is a deceptive change in the force resisting nose-up movement of the control yoke, why not address that issue directly? In the 737 (and most other large aircraft) the forces that the pilot “feels” through the control yoke are not simple reflections of the aerodynamic forces acting on the elevator and other control surfaces. The feedback forces are largely synthetic, generated by an “elevator feel computer” and an “elevator feel and centering unit,” devices that monitor the state of the airplane and generate appropriate hydraulic pressures pushing the yoke one way or another. Those systems could have been given the additional task of maintaining or increasing back force on the yoke when the angle of attack approaches the instability. Artificially enhanced resistance is already part of the stall warning system. Why not extend it to MCAS? (There may be a good answer; I just don’t know it.)
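
That alternative can be made concrete with a toy stick-force curve. All numbers are invented for illustration: below an assumed onset angle of 10 degrees the pull force grows 2 lbf per degree; past it, hypothetical nacelle lift removes 3 lbf per degree, so the required force actually falls, which is the deceptive cue Brady describes; a hypothetical feel-system boost adds force back so the curve keeps rising.

```python
def stick_force_lbf(aoa_deg, feel_boost=0.0, gradient=2.0,
                    onset_deg=10.0, nacelle_relief=3.0):
    """Toy pull force (lbf) needed to hold a given angle of attack.

    Below onset_deg the force rises by `gradient` lbf per degree. Past onset_deg,
    hypothetical nacelle lift removes `nacelle_relief` lbf per degree, and a
    hypothetical feel-system boost adds `feel_boost` lbf per degree back.
    """
    excess = max(0.0, aoa_deg - onset_deg)
    return gradient * aoa_deg - nacelle_relief * excess + feel_boost * excess


for aoa in (8.0, 10.0, 12.0, 14.0):
    print(aoa, stick_force_lbf(aoa), stick_force_lbf(aoa, feel_boost=2.0))
```

Without the boost, the pull needed at 14 degrees is less than at 10; with it, each extra degree still costs more force, which is the steady gradient the regulation asks for.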

In the 737 (and most other large aircraft) the forces that the pilot “feels” through the control yoke are not simple reflections of the aerodynamic forces acting on the elevator and other control surfaces. The feedback forces are largely synthetic, generated by an “elevator feel computer” and an “elevator feel and centering unit,” devices that monitor the state of the airplane and generate appropriate hydraulic pressures pushing the yoke one way or another. Those systems could have been given the additional task of maintaining or increasing back force on the yoke when the angle of attack approaches the instability. Artificially enhanced resistance is already part of the stall warning system. Why not extend it to MCAS? (There may be a good answer; I just don’t know it.)

Where’s the off switch?

Even after the spurious activation of MCAS on Lion Air 610, the crash and the casualties would have been avoided if the pilots had simply turned the damn thing off. Why didn’t they? Apparently because they had never heard of MCAS, and didn’t know it was installed on the airplane they were flying, and had not received any instruction on how to disable it. There’s no switch or knob in the cockpit labeled “MCAS ON/OFF.” The Flight Crew Operation Manual does not mention it (except in a list of abbreviations), and neither did the transitional training program the pilots had completed before switching from the 737 NG to the MAX. The training consisted of either one or two hours (reports differ) with an iPad app.

Boeing’s explanation of these omissions was captured in a Wall Street Journal story:

One high-ranking Boeing official said the company had decided against disclosing more details to cockpit crews due to concerns about inundating average pilots with too much information—and significantly more technical data—than they needed or could digest.

To call this statement disingenuous would be disingenuous. What it is is preposterous. In the first place, Boeing did not withhold “more details”; they failed to mention the very existence of MCAS. And the too-much-information argument is silly. I don’t have access to the Flight Crew Operation Manual for the MAX, but the NG edition runs to more than 1,300 pages, plus another 800 for the Quick Reference Handbook. A few paragraphs on MCAS would not have sunk any pilot who wasn’t already drowning in TMI. Moreover, the manual carefully documents the speed-trim and Mach-trim features, which seem to fall in the same category as MCAS: They act autonomously, and offer the pilot no direct interface for monitoring or adjusting them.

In the aftermath of the Lion Air accident, Boeing stated that the procedure for disabling MCAS was spelled out in the manual, even though MCAS itself wasn’t mentioned. That procedure is given in a checklist for “runaway stabilizer trim.” It is not complicated: Hang onto the control yoke, switch off the autopilot and autothrottles if they’re on; then, if the problem persists, flip two switches labeled “STAB TRIM” to the “CUTOUT” position. Only the last step will actually matter in the case of an MCAS malfunction.

This checklist is considered a “memory item”; pilots must be able to execute the steps without looking it up in the handbook. The Lion Air crew should certainly have been familiar with it. But could they recognize that it was the right checklist to apply in an airplane whose behavior was unlike anything they had seen in their training or previous 737 flying experience? According to the handbook, the condition that triggers use of the runaway checklist is “Uncommanded stabilizer trim movement occurs continuously.” The MCAS commands were not continuous but repetitive, so some leap of inference would have been needed to make this diagnosis.
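The gap between “continuously” and “repetitively” can be made concrete. Below is a minimal Python sketch, not anything taken from the handbook: it models trim-motor activity as one boolean per second and applies a hypothetical literal reading of the checklist trigger (a single unbroken run of trim motion lasting at least `min_seconds`). The 10-seconds-on, 5-seconds-off cycle in `mcas_like` is an assumed, illustrative timing, and all names are invented.

```python
def longest_run(motor_on: list[bool]) -> int:
    """Length of the longest unbroken stretch of trim-motor activity, in samples."""
    best = run = 0
    for on in motor_on:
        run = run + 1 if on else 0
        best = max(best, run)
    return best


def looks_continuous(motor_on: list[bool], min_seconds: int = 20) -> bool:
    """Hypothetical literal reading of "occurs continuously": one long unbroken run.

    Each sample represents one second; min_seconds is an assumed threshold.
    """
    return longest_run(motor_on) >= min_seconds


# Assumed, illustrative pattern: 10 s of nose-down trim, then a 5 s pause, repeated.
mcas_like = ([True] * 10 + [False] * 5) * 4
# A classic runaway: the motor simply never stops.
runaway_like = [True] * 60

print(sum(mcas_like), looks_continuous(mcas_like))        # 40 False
print(sum(runaway_like), looks_continuous(runaway_like))  # 60 True
```

Both patterns move the stabilizer for a long time in total, but only the second matches the literal wording of the trigger; a crew applying the checklist to the first has to reason past that wording.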

By the time of the Ethiopian crash, 737 pilots everywhere knew all about MCAS and the procedure for disabling it. A preliminary report issued last week by Ethiopian Airlines indicates that after a few minutes of wrestling with the control yoke, the pilots on Flight 302 did invoke the checklist procedure, and moved the STAB TRIM switches to CUTOUT. The stabilizer then stopped responding to MCAS nose-down commands, but the pilots were unable to regain control of the airplane.

It’s not entirely clear why they failed or what was going on in the cockpit in those last minutes. One factor may be that the cutout switch disables not only automatic pitch trim movements but also manual ones requested through the buttons on the control yoke. The switch cuts all power to the electric motor that moves the stabilizer. In this situation the only way to adjust the trim is to turn the hand crank wheels near the pilots’ knees. During the crisis on Flight 302 that mechanism may have been too slow to correct the trim in time, or the pilots may have been so fixated on pulling the control yoke back with maximum force that they did not try the manual wheels. It’s also possible that they flipped the switches back to the NORMAL setting, restoring power to the stabilizer motor. The report’s narrative doesn’t mention this possibility, but the graph from the flight data recorder suggests it (see below).

The single point of failure

There’s room for debate on whether the MCAS system is a good idea when it is operating correctly, but when it activates mistakenly and sends an airplane diving into the sea, no one would defend it. By all appearances, the rogue behavior in both the Lion Air and the Ethiopian accidents was triggered by a malfunction in a single sensor. That’s not supposed to happen in aviation. It’s unfathomable that any aircraft manufacturer would knowingly build a vehicle in which the failure of a single part would lead to a fatal accident.

Protection against single failures comes from redundancy, and the 737 is so committed to this principle that it almost amounts to two airplanes wrapped up in a single skin.

There’s one asterisk in this roster of redundancy: A device called the flight control computer, or FCC, apparently gets special treatment. There are two FCCs, but according to the Boeing 737 Technical Site only one of them operates during any given flight. All the other duplicated components run in parallel, receiving independent inputs, doing independent computations, emitting independent control actions. But for each flight just one FCC does all the work, and the other is put on standby. The scheme for choosing the active computer seems strangely arbitrary. Each day when the airplane is powered up, the left side FCC gets control for the first flight, then the right side unit takes over for the second flight of the day, and the two sides alternate until the power is shut off. After a restart, the alternation begins again with the left FCC.
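The alternation rule, as the Boeing 737 Technical Site describes it, is simple enough to state as code. This Python sketch models only that rule; the class and function names are hypothetical, and it makes no attempt to model failover between the two units, which remains an open question. Because each AoA sensor is connected to the FCC on its own side, the active side also tells you which sensor MCAS is reading.

```python
class FccAlternation:
    """Hypothetical model of the active-FCC selection rule described in the text."""

    def __init__(self) -> None:
        self.next_side = "L"

    def power_up(self) -> None:
        # After a restart, the alternation begins again with the left FCC.
        self.next_side = "L"

    def start_flight(self) -> str:
        # The active unit for this flight; the other unit is on standby.
        active = self.next_side
        self.next_side = "R" if active == "L" else "L"
        return active


def mcas_aoa_source(active_side: str) -> str:
    # Each AoA sensor is wired to the FCC on the same side of the airplane.
    return "left AoA sensor" if active_side == "L" else "right AoA sensor"


fcc = FccAlternation()
fcc.power_up()
day = [fcc.start_flight() for _ in range(3)]
print(day)                    # ['L', 'R', 'L']
fcc.power_up()                # e.g. a full shutdown for maintenance
print(fcc.start_flight())     # L
print(mcas_aoa_source("L"))   # left AoA sensor
```

A full shutdown between flights resets the sequence, so knowing how many flights an airplane has made that day is not enough to know which side was in control.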

Aspects of this scheme puzzle me. I don’t understand why redundant FCC units are treated differently from other components. If one FCC dies, does the other automatically take over? Can the pilots switch between them in flight? If so, would that be an effective way to combat MCAS misbehavior? I’ve tried to find answers in the manuals, but I don’t trust my interpretation of what I read.

I’ve also had a hard time learning anything about the FCC itself. I don’t know who makes it, or what it looks like, or how it is programmed.

On a website called Closet Wonderfuls an item identified as a 737 flight control computer is on offer for $43.82, with free shipping.

In the context of the MAX crashes, the flight control computer is important for two reasons. First, it’s where MCAS lives; this is the computer on which the MCAS software runs. Second, the curious procedure for choosing a different FCC on alternating flights also winds up choosing which AoA sensor is providing input to MCAS. The left and right sensors are connected to the corresponding FCCs.

If the two FCCs are used in alternation, that raises an interesting question about the history of the aircraft that crashed in Indonesia. The preliminary crash report describes trouble with various instruments and controls on five flights over four days (including the fatal flight). All of the problems were on the left side of the aircraft or involved a disagreement between the left and right sides.

| date | route | trouble reports | maintenance |
|---|---|---|---|
| Oct 26 | Tianjin → Manado | left side: no airspeed or altitude indications | test left Stall Management and Yaw Damper computer: passed |
| ? | Manado → Denpasar | ? | ? |
| Oct 27 | Denpasar → Manado | left side: no airspeed or altitude indications; speed trim and mach trim warning lights | test left Stall Management and Yaw Damper computer: failed; reset left Air Data and Inertial Reference Unit; retest left Stall Management and Yaw Damper computer: passed; clean electrical connections |
| Oct 27 | Manado → Denpasar | left side: no airspeed or altitude indications; speed trim and mach trim warning lights; autothrottle disconnect | test left Stall Management and Yaw Damper computer: failed; reset left Air Data and Inertial Reference Unit; replace left AoA sensor |
| Oct 28 | Denpasar → Jakarta | left/right disagree warning on airspeed and altitude; stick shaker; [MCAS activation] | flush left pitot tube and static port; clean electrical connectors on elevator “feel” computer |
| Oct 29 | Jakarta → Pangkal Pinang | stick shaker; [MCAS activation] | |

Which of the five flights had the left-side FCC as active computer? The final two flights, where MCAS activated, were both first-of-the-day flights and so presumably under control of the left FCC. For the rest it’s hard to tell, especially since maintenance operations may have entailed full shutdowns of the aircraft, which would have reset the alternation sequence.

The revised MCAS software will reportedly consult signals from both AoA sensors. What will it do with the additional information? Only one clue has been published so far: If the readings differ by 5.5 degrees or more, MCAS will shut down. What if the readings differ by 4 or 5 degrees?

The present MCAS system, with its alternating choice of left and right, has a 50 percent chance of disaster when a single random failure causes an AoA sensor to spew out falsely high data. With the same one-sided random failure, the updated MCAS will have a 100 percent chance of ignoring a pilot’s excursion into stall territory. Is that an improvement?
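Both percentages follow from counting cases, which a few lines of Python can make explicit. This is a sketch under simplifying assumptions: a failure is equally likely on either side, either FCC is equally likely to be active, and the patched software is reduced to the one published rule (inhibit MCAS when the sensors disagree by 5.5 degrees or more). How the update actually combines the two readings when they agree has not been published, so the sketch does not model it; the function names and the 5-degree true angle of attack are hypothetical.

```python
from itertools import product

DISAGREE_LIMIT_DEG = 5.5  # from Boeing's published description of the update


def old_policy_uses_bad_sensor(failed_side: str, active_side: str) -> bool:
    """Unpatched MCAS reads only the AoA sensor on the active FCC's side."""
    return failed_side == active_side


def new_policy_mcas_available(left_aoa: float, right_aoa: float) -> bool:
    """Patched MCAS is inhibited when the sensors disagree by 5.5 degrees or more."""
    return abs(left_aoa - right_aoa) < DISAGREE_LIMIT_DEG


# Enumerate the equally likely combinations of (failed sensor, active FCC).
cases = list(product("LR", repeat=2))
p_bad = sum(old_policy_uses_bad_sensor(f, a) for f, a in cases) / len(cases)
print(p_bad)  # 0.5

true_aoa = 5.0  # hypothetical true angle of attack, degrees
for bias in (20.0, 5.0, 4.0):
    # One sensor reads high by `bias`; is MCAS still allowed to act?
    print(bias, new_policy_mcas_available(true_aoa + bias, true_aoa))
# 20.0 False
# 5.0 True
# 4.0 True
```

A 20-degree fault like the one on Lion Air 610 trips the check, but a bias of 4 or 5 degrees passes it, which is exactly the case the question above leaves open.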

The broken sensor

Although a faulty sensor should not bring down an airplane, I would still like to know what went wrong with the AoA vane.

It’s no surprise that AoA sensors can fail. They are mechanical devices operating in a harsh environment: winds exceeding 500 miles per hour and temperatures below –40 degrees.

A common failure mode is a stuck vane, often caused by ice (despite a built-in de-icing heater). But a seized vane would produce a constant output, regardless of the real angle of attack, which is not the symptom seen in Flight 610. The flight data recorder shows small fluctuations in the signals from both the left and the right instruments. Furthermore, the jiggles in the two curves are closely aligned, suggesting they are both tracking the same movements of the aircraft. In other words, the left-hand sensor appears to be functioning; it’s just giving measurements offset by a constant deviation of roughly 20 degrees.
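The reasoning in the paragraph above (a stuck vane gives a flat trace, while a vane that still follows the airflow gives wiggles that match the other side) can be written as a rough classifier. This is a hypothetical heuristic in Python, not a method used by the investigators; the thresholds `flat_tol` and `corr_min` are arbitrary, and the sample traces are synthetic rather than values read from the flight data recorder.

```python
from statistics import mean, pstdev


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation of two equal-length traces."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)
    sx, sy = pstdev(xs), pstdev(ys)
    if sx == 0 or sy == 0:
        return 0.0  # a flat trace has no fluctuations to correlate
    return cov / (sx * sy)


def diagnose(suspect, reference, flat_tol=0.05, corr_min=0.9):
    """Hypothetical heuristic comparing a suspect AoA trace with the opposite sensor."""
    if pstdev(suspect) < flat_tol:
        return "stuck: output ignores the airflow"
    offset = mean(s - r for s, r in zip(suspect, reference))
    if pearson(suspect, reference) >= corr_min:
        return f"tracking, offset {offset:+.1f} deg"
    return "erratic: fluctuations do not match the other side"


# Synthetic traces in degrees, for illustration only.
right = [5.0, 5.4, 4.8, 5.9, 5.2, 4.6]
offset_left = [a + 20.0 for a in right]
stuck_left = [22.0] * len(right)

print(diagnose(offset_left, right))  # tracking, offset +20.0 deg
print(diagnose(stuck_left, right))   # stuck: output ignores the airflow
```

The Lion Air left sensor behaves like `offset_left`; a flat, implausibly high trace behaves more like `stuck_left`.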

Is there some other failure mode that might produce the observed offset? Sure: Just bend the vane by 20 degrees. Maybe a catering truck or an airport jetway blundered into it. Another creative thought is that the sensor might have been installed wrong, with the entire unit rotated by 20 degrees. Several writers on a website called the Professional Pilots Rumour Network explored this possibility, but they ultimately concluded it was impossible. The manufacturer, doubtless aware of the risk, placed the mounting screws and locator pins asymmetrically, so the unit will only go into the hull opening one way.

You might get the same effect through an assembly error during the manufacture of the sensor. The vane could be incorrectly attached to the shaft, or else the internal transducer that converts angular position into an electrical signal might be mounted wrong. Did the designers also ensure that such mistakes are impossible? I don’t know; I haven’t been able to find any drawings or photographs of the sensor’s innards.

Looking for other ideas about what might have gone wrong, I made a quick, scattershot survey of FAA airworthiness directives that call for servicing or replacing AoA sensors. I found dozens of them, including several that discuss the same sensor installed on the 737 MAX (the Rosemount 0861). But none of the reports I read describes a malfunction that could cause a consistent 20-degree error.

For a while I thought that the fault might lie not in the sensor itself but farther along the data path. It could be something as simple as a bad cable or connector. Signals from the AoA sensor go to the Air Data and Inertial Reference Unit (ADIRU), where the sine and cosine components are combined and digitized to yield a number representing the measured angle of attack. The ADIRU also receives inputs from other sensors, including the pitot tubes for measuring airspeed and the static ports for air pressure. And it houses the gyroscopes and accelerometers of an inertial guidance system, which can keep track of aircraft motion without reference to external cues. (There’s a separate ADIRU for each side of the airplane.) Maybe there was a problem with the digitizer—a stuck bit rather than a stuck vane.
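Recovering an angle from a pair of sine and cosine signals is a standard trick, and a short Python sketch shows why it bears on the fixed-offset puzzle. The two functions are hypothetical stand-ins: `resolver_signals` imitates an idealized transducer, with a `mount_error_deg` parameter representing a vane or transducer fixed to the shaft at the wrong angle, and `decode_angle` combines the components with `atan2`. A mounting error of that kind shifts every reading by the same amount while leaving the wiggles intact, which is the signature seen on Flight 610.

```python
import math


def resolver_signals(vane_deg: float, mount_error_deg: float = 0.0) -> tuple[float, float]:
    """Idealized sine/cosine outputs of an angle transducer (hypothetical model).

    mount_error_deg rotates the whole measurement, as a misassembled vane would.
    """
    theta = math.radians(vane_deg + mount_error_deg)
    return math.sin(theta), math.cos(theta)


def decode_angle(sin_v: float, cos_v: float) -> float:
    """Combine the two components into one angle; atan2 depends on their ratio,
    so a common change in signal amplitude does not move the result."""
    return math.degrees(math.atan2(sin_v, cos_v))


for true_aoa in (4.0, 6.0, 5.0):
    good = decode_angle(*resolver_signals(true_aoa))
    bad = decode_angle(*resolver_signals(true_aoa, mount_error_deg=20.0))
    print(f"{good:.1f} {bad:.1f}")  # bad is always good + 20.0
```

An error introduced this way would already be present in the raw voltages, upstream of the ADIRU; the sketch says nothing about whether that is what actually happened.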

Further information has undermined this idea. For one thing, the AoA sensor removed by the Lion Air maintenance crew on October 27 is now in the hands of investigators. According to news reports, it was “deemed to be defective,” though I’ve heard no hint of what the defect might be. Also, it turns out that one element of the control system, the Stall Management and Yaw Damper (SMYD) computer, receives the raw sine and cosine voltages directly from the sensor, not a digitized angle calculated by the ADIRU. It is the SMYD that controls the stick-shaker function. On both the Lion Air and the Ethiopian flights the stick shaker was active almost continuously, so those undigitized sine and cosine voltages must have been indicating a high angle of attack. In other words the error already existed before the signals reached the ADIRU.

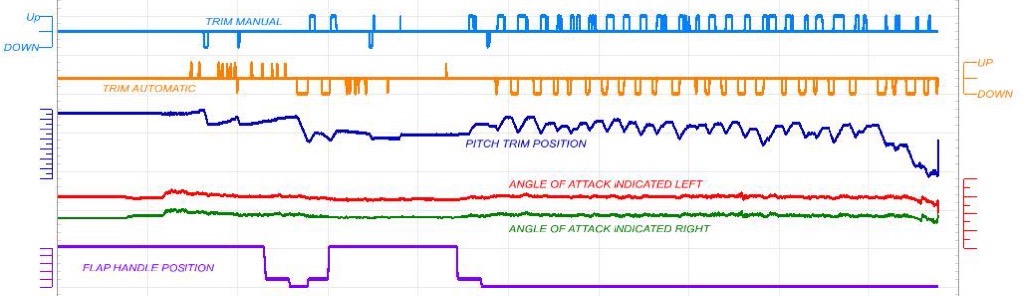

I’m still stumped by the fixed angular offset in the Lion Air data, but the question now seems a little less important. The release of the preliminary report on Ethiopian Flight 302 shows that the left-side AoA sensor on that aircraft also failed badly, but in a way that looks totally different. Here are the relevant traces from the flight data recorder:

The readings from the AoA sensors are the uppermost lines, red for the left sensor and blue for the right. At the left edge of the graph they differ somewhat when the airplane has just begun to move, but they fall into close coincidence once the roll down the runway has built up some speed. At takeoff, however, they suddenly diverge dramatically, as the left vane begins reading an utterly implausible 75 degrees nose up. Later it comes down a few degrees but otherwise shows no sign of the ripples that would suggest a response to airflow. At the very end of the flight there are some more unexplained excursions.

By the way, in this graph the light blue trace of automatic trim commands offers another clue to what might have happened in the last moments of Flight 302. Around the middle of the graph, the STAB TRIM switches were moved to CUTOUT, with the result that an automatic nose-down command had no effect on the stabilizer position. But at the far right, another automatic nose-down command does register in the trim-position trace, suggesting that the switches may have been returned to NORMAL.

Still more stumpers

There’s so much I still don’t understand.

Puzzle 1. If the Lion Air and Ethiopian accidents were both caused by faulty AoA sensors, then there were three parts with similar defects in brand new aircraft (including the replacement sensor installed by Lion Air on October 27). A recent news item says the replacement was not a new part but one that had been refurbished by a Florida shop called XTRA Aerospace. This fact offers us somewhere else to point the accusatory finger, but presumably the two sensors installed by Boeing were not retreads, so XTRA can’t be blamed for all of them.

There are roughly 400 MAX aircraft in service, with 800 AoA sensors. Is a failure rate of 3 out of 800 unusual or unacceptable? Does that judgment depend on whether or not it’s the same defect in all three cases?
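The raw rate is easy: 3 out of 800 is 0.375 percent. Judging whether that is unusual requires a baseline failure probability, which the available reports don’t supply. The Python sketch below therefore treats the baseline `p` as an unknown, hypothetical input and asks how likely three or more failures among 800 sensors would be if each failed independently with probability `p`. It ignores flight hours, sensor age, and the refurbished replacement part, and it cannot address whether the three failures share a cause.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p): chance of k or more failures among n parts."""
    return 1.0 - sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k))


observed_rate = 3 / 800
print(observed_rate)  # 0.00375

# Hypothetical baseline per-sensor failure probabilities over the same period.
for p in (1e-4, 1e-3, 1e-2):
    print(p, round(prob_at_least(3, 800, p), 4))
```

If the plausible baseline were around one in ten thousand, three failures would be very surprising; at one in a hundred they would be expected. The answer hinges on a number the public record does not yet provide.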

Puzzle 2. Let’s look again at the traces for pitch trim and angle of attack in the Lion Air 610 data. The conflicting manual and automatic commands in the second half of the flight have gotten lots of attention, but I’m also baffled by what was going on in the first few minutes.

During the roll down the runway, the pitch trim system was set near its maximum pitch-up position (dark blue line). Immediately after takeoff, the automatic trim system began calling for further pitch-up movement, and the stabilizer probably reached its mechanical limit. At that point the pilots manually trimmed it in the pitch-down direction, and the automatic system replied with a rapid sequence of up adjustments. In other words, there was already a tug-of-war underway, but the pilots and the automated controls were pulling in directions opposite to those they would choose later on. All this happened while the flaps were still deployed, which means that MCAS could not have been active. Some other element of the control system must have been issuing those automatic pitch-up orders. Deepening the mystery, the left side AoA sensor was already feeding its spurious high readings to the left-side flight control computer. If the FCC was acting on that data, it should not have been commanding nose-up trim.

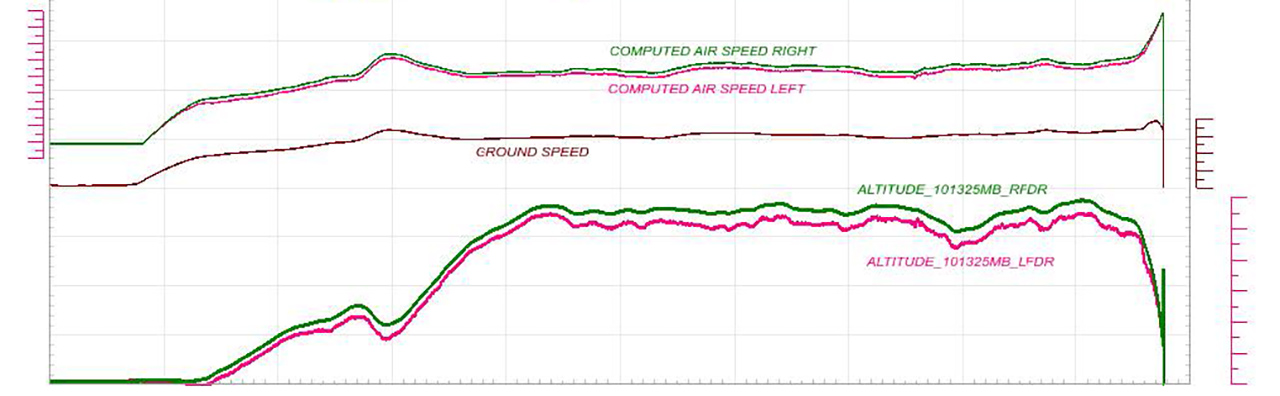

Puzzle 3. The AoA readings are not the only peculiar data in the chart from the Lion Air preliminary report. Here are the altitude and speed traces:

The left-side altitude readings (red) are low by at least a few hundred feet. The error looks like it might be multiplicative rather than additive, perhaps 10 percent. The left and right computed airspeeds also disagree, although the chart is too squished to allow a quantitative comparison. It was these discrepancies that initially upset the pilots of Flight 610; they could see them on their instruments. (They had no angle of attack indicators in the cockpit, so that conflict was invisible to them.)
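Whether an error is additive or multiplicative can be checked by fitting both models to paired readings and seeing which leaves smaller residuals. Here is a minimal Python sketch; the altitude values are synthetic, built with a 10 percent shortfall to mirror the guess above, not digitized from the report’s chart.

```python
from statistics import mean


def fit_error_models(left: list[float], right: list[float]):
    """Least-squares fits of left ≈ right + c (additive) and left ≈ k * right (multiplicative).

    Returns (c, k, rss_additive, rss_multiplicative).
    """
    c = mean(l - r for l, r in zip(left, right))
    k = sum(l * r for l, r in zip(left, right)) / sum(r * r for r in right)
    rss_add = sum((l - (r + c)) ** 2 for l, r in zip(left, right))
    rss_mul = sum((l - k * r) ** 2 for l, r in zip(left, right))
    return c, k, rss_add, rss_mul


# Synthetic data: right-side altitude in feet, left side reading 10 percent low.
right_alt = [1000.0, 2000.0, 3500.0, 5000.0]
left_alt = [0.9 * h for h in right_alt]

c, k, rss_add, rss_mul = fit_error_models(left_alt, right_alt)
print(f"scale {k:.3f}")   # scale 0.900
print(rss_mul < rss_add)  # True
```

With real data one would first have to digitize both traces from the chart, and over a narrow band of altitudes the two models become hard to tell apart.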

Altitude, airspeed, and angle of attack are all measured by different sensors. Could they all have gone haywire at the same time? Or is there some common point of failure that might explain all the weird behavior? In particular, is it possible a single wonky AoA sensor caused all of this havoc? My guess is yes. The sensors for altitude and airspeed and even temperature are influenced by angle of attack. The measured speed and pressure are therefore adjusted to compensate for this confounding variable, using the output of the AoA sensor. That output was wrong, and so the adjustments allowed one bad data stream to infect all of the air data measurements.
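The contamination mechanism can be sketched in a few lines of Python. Pressure altitude is computed from static pressure with the standard-atmosphere relation used by the U.S. National Weather Service; the static-source correction, by contrast, is a hypothetical linear function of angle of attack with an invented coefficient (`SSEC_HPA_PER_DEG`), chosen only to show the direction of the effect. Real correction schedules are aircraft-specific; this is not one of them.

```python
def pressure_altitude_ft(static_hpa: float) -> float:
    """Standard-atmosphere pressure altitude in feet from static pressure in hPa."""
    return 145366.45 * (1.0 - (static_hpa / 1013.25) ** 0.190284)


# Hypothetical static-source error correction: hPa added per degree of AoA.
# Invented for illustration; not a 737 value.
SSEC_HPA_PER_DEG = 0.3


def corrected_altitude_ft(measured_hpa: float, aoa_deg: float) -> float:
    # The AoA reading feeds the pressure correction, so a bad AoA skews altitude.
    return pressure_altitude_ft(measured_hpa + SSEC_HPA_PER_DEG * aoa_deg)


measured = 850.0  # same raw static pressure on both sides (hPa)
right = corrected_altitude_ft(measured, aoa_deg=5.0)
left = corrected_altitude_ft(measured, aoa_deg=25.0)  # 20-degree AoA error
print(round(right), round(left), round(right - left))
```

With identical pressure measurements, the side fed the bad AoA value reports a different altitude; the same path would carry the error into computed airspeed. The sign and size of the discrepancy here depend entirely on the invented coefficient.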

Man or machine

Six months ago, I was writing about another disaster caused by an out-of-control control system. In that case the trouble spot was a natural gas distribution network in Massachusetts, where a misconfigured pressure-regulating station caused fires and explosions in more than 100 buildings, with one fatality and 20 serious injuries. I lamented: “The special pathos of technological tragedies is that the engines of our destruction are machines that we ourselves design and build.”

In a world where defective automatic controls are blowing up houses and dropping aircraft out of the sky, it’s hard to argue for more automation, for adding further layers of complexity to control systems, for endowing machines with greater autonomy. Public sentiment leans the other way. Like President Trump, most of us trust pilots more than we trust computer scientists. We don’t want MCAS on the flight deck. We want Chesley Sullenberger III, the hero of US Airways Flight 1549, who guided his crippled A320 to a dead-stick landing in the Hudson River and saved all 155 souls on board. No amount of cockpit automation could have pulled off that feat.

Nevertheless, a cold, analytical view of the statistics suggests a different reaction. The human touch doesn’t always save the day. On the contrary, pilot error is responsible for more fatal crashes than any other cause. One survey lists pilot error as the initiating event in 40 percent of fatal accidents, with equipment failure accounting for 23 percent. No one is (yet) advocating a pilotless cockpit, but at this point in the history of aviation technology that’s a nearer prospect than a computer-free cockpit.

The MCAS system of the 737 MAX represents a particularly awkward compromise between fully manual and fully automatic control. The software is given a large measure of responsibility for flight safety and is even allowed to override the decisions of the pilot. And yet when the system malfunctions, it’s entirely up to the pilot to figure out what went wrong and how to fix it—and the fix had better be quick, before MCAS can drive the plane into the ground.

Two lost aircraft and 346 deaths are strong evidence that this design was not a good idea. But what to do about it? Boeing’s plan is a retreat from automatic control, returning more responsibility and authority to the pilots:

- Flight control system will now compare inputs from both AOA sensors. If the sensors disagree by 5.5 degrees or more with the flaps retracted, MCAS will not activate. An indicator on the flight deck display will alert the pilots.

- If MCAS is activated in non-normal conditions, it will only provide one input for each elevated AOA event. There are no known or envisioned failure conditions where MCAS will provide multiple inputs.

- MCAS can never command more stabilizer input than can be counteracted by the flight crew pulling back on the column. The pilots will continue to always have the ability to override MCAS and manually control the airplane.
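The three published rules can be combined into a small state machine. The Python sketch below is one reading of Boeing’s bullet points, not Boeing’s software: only the 5.5-degree disagreement limit comes from the announcement. The activation threshold, the size of the single trim input, the use of the average of the two sensors, and the way an “elevated AOA event” begins and ends are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass

AOA_DISAGREE_LIMIT_DEG = 5.5  # from Boeing's announcement


@dataclass
class PatchedMcasSketch:
    activation_deg: float = 14.0  # hypothetical AoA threshold for an "elevated AOA event"
    input_units: float = 2.5      # hypothetical size of the one permitted trim input,
                                  # assumed small enough for the crew to counter with the column
    fired_this_event: bool = False

    def step(self, left_aoa: float, right_aoa: float, flaps_up: bool) -> float:
        """Return the nose-down trim to command now (0.0 means none)."""
        if not flaps_up:
            self.fired_this_event = False
            return 0.0
        if abs(left_aoa - right_aoa) >= AOA_DISAGREE_LIMIT_DEG:
            return 0.0  # sensors disagree: MCAS does not activate (cockpit alert not modelled)
        aoa = (left_aoa + right_aoa) / 2  # hypothetical way of combining the two readings
        if aoa < self.activation_deg:
            self.fired_this_event = False  # event over; re-arm for the next one
            return 0.0
        if self.fired_this_event:
            return 0.0  # at most one input per elevated-AoA event
        self.fired_this_event = True
        return self.input_units


mcas = PatchedMcasSketch()
outputs = [mcas.step(16.0, 16.5, True) for _ in range(3)]
print(outputs)                                            # [2.5, 0.0, 0.0]
print(mcas.step(30.0, 8.0, True))                         # 0.0
print(mcas.step(5.0, 5.0, True), mcas.step(16.0, 16.0, True))  # 0.0 2.5
```

With one sensor reading 30 degrees and the other 8, the sketch stays silent whatever the true angle of attack, which is the 100 percent case discussed earlier. A bias smaller than 5.5 degrees would still shift the averaged value that decides whether MCAS fires.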

A statement from Dennis Muilenburg, Boeing’s CEO, says the software update “will ensure accidents like that of Lion Air Flight 610 and Ethiopian Airlines Flight 302 never happen again.” I hope that’s true, but what about the accidents that MCAS was designed to prevent? I also hope we will not be reading about a 737 MAX that stalled and crashed because the pilots, believing MCAS was misbehaving, kept hauling back on the control yokes.

If Boeing were to take the opposite approach—not curtailing MCAS but enhancing it with still more algorithms that fiddle with the flight controls—the plan would be greeted with hoots of outrage and derision. Indeed, it seems like a terrible idea. MCAS was installed to prevent pilots from wandering into hazardous territory. A new supervisory system would keep an eye on MCAS, stepping in if it began acting suspiciously. Wouldn’t we then need another custodian to guard the custodians, ad infinitum? Moreover, with each extra layer of complexity we get new side effects and unintended consequences and opportunities for something to break. The system becomes harder to test, and impossible to prove correct.

Those are serious objections, but the problem being addressed is also serious.

Suppose the 737 MAX didn’t have MCAS but did have a cockpit indicator of angle of attack. On the Lion Air flight, the captain would have felt the stick-shaker warning him of an incipient stall and would have seen an alarmingly high angle of attack on his instrument panel. His training would have impelled him to do the same thing MCAS did: Push the nose down to get the wings working again. Would he have continued pushing it down until the plane crashed? Surely not. He would have looked out the window, he would have cross-checked the instruments on the other side of the cockpit, and after some scary moments he would have realized it was a false alarm. (In darkness or low visibility, where the pilot can lose track of the horizon, the outcome might be worse.)

I see two lessons in this hypothetical exercise. First, erroneous sensor data is dangerous, whether the airplane is being flown by a computer or by Chesley Sullenberger. A prudently designed instrument and control system would take steps to detect (and ideally correct) such errors. At the moment, redundancy is the only defense against these failures—and in the unpatched version of MCAS even that protection is compromised. It’s not enough. One key to the superiority of human pilots is that they exercise judgment and sometimes skepticism about what the instruments tell them. That kind of reasoning is not beyond the reach of automated systems. There’s plenty of information to be exploited. For example, inconsistencies between AoA sensors, pitot tubes, static pressure ports, and air temperature probes not only signal that something’s wrong but can offer clues about which sensor has failed. The inertial reference unit provides an independent check on aircraft attitude; even GPS signals might be brought to bear. Admittedly, making sense of all this data and drawing a valid conclusion from it—a problem known as sensor fusion—is a major challenge.
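A toy version of that cross-checking is sketched below in Python. It relies on a textbook kinematic relation: in wings-level flight through still air with no sideslip, angle of attack is approximately pitch angle minus flight-path angle, and the flight-path angle can be estimated from vertical speed and true airspeed. The tolerance and function names are hypothetical. The sketch also glosses over a real difficulty: true airspeed is itself an air-data product and can be corrupted by the same bad AoA value, so a serious design would need an independent source, such as inertial or GPS velocities combined with a wind estimate.

```python
import math


def inertial_aoa_deg(pitch_deg: float, vertical_speed_fps: float,
                     true_airspeed_fps: float) -> float:
    """alpha ≈ theta - gamma, assuming wings level, still air, and no sideslip."""
    gamma_deg = math.degrees(math.asin(vertical_speed_fps / true_airspeed_fps))
    return pitch_deg - gamma_deg


def suspect_vane(left_deg: float, right_deg: float, reference_deg: float,
                 tol_deg: float = 3.0):
    """Vote: which AoA vane disagrees with the independent estimate? (hypothetical tolerance)"""
    left_ok = abs(left_deg - reference_deg) <= tol_deg
    right_ok = abs(right_deg - reference_deg) <= tol_deg
    if left_ok and right_ok:
        return None          # all three sources agree
    if right_ok:
        return "left"
    if left_ok:
        return "right"
    return "unresolved"      # both vanes disagree, or the reference itself is wrong


KNOT_FPS = 1.68781  # feet per second in one knot
ref = inertial_aoa_deg(pitch_deg=10.0, vertical_speed_fps=1000 / 60,
                       true_airspeed_fps=250 * KNOT_FPS)
print(round(ref, 1))                 # 7.7
print(suspect_vane(27.7, 7.9, ref))  # left
```

With three sources, a single bad vane can be outvoted; with two, as in the patched MCAS, disagreement can only be detected, not attributed.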

Second, a closed-loop controller has yet another source of information: an implicit model of the system being controlled. If you change the angle of the horizontal stabilizer, the state of the airplane is expected to change in known ways—in angle of attack, pitch angle, airspeed, altitude, and in the rate of change in all these parameters. If the result of the control action is not consistent with the model, something’s not right. To persist in issuing the same commands when they don’t produce the expected results is not reasonable behavior. Autopilots include rules to deal with such situations; the lower-level control laws that run in manual-mode flight could incorporate such sanity checks as well.
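A minimal Python sketch of such a sanity check follows. It compares each nose-down trim command with the change in sensed angle of attack that a hypothetical linear model predicts, and withholds further commands after repeated mismatches. The gain, the acceptance fraction, and the two-strike limit are invented for illustration; a real control law would need a far better model of the airplane and of what the pilot is doing with the column.

```python
class TrimCommandSupervisor:
    """Hypothetical supervisor: stop repeating a command that isn't working.

    Sign convention (assumed): negative trim units are nose-down and should
    produce a negative change in angle of attack.
    """

    def __init__(self, gain_deg_per_unit: float = 1.5, min_fraction: float = 0.3,
                 max_misses: int = 2) -> None:
        self.gain = gain_deg_per_unit  # assumed AoA change per unit of trim
        self.min_fraction = min_fraction
        self.max_misses = max_misses
        self.misses = 0

    def consistent(self, trim_units: float, aoa_change_deg: float) -> bool:
        predicted = self.gain * trim_units
        if predicted == 0.0:
            return True
        # Same direction, and at least a fraction of the predicted size.
        return aoa_change_deg / predicted >= self.min_fraction

    def record(self, trim_units: float, aoa_change_deg: float) -> bool:
        """Log one command and its observed effect; return True if further commands are allowed."""
        if self.consistent(trim_units, aoa_change_deg):
            self.misses = 0
        else:
            self.misses += 1
        return self.misses < self.max_misses


sup = TrimCommandSupervisor()
# Nose-down commands barely move the sensed AoA, as with a stuck vane
# or a pilot holding the nose up.
history = [sup.record(-2.5, -0.1) for _ in range(3)]
print(history)  # [True, False, False]
```

The check looks only at the direction and size of the response; a sensor with a constant offset that still tracks the airplane’s motion would pass it, so it would complement, not replace, cross-checks among independent sensors.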

I don’t claim to have the answer to the MCAS problem. And I don’t want to fly in an airplane I designed. (Neither do you.) But there’s a general principle here that I believe should be taken to heart: If an autonomous system makes life-or-death decisions based on sensor data, it ought to verify the validity of the data.

Update 2019-04-11

Boeing continues to insist that MCAS is “not a stall-protection function and not a stall-prevention function. It is a handling-qualities function. There’s a misconception it is something other than that.” This statement comes from Mike Sinnett, who is vice president of product development and future airplane development at Boeing; it appears in an Aviation Week article by Guy Norris published online April 9.

I don’t know exactly what “handling qualities” means in this context. To me the phrase connotes something that might affect comfort or aesthetics or pleasure more than safety. An airplane with different handling qualities would feel different to the pilot but could still be flown without risk of serious mishap. Is Sinnett implying something along those lines? If so—if MCAS is not critical to the safety of flight—I’m surprised that Boeing wouldn’t simply disable it temporarily, as a way of getting the fleet back in the air while they work out a permanent solution.

The Norris article also quotes Sinnett as saying: “The thing you are trying to avoid is a situation where you are pulling back and all of a sudden it gets easier, and you wind up overshooting and making the nose higher than you want it to be.” That situation, with the nose higher than you want it to be, sounds to me like an airplane that might be approaching a stall.

A story by Jack Nicas, David Gelles, and James Glanz in today’s New York Times offers a quite different account, suggesting that “handling qualities” may have motivated the first version of MCAS, but stall risks were part of the rationale for later beefing it up.

The system was initially designed to engage only in rare circumstances, namely high-speed maneuvers, in order to make the plane handle more smoothly and predictably for pilots used to flying older 737s, according to two former Boeing employees who spoke on the condition of anonymity because of the open investigations.

For those situations, MCAS was limited to moving the stabilizer—the part of the plane that changes the vertical direction of the jet—about 0.6 degrees in about 10 seconds.

It was around that design stage that the F.A.A. reviewed the initial MCAS design. The planes hadn’t yet gone through their first test flights.

After the test flights began in early 2016, Boeing pilots found that just before a stall at various speeds, the Max handled less predictably than they wanted. So they suggested using MCAS for those scenarios, too, according to one former employee with direct knowledge of the conversations.

Finally, another Aviation Week story by Guy Norris, published yesterday, gives a convincing account of what happened to the angle of attack sensor on Ethiopian Airlines Flight 302. According to Norris’s sources, the AoA vane was sheared off moments after takeoff, probably by a bird strike. This hypothesis is consistent with the traces extracted from the flight data recorder, including the strange-looking wiggles at the very end of the flight. I wonder if there’s hope of finding the lost vane, which shouldn’t be far from the end of the runway.

Very complete and cogent article. Maybe you aren’t “an aeronautical engineer, or an airframe mechanic, or a control theorist, or even a pilot.” (I am 2 or 3 of those). But you are obviously well versed and educated in the overall theories and applications.

Great article, thanks!

There is an interesting theory that I don’t think you mention anywhere. The theory is that, on the Ethiopian flight, after the stabilizer trim motor was disabled while the airplane was out of trim, the aerodynamic load on the jackscrew was too great for it to be movable by the copilot using their crank. The pilot was perhaps using their strength to pull the yoke back, which meant both that he was unavailable to help crank, and that the aerodynamic load was increased by the elevator directing airflow in opposition to the stabilizer.

There is an old “yo-yo maneuver” that stopped being mentioned in Boeing manuals decades ago that describes having to relieve load on the stabilizer before manually trimming, in this case by releasing the elevator and pushing the nose down even further, which I’m sure would have been very unattractive given their high airspeed and low altitude if the pilots even understood the procedure, which is no longer part of simulator training.

This may explain why the trim motor appears to have been re-enabled towards the end of the Ethiopian flight: because purely manual trim was impossible.

Any thoughts on these ideas?

This is all new to me. It sounds all too plausible. And if it should turn out to be true, it’s devastating. A mechanical backup that doesn’t work when you need it most — oof!

On the other hand, if the workaround procedure was once mentioned in the manuals and then disappeared, that ought to mean that Boeing had fixed the problem.

Followup: Just this morning the New York Times reports:

The NYTimes is probably understating it. Here’s the old 737 manual text:

Going faster than the max safe airspeed, having the stabilizer trimmed fully down and the elevator fully opposed to it seems like it might be the extreme case they’re thinking of. The NYTimes quote suggests that it would be possible with both pilots working together, but this old manual suggests to me that it was likely not.

I think we’re talking laws of physics against a mechanical system, so I doubt there’s been a fix. It seems instead that this kind of manual flight was simply no longer necessary, until Boeing added a new system that can leave you severely mistrimmed and then requires you to disable the motor that could fix that.

Very impressive, detailed analysis. There’s a neat, relatively recent book called “Complexification” that should be considered by all modern engineers. The thesis is that the algorithmic age is taking over and we face major, daunting complexity in many areas. I think you have worthwhile takeaways. It’s interesting to see that market pressure played some role in this.

Briefly talking this over with another engineer reminds me of the concept of “code coverage.” There are millions of lines of code in the control systems, but there is no “easy” way of knowing what code is getting exercised in even “supposedly extensive” tests. My feeling is that code coverage awareness and analysis could have averted some of this disaster. It’s extraordinary that many complex systems are deployed with sometimes far less than 100% code coverage in tests, though achieving 100% is probably unrealistic. But it has to be weighed. The number of lines of code needs to be seen as having a “sweet spot,” and this is a mostly alien concept in modern engineering. Too little code and the control system is not sophisticated; too much and you get lower code coverage and “potentially ugly surprises in PROD,” so to speak.

Code coverage is tricky to measure because you almost need another computer to monitor your algorithm, so tracking code coverage typically adds execution-time overhead. Building this monitoring into the chips themselves, with low execution overhead, is a possibility that has probably not been much explored.
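The overhead comes from the way software coverage tools usually work: a hook runs on every executed line and records it. As a rough desktop illustration only (not a model of any avionics toolchain or of the MAX software), here is a minimal Python sketch built on the interpreter’s `sys.settrace` hook, which is broadly the mechanism coverage.py has traditionally relied on. The names `collect_line_coverage` and `classify`, and the 1,000-foot threshold, are hypothetical, invented just to give the tracer something to watch.

```python
import sys
from types import CodeType
from typing import Any, Callable


def collect_line_coverage(func: Callable[..., Any], *args: Any) -> tuple[Any, list[int]]:
    """Run func(*args) under a trace hook and return (result, executed line offsets).

    Offsets are relative to the line of func's `def` statement.
    """
    target: CodeType = func.__code__
    executed: set[int] = set()

    def tracer(frame, event, arg):
        # Ignore every frame except the function under test.
        if frame.f_code is not target:
            return None
        if event == "line":
            # This bookkeeping runs once per executed line: the source of the overhead.
            executed.add(frame.f_lineno - target.co_firstlineno)
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        result = func(*args)
    finally:
        # Restore whatever tracer (if any) was active before.
        sys.settrace(previous)
    return result, sorted(executed)


# Hypothetical function under test. Its body has no comments so the offsets are
# easy to read: offset 2 is the "low" line, 4 the "ok" line, 6 the "descending" line.
def classify(altitude_ft: float, descending: bool) -> str:
    if altitude_ft < 1000:
        status = "low"
    else:
        status = "ok"
    if descending:
        status += ", descending"
    return status


if __name__ == "__main__":
    for args in [(500, False), (5000, True)]:
        result, lines = collect_line_coverage(classify, *args)
        print(args, "->", result, "| lines executed:", lines)
```

Neither input alone reaches every line. The first never executes offsets 4 and 6, and the second never executes offset 2. That is the kind of gap a coverage report is meant to expose.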

Another key concept is “happy path vs. sad path.” Most testing focuses on the happy path, while sad-path scenarios can be extremely challenging to simulate; they are basically edge cases. It’s possible MCAS was never very thoroughly tested because it’s mostly a sad-path mechanism for dealing with an edge case. Another relevant concept is if-then complexity. The amount of conditional logic should ideally be minimized, because x independent branches in sequence yield 2^x paths through the code to test, and that makes full coverage harder to achieve.
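To make the 2^x figure concrete: if a routine contains x independent if-then decisions one after another, each decision doubles the number of distinct paths, yet just two well-chosen inputs can take both sides of every individual branch. The short Python sketch here, with hypothetical helper names, counts both. It assumes fully independent branches; real control code with nested or correlated conditions has fewer feasible paths, so treat it as an upper-bound illustration rather than a model of MCAS.

```python
from itertools import product


def enumerate_paths(num_branches: int) -> list[tuple[bool, ...]]:
    """Every true/false combination for a sequence of independent if-statements.

    Each tuple is one path: element i says whether branch i was taken.
    """
    return list(product((False, True), repeat=num_branches))


def branch_covering_tests(num_branches: int) -> list[tuple[bool, ...]]:
    """Two inputs (all False, then all True) take both sides of every branch."""
    return [(False,) * num_branches, (True,) * num_branches]


if __name__ == "__main__":
    for x in (1, 5, 10, 16):
        paths = len(enumerate_paths(x))
        tests = len(branch_covering_tests(x))
        print(f"{x:2d} branches: {paths:6d} paths to test exhaustively, "
              f"{tests} inputs for full branch coverage")
```

The path count goes 2, 32, 1,024, 65,536 as x goes 1, 5, 10, 16, while the branch-coverage count stays at 2. That gap is why “100% coverage” can mean very different things depending on whether it counts lines, branches, or paths.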

Wow, great article. I read the whole thing and it really opens another point of view to the two crashes. Thanks again and I am hoping for more good articles like this one.

Hello,

You may already be aware that the original MCAS was designed as far back as 2012, when Ray Craig, chief pilot at Boeing (retired in 2015), flew the MAX in a simulator and experienced instability during high-speed evasive maneuvers (tight turns).

The MCAS of that time relied on accelerometers plus AoA.

MCAS was extended to low-speed behavior in 2016, after the maiden flight. I have not found details about the reasons.

BR

The NYT investigated the AoA sensor failure rate and found that, counting only reported incidents, there are 5 to 10 cases each year, plus an unknown number not reported to the authorities. A common cause is bird strikes.

Having read most of the articles on the topic, it seems to me that most (or all) Boeing engineers did the best they could in designing the MAX.

What Boeing missed was a kind of chief designer with an “opponent” point of view: someone not lost in the details but asking creative questions of the separate work teams. So many decisions were made in the MAX design that created a “chain of events” resulting in the catastrophic failure; had *any* of them been made the other way, the MAX could have become a success…

I believe that:

a) The 737-Max 8/9 will NEVER FLY AGAIN!

b) No one at the FAA will sign an AIRWORTHINESS CERTIFICATE NOW!

c) This will be the end of Boeing’s dominance in commercial aviation, and AIRBUS will be the top manufacturer for the foreseeable future!

ALL SELF INFLICTED AS WELL!

I was initially critical of a few points but withdrew my post after reading on. The article gives the impression that having the author in a professional discussion group would be both constructive and a pleasure.

Being a dinosaur has allowed me to read every night since October 18, many thousands of posts in all. I of course have some unanswered questions, but would like to make just a few points.

In the early stages, it’s suggested that there is a greater rotational force because of the new engine mount position. The MAX pylon is in fact shorter in the vertical axis. I think the cowling’s flat base is a more important contributor. The vectored thrust will presumably be more effective.

I don’t think the failure statistics are statistically significant. The possibility that the replacement part came from a high-time 737 NG is profoundly significant. One disturbing fact is that the AoA sensors suffered quite different failures. As bizarre as it seems, many of Boeing’s woes are the result of a horrific coincidence. Their culpability will no doubt come in part from the component’s ability, on its own, to cause a ‘Catastrophic’ event.

The seemingly wrong-way pulses are almost certainly STS (Speed Trim System) corrections. Remember, one of the MAX pilots said it was like STS running the wrong way.

The loaded stabilizer and the yo-yo response: has this not been significant in the past? At three degrees of separation, a PPRuNe writer describes the Toronto 707 crisis, in which the crew’s struggles saw the aircraft pass by a fuel station sign. I’d say they’d used up their last yo-yo.

One additional thought on possible stall characteristics - relates to possible turbulence from the MAX nacelles. The MAX engine nacelles are larger in diameter and mounted higher than on the NG; how big and how high is made evident in the side views of the MAX on the 737 Technical Site. Can it be (in addition to increased lift and nose-up pitch from their shape, forward location and size) that at higher angles of attack those nacelles also project into and obstruct the airstream that flows over the wing? Could such projection and obstruction create turbulence that causes portions of the wing behind the nacelle to stall? If so, is consequent loss of lift in those areas another factor that contributes to nose up pitch moment? And what would be the effect on nose up pitch - minimal, or significant and nonlinear? Especially at lower speeds such as in a turn on approach?

In your well-researched and exquisitely written article “The MAX Mess,” I found a point of disagreement.

Under the subtitle “Maxing out the 737” you write: “The MAX engines are pushed a few centimeters further forward and hang a few centimeters lower“.

But the MAX’s CFM LEAP-1B engines are larger in diameter than the NG’s CFM56-7s, so they need to hang higher to avoid scraping the ground (not lower). If they were simply raised, however, they would collide with the wing, so they had to be placed both higher and further forward. Of course, a higher mounting would reduce the resulting pitch torque, but the increased thrust of the LEAP-1B compensates for this.

But it is mainly the aerodynamic forces generated by the larger and further forward engine nacelles that generate the pitch-up torque from a certain angle of attack.

These two factors made the aircraft vulnerable to stalls and were the reason for the development of the unholy MCAS.

Do we really disagree about this? Or are we just measuring from different points of reference? The new LEAP-1B engine on the MAX is eight inches fatter than the CFM-56 engine of the previous model. It’s mounted so that the top is higher, but because of the larger diameter the bottom hangs down farther below the wing. If you measure from the ground up, the bottom is actually an inch higher, but that’s because the nose-wheel strut was lengthened by eight inches to improve ground clearance, making the whole airplane stand higher off the ground.

This picture, a comparison between a 737NG and a 737 MAX, shows:

Bottom of engine nacelle: same height above ground

Center of engine: MAX a bit higher

Top of engine nacelle: MAX clearly higher

https://leehamnews.com/wp-content/uploads/2018/11/737NG-vs-MAX-nacelles.png