I was doing some reading in the history of cryptography when I came upon a reference to a 1996 article by Solomon W. Golomb. Golomb always has something interesting to say, so I had to go off and find the paper, especially since the title was intriguing and a little mysterious: “On Factoring Jevons’ Number.” That would surely be William Stanley Jevons, 19th-century economist and statistician. I’ve run into Jevons before—he gets two chapters in Stephen Stigler’s Statistics on the Table—but I never knew he had a number.

Golomb’s paper lives behind a paywall, so I’ll quote at some length from the introductory paragraph:

In his book The Principles of Science: A Treatise on Logic and Scientific Method, written and published in the 1870′s, William S. Jevons observed that there are many situations where the “direct” operation is relatively easy, but the “inverse” operation is significantly more difficult. One example mentioned briefly is that enciphering (encryption) is easy while deciphering (decryption) is hard. In the same section of Chapter 7: Induction titled “Induction an Inverse Operation”, much more attention is devoted to the principle that multiplication of integers is easy, but finding the (prime) factors of the product is much harder. Thus, Jevons anticipated a key feature of the RSA algorithm for public key cryptography, though he certainly did not invent the concept of public key cryptography.

Golomb calls attention to the following passage from the Jevons book (p. 123 in the second edition, issued in 1874):

Can the reader say what two numbers multiplied together will produce the number 8,616,460,799? I think it unlikely that anyone but myself will ever know; for they are two large prime numbers, and can only be rediscovered by trying in succession a long series of prime divisors until the right one be fallen upon.

Obviously Jevons did not reckon on rapid progress in computing machinery. In our gigahertz age, even the crudest version of the trial-division algorithm finds the two prime factors of that 10-digit number in milliseconds. And of course Jevons was wrong in suggesting that trial division is the only method possible.

Golomb’s critique of Jevons’s claim is even harsher:

With a 10-place hand-held calculator, using only one memory location, and only the operations of subtraction, square and square-root, it took me less than six minutes to factor Jevons’ number…. This success led me to consider how easy or difficult it would have been for someone in the 1870′s, using only hand calculation, to have succeeded in finding this factorization. I concluded that at most a few hours, and quite possibly less than an hour, would have been sufficient.

Just in case anyone would like to put Golomb’s assertion to the test, I’ll refrain from giving the factors here. Sharpen your pencils!

Golomb was not the first to crack the Jevons number. Derrick Lehmer the elder presented a factorization at a 1903 meeting of the American Mathematical Society. He added this note to the published account of the talk: “I think that the number has been resolved before, but I do not know by whom.”

All of these results tend to make Jevons look a bit of a fool for so badly misjudging the difficulty of his factoring challenge. And his reputation is somewhat doubtful for another reason as well. Economists make fun of his theory that business cycles and sunspot cycles are causally connected. (Let us quietly ignore the close correlation between the most recent sunspot minimum and the financial unpleasantness of 2008–09.)

I’m not going to take it on myself to rehabilitate Jevons, but I can report that I’ve spent a few days browsing in his Principles of Science, and I find it charming. Jevons was one of those frightfully prolific Victorian scribblers. He lived only to age 46, but in his short life he published at least a dozen substantial works. Principles of Science runs to almost 800 pages. His aim in this volume is to assemble a complete mental toolkit for doing science, starting with logic (Jevons was an early champion of Boole’s Laws of Thought), and proceeding through probability, measurement, experiment and on to various aspects of theory-building (generalization, classification, analogy). A theme that runs through the whole narrative is the importance of inductive inference, which Jevons sees as an inverse problem—how to deduce causes from effects.

Consider the moment when Jevons was writing. Darwin’s Origin of Species had been published 15 years before. The debate over corpuscular and undulatory theories of light was in full cry. Phlogiston was long gone, but the luminferous ether was still permeating the universe. The apparent conflict between the antiquity of the earth and the measured flux of heat from the planet’s interior was a great puzzlement. (It would not be resolved for another 20 years, with the discovery of radioactivity.) Thermodynamics was beginning to emerge as a science. Reading a contemporary’s account of these developments offers a certain voyeuristic excitement. Jevons didn’t yet know how these stories were going to turn out.

There’s a fair amount of math in the book, but even more noteworthy is how much math isn’t in the book. In my perusal of the text, I found not one differential equation or even a use of elementary calculus. The square root of –1 appears just once. Instead of analysis, there is a strong emphasis on areas we would now call discrete mathematics, especially combinatorics and probability, as well as the interface between logic and the foundations of arithmetic. I was quite surprised by this slant. I tend to think of discrete math as a modern enthusiasm, inspired in part by the rise of computer science.

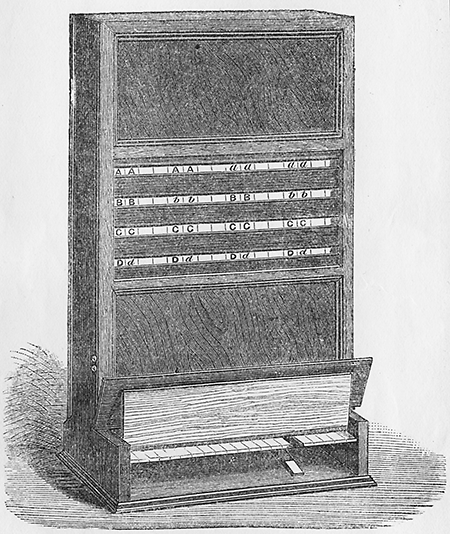

Maybe Jevons should be considered a modern in this respect. He certainly seems to enjoy counting and calculating things. His approach to symbolic logic begins with the enumeration of all possible propositions with a given number of terms. Elsewhere he estimates the number of English words with various combinations of letters, and the number of chemical compounds with a given number of elemental constituents. He performs 20,480 coin tosses to test the law of large numbers. He correctly calculates that 2^2^2^2^2 has 19,729 decimal digits. This is a person who would have been thrilled to have access to the sort of computing machinery we now take for granted. He built an early digital device of his own—a Logic Machine, sometimes called the Logical Piano, for evaluating Boolean formulas.

Jevons seems to have a particular fascination with permutations, combinations and factorials, bringing them up even in contexts where you might not expect them to have much bearing. For example, there’s this curious definition of Euler’s number:

At the base of all logarithmic theory is the mysterious natural constant commonly denoted by e, or ε, being equal to the infinite series $$1 + \frac{1}{1} + \frac{1}{1 \cdot 2} + \frac{1}{1 \cdot 2 \cdot 3} + \frac{1}{1 \cdot 2 \cdot 3 \cdot 4} + \cdots,$$ and thus consisting of the sum of the ratios between the numbers of permutations and combinations of 0, 1, 2, 3, 4, &c. things. [p. 330]

The formula \(e = \sum{1/n!}\) is standard, but what’s that about ratios of permutations and combinations? Do they have anything to do with the value of e? (I’m not even sure what ratios are being summed.)

Another instance:

It is worth noting that this Law of Error, abstruse though the subject may seem, is really founded upon the simplest principles. It arises entirely out of the difference between permutations and combinations, a subject upon which I may seem to have dwelt with unnecessary prolixity in previous pages. [p. 383]

Is the law of error—the normal distribution—entirely a matter of permutations and combinations?

And Jevons returns to the same topic a third time at the very end of his final chapter, as he is summing up his views on life, the universe and everything.

There formerly seemed to me to be something mysterious in the denominators of the binomial expansion (p. 190), which are reproduced in the natural constant ε, or $$1 + \frac{1}{1} + \frac{1}{1 \cdot 2} + \frac{1}{1 \cdot 2 \cdot 3} + \cdots$$ and in many results of mathematical analysis. I now perceive, as already explained (pp. 33, 160, 383), that they arise out of the fact that the relations of space do not apply to the logical conditions governing the numbers of combinations as contrasted to those of permutations. [p. 769]

I have pursued the internal references that Jevons cites here, but I confess that what he formerly found mysterious is still wholly mysterious to me. If it makes sense to anyone else, I hope you’ll enlighten me.

One final digression. The full text of Principles of Science is available on Google Books. I started out reading it there, but eventually decided that ink and paper still have some advantages when it comes to 800-page tomes. So, being lucky enough to have library privileges, I went spelunking through the dim stacks of the Widener Library at Harvard and borrowed a copy. I soon realized it was the very copy that had been scanned by Google Books. The evidence lies in the distinctive marginal notes made by the volume’s original owner. For example, on page 194 and 195 we find Jevons and his pencil-wielding reader disagreeing over the value of 2^2^2^2. (Jevons is correct; the penciled corrections are not.)

A signature and stamp at the front of the book reveal the identity of the marginalist. He was George F. Swain, who held the Gordon McKay professorship at Harvard from 1909 until his death in 1931. There’s a biography among the publications of the National Academy of Sciences (but the link seems wonky; I had to hunt down Google’s cached copy). Swain was a civil engineer (now an extinct species at Harvard) who seems to have signed off on just about every railroad bridge in the Commonwealth of Massachusetts and a few other bridges farther afield (e.g., the Golden Gate). But evidently Swain also had more abstract interests. In the NAS biography a former student comments: “Logical reasoning was constantly emphasized, and I well remember his earnest recommendation that we procure copies of Jevon’s [sic] ‘Logic’ and master its contents.”

Reading over the shoulder of Professor Swain adds one more layer to the palimpsest. At the bottom we have Jevons himself, deeply embedded in the world of late-19th-century science, speaking familiarly of his contemporaries Professor Maxwell and Dr. Joule and Mr. Venn. Then Swain enters with his itchy annotator’s pencil, calling attention to those passages that still resonated 50 years later—and showing occasional impatience with ideas that no longer seemed so compelling. And we have our own knowing, modern perspective, you and I and Solomon Golomb, with all our computational power tools.

Update 2012-09-15: As mentioned above, Derrick Lehmer’s 1903 paper on the Jevons number includes the footnote: “I think that the number has been resolved before, but I do not know by whom.” Josh Jordan may have now answered the “by whom” question. He searched Google Books for “8616460799″ and turned up an 1889 article in Nature by Charles J. Busk that presents a factoring algorithm and illustrates its application with the Jevons number. Busk claims that his scheme is “different from any previously tried,” but Jordan points out that in fact Busk has reinvented Fermat’s method, based on expressing an odd product of two factors as a2–b2 = (a+b)(a–b). Fermat described the method in 1643.

But perhaps we shouldn’t be too hard on Charles Busk. A little more Googling turns up an 1898 discussion of Busk’s ideas in Mathematical Questions and Solutions from the Educational Times (the MathOverflow of the 19th century). None of the other contributors mention the Fermat precedent either.

Maybe the thing about e is derangements, who are roughly 1/e as numerous as permutations?

I think this is what he means. Fix a large number m.

The number of “permutations” of 4 objects out of these m is m(m-1)(m-2)(m-3), while

the number of “combinations” of 4 objects out of these m is m(m-1)(m-2)(m-3)/4!.

Thus the ratio between the number of these “permutations” and “combinations” is 4!. In general, to use the notation I learnt at school but don’t like, nPk/nCk = k!.

Supporting evidence is found in his sentence that “the fact that the relations of space do not apply to the logical conditions governing the numbers of combinations as contrasted to those of permutations” — I think by “relations of space do not apply”, he means “order does not matter” (for combinations as contrasted with permutations).

About the Law of Error / the normal distribution being something that “arises entirely out of the difference between permutations and combinations”, my guess is that again he means “ignoring order”, and is thinking of something like the central limit theorem: in a sequence of N coin tosses, each particular sequence of results (HHTHTT, etc.) has probability 1/2^N, but if you ignore the order and consider only the “combination” (reporting it as the number of heads), you get the normal distribution (in the limit for large N).

This is fascinating. Javons may have been factually wrong in choosing a too small number, but his was morally right and quite prescient in understanding that factoring an integer is much hard than multiplying.

While the main ingredient in inventing public key cryptography was Diffie, Hellman and Merkle’s realization that this is a valid question to be asked, I believe it took some time until they realized that number theory could be a source for problems useful for it. My understanding is that it was Hellman’s colleague John Gill who suggested modular exponentiation as an easy to compute but hard to invert problem.

About that expansion of e, my guess is somewhat similar to Svat. Consider the definition \(e = \lim_{n\rightarrow\infty} (1+1/n)^n\). If you take some large \(n\) and look at \((1+1/n)^n = (1+1/n) (1+1/n) \cdots (1+1/n)\), and open up the parenthesis, then the term corresponding to taking \(k\) times the factor \(1/n\) and the rest of the times the factor \(1\) will be \(\binom{n}{k}/n^k\). In other words, its the ratio between choosing \(k\) unordered elements from a universe of size \(n\) without repetition and choosing \(k\) ordered elements from this universe with repetition. As \(n\) goes to infinity, the issue of repetitions is negligible, and hence this ratio becomes just the number of orderings of \(k\) elements, which is \(k!\), thus giving rise to the formula \(e = \sum_{k=1}^{\infty} \tfrac{1}{k!}\).

My thanks to the commenters, who have cleared away some cobwebs (in my mind, not Jevons’s).

The suggestion of a connection with derangements is really intriguing, but I’m afraid I can find no evidence in the text that Jevons was thinking along those lines.

Based on the peculiar set of operations he mentions, I suspect Golomb used Fermat’s factorization method.

@ Michael Lugo: Yes, Golomb did use the Fermat method. So did Derrick Lehmer in 1903.

A small typo: In the first excerpt from Jevons, you quote him as saying “…the numbers of permutations and combinations of 0, 1, 2, 4, &c. things.” This gave me pause, so I went and looked. You left out the “3.”

–Fixed. Brian

This essay is a wonderful mix of detail and historical sweep, beautifully written. Thank you.

Really nice article. I enjoyed reading all these titbits about Jevons which I never knew. Thanks.

What neither Jevons nor I anticipated was that I would do all my day-to-day calculation with Wolfram Alpha. Whether checking a sum or ‘factor 8,616,460,799′, the site is a marvel.

This is fascinating to me, because my mother’s maiden name was Jevons, and my late father once told me that we were related through her to a “Victorian economist who tried to tie the London stock market to sunspot cycles.”

This article gives me more information than I had about him, and I’m pleased to have found it.

A couple of (very!) inconsequential remarks on the factorization of the Jevons number.

Firstly, the article by Busk (“To find the Factors of any Proposed Number”): as the preview on Google Books is only available in the US (I guess), let me note that the article is also available on archive.org or in djvu format here (dolnośląska Biblioteka Cyfrowa / Lower Silesian Digital Library).

Secondly, the slides “Algorithmic Number Theory Before Computers” by Jeffrey Shallit contain on p. 6 a timeline, prepared in 2000, that mentions both the 1874 claim by Jevons that nobody else “will ever know” the factorization of the number, and the 1889 factorization by C. J. Busk. Looking at that is what reminded me of this post.

Jevons: “I now perceive, as already explained (pp. 33, 160, 383), that they arise out of the fact that the relations of space do not apply to the logical conditions governing the numbers of combinations as contrasted to those of permutations.”

Perhaps Jevons was making a philosophical statement here, with “space” referring to a three-dimensional space, which at any instant can only have one permutation or another, but not multiple combinations simultaneously.

I wasted an afternoon as an undergraduate in the early 1980s cracking Jevons’ number by hand with the help of a 19th century book of squares tables.

Doesn’t sound like a wasted afternoon to me!