Fourteen years ago I noted that disk drives were growing so fast I couldn’t fill them up. Between 1997 and 2002, storage capacity doubled every year, allowing me to replace a 3 gigabyte drive with a new 120 gigabyte model. I wrote:

Extrapolating the steep trend line of the past five years predicts a thousandfold increase in capacity by about 2012; in other words, today’s 120-gigabyte drive becomes a 120-terabyte unit.

Extending that same growth curve into 2016 would allow for another four doublings, putting us on the threshold of the petabyte disk drive (i.e., \(10^{15}\) bytes).

None of that has happened. The biggest drives in the consumer marketplace hold 2, 4, or 6 terabytes. A few 8- and 10-terabyte drives were recently introduced, but they are not yet widely available. In any case, 10 terabytes is only 1 percent of a petabyte. We have fallen way behind the growth curve.
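The extrapolation is nothing more than compound doubling, and a minimal sketch makes the size of the gap concrete. The function name `extrapolate_capacity` is my own invention, and the one-doubling-per-year rate is an assumption taken from the 1997–2002 trend described above.

```python
GB, TB, PB = 10**9, 10**12, 10**15

def extrapolate_capacity(start_bytes, years, doublings_per_year=1.0):
    """Capacity after `years` of steady exponential growth."""
    return start_bytes * 2 ** (years * doublings_per_year)

drive_2002 = 120 * GB
predicted_2012 = extrapolate_capacity(drive_2002, 10)  # ten doublings
predicted_2016 = extrapolate_capacity(drive_2002, 14)  # four more doublings

print(f"2012: {predicted_2012 / TB:.0f} TB")   # 123 TB
print(f"2016: {predicted_2016 / PB:.2f} PB")   # 1.97 PB
print(f"A 10 TB drive is {10 * TB / PB:.0%} of a petabyte")  # 1%
```

Ten doublings is a factor of 1,024, which is where the "thousandfold" figure comes from.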

The graph below extends an illustration that appeared in my 2002 article, recording growth in the areal density of disk storage, measured in bits per square inch:

The blue line shows historical data up to 2002 (courtesy of Edward Grochowski of the IBM Almaden Research Center). The bright green line represents what might have been, if the 1997–2002 trend had continued. The orange line shows the real status quo: We are three orders of magnitude short of the optimistic extrapolation. The growth rate has returned to the more sedate levels of the 1970s and 80s.

What caused the recent slowdown? I think it makes more sense to ask what caused the sudden surge in the 1990s and early 2000s, since that’s the kink in the long-term trend. The answers lie in the details of disk technology. More sensitive read heads developed in the 90s allowed information to be extracted reliably from smaller magnetic domains. Then there was a change in the geometry of the domains: the magnetic axis was oriented perpendicular to the surface of the disk rather than parallel to it, allowing more domains to be packed into the same surface area. As far as I know, there have been no comparable innovations since then, although a new writing technology is on the horizon. (It uses a laser to heat the domain, making it easier to change the direction of magnetization.)

As the pace of magnetic disk development slackens, an alternative storage medium is coming on strong. Flash memory, a semiconductor technology, has recently surpassed magnetic disk in areal density; Micron Technology reports a laboratory demonstration of 2.7 terabits per square inch. And Samsung has announced a flash-based solid-state drive (SSD) with 15 terabytes of capacity, larger than any mechanical disk drive now on the market. SSDs are still much more expensive than mechanical disks (by a factor of 5 or 10), but they offer higher speed and lower power consumption. They also offer the virtue of total silence, which I find truly golden.

Flash storage has replaced spinning disks in about a quarter of new laptops, as well as in all phones and tablets. It is also increasingly popular in servers (including the machine that hosts bit-player.org). Do disks have a future?

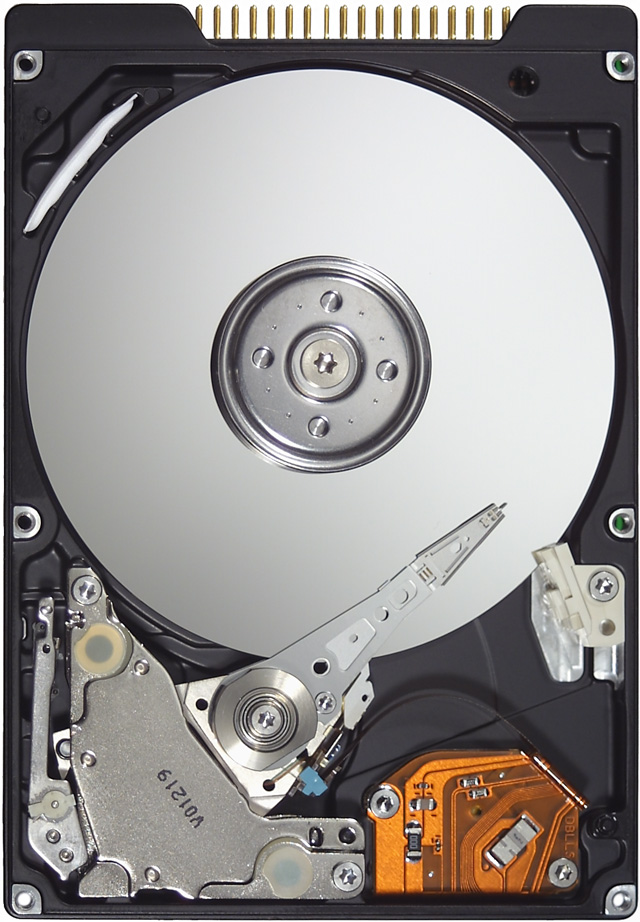

In my sentimental moments, I’ll be sorry to see spinning disks go away. They are such jewel-like marvels of engineering and manufacturing prowess. And they are the last link in a long chain of mechanical contrivances connecting us with the early history of computing—through Turing’s bombe and Babbage’s brass gears all the way back to the Antikythera mechanism two millennia ago. From here on out, I suspect, most computers will have no moving parts.

Maybe in a decade or two the spinning disk will make a comeback, the way vinyl LPs and vacuum tube amplifiers have. “Data that comes off a mechanical disk has a subtle warmth and presence that no solid-state drive can match,” the cognoscenti will tell us.

“You can never be too rich or too thin,” someone said. And a computer can never be too fast. But the demand for data storage is not infinitely elastic. If a file cabinet holds everything in the world you might ever want to keep, with room to spare, there’s not much added utility in having 100 or 1,000 times as much space.

In 2002 I questioned whether ordinary computer users would ever fill a 1-terabyte drive. Specifically, I expressed doubts that my own files would ever reach the million megabyte mark. Several readers reassured me that data will always expand to fill the space available. I could only respond “We’ll see.” Fourteen years later, I now have the terabyte drive of my dreams, and it holds all the words, pictures, music, video, code, and whatnot I’ve accumulated in a lifetime of obsessive digital hoarding. The drive is about half full. Or half empty. So I guess the outcome is still murky. I can probably fill up the rest of that drive, if I live long enough. But I’m not clamoring for more space.

One factor that has surely slowed demand for data storage is the emergence of cloud computing and streaming services for music and movies. I didn’t see that coming back in 2002. If you choose to keep some of your documents on Amazon or Azure, you obviously reduce the need for local storage. Moreover, offloading data and software to the cloud can also reduce the overall demand for storage, and thus the global market for disks or SSDs. A typical movie might take up 3 gigabytes of disk space. If a million people load a copy of the same movie onto their own disks, that’s 3 petabytes. If instead they stream it from Netflix, then in principle a single copy of the file could serve everyone.

In practice, Netflix does not store just one copy of each movie in some giant central archive. They distribute rack-mounted storage units to hundreds of internet exchange points and internet service providers, bringing the data closer to the viewer; this is a strategy for balancing the cost of storage against the cost of communications bandwidth. The current generation of the Netflix Open Connect Appliance has 36 disk drives of 8 terabytes each, plus 6 SSDs that hold 1 terabyte each, for a total capacity of just under 300 terabytes. (Even larger units are coming soon.) In the Netflix distribution network, files are replicated hundreds or thousands of times, but the total demand for storage space is still far smaller than it would be with millions of copies of every movie.
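The storage arithmetic in the last two paragraphs can be checked in a few lines. This is only a sketch under the figures quoted above (a 3-gigabyte movie, a million viewers, and the stated drive counts for one appliance); the variable names are mine.

```python
GB, TB, PB = 10**9, 10**12, 10**15

movie_size = 3 * GB
viewers = 1_000_000

# Everyone keeps a private copy of the same movie.
local_copies_total = movie_size * viewers

# One Open Connect Appliance, using the drive counts quoted above.
appliance_capacity = 36 * 8 * TB + 6 * 1 * TB

print(local_copies_total / PB)           # petabytes for a million local copies
print(appliance_capacity / TB)           # terabytes in one appliance
print(appliance_capacity // movie_size)  # 3 GB movies that fit in one appliance
```

Even with the appliance replicated a thousand times over, the total is a small multiple of what a million private copies of a modest catalog would require.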

A recent blog post by Eric Brewer, Google’s vice president for infrastructure, points out:

The rise of cloud-based storage means that most (spinning) hard disks will be deployed primarily as part of large storage services housed in data centers. Such services are already the fastest growing market for disks and will be the majority market in the near future. For example, for YouTube alone, users upload over 400 hours of video every minute, which at one gigabyte per hour requires more than one petabyte (1M GB) of new storage every day or about 100x the Library of Congress.

Thus Google will not have any trouble filling up petabyte drives. An accompanying white paper argues that as disks become a data center specialty item, they ought to be redesigned for this environment. There’s no compelling reason to stick with the present physical dimensions of 2½ or 3½ inches. Moreover, data-center disks have different engineering priorities and constraints. Google would like to see disks that maximize both storage capacity and input-output bandwidth, while minimizing cost; reliability of individual drives is less critical because data are distributed redundantly across thousands of disks.

The white paper continues:

An obvious question is why are we talking about spinning disks at all, rather than SSDs, which have higher [input-output operations per second] and are the “future” of storage. The root reason is that the cost per GB remains too high, and more importantly that the growth rates in capacity/$ between disks and SSDs are relatively close . . . , so that cost will not change enough in the coming decade.

If the spinning disk is remodeled to suit the needs and the economics of the data center, perhaps flash storage can become better adapted to the laptop and desktop environment. Most SSDs today are plug-compatible replacements for mechanical disk drives. They have the same physical form, they expect the same electrical connections, and they communicate with the host computer via the same protocols. They pretend to have a spinning disk inside, organized into tracks and sectors. The hardware might be used more efficiently if we were to do away with this charade.

Or maybe we’d be better off with a different charade: Instead of dressing up flash memory chips in the disguise of a disk drive, we could have them emulate random access memory. Why, after all, do we still distinguish between “memory” and “storage” in computer systems? Why do we have to open and save files, launch and shut down applications? Why can’t all of our documents and programs just be ever-present and always at the ready?

In the 1950s the distinction between memory and storage was obvious. Memory was the few kilobytes of magnetic cores wired directly to the CPU; storage was the rack full of magnetic tapes lined up along the wall on the far side of the room. Loading a program or a data file meant finding the right reel, mounting it on a drive, and threading the tape through the reader and onto the take-up reel. In the 1970s and 80s the memory/storage distinction began to blur a little. Disk storage made data and programs instantly available, and virtual memory offered the illusion that files larger than physical memory could be loaded all in one go. But it still wasn’t possible to treat an entire disk as if all the data were present in memory. The processor’s address space wasn’t large enough. Early Intel chips, for example, used 20-bit addresses, and therefore could not deal with code or data segments larger than \(2^{20} \approx 10^6\) bytes.

We live in a different world now. A 64-bit processor can potentially address \(2^{64}\) bytes of memory, or 16 exabytes (i.e., 16,000 petabytes). Most existing processor chips are limited to 48-bit addresses, but this still gives direct access to 281 terabytes. Thus it would be technically feasible to map the entire content of even the largest disk drive onto the address space of main memory.
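A short sketch of the address-space arithmetic, for readers who want to see the numbers. Note one wrinkle: the "16 exabytes" figure counts in binary units (\(2^{64} = 16 \times 2^{60}\)), whereas "281 terabytes" is a decimal count of \(2^{48}\) bytes. The helper `addressable_bytes` is a name I made up for illustration.

```python
def addressable_bytes(address_bits):
    """Number of distinct byte addresses an address of this width can name."""
    return 2 ** address_bits

largest_drive = 10 * 10**12  # the 10-terabyte drive mentioned earlier

for bits in (20, 48, 64):
    n = addressable_bytes(bits)
    print(f"{bits}-bit: {n:,} bytes = {n / 10**12:,.1f} TB, "
          f"room for {n // largest_drive:,} ten-terabyte drives")
```

Even the 48-bit space leaves room to map a couple of dozen of today's largest drives at once.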

In current practice, reading from or writing to a location in main memory takes a single machine instruction. Say you have a spreadsheet open; the program can get the value of any cell with a load instruction, or change the value with a store instruction. If the spreadsheet file is stored on disk rather than loaded into memory, the process is quite different, involving not single instructions but calls to input-output routines in the operating system. First you have to open the file and read it as a one-dimensional stream of bytes, then parse that stream to recreate the two-dimensional structure of the spreadsheet; only then can you access the cell you care about. Saving the file reverses these steps: The two-dimensional array is serialized to form a linear stream of bytes, then written back to the disk. Some of this overhead is unavoidable, but the complex conversions between serialized files on disk and more versatile data structures in memory could be eliminated. A modern processor could address every byte of data—whether in memory or storage—as if it were all one flat array. Disk storage would no longer be a separate entity but just another level in the memory hierarchy, turning what we now call main memory into a new form of cache. From the user’s point of view, all programs would be running all the time, and all documents would always be open.
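Today's operating systems already offer a partial approximation of this idea in the form of memory-mapped files. The sketch below uses Python's standard `mmap` and `struct` modules to read and write one "cell" of a file in place, without parsing or re-serializing the whole thing. The file layout (a fixed grid of 8-byte floats), the file name `sheet.bin`, and the helper `cell_offset` are all hypothetical; real spreadsheet formats are nothing like this, and the access still passes through the file system rather than a truly flat address space.

```python
import mmap
import os
import struct
import tempfile

CELL = struct.Struct("<d")   # one little-endian 8-byte float per cell
ROWS, COLS = 100, 26

def cell_offset(row, col):
    """Byte offset of a cell in the row-major grid."""
    return (row * COLS + col) * CELL.size

# Create a hypothetical "spreadsheet" file full of zeroed cells.
path = os.path.join(tempfile.mkdtemp(), "sheet.bin")
with open(path, "wb") as f:
    f.write(bytes(ROWS * COLS * CELL.size))

with open(path, "r+b") as f, mmap.mmap(f.fileno(), 0) as sheet:
    # "Store": overwrite one cell in place.
    CELL.pack_into(sheet, cell_offset(41, 2), 3.14)
    # "Load": read it back straight from the mapped bytes.
    (value,) = CELL.unpack_from(sheet, cell_offset(41, 2))
    sheet.flush()            # push the change through to the file

print(value)
```

The design choice that makes this work is the fixed-size record: because every cell sits at a computable offset, the on-disk form and the in-memory form are the same thing, and no conversion step is needed.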

Is this notion of merging memory and storage an attractive prospect or a nightmare? I’m not sure. There are some huge potential problems. For safety and sanity we generally want to limit which programs can alter which documents. Those rules are enforced by the file system, and they would have to be re-engineered to work in the memory-mapped environment.

Perhaps more troubling is the cognitive readjustment required by such a change in architecture. Do we really want everything at our fingertips all the time? I find it comforting to think of stored files as static objects, lying dormant on a disk drive, out of harm’s way; open documents, subject to change at any instant, require a higher level of alertness. I’m not sure I’m ready for a more fluid and frenetic world where documents are laid aside but never put away. But I probably said the same thing 30 years ago when I first confronted a machine capable of running multiple programs at once (anyone remember MultiFinder?).

The dichotomy between temporary memory and permanent storage is certainly not something built into the human psyche. I’m reminded of this whenever I help a neophyte computer user. There’s always an incident like this:

“I was writing a letter last night, and this morning I can’t find it. It’s gone.”

“Did you save the file?”

“Save it? From what? It was right there on the screen when I turned the machine off.”

Finally the big questions: Will we ever get our petabyte drives? How long will it take? What sorts of stuff will we keep on them when the day finally comes?

The last time I tried to predict the future of mass storage, extrapolating from recent trends led me far astray. I don’t want to repeat that mistake, but the best I can suggest is a longer-term baseline. Over the past 50 years, the areal density of mass-storage media has increased by seven orders of magnitude, from about \(10^5\) bits per square inch to about \(10^{12}\). That works out to about seven years for a tenfold increase, on average. If that rate is an accurate predictor of future growth, we can expect to go from the present 10 terabytes to 1 petabyte in about 15 years. But I would put big error bars around that number.
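Here is the long-term baseline worked out explicitly. It is a rough sketch that assumes drive capacity scales in step with areal density (that is, platter area and platter count stay fixed); the function name `years_to_reach` is mine.

```python
import math

years_elapsed = 50
density_start = 1e5   # bits per square inch, about 50 years ago
density_now = 1e12    # bits per square inch, today

orders_of_magnitude = math.log10(density_now / density_start)
years_per_tenfold = years_elapsed / orders_of_magnitude

def years_to_reach(current, target, years_per_tenfold):
    """Years needed to grow from current to target at the given rate."""
    return math.log10(target / current) * years_per_tenfold

wait = years_to_reach(10e12, 1e15, years_per_tenfold)  # 10 TB to 1 PB

print(f"{years_per_tenfold:.1f} years per tenfold increase")
print(f"{wait:.1f} years from 10 TB to 1 PB")
```

The result is a little over 14 years, which I have rounded to "about 15."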

I’m even less sure about how those storage units will be used, if in fact they do materialize. In 2002 my skepticism about filling up a terabyte of personal storage was based on the limited bandwidth of the human sensory system. If the documents stored on your disk are ultimately intended for your own consumption, there’s no point in keeping more text than you can possibly read in a lifetime, or more music than you can listen to, or more pictures than you can look at. I’m now willing to concede that a terabyte of information may not be beyond human capacity to absorb. But a petabyte? Surely no one can read a billion books or watch a million hours of movies.

This argument still seems sound to me, in the sense that the conclusion follows if the premise is correct. But I’m no longer so sure about the premise. Just because it’s my computer doesn’t mean that all the information stored there has to be meant for my eyes and ears. Maybe the computer wants to collect some data for its own purposes. Maybe it’s studying my habits or learning to recognize my voice. Maybe it’s gathering statistics from the refrigerator and washing machine. Maybe it’s playing go, or gossiping over some secret channel with the Debian machine across the alley.

We’ll see.

Heya,

One thing to be aware of, in 2011 there was a major flood in Thailand, where most of the world’s spinning hard drives are manufactured - http://spectrum.ieee.org/computing/hardware/the-lessons-of-thailands-flood

http://vrworld.com/2011/10/19/thailand-flooding-has-a-worldwide-impact-on-hard-disk-delivery/

http://www.theguardian.com/technology/2011/oct/25/thailand-floods-hard-drive-shortage

Sometimes nature trumps Moore’s law,

I really loved the article though!

Why not run a regression using your data to predict where we’ll be in 2020? It can do the job of putting the error bars around your forecast.

Minor typo “A 64-bit processor can poentially” -> “A 64-bit processor can potentially”

I would imagine virtual reality is going to up the ante of what storage we’ll need on our local computer. Just my guess, but if VR is really going to be viable there is going to have to be a free virtual world to continually explore and modify. Something like Google Earth on steroids. Being able to virtually fly through the Grand Canyon, or around Vienna, not to mention Everest and the Sistine Chapel (all at HD resolution) I suspect will suddenly make a petabyte of storage not seem so large at all.

Things to do with storage:

- record your life, every second of it (black mirror has an episode on this)

- local storage of your special files is often important to keep data free. centralized storage of information means it can be controlled

- higher def. There is always higher definition

- logs logs logs and more logs.

- backups of backups

Local storage is important for freedom, information, and importance, and it’s still substantially cheaper.

With NVMe memory, the memory / storage paradigm becomes even more muddled. Startup/boot isn’t necessary anymore except to reset, a computer should be always on as phones essentially have become…

There will always be higher definition, but how long will that matter?

Inevitably we’ll get to a point in which we surpass the resolution of the human eye; yes, it will be helpful to be able to have extreme zoom, but to our eyes the entire picture will eventually blur the lines between reality and virtuality.

A 1TB drive is insignificant compared to the needs for 1080p video, even more so for 4K video.

Even a single movie/documentary/etc production, can fill 1TB drive with ease, and if we’re talking edit-ready formats, like ProRes, even faster…

And if you’re a professional photographer shooting 30+ MP RAW images, that 1TB drive won’t even last you a year of shootings…

I shoot video and stills and capture long-term multi-camera timelapse in an industrial setting. My storage budget for a day video job is 200 GB; the source files alone from my timelapse cameras are the same figure, per month, and I have had as many as eight cameras running at the same time. Still photography storage demands are a trivial few tens of GB per shoot. There’s a nice new 50 TB storage array whirring away in my office, and a clone in a server room, and they will need to be upgraded soon.

So, some of us would *kill* for PB drives!

At one time, I worked for one of the “Big Three” networks, in the group that wrote the software for video encoding. We had a storage issue. We used up about a terabyte/day (and this was mostly low res - Not the stuff off the edit suite). We actually had to go to a FIFO purge, because the SAN we had was out of space - That was 6 years ago. Petabytes? Would love it, but again, not really for “personal” use.

One of the other places I worked for a short period of time stores a lot more than that - they want to store every FRAME of every movie the company ever did in full res (aka not MPG KeyFrame+diffs - every frame as key) - and they have oh, 75-80 years of movies they want to encode…

I don’t understand the logic of not wanting more books/music/whatever on your hard drive than you’d read in your lifetime. In the 1950s you might have had an unabridged dictionary on your shelf, with entries for a million words. You might look up a word once or twice a month, maybe 2000 lookups in a lifetime or 0.2% utilization. But the dictionary is still a perfectly legitimate thing. I still want a dictionary on my shelf or hard drive, not in the cloud where I’m reliant on an internet connection and my ISP/government/etc are profiling me by words I look up. Similarly I’m glad to be able to download Wikipedia, which is 80GB or so (highly compressed) in the full-history version but no pictures. If I get interested in the novels of Balzac (he wrote dozens of them), why wouldn’t I download a “complete works” collection and browse around in it, instead of picking them one at a time? If I had a PB of storage I’m sure I could fill it. My main worry about massive cheap storage is the amount of surveillance and retention it enables, not that there’s too few uses for it.

It’s hard to fill a petabyte (million GB) hard drive with downloads if the best ISP offering service where you live limits your household to 300 GB (Comcast) or, worse, 10 GB (Exede) per month.

Not hard if you generate your own data!

This is actually a really great point. Doing some back of the napkin math, 1PB is 8 billion megabits. Assuming a 60Mbps internet connection (higher than average, I know, but it’s what I get from my ISP), it would take 253 years to download the 1PB of material needed to fill it. Assuming a 1 Gbps Google Fiber connection gets us into the reasonable 15 year territory, but then again, if we’re assuming 15 years for 100x increase in storage, by that time, we’d have a 100PB drive to fill up.

You made an error in your calculations, Brian.

It would take just over 4 years on a 60Mbps connection.

About 3 months using a 1 Gbps Google Fiber connection.

Thanks for the correction. You’re quite right; I missed a factor of 60. Evidently I was figuring on the back of the wrong napkin.
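For anyone who wants to check the napkin, here is a minimal sketch. It assumes decimal units (1 PB = \(10^{15}\) bytes), a link that sustains its nominal rate around the clock, and no protocol overhead; `download_seconds` is a made-up helper name.

```python
PB = 10**15
SECONDS_PER_DAY = 24 * 3600
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY

def download_seconds(size_bytes, megabits_per_second):
    """Transfer time at a constant line rate, ignoring overhead."""
    bits = size_bytes * 8
    return bits / (megabits_per_second * 1e6)

t_60mbps = download_seconds(PB, 60)
t_1gbps = download_seconds(PB, 1000)

print(f"60 Mbps: {t_60mbps / SECONDS_PER_YEAR:.1f} years")  # 4.2 years
print(f"1 Gbps:  {t_1gbps / SECONDS_PER_DAY:.0f} days")     # 93 days
```

Multiplying the first result by the stray factor of 60 gives back the 253 years of the original estimate.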

The distinction between memory and storage is first and foremost one of persistence. It has nothing to do with file systems. There are in-memory file systems just as there is disk-backed virtual memory.

We use file systems not because of technical limitations of storage but to organize things that last beyond the lifetime of a single process. Abolishing file systems would be as bad an idea as abolishing memory allocators or virtual memory.

Slight counter argument to expecting movies to not grow significantly in size by the time petabyte drives come around - most HD movies today are 1080p@23FPS, weighing in at 0.5-1.0 GB per half hour of content. I would expect that movies will instead be 8k UHD @ 90 FPS and 3D (if it’s not a fad, halve the following numbers otherwise); weighing in at a much heftier 50-100 GB per half hour of content, supposing we can get a laser disc or replacement format that can hold half a terabyte of video data and stream it at a high enough rate to be usable. Or maybe we’ll see companies ship movies on dedicated disk drives. That’d be a sight.

Do keep in mind however, that such a movie would only be 250-500 Mb/s, easily fitting into even today’s high-end Gigabit internet connections. As your other readers pointed out before me - data will always expand to fit the available space.
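A quick sketch of the bitrate conversion behind those figures, assuming decimal gigabytes and a constant bitrate over the half hour; `bitrate_mbps` is a name chosen just for this example.

```python
def bitrate_mbps(gigabytes, minutes):
    """Average bitrate in megabits per second for a file of this size."""
    bits = gigabytes * 1e9 * 8
    return bits / (minutes * 60) / 1e6

for label, low_gb, high_gb in (("today", 0.5, 1.0), ("projected", 50, 100)):
    low = bitrate_mbps(low_gb, 30)
    high = bitrate_mbps(high_gb, 30)
    print(f"{label}: {low:.0f} to {high:.0f} Mb/s")
```

The projected range comes out slightly below the round numbers quoted above, but still comfortably within a gigabit link.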

An additional note about virtual reality: such applications generally emphasize interactivity, which raises the ratio between data stored and information viewed. Just having a choice of which room in a virtual building to walk into means that the storage requirements are much higher than panoramic streaming video. Throw in interactive and time-based events, and detailed replication of one building can easily exceed the petabyte range. I take the pessimistic view that this will never occur, and virtual reality will mainly be limited to simple scenes without high data and bandwidth requirements.

The problems of VR in terms of storage and streaming etc. are exactly the same as for a computer game viewed on a 2D monitor.

(Rendering and displaying two somewhat separate images, and doing so fast enough to make a smooth experience does require a faster CPU and graphics card compared to displaying the same image on a monitor, but only about as much as the difference in spec between what’s required to run this year’s games compared to one from a few years ago. Roughly.)

Fundamentally, there’s not any difference between a VR scene, and a first person computer game when it comes to the amount of saved data that needs to be loaded and streamed.

So, if we want to know how VR will change storage requirements we can just look at how the requirements of computer gaming have changed over time.

One reason to have an abstraction of a “file” is that “save” provides a point to which the user can “revert”. The Apple Newton PDA had automatic saving for everything, and I ended up losing data because I accidentally made an unwanted deletion. Ideally, revision history would allow playback of the creation of a file from start to finish, but even then “save” provides important things, such as a set of timestamped revisions deemed “important”, as well as a way to export the latest revision without the threat of disclosure of embarrassing earlier revisions.

Some of your concern about the boundary between permanent and temporary memory is exacerbated by the appearance of Intel’s 3D XPoint memory that is both dense and fast. It’s also expensive, but maybe that will be solved with time.

It’s interesting to look at a few other things. We went smoothly from 4 bits to 32, but the transition to 64 bits took a loooooong time, and there is no evidence that anyone even wants 128-bit bus widths, pointer sizes, and fixed-width integers or floats. Similarly, neither 8-bit nor 16-bit character encodings are sufficient, but we aren’t going to surpass the Unicode range of 0 to 0x10FFFF unless we join the Galactic Empire or go insane with emoji — there just aren’t that many distinct character repertoires out there.

The reason we don’t have petabyte HDDs is simply that the industry has run out of physics. The superparamagnetic limit finally caught up with available materials.

FYI, I believe that your reality line is actually much worse than depicted as, IIRC, there was a stagnant areal density period.

Both SMR and TDMR have helped and will help, but they are limited and more or less one-time upgrades. There are still a lot of problems with HAMR, as evidenced by Seagate’s production delays.

This is already the way it works on smartphones. There’s no difference between “launching” or “showing” an app, and in many cases you can only hide apps but the OS decides when they will be terminated. And many apps will automatically save anything you enter (or maybe they will just keep it in RAM but this is mostly fine since they keep running in the background).

So differences between RAM and long-term storage are pretty much hidden from the user. In fact, even differences between local long-term storage and cloud-based long-term storage are increasingly hidden away through automatic synchronization.

Actually there is precedent in this area. Most OS’es these days back up real RAM by using a raw paging space out on disk. So if you are using up all physical RAM then you are somewhat protected by using disk-based paging space. Although that is usually a last resort situation, with faster flash drives I could see smarter OS’es moving unused documents into a flash virtual RAM space, and keeping real RAM available for running programs. Flash I/O speeds would have to get about 2 orders of magnitude faster before this becomes practical however.

I would say that many companies nowadays are trying to slow the pace of technological innovation for private individuals in order to make more and more money from us. This is one of the reasons why there are no petabyte drives. All their new technologies target business-to-business clients, which is why they are not cheap. Does anyone here agree with me?

I like the article; the problem with your extrapolation is assuming that the growth would continue in a linear fashion. The growth wasn’t linear from the beginning. At best the curve appears to be more logarithmic or “S”-shaped. One would expect that, due to natural constraints such as physics, the growth would start tapering off and eventually reach a maximum.

I remember thinking my Commodore with 128k of RAM and a 5.25-inch floppy had plenty of storage. Look at how quickly that changed. The needs (and convenience) of faster storage and larger amounts will continue to drive growth in a variety of areas. I don’t foresee physical disks disappearing any faster than fossil fuel vehicles. Form factors may change. It may make more sense to make larger disks with larger/faster buses for data center usage. Ultimately, like most things in life, the evolution of storage will be cost driven.

The difference between storage and ram is that storage preserves data over a reboot and RAM will not. That has not changed so the distinction should not either.

Also. RAM will not wear out and NAND has a limited number of write cycles. You would not want to be writing excessively to it and your .

Also there is a difference between a program running in RAM and being in RAM. Don’t believe me? Go find a Linux live CD like GParted.

Since they are in RAM applications load much quicker but you can still start and stop them.

In the case of the live CD, a “disk” is created in RAM and then read from. Not all live CDs work that way; some keep some in RAM and some on the disk until accessed.

This leads me to my final point. The user experience of the HDD / RAM analogy and the workings of the underlying technology are unrelated by design and entirely independent of one another.

No, the industry did not run out of physics but before the peta hard drive, it’s going to be peta DVD. 1,000 of today’s 1TB HD on one single disk. All your existing movies, pictures, music and documents, that you can easily and cheaply duplicate for back-up… and distribute!

I can foresee street peddlers haggling you on street corners: Hey wanna buy all the books ever written, and all the music ever recorded for 10$ ?? Effective piracy will be able to reach every non-technical mom and pop and everyone in lands where bandwidth is not available. Porn will die, music industry maybe, and you’ll have the whole library of congress on a single disk with plenty room to spare. :-)

http://gizmodo.com/researchers-have-found-a-way-to-cram-1-000-terabytes-on-531549229

Oh, and for 4K, 1TB discs are on the way

http://www.extremetech.com/gaming/178166-1tb-per-disc-sony-and-panasonic-team-up-on-next-gen-blu-ray

It’s really great to know that hard drives are increasing in capacity year after year, but software’s demand for space is also increasing. So the ratio between storage capacity and software size is the same as it was many years ago.

A spot of history on this – the idea of Single Level Store dates back to Multics!

A couple of other disk drive parameters are not keeping up with even the slower growth curve of drive capacity.

- Input/output speed. Disk interface speed growth has lagged far, far behind capacity growth. The time required to fill (or empty) a spinning disk drive is growing dramatically.

- Error rates. We tend to think of drive error rates as eyes-glaze-over, don’t-need-to-worry numbers. While capacities have grown exponentially, error rates have not shrunk as quickly. On a 1 PB drive with roughly 10 petabits (10^16 bits), that’s a lot of broken bits, and a lot of work to detect and correct those broken bits. Add the proven high error rates on drive interfaces and communication channels where virtually no error correction takes place, and sites with petabytes and exabytes of data can’t trust what they’ve got. Hence the rise of filesystems with builtin checksums, like ZFS. Which slow down i/o speeds. Some hard tradeoffs there.

Both of those reasons (and others) are focusing R&D on flash drives instead of spinning disks. We’re just starting to break free from SATA i/o limits with new designs in laptops. And since flash drives started with some high error rate problems, designers have poured a lot of energy into solving them.

Some flash drive problems are only now showing up. Recent work says flash drive error rates go up with age, no matter how much or how little the drive is used. You might have a hard time reading files from 10 year old USB sticks.

Finally - I programmed Multics Single Level Store programs, on a mainframe with real core, drums, and disks. Made certain programs much easier to write, but we still pushed persistent data to files. The concept of a named file, independent of memory address, capable of being reused much later by other programs, or on other systems, is still quite useful.

I miss the old full height drives. I can’t remember the numbers now, but I did a calc that had a FH 5¼-inch drive, depending on how big the spindle was and how many platters, ya could get at least 500 TB on one

You say that 1TB is difficult to fill? Does anyone besides me remember when 1GB drives came out? Everyone (myself included) thought that you could not fill it in a lifetime! How utterly false that turned out to be.

Just because you don’t have that much data doesn’t mean everyone is that way. I have roughly 1TB of data (across multiple disks, see next part) on this computer alone, which does not include things that have been archived elsewhere and does not account for compression, among other things. And are you ignoring technology like RAID? Redundancy in computing is a very good thing (which of course is different from backups). You want redundancy? Well, you’re going to have to sacrifice some disk space, aren’t you? Different RAID levels have different ratios too. (Yes, I fully realise that it’s across multiple disks, but my point is that it isn’t as simple as you seem to be making it out to be; that is to say, it isn’t only about capacity versus size of data.)
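As a rough illustration of how the RAID ratios differ, the sketch below computes usable capacity for the standard levels, assuming identical disks and ignoring filesystem overhead and hot spares. The function name and the 4 × 4 TB example are hypothetical, not taken from the comment.

```python
def usable_tb(level, disks, disk_tb):
    """Usable capacity in TB for `disks` identical drives under a standard RAID level.

    Simplified: ignores filesystem overhead, hot spares, and nested layouts
    other than RAID 10.
    """
    if level == 0:        # striping, no redundancy
        minimum, data_disks = 2, disks
    elif level == 1:      # every disk is a mirror of the same data
        minimum, data_disks = 2, 1
    elif level == 5:      # one disk's worth of parity
        minimum, data_disks = 3, disks - 1
    elif level == 6:      # two disks' worth of parity
        minimum, data_disks = 4, disks - 2
    elif level == 10:     # striped mirrored pairs
        minimum, data_disks = 4, disks // 2
    else:
        raise ValueError(f"unsupported RAID level: {level}")
    if disks < minimum:
        raise ValueError(f"RAID {level} needs at least {minimum} disks")
    if level == 10 and disks % 2:
        raise ValueError("RAID 10 needs an even number of disks")
    return data_disks * disk_tb


# Hypothetical example: four 4 TB disks.
for level in (0, 1, 5, 6, 10):
    raw = 4 * 4
    usable = usable_tb(level, 4, 4)
    print(f"RAID {level}: {usable} TB usable of {raw} TB raw ({usable / raw:.0%})")
```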

Incidentally, my brother works in the studios and they absolutely have much more than a few terabytes (and other hardware). I don’t know how much but you can be sure that even if it was let’s say 100TB (would be surprised if it’s not way more) it will be constantly expanding. Meanwhile, the fact I’m not an audiophile (I have hearing problems) and the fact I’m not into videos (which shouldn’t be surprising given I have hearing problems) means my disk usage is relatively low yet I still (again on this computer alone) use around 1TB.

And you say that there will be a time when a computer won’t have any spinning parts? I suppose you’re right - a computer shut off and a dead computer won’t; but all others sort of need fans (especially that CPU). You can call that semantics if you like but it’s still true.

‘Surely no one can read a billion books or watch a million hours of movies.’

By that logic, digital libraries shouldn’t exist, and the same goes for videos. You might also remember that there is such a thing as speed reading and the like.

And as someone else stated, file systems are very important for organising the files but that’s not their only purpose.

One of my first thoughts of why NOT to rely only on e.g. SSD: cost to capacity ratio. Then I saw the paper you cite also mentions this.

In short: you are underestimating people (and the future) by a huge margin. You’re making assumptions on what humans can (supposedly) not do but those kinds of assumptions only slow things down even more (and there are many things that hinder advances in technology and everything else). It’s more or less saying [you] can’t go further. Maybe 1TB is enough for you but it isn’t nearly enough for many people.

I have virtually filled a 1 TB Seagate external disk with pirated media.

Re: mapping drive space to memory

Aren’t you describing IBM’s AS/400 architecture?

A couple of years ago I also thought that the petabyte drive was just around the corner for my PC. But no, the growth of hard disks has surely faded out. I want a petabyte drive. I would love to have unlimited space and to open files directly at all times. I would then stop compressing files and use uncompressed files all the time. Also, if the computer were infinitely fast (haha, I know that’s impossible), I could save a lot of time exporting huge files. I work as a TV producer and I need an absurd amount of storage. Think about it: a 1-hour movie in uncompressed Full HD. I just did an experiment, exporting a 1920×1080, square-pixel, PAL, 25 frames per second Full HD file in the most lossless format I could find, the ARRI DPX 2K 1920×1080 format. A 1-hour file in that format is 680.4 GB. I know that I have 2000 hours of masters. In that format they would come to an estimated 1360.8 TB. And that is only the masters. I also have camera footage amounting to 4 times the size of the masters. That makes around 6804 TB in total if all my files were in the ARRI codec. That’s 6.8 petabytes. There you go.

So I need 7 x 1 petabyte disks to store that. OK, this is a thought experiment, but anyhow I would love to have it like that.
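The arithmetic in this comment can be checked with a short sketch. The inputs (680.4 GB per hour, 2000 hours of masters, camera footage at 4 times the masters) are the commenter's own figures; the variable names and the use of decimal units (1 TB = 1000 GB, 1 PB = 1000 TB) are assumptions.

```python
import math

# Figures quoted in the comment above.
GB_PER_HOUR = 680.4        # one hour of 1920x1080, 25 fps DPX, as measured by the commenter
MASTER_HOURS = 2000        # hours of finished masters
FOOTAGE_MULTIPLIER = 4     # camera footage is about 4x the size of the masters

# Assumption: decimal units, 1 TB = 1000 GB and 1 PB = 1000 TB.
masters_tb = GB_PER_HOUR * MASTER_HOURS / 1000
footage_tb = masters_tb * FOOTAGE_MULTIPLIER
total_tb = masters_tb + footage_tb
total_pb = total_tb / 1000
drives_needed = math.ceil(total_pb)   # whole 1 PB drives

print(f"masters: {masters_tb:.1f} TB")
print(f"footage: {footage_tb:.1f} TB")
print(f"total:   {total_tb:.1f} TB = {total_pb:.1f} PB")
print(f"1 PB drives needed: {drives_needed}")
```

This reproduces the 1360.8 TB of masters, the 6804 TB total, and the seven 1 PB drives mentioned in the comment.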