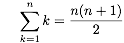

The new issue of American Scientist is up on the Web, and the print edition will soon be delighting readers everywhere. My Computing Science column in this issue of the magazine revisits a famous bit of mathematical folklore—the story about young Carl Friedrich Gauss confounding his boorish schoolmaster. In one popular version of the story, the teacher, whose name was Büttner, instructed his class to sum all the whole numbers from 1 through 100. Büttner expected an hour of peace and quiet while the pupils labored over this task, but Gauss almost instantly chalked a number on his slate: 5,050. Gauss had somehow derived the formula n(n+1)/2 for the sum of the integers from 1 through n.

Let us transpose this fable to a modern classroom, where laptop computers have replaced slates. The enlightened 21st-century Büttner would never dream of giving out such a dreary assignment as adding up a long series of numbers, but perhaps he might propose the following exercise: Write a program that for any given integer n returns the sum of the first n integers. The aim here is not just to get the arithmetic right but to produce the “best” program. And thus the question becomes: What does “best” mean in this context? In my column, I suggest that the dull, brute-force approach—just adding up the elements of the series, one by one—might actually be preferable to a program based on Gauss’s ingenious formula. My rationale for this assertion is that the straightforward program would be easier to get right and easier to generalize. But is it true?

Friends have been bugging me to give up my Lispish ways and do some programming in Python, so I’ll use that language for the examples given here. Here’s a Python version of Gauss’s trick:

def gauss(n):

return n*(n+1)/2

Not much to it, I have to admit. And the only way I can imagine getting it wrong would be to leave out the parentheses, so that the function would return n2 + 1/2. (In Python, for what it’s worth, “1/2” is equal to 0, so the actual returned value would be simply n2.)

The brute-force summation could also be written as a Python two-liner:

def brute(n):

return sum(range(1,n+1))

However, relying on Python’s built-in sum function is not quite fair here, in my view; it assumes the solution to the problem, without telling us anything about what’s going on behind the scenes. For all we know, sum could implement the n(n+1)/2 algorithm, in which case it would not be brutish at all. A more-perspicuous version of the brute function might look like this:

def brute(n):

total = 0

for k in range(1,n+1):

total += k

return total

Now it’s clear (isn’t it?) that we are looping through a series of numbers, adding each element of the series to a running total.

Which of these functions, gauss or brute, is the “better” program? Which one would deserve higher marks in Büttner’s class? As far as I can tell, they both return correct results for every positive integer n—at least until the memory capacity of the computer is exceeded. But the gauss version has an important advantage in efficiency: It is an O(1) program, meaning that the running time is essentially constant, regardless of the value of n, whereas the brute program is O(n), with running time proportional to n. The difference is undetectable for Büttner’s original problem of summing the numbers from 1 to 100, but if you’re interested in larger values of n, I would strongly recommend gauss(10**10) over brute(10**10). So score one for gauss.

Efficiency isn’t everything, however. My initial thought was that the brute program would be simpler or more straightforward—it would translate the definition of the problem directly into code—and thus would be the most reliable way to avoid errors. Now I’m not so sure. For one thing, I have to confess that I got the brute program wrong on my first try. In the for loop I wrote range(1,n), believing that this Python expression would return a list of the integers from 1 through n inclusive; in fact the list extends from 1 through n—1. I sincerely hope you will agree that my expectation in this case is perfectly sensible, and the language is doing something utterly goofy, but in the end that’s not the point. The uncomfortable fact is, regardless of programming-language quirks, off-by-one errors are a hazard whenever and however you write a loop.

Eschewing Python’s range feature doesn’t really solve the problem. Here is a version of brute with a more-explicit specification of the loop structure:

def brute(n):

k = 1

total = 0

while k <= n:

total += k

k += 1

return total

I’m going to assert that this is a correct program, but to verify my claim you may need to think carefully about the loop-termination condition (perhaps it should be k < n?) as well as the order of the two statements within the while loop. If anything, this version of the program offers more opportunities to go wrong.

All of these difficulties suggest that the gauss program may well be the simpler and more-reliable one after all, but I am going to persist in trying to defend the brute-force approach. For starters, how do we know that the gauss program is correct? It’s easy enough to verify that the Python code n*(n+1)/2 matches the mathematical expression n(n+1)/2, but how do we know the mathematics is correct—that the expression actually represents the sum of the natural numbers up to n? There are several different conjectures about how the schoolboy Gauss might have discovered the identity:

.

.

(For a discussion of these speculations, see my column; for the texts of 100+ versions of the story, see this HTML document.) As it happens, there’s no doubt the formula is correct; an inductive proof is not hard to follow. Nevertheless, when you consider the derivation of the formula as part of the process of writing the program, the gauss procedure is no longer quite so quick and easy.

The outlook gets even cloudier when you consider variations and generalizations. Suppose Büttner assigns a followup problem: Write a program that for any positive integer n computes the sum of the squares of the integers from 1 through n. For all the dullards who wrote something like the brute routine for the first exercise, this will be trivial modification of the earlier code:

def brute_squares(n):

total = 0

for k in range(1,n+1):

total += k*k

return total

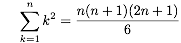

But what will Gauss do? Maybe he knows (or can derive on the spot) the identity:

,

,

which leads immediately to the Python function:

def gauss_squares(n):

return n*(n+1)*((2*n)+1)/6.

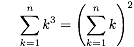

So far so good. But then Büttner can ask for the sum of the cubes of the first n numbers. Again, the looping, iterative program is easy to modify; it’s just a matter of replacing k*k with k*k*k or k**3 or pow(k,3). Young Gauss has a harder task; he needs yet another flash of insight to come up with the nonobvious identity:

,

,

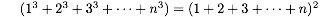

or in other words:

.

.

The corresponding Python program,

def gauss_cubes(n):

return gauss(n)**2

is wonderfully clear and succinct, but I think most of us would agree that the mathematical thought behind it is nontrivial.

With Carl Friedrich Gauss in the classroom, this sort of bantering competition between closed-form formulas and iterative computations could go on for some time, but I think Büttner could finally bring it to a close with this assignment: Write a program that accepts two arguments, namely a positive integer n and a function f that accepts an integer argument and returns a real result; the program must return the sum of f(1) through f(n). For the brutes among us, even this generalization of the original task is not much of a challenge. The program might look like this:

def brute_f(n,f):

total = 0

for k in range(1,n+1):

total += f(k)

return total

Invoking this program as brute_f(100,log) would calculate the sum of the natural logarithms of the first 100 integers, and brute_f(100,lambda(x):x**x) would yield the sum 11 + 22 + 33 + … + 100100. Perhaps Gauss could come up with a gauss_f program that would compute all such results in closed form—but I sure can’t.

At the end of the day, what’s the verdict on these two approaches to the summation process? I have to admit that my original intuition—my sense that the iterative program would be easier to write without errors—does not hold up very well to the test of experiment. But I am stubborn enough to go on defending the brute programs anyway. My new excuse is that they capture the abstraction, or the pattern, of summing a series in a way that the cleverer gauss programs do not. With explicit loops, you can see just what’s being computed; the formulas embedded in the various gauss programs seem opaque and mysterious by comparison. But maybe I’m just sore because I’m not sharp enough to invent those Gaussian formulas; in Büttner’s class, I know I’d be one of the other kids, the ones who add up all those numbers the painstaking and tedious way.

Whoa there. The Gauss program is not O(1), because multiplication isn’t. The standard, grade school multiplication algorithm is O(n^2), and so is your brute force algorithm. Which doesn’t mean the former wont run a lot more quickly in practice.

But there are other multiplication algorithms that are better than O(n^2). Karatsuba multiplication is O(n^(ln3/ln2)). (About O(n^1.58)) And the great thing is, Python uses Karatsuba multiplication automatically for large enough numbers. (When there are less than about 100 digits in the numbers multiplied, the grade school algorithm is faster.)

To the previous commenter: please get a clue. The standard, grade school multiplication algorithm is quadratic in the number of digits, not in n.

With that out of the way:

“For all we know, sum could implement the n(n+1)/2 algorithm”

That’s not possible, because python doesn’t have lazy evaluation and therefore sum cannot realize that it is being called on a range. All it sees is a list. I’m just picking nits here, but also wondering if you wrote that because of your Lisp background :)

Also, isn’t “reduce(lambda x, y: x+y, [f(x) for x in range(1,n+1)])” more pythonic than a for loop?

Anways, nice article.

To follow up on Arvind’s comment: that is to say, the gauss program runs in O((log n)^2) steps, and the brute program runs in AT LEAST n^2 steps. An exponential difference…