Newton and Notwen

by Brian Hayes

Published 7 February 2006

This is another loose end from my new column on reversible computing (now available online; also see the earlier bit-player item on swapping).

In the column I mention Henry G. Baker’s idea of reversing Newton’s method for approximating the square root of a number. Undoing the extraction of square roots doesn’t seem like much of a trick: After all, you can just multiply. But that’s not good enough for a reversible computer, which has to be able to back up through all the stages of a calculation, step by step.

Baker wrote, in a 1992 paper:

The Newton iterative square root algorithm x[i+1]=(x[i]+N/x[i])/2 exemplifies a large class of computations in which the output appears to be independent of the input, since the Newton iteration produces the square root independent of the initial approximation. Nevertheless, if this algorithm is run a fixed number of iterations on infinite precision rational numbers, and if x[0]>=sqrt(N), it is reversible! Newton’s iteration essentially encodes the initial conditions into lower and lower order bits, until these bits become insignificant. It is the erasure of these low order bits through rounding and/or truncation that dissipates the information needed for reversibility. In a sense, Newton’s iteration converges only because Newton’s inverted iteration produces chaos.

I first read this passage at least 10 years ago, and it has stuck with me ever since. Exploring the zany idea of a reversible algorithm whose output seems to be independent of its input was one of the reasons I wanted to write about reversible computing in the first place. Nevertheless, when my column went to press a couple of weeks ago, I still had not looked closely at the inverse-square-root algorithm; I was simply reporting Baker’s comments. This is a situation that always leaves me feeling nervous. It’s not that I worried Baker might be wrong; he’s a reliable source (moreso than I am). It’s that my own understanding of the inverted algorithm was much too shallow, and if anyone asked me to explain it in greater detail, I would have been stumped. In particular, why does Baker mention that reversibility is guaranteed only when the initial guess x0 is greater than or equal to the square root of N? I had only the vaguest notion of the answer. But now, after a pleasant weekend immersed in algebra and Lisp, I have a somewhat clearer sense of what’s going on.

To reiterate, Newton’s method is based on this iterative computation:

In other words, given a guess xi about the value of the square root of n, we take the average of xi and n/xi, which becomes the new guess xi+1 to be fed back into the same formula. Each stage of the iteration yields a closer estimate of the square root—unless of course the estimate is already exact, in which case x is equal to n/x.

Here’s an example of how the process goes. Let’s take the square root of n=2, starting with an initial guess of x0=2. Plugging these values into the formula yields (2 + 2/2)/2, or 3/2, as the next iterate, x1. Then applying the same method again yields a value of x2=17/12. Continuing in the same way, we get x3=577/408 and x4=665857/470832. The next iterate, x5=886731088897/627013566048, has a decimal value of 1.4142135623730951, which is correct to the number of places given.

In a reversible computer, we need the capacity to turn this process around, to work backward through the sequence of approximations—to convert the Newton iteration into the Notwen iteration. The first task is to define the inverse transformation mathematically. To avoid tripping over a thicket of subscripts, let’s rewrite the equation as:

Then, multiplying both sides by 2x and rearranging yields this quadratic equation:

The forward Newton iteration is what you get when you solve this equation for y. For the Notwen iteration we have to solve it for x instead. The answer comes straight out of the quadratic formula:

But this result is rather troubling. At each step in the iteration we will have to take the square root of 4y2–4n, a process that could yield an irrational value, or even an imaginary one. Can this algorithm really get us back to where we came from? Let’s try one of the examples encountered above, with n=2 and y=17/12. Then y2=289/144, and we’re looking for:

Miraculously, the value under the radical reduces to 1/36, and so its square root is 1/6. Completing the rest of the calculation recovers the previous iterate x=3/2. Of course there’s actually nothing miraculous about this. Whenever the Notwen method is used to invert a Newton calculation, it will turn out that the quantity under the radical is a perfect square. As Baker points out, all the information from the original Newton iteration has been encoded in those unwieldy fractions (or in the low-order digits of their decimal expansions). We’re exploiting all of that information, like a trail of breadcrumbs, to find our way back to the starting point of the computation.

Here’s a trace of a program descending six levels into the Newton iteration and then climbing back out again through the corresponding Notwen iterations.

x0 = 2

x1 = 3/2

x2 = 17/12

x3 = 577/408

x4 = 665857/470832

x5 = 886731088897/627013566048

x6 = 1572584048032918633353217/1111984844349868137938112

x5 = 886731088897/627013566048

x4 = 665857/470832

x3 = 577/408

x2 = 17/12

x1 = 3/2

x0 = 2

Note that it’s essential to write this program in a language that calculates with exact rational numbers. Rounding or truncating would be disastrous.

There’s just one more loose thread. Why does Baker warn us to start the Newton algorithm with an x0 greater than the square root of n? The Newton iteration converges on the square root from any starting guess (except x=0), so why shouldn’t the Notwen algorithm offer the same versatility?

Let’s try it. Here’s a trace of the Newton program’s progress toward the square root of 2 from an initial guess of 1/2, followed by the Notwen program’s attempt to recover that guess:

x0 = 1/2

x1 = 9/4

x2 = 113/72

x3 = 23137/16272

x4 = 1064876737/752970528

x5 = 2267891697076964737/1603641597827614272

x4 = 1064876737/752970528

x3 = 23137/16272

x2 = 113/72

x1 = 9/4

x0 = 4

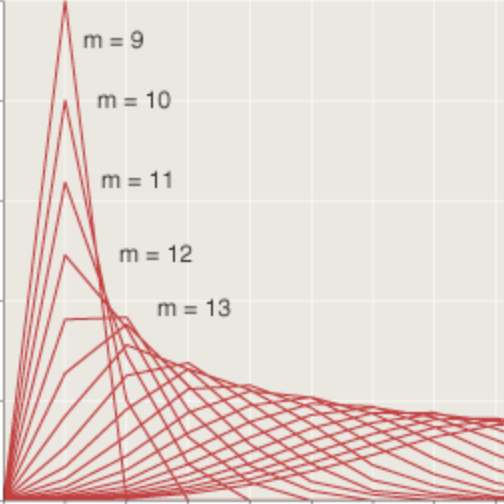

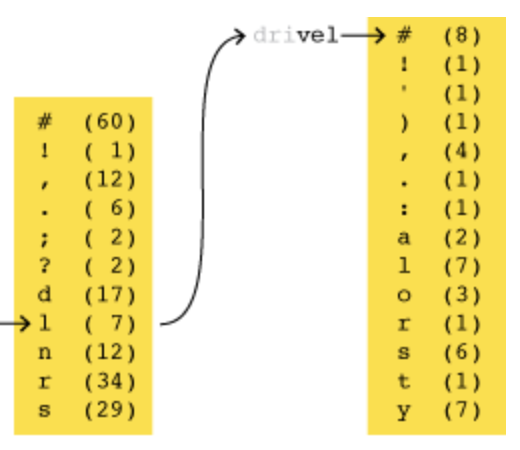

Everything goes smoothly until the very last step, when Notwen suddenly goes off the rails; applied to 9/4, it returns 4 instead of 1/2. How come? This is not just some minor bug or oversight in the program. The problem is that every quadratic equation has two roots—as indicated by the plus-or-minus symbol in the quadratic formula—and we have arbitrarily been choosing just one of those solutions, namely the larger one. That choice gives correct results as long as x0 is greater than the square root of n, but not otherwise. To apply the method more generally, we would need some scheme for keeping track of which root to choose on each iteration. The same is true of the “forward” algorithm for extracting square roots: After all, every number has two square roots, and the Newton method will converge on the negative one if given a negative initial guess.

With these bifurcations at every step, a square-root program becomes a lot more complicated than it seems on the surface, whether you run it forward or backward. That really shouldn’t come as a surprise. Algorithms only a little more elaborate—they derive from cubic equations rather than quadratic ones—underly the famous Julia and Mandelbrot sets, the very emblems and icons of complexity.

Afterthought: To reverse the Newton algorithm for extracting a square root, we have to extract a square root. Aha! We can use the Newton algorithm to calculate its own inverse! Lamentably, it won’t work. The one thing Newton’s method is no good at is finding the exact square root of a perfect square—and that’s just what we need in this case. The successive approximations of the Newton algorithm converge very quickly on the correct value, but they never actually get there.

Afterafterthought. I have discovered (or rediscovered, or remembered—it’s hard to say which) that Baker also discussed the Newton square-root algorithm in several installments of his “Garbage In/Garbage Out” column in ACM SIGPLAN Notices (the newsletter of the Association for Computing Machinery’s Special Interest Group on Programming Languages). Here are the references:

- Baker, Henry G. 1998. Garbage In/Garbage Out: You could learn a lot from a quadratic: overloading considered harmful. ACM SIGPLAN Notices 33(1):30–38.

- Baker, Henry G. 1998. Garbage In/Garbage Out: You could learn a lot from a quadratic: II: digital dentistry. ACM SIGPLAN Notices 33(2):34–39.

- Baker, Henry G. 1998. Garbage In/Garbage Out: March Möbius madness with a polynomial PostScript. ACM SIGPLAN Notices 33(3):24–35.

- Baker, Henry G. 1998. Garbage In/Garbage Out: You could learn a lot from a quadratic: Newton squares the circle. ACM SIGPLAN Notices 33(6):27–31.

Finally, while searching for these titles, I have also “discovered” yet another Baker article that has much to say about reversibility in computing systems:

- Baker, Henry G. 1994. Thermodynamics and garbage collection. ACM SIGPLAN Notices 29(4):58–63.

Publication history

First publication: 7 February 2006

Converted to Eleventy framework: 22 April 2025