Meet the Julians

by Brian Hayes

Published 2 July 2015

JuliaCon, the annual gathering of the community focused on the Julia programming language, convened last week at MIT. I hung out there for a couple of days, learned a lot, and had a great time. I want to report back with a few words about the Julia language and a few more about the Julia community.

It’s remarkable that after 70 years of programming language development, we’re still busily exploring all the nooks and crannies of the design space.  The last time I looked at the numbers, there were at least 2,500 programming languages, and maybe 8,500. But it seems there’s always room for one more. The slide at left (from a talk by David Beach of Numerica) sums up the case for Julia: We need something easier than C++, faster than Python, freer than Matlab, and newer than Fortran. Needless to say, the consensus at this meeting was that Julia is the answer.

Where does Julia fit in among all those older languages? The surface syntax of Julia code looks vaguely Pythonic, turning its back on the fussy punctuation of the C family. Other telltale traits suggest a heritage in Fortran and Matlab; for example, arrays are indexed starting with 1 rather than 0, and they are stored in column-major order. And there’s a strong suite of tools for working with matrices and other elements of linear algebra, appealing to numericists. Looking a little deeper, some of the most distinctive features of Julia have no strong connection with any of the languages mentioned in Beach’s slide. In particular, Julia relies heavily on generic functions, which came out of the Lisp world. (Roughly speaking, a generic function is a collection of methods, like the methods of an object in Smalltalk, but without the object.)
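To make the idea of a generic function concrete, here is a minimal sketch. It assumes Julia 1.x syntax (which postdates this article), and the types Shape, Circle, and Rect and the functions area and overlaps are hypothetical names invented for illustration; none of them come from the conference talks.

```julia
# Hypothetical types, used only to illustrate generic functions.
abstract type Shape end

struct Circle <: Shape
    r::Float64
end

struct Rect <: Shape
    w::Float64
    h::Float64
end

# One generic function, `area`, with one method per argument type.
# The methods live outside the types; no object "owns" them.
area(s::Circle) = pi * s.r^2
area(s::Rect) = s.w * s.h

# Method selection looks at the types of all arguments, not just the first.
overlaps(a::Circle, b::Circle) = "circle-circle test"
overlaps(a::Shape, b::Shape) = "generic fallback test"

println(area(Rect(2.0, 3.0)))                    # 6.0
println(overlaps(Circle(1.0), Circle(2.0)))      # circle-circle test
println(overlaps(Circle(1.0), Rect(1.0, 1.0)))   # generic fallback test
```

The last two calls show the difference from single-receiver method lookup: the more specific method is chosen only when both arguments are circles.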

Perhaps a snippet of code is a better way to describe the language than all these comparisons. Here’s a fibonacci function:

    function fib(n)
        a = b = BigInt(1)
        for i in 1:n
            a, b = b, a+b
        end
        return a
    end

Note the syntax of the for loop, which is similar to Python’s for i in range(n):, and very different from C’s for (int i=0; i<n; i++). But Julia dispenses with Python’s colons, instead marking the end of a code block with the keyword end. And indentation is strictly for human readers; it doesn’t determine program meaning, as it does in Python.

For a language that emphasizes matrix operations, maybe this version of the fibonacci function would be considered more idiomatic:

    function fibmat(n)
        a = BigInt[1 1; 1 0]
        return (a^n)[1, 2]
    end

What’s happening here, in case it’s not obvious, is that we’re taking the nth power of the matrix \[\begin{bmatrix}1& 1\\1& 0\end{bmatrix}\] and returning the upper right element of the result (row 1, column 2), which is equal to the nth fibonacci number. The matrix power is symmetric, so the lower left element holds the same value. The matrix-power version is about 27 times faster in the run shown here.

    @time fib(10000)
    elapsed time: 0.007243102 seconds (4859088 bytes allocated)

    @time fibmat(10000)
    elapsed time: 0.000265076 seconds (43608 bytes allocated)
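The identity behind fibmat can be written out explicitly. The indexing convention \(F_0 = 0,\ F_1 = F_2 = 1\) is an assumption here; the article does not state one.

\[
\begin{bmatrix}1 & 1\\ 1 & 0\end{bmatrix}^{n}
=
\begin{bmatrix}F_{n+1} & F_{n}\\ F_{n} & F_{n-1}\end{bmatrix},
\qquad
\begin{bmatrix}F_{n+1} & F_{n}\\ F_{n} & F_{n-1}\end{bmatrix}
\begin{bmatrix}1 & 1\\ 1 & 0\end{bmatrix}
=
\begin{bmatrix}F_{n+2} & F_{n+1}\\ F_{n+1} & F_{n}\end{bmatrix}.
\]

Under that convention fibmat(n) returns \(F_n\), while the loop version as written returns \(F_{n+1}\), so the two functions are offset by one index. The sketch below cross-checks them and also spells out one way an integer matrix power can be computed with only about log2(n) multiplications, by repeated squaring. The helpers matpow and fibsq are hypothetical names, not part of the article or of Julia's library, and the code assumes Julia 1.x; it is not a claim about how Julia implements ^ internally.

```julia
using LinearAlgebra  # stdlib; loaded explicitly for matrix * and ^

# Hypothetical helper: integer power of a BigInt matrix by repeated
# squaring, which needs O(log n) matrix multiplications.
function matpow(m::Matrix{BigInt}, n::Integer)
    result = BigInt[1 0; 0 1]   # 2x2 identity matrix
    base = copy(m)
    while n > 0
        if isodd(n)
            result = result * base
        end
        base = base * base
        n >>= 1
    end
    return result
end

# The article's two functions, reproduced for comparison.
function fib(n)
    a = b = BigInt(1)
    for i in 1:n
        a, b = b, a + b
    end
    return a
end

fibmat(n) = (BigInt[1 1; 1 0]^n)[1, 2]

# Hypothetical variant that uses the explicit squaring loop above.
fibsq(n) = matpow(BigInt[1 1; 1 0], n)[1, 2]

println(fibsq(10))    # 55
println(fibmat(10))   # 55
println(fib(9))       # 55, because the loop version is shifted by one index
```

For the timing comparison the one-step offset hardly matters, since fib(10000) and fibmat(10000) compute adjacent fibonacci numbers of nearly the same size.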

Julia’s base language has quite a rich assortment of built-in functions, but there are also 600+ registered packages that extend the language in ways large and small, as well as a package manager to automate their installation. The entire Julia ecosystem is open source and managed through GitHub.

When it comes to programming environments, Julia offers something for everybody. You can use a traditional edit-compile-run cycle; there’s a REPL that runs in a terminal window; and there’s a lightweight IDE called Juno. But my favorite is the IPython/Jupyter notebook interface, which works just as smoothly for Julia as it does for Python. (With a cloud service called JuliaBox, you can run Julia in a browser window without installing anything.)

I’ve been following the Julia movement for a couple of years, but last week’s meeting was my first exposure to the community of Julia developers. Immediate impression: Youth! It’s not just that I was the oldest person in the room; I’m used to that. It’s how much older. Keno Fischer is now an undergrad at Harvard, but he was still in high school when he wrote the Julia REPL. Zachary Yedidia, who demoed an amazing package for physics-based simulations and animations, has not yet finished high school. Several other speakers were grad students. Even the suits in attendance—a couple of hedge fund managers whose firm helped fund the event—were in jeans with shirt tails untucked.

Four of the ringleaders of the Julia movement. From left: Stefan Karpinski, Viral B. Shah, Jeff Bezanson, Keno Fischer.

Second observation: These kids are having fun! They have a project they believe in; they’re zealous and enthusiastic; they’re talented enough to build whatever they want and make it work. And the world is paying attention. Everybody gets to be a superhero.

By now we’re well into the second generation of the free software movement, and although the underlying principles haven’t really changed, the vibe is different. Early on, when GNU was getting started, and then Linux, and projects like OpenOffice, the primary goal was access to source code, so that you could know what a program was doing, fix it if it broke, customize it to meet your needs, and take it with you when you moved to new hardware. Within the open-source community, that much is taken for granted now, but serious hackers want more. The game is not just to control your own copy of a program but to earn influence over the direction of the project as a whole. To put it in GitHub terminology, it’s not enough to be able to clone or fork the repo, and thereby get a private copy; you want the owners of the repo to accept your pull requests, and merge your own work into the main branch of development.

GitHub itself may have a lot to do with the emergence of this mode of collective work. It puts everything out in public—not just the code but also discussions among programmers and a detailed record of who did what. And it provides a simple mechanism for anyone to propose an addition or improvement. Earlier open-source projects tended to put a little more friction into the process of becoming a contributor.

In any case, I am fascinated by the social structure of the communities that form around certain GitHub projects. There’s a delicate balance between collaboration (everybody wants to advance the common cause) and competition (everyone wants to move up the list of contributors, ranked by number of commits to the code base). Maintaining that balance is also a delicate task. The health of the enterprise depends on attracting brilliant and creative people, and persuading them to freely contribute their work. But brilliant creative people bring ideas and agendas of their own.

The kind of exuberance I witnessed at JuliaCon last week can’t last forever. That’s sad, but there’s no helping it. One reason we have those 2,500 (or 8,500) programming languages is that it’s a lot more fun to invent a new one than it is to maintain a mature one. Julia is still six tenths of a version number short of 1.0, with lots of new territory to explore; I plan to enjoy it while I can.

Quick notes on a few of the talks at the conference.

Zenna Tavares described sigma.jl, a Julia package for probabilistic programming—another hot topic I’m trying to catch up with. Probabilistic programming languages try to automate the process of statistical modeling and inference, which means they need to build things like Markov chain Monte Carlo solvers into the infrastructure of the programming language. Tavares’s language also has a SAT solver built in.

Chiyuan Zhang gave us mocha.jl, a deep-learning/neural-network package inspired by the C++ framework Caffe. Watching the demo, I had the feeling I might actually be able to set up my own multilayer neural network on my kitchen table, but I haven’t put that feeling to the test yet.

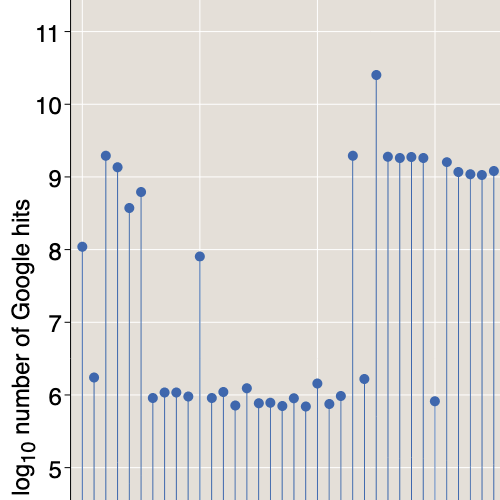

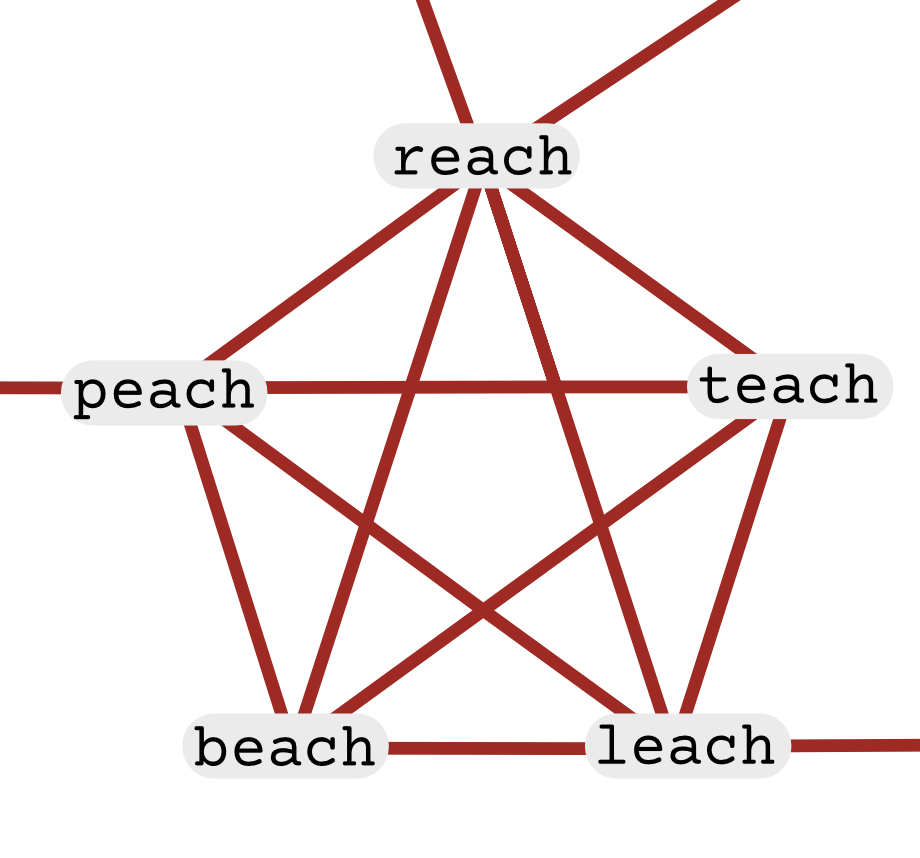

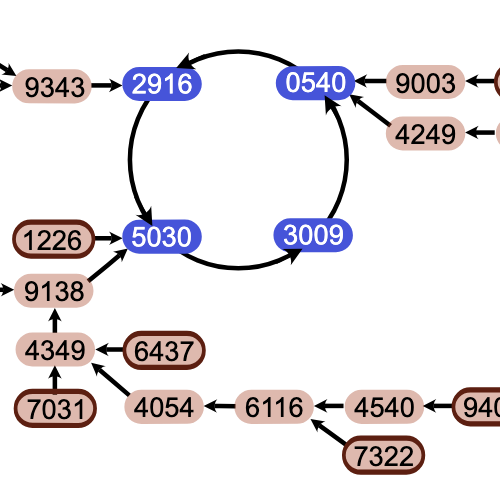

Finally, because of parallel sessions I missed the first half of Pontus Stenetorp’s talk on “Suitably Naming a Child with Multiple Nationalities using Julia.” I got there just in time for the big unveiling. I was sure the chosen name would turn out to be “Julia.” But it turns out the top three names for the offspring of Swedish and Japanese parents are:

Stenetorp wants to extend his algorithm to more language pairs. And he also needs to tell his spouse about the results of this work.

Responses from readers:

Please note: The bit-player website is no longer equipped to accept and publish comments from readers, but the author is still eager to hear from you. Send comments, criticism, compliments, or corrections to brian@bit-player.org.

Publication history

First publication: 2 July 2015

Converted to Eleventy framework: 22 April 2025

Julians is passe. The hip new thing is Gregorians.

At first glance, it looks like the faster runtime of fibmat is a result of the fact that the algorithm is logarithmic in n, whereas fib is linear. But while this is surely part of the story, the high memory requirements in fib seem to imply a more subtle difference. Could part of the slowness of fib arise from inefficient garbage collection in the loop?

I would bet on that log n algorithm, but there are certainly plenty of other possible factors, and I don’t know enough to estimate their importance.

The 5 megabyte memory allocation in the iterative version is probably not enough to tickle the garbage collector, but it does indicate that all the successive fibonacci numbers are being allocated on the heap. (The sum of the decimal digit counts for fib(1) .. fib(10000) is 10,454,023.) One might wish for an in-place algorithm that would reuse a single block of memory, but that’s a lot to ask with bignums.

I wondered if the cute tuple shuffle, a, b = b, a+b, might be slowing things down. So I tried rewriting it with an explicit temporary variable for the swap:

    for i = 1:n
        tmp = a
        a = b
        b += tmp
    end

It made no difference. Memory allocation was identical, and timings were very similar.

In any case, I wasn’t trying to benchmark these algorithms. I’m too much of a beginner in the language to do it right. I just thought it worth noting that this language created by a bunch of matrix mavens implements matrix operations in very spiffy style.