Sleight of Handle

by Brian Hayes

Published 23 June 2008

As I mentioned, the American Scientist web site is undergoing an overhaul. One aspect of the transition that’s still in transition is redirecting http requests so that old links and bookmarks will retrieve the correct document on the new site. I wish I could snap my fingers and fix this problem globally, but that seems to be beyond my power. Over the weekend, however, I decided I could at least try to reduce the entropy of my own little corner of the WWW by repairing all the links at bit-player.org that point to American Scientist articles. It wasn’t quite as much fun as I had expected.

The first problem is that the old links are completely opaque. They look like this:

http://www.americanscientist.org/AssetDetail/assetid/48550

The identifier “assetid/48550” offers no clue to what it is identifying. It could be anything the magazine has published in the past 10 years. The second problem is that you can’t just follow the link to find out where it leads. None of these links are working anymore; that’s the whole point.

Does this situation suggest a certain lack of foresight on my part? Oh well. I’ll just sit here in the corner until the paint on the floor dries.

For links that point to my own columns, I’m generally able to infer from their context what the proper target is. And, if I get stuck, the Wayback Machine is there to rescue me (thank you Brewster Kahle). Still, reviving a batch of dead links is a dreary exercise. I find a link; I figure out which column it refers to; I run a search on the new web site to find the updated URL; I record the mapping from old link to new, so that this information won’t be lost; I correct the link in the bit-player posting. Wash, rinse, repeat.
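Some of that bookkeeping can be automated, though not the detective work. Below is a minimal Python sketch. It assumes the old-to-new mapping is kept in a dictionary (`LINK_MAP`, a hypothetical name) and that every old link has the `AssetDetail/assetid/NNNNN` form shown above. The new-site URL in the table is a placeholder, not a real address. The function rewrites the links it knows about and returns the ones that still need to be identified by hand.

```python
import re

# Hypothetical old-to-new table, filled in by hand one column at a time.
# The target URL is a placeholder, not a real address on the new site.
LINK_MAP = {
    "http://www.americanscientist.org/AssetDetail/assetid/48550":
        "https://www.example.org/new-location-of-column",
}

# Matches the opaque old-style links (assumed to all share this form).
OLD_LINK = re.compile(r"http://www\.americanscientist\.org/AssetDetail/assetid/\d+")


def rewrite_links(text, link_map):
    """Replace every mapped old link; return the new text and the unmapped links."""
    unmapped = []

    def substitute(match):
        old = match.group(0)
        if old in link_map:
            return link_map[old]
        unmapped.append(old)  # still needs manual detective work
        return old

    return OLD_LINK.sub(substitute, text), unmapped


post = ('<a href="http://www.americanscientist.org/AssetDetail/assetid/48550">a column</a> '
        '<a href="http://www.americanscientist.org/AssetDetail/assetid/15568">another</a>')
fixed, todo = rewrite_links(post, LINK_MAP)
print(todo)  # ['http://www.americanscientist.org/AssetDetail/assetid/15568']
```

The returned list is the to-do list; the slow human step of deciding which column an opaque asset number refers to remains.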

After fixing only about a dozen links in this way, I came to a moment of self-knowledge: During my remaining years on this planet, however few or many they may be, I never want to go through this process again. Which raises the question: What’s the best way to create permanent pointers to objects that may not stay where you put them?

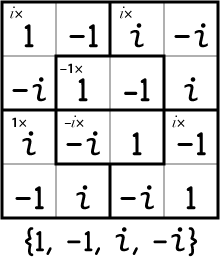

The answer to this question has been known for decades. What’s needed is double indirection: a pointer to a pointer. Also known as a handle.

- Zeroth-order indirection: I send you the document itself.

- First-order indirection: I send you an address where you can find the document.

- Second-order indirection: I send you an address where you can find the address of the document.

At order zero—with no indirection at all—the document is beyond my control from the moment I send it. With a single level of indirection, I can update or correct the document and you’ll see the new version, but if I move it, you’ll lose access. With double indirection I can change either the content of the document or its location whenever I please, as long as I take care to update the intermediary pointer—the forwarding address.
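A toy model may make the three orders concrete. In this Python sketch, which illustrates the idea rather than any real system, a dictionary named `storage` stands in for the web (location to document), and a second dictionary, `forwarding`, plays the part of the intermediary pointer. The paths and the handle string are made up.

```python
# Toy model of three orders of indirection (illustrative names and paths only).

# The "web": location -> document text.
storage = {"/old/path/bit-rot": "Bit Rot, version 1"}

# Zeroth order: you receive the document itself, a frozen copy.
copy = storage["/old/path/bit-rot"]

# First order: you receive the document's location.
address = "/old/path/bit-rot"

# Second order: you receive a handle; a forwarding table maps it to a location.
forwarding = {"handle:bit-rot": "/old/path/bit-rot"}
handle = "handle:bit-rot"


def deref_address(addr):
    """Follow one pointer; None plays the role of a 404."""
    return storage.get(addr)


def deref_handle(h):
    """Follow two pointers: handle -> location -> document."""
    return deref_address(forwarding.get(h))


# I correct the document in place: first-order readers see the fix.
storage["/old/path/bit-rot"] = "Bit Rot, version 2"
after_edit = deref_address(address)

# Then I move it, updating only the forwarding address.
storage["/new/path/bit-rot"] = storage.pop("/old/path/bit-rot")
forwarding["handle:bit-rot"] = "/new/path/bit-rot"

print(copy)                    # Bit Rot, version 1  (stale copy)
print(deref_address(address))  # None                (broken link)
print(deref_handle(handle))    # Bit Rot, version 2  (handle still works)
```

The whole burden falls on that one line updating `forwarding`; skip it and the handle breaks just as the bare address did.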

Double indirection is already a well-established technology in Internet operations. It is the basis of the Domain Name System. When you follow a link to “bit-player.org,” a nameserver looks up that string of characters and returns an IP number, such as 69.89.21.70, which specifies the real whereabouts of the page you’re now reading. I can move bit-player.org to a new host with a new IP number and you’ll still be able to find me, provided I let the world’s nameservers know the new address.

The same kind of mechanism can be made to work at a finer level of detail. In particular, Digital Object Identifiers offer an infrastructure for attaching permanent names to documents or other online resources. For example,

http://dx.doi.org/10.1511/1998.5.410

is the DOI irrevocably assigned to one of my old columns, titled “Bit Rot.” In that column, written 10 years ago, I discussed the sad tendency of digital information to go stale, to decay, to become inaccessible. Alas. As you have doubtless guessed, the DOI has lost touch with its target; following the link above will get you nothing but a “page not found” error. And fixing errant DOIs looks no easier than fixing raw, broken links. I’m afraid that “dx.doi.org/10.1511/1998.5.410” is almost as opaque as “assetid/15568.”
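For the record, the anatomy of a DOI is simple: a prefix beginning with `10.` that identifies the registrant, a slash, and a suffix assigned by the registrant. The Python sketch below (the function names are hypothetical) splits a DOI and builds a resolver link, using `dx.doi.org` as the default host to match the example above. What it cannot do is the point of the story: the resolver link is only as good as the forwarding record behind it.

```python
from urllib.parse import quote


def split_doi(doi):
    """Split a DOI into (prefix, suffix); the prefix must start with '10.'."""
    prefix, sep, suffix = doi.partition("/")  # split at the first slash only
    if not sep or not prefix.startswith("10.") or not suffix:
        raise ValueError(f"not a DOI: {doi!r}")
    return prefix, suffix


def resolver_url(doi, resolver="http://dx.doi.org/"):
    """Build a link to the DOI resolver (the handle), not to the document itself."""
    split_doi(doi)  # validate before building the link
    return resolver + quote(doi, safe="/")


print(split_doi("10.1511/1998.5.410"))     # ('10.1511', '1998.5.410')
print(resolver_url("10.1511/1998.5.410"))  # http://dx.doi.org/10.1511/1998.5.410
```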

In practice, the world seems to have settled on a different mechanism of double indirection for keeping track of stuff on the web. We don’t try to remember or record URLs; we simply go to Google and run a search. The trouble is, though, Google itself relies on the whole distributed network of links to trace and rank documents. If everyone counts on Google to know where everything is, Google will have no way of finding anything.

According to legends of yore, the Internet was designed to survive a nuclear attack. And at the hardware level, the Net is indeed extraordinarily resilient. But the superstructure of linked documents we’ve built atop that foundation is not so unshakeable. If we were to wake up one morning and find that all the links on all web pages were broken, it wouldn’t be much consolation that the underlying documents still existed. Much of their value lies in the connections between them.

Tim Berners-Lee, the first weaver of this web, offers some excellent advice: URLs should never change. If only we’d thought of that sooner.

Back in 1994 I wrote a column titled simply “The World Wide Web.” It was all so new then. My elaborate definitions and explanations seem very quaint now, like someone describing in meticulous detail how to dial a telephone or flush a toilet:

What is the Web? Is it a place? A program? A protocol? One of the <A HREF="http://info.cern.ch/hypertext/WWW/TheProject"> documents</A> in which the Web describes itself offers this assessment: “The World Wide Web (W3) is the universe of network-accessible information, an embodiment of human knowledge.” That about covers it.

For something as vast as a universe, the Web is surprisingly easy to find your way around in. It works like this. On a computer connected to the Internet you start up a program called a browser; the browser goes out over the network and retrieves a document, which we can assume for the moment is simply a page of text. Within the text are some highlighted phrases, displayed in color or underlined. When you select one of the highlighted words by clicking on it with a mouse, a new document appears, with new highlighted “links.” Clicking on one of these links takes you to still another document. Each time you follow a link, you may be visiting another network site, perhaps quite distant from your original destination as well as from your own location.

Is it any surprise that the link embedded in this passage—which I have formatted so that the URL remains visible—summons a “404 Not Found” message?

Responses from readers:

Please note: The bit-player website is no longer equipped to accept and publish comments from readers, but the author is still eager to hear from you. Send comments, criticism, compliments, or corrections to brian@bit-player.org.

Publication history

First publication: 23 June 2008

Converted to Eleventy framework: 22 April 2025

“If everyone counts on Google to know where everything is, Google will have no way of finding anything”

In a talk some years ago on the linear algebra lurking beneath PageRank, the speaker referred to this prospect as “eigendeath.” I don’t remember who gave the talk or whether the term was original with him or her. Does anyone know the etymology? Google gives no hits for it!

Stale DOIs are not the only problem; the other is that the same content may be hosted by multiple entities with different DOIs, and when one entity stops hosting its content, the DOI gives you no way of finding the other copies. iPhylo has more here, here, and here.

The problem of broken links in the web was, sadly, easily predictable, and many of the pitfalls could have been avoided had TBL et al. spent a bit more time looking at prior art in the hypertext community.

Contrary to popular belief, there was a well established and thriving hypertext community long before the Web (see for example the musings of Ted Nelson). However as Scott McNealy once quipped, the only real standard is volume, and now the web is the largest (and most broken :) hypertext collection in the world.

There was a fairly sensible proposal to introduce a DNS-style indirection using URNs, where a URN server would translate a URN to a URL transparently to the user. This would work if the target of a URN were always informed that it had been targeted, and made sure to update the URN server with its new address (as we do for DNS name resolution).

Still, for all its faults, perhaps we should just be grateful for how well the web works as a whole, rather than dwell on the occasional grief a 404 error causes.

Re: “The only real standard is volume” - I thought that was the way standards should work. First you get volume, THEN you write the standard. Everybody builds a better mousetrap, but it’s pretty much a debate until you get other people using it.

Maybe it’s the circle of (web) life that URLs die?

I saw one solution to this problem once that really blew me away: give data names, and network with the names instead of the addresses, a.k.a. content-centric networking. Van Jacobson, one of the key figures in the development of TCP/IP, is a big proponent of this idea.

It’s the mindset of functional programming applied to networking: instead of more indirection, less indirection (i.e., zero indirection).

Tim Berners-Lee is suggesting this sort of notion by calling for invariant URLs. The only problem is that the invariance is not enforced by the web, and, like filthy C programmers, we change URLs constantly as a result. The web unnecessarily ties DATA to LOCATION in the URL scheme, resulting in mutable names for data.
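Naming data by its content can be illustrated with a much simpler cousin of content-centric networking: content addressing, in which a document’s name is a cryptographic hash of its bytes. This Python sketch is a toy, not a model of Jacobson’s design; the `ContentStore` class is hypothetical. Because the name depends only on the content, any host holding a copy can serve it, and the receiver can check that the bytes match the name.

```python
import hashlib


class ContentStore:
    """Toy content-addressed store: a document's name is the SHA-256 of its bytes."""

    def __init__(self):
        self._blobs = {}

    def put(self, data: bytes) -> str:
        name = hashlib.sha256(data).hexdigest()
        self._blobs[name] = data
        return name

    def get(self, name: str) -> bytes:
        data = self._blobs[name]  # KeyError if this host has no copy
        # The receiver can verify that the bytes really match the name.
        if hashlib.sha256(data).hexdigest() != name:
            raise ValueError("content does not match its name")
        return data


# Two independent hosts (mirrors) store the same document.
mirror_a, mirror_b = ContentStore(), ContentStore()
name = mirror_a.put(b"Bit Rot")
same = mirror_b.put(b"Bit Rot") == name
print(same)  # True: same content, same name, on any host

# A revised document gets a different name; the old name keeps its old meaning.
revised = mirror_a.put(b"Bit Rot, revised")
```

The trade-off follows from the design: such names never go stale, but they are immutable, so a corrected document gets a new name and old links keep pointing at the old version.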

Here’s another clever solution: In place of the ordinary URL for a web page, use instead a “robust hyperlink”, extending the URL with a “lexical signature” consisting of (say) five well-chosen words in the page. To find the page, given the robust pointer, first try the URL. If that fails, then ask Google to find the page — which it will, if the five words were indeed chosen well, and the page is in Google’s index.

See “Robust hyperlinks cost just five words each”, by Thomas A. Phelps and Robert Wilensky, at http://www.eecs.berkeley.edu/Pubs/TechRpts/2000/CSD-00-1091.pdf. It is easy to automate both the choice of lexical signature and the dereferencing mechanism.
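A rough Python sketch of both halves of the scheme follows. It rests on several assumptions: signature words are chosen by a plain TF-IDF score against a small reference corpus (the paper’s selection heuristics differ in detail); the query parameter is named `lexical-signature` (modeled on the paper’s examples, but treat it as an assumption); and `fetch` and `search` are caller-supplied stand-ins for an HTTP client and a search engine, not any real API.

```python
import math
import re
from collections import Counter
from urllib.parse import parse_qs, urlencode, urlsplit, urlunsplit

WORD = re.compile(r"[a-z]+")


def words(text):
    return WORD.findall(text.lower())


def lexical_signature(page, corpus, k=5):
    """Pick k words frequent in `page` but rare in `corpus` (simple TF-IDF)."""
    tf = Counter(words(page))
    docs = [set(words(d)) for d in corpus]
    n = len(docs)

    def score(w):
        df = sum(1 for d in docs if w in d)
        return tf[w] * math.log((n + 1) / (df + 1))

    # Highest score first; ties broken alphabetically so the result is deterministic.
    return sorted(tf, key=lambda w: (-score(w), w))[:k]


def robust_link(url, signature):
    """Append the signature words to the URL as a query parameter."""
    sep = "&" if "?" in url else "?"
    return url + sep + urlencode({"lexical-signature": " ".join(signature)})


def dereference(link, fetch, search):
    """Try the plain URL first; if that fails, search for the signature words."""
    parts = urlsplit(link)
    query = parse_qs(parts.query)
    signature = query.pop("lexical-signature", [""])[0].split()
    plain = urlunsplit(parts._replace(query=urlencode(query, doseq=True)))
    page = fetch(plain)  # hypothetical: returns page text, or None on a 404
    if page is not None:
        return plain, page
    return search(signature)  # hypothetical: returns (url, page) or None


page = "bit rot digital decay bit rot archive"
corpus = ["the digital world", "digital cameras", "the archive of the magazine"]
sig = lexical_signature(page, corpus, k=3)
print(robust_link("http://example.org/col", sig))
# http://example.org/col?lexical-signature=bit+rot+decay
```

Note that `dereference` trusts whatever `search` returns, which is exactly the weak point a malicious link could exploit.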

@Stuart Haber: I agree that the Phelps and Wilensky lexical signatures are a very cool idea. And I’m sure that if such a digest were included in every URL or link, people would find other clever things to do with the information. Google would love it.

But I have to add that it’s not hard to think up scenarios where redirecting through a search engine would be unhelpful. Consider a phishing message with a link to a URL that looks legitimate but names a nonexistent document; the lexical signature is carefully crafted to send you off to a nefarious web site. (Phelps and Wilensky don’t discuss at all how their scheme would work in a world with malicious agents.)

By the way, one of the ISPs I use has implemented an analogous idea at a different level of the network infrastructure — not when a site returns a 404 error but when a DNS lookup fails. Instead of getting a proper error message, I get a “helpful” list of suggested alternatives, plus a gaggle of advertising. The only thing I can say in favor of this intervention is that it has made me a more careful typist when I enter a URL by hand.